Task: crawl the teacher information (name, title, introduction) from a given page of Maizi Academy (麦子学院) and save it to a database.

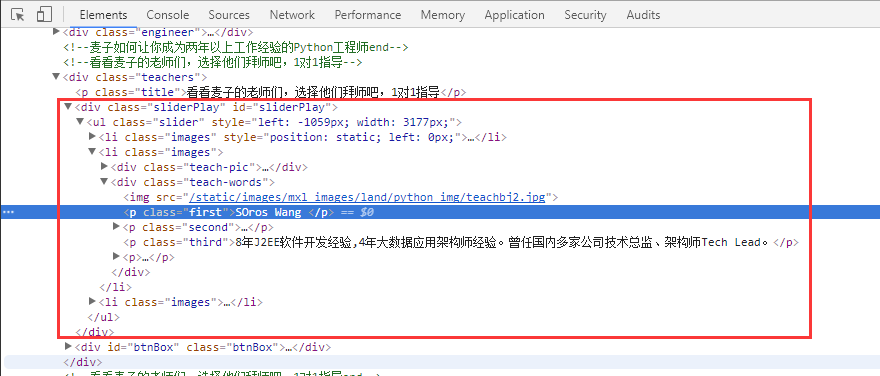

1. Page analysis:

2. Code:

mydb.py:

#!/usr/bin/env python
#coding:utf-8
'''
Database operations
'''
import MySQLdb as db

class DBHelper():
    def __init__(self,tableName):
        self.tableName=tableName
        try:
            self.conn=db.connect(
                host='localhost',
                port=3306,
                user='root',
                passwd='root',
                db='pythondb',
                charset='utf8'
            )
            self.cursor=self.conn.cursor()
        except Exception as e:
            print(e)

    def createTable(self,pros,types):
        #build "create table <name>(col1 type1,col2 type2,...)"
        sql='create table '+self.tableName+'('
        for i in range(len(pros)):
            if i==0:
                sql+=pros[i]+' '+types[i]
            else:
                sql+=','+pros[i]+' '+types[i]
        sql+=')'
        self.cursor.execute(sql)

    def insert(self,sql):
        #the change is committed later, in close()
        try:
            print(sql)
            self.cursor.execute(sql)
            print('insert successfully!')
        except Exception as e:
            print('insert failed!',e)
            self.conn.rollback()

    def delete(self,sql):
        try:
            print(sql)
            self.cursor.execute(sql)
            print('delete successfully!')
        except Exception as e:
            print('delete failed!',e)
            self.conn.rollback()

    def queryBySql(self,sql):
        #execute() returns the number of affected rows, not the rows themselves
        return self.cursor.execute(sql)

    def queryAll(self):
        self.cursor.execute('select * from '+self.tableName)
        #fetch all records
        results=self.cursor.fetchall()
        return results

    def close(self):
        self.cursor.close()
        self.conn.commit()
        self.conn.close()

if __name__=='__main__':
    print('test mydb DBHelper')
    helper=DBHelper('teacher')
    #pros=['name','title','production']
    #types=['varchar(20)','varchar(50)','varchar(200)']
    #helper.createTable(pros,types)
    sql='insert into teacher values("李希","成都莫比乌斯科技创始人","精通Windows及Linux系统平台的运维、大型分布式架构网站的部署和管理,具有15年资深IT从业经验。")'
    helper.insert(sql)
    for x in helper.queryAll():
        print(x)
    helper.close()
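The insert statements in this post are built by string concatenation, which breaks as soon as a scraped value contains a double quote. A minimal sketch of the parameterized alternative follows. To stay runnable without a MySQL server it uses the standard-library `sqlite3` with an in-memory database as a stand-in, and `insert_teacher` is a hypothetical helper that is not part of `mydb.py`. With `MySQLdb` the same idea applies, but the placeholder is `%s` instead of `?`.

```python
import sqlite3


def insert_teacher(conn, name, title, production):
    """Insert one row using placeholders instead of string concatenation."""
    # sqlite3 uses "?" placeholders; MySQLdb uses "%s" in the same position.
    conn.execute(
        "insert into teacher values (?, ?, ?)",
        (name, title, production),
    )
    conn.commit()


# In-memory database standing in for the MySQL database (assumption).
conn = sqlite3.connect(":memory:")
conn.execute(
    "create table teacher ("
    "name varchar(20), title varchar(50), production varchar(200))"
)

# A value containing a double quote would break a hand-built SQL string.
insert_teacher(conn, 'Li "Xi"', "founder", "15 years of IT experience")

rows = conn.execute("select * from teacher").fetchall()
print(rows)
```

The driver handles quoting of the values, so the calling code never has to wrap them in quotes itself.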

mymodel.py:

#!/usr/bin/env python
#coding:utf-8

class Teacher():
    def __init__(self,name,title,production):
        self._name=name
        self._title=title
        self._production=production

    def get_name(self):
        return self._name

    def set_name(self,value):
        self._name=value

    def get_title(self):
        return self._title

    def set_title(self,value):
        self._title=value

    def get_production(self):
        return self._production

    def set_production(self,value):
        self._production=value

    def __str__(self):
        return 'name ='+self.name+',title ='+self.title+',production ='+self.production

    #expose the private fields as properties
    name=property(get_name,set_name)
    title=property(get_title,set_title)
    production=property(get_production,set_production)

if __name__=='__main__':
    print('test mymodel Teacher')
    p=Teacher('a','t','p')
    print(p)
    p.name='aa'
    p.title='tt'
    p.production='pp'
    print(p)
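Since the getters and setters in `Teacher` only read and write a field, the same model could also be written more compactly. The sketch below is one possible alternative using the standard-library `dataclasses` module; the class name `TeacherRecord` is hypothetical and is not used anywhere else in this post.

```python
from dataclasses import dataclass


@dataclass
class TeacherRecord:  # hypothetical name, not part of mymodel.py
    name: str
    title: str
    production: str

    def __str__(self):
        # Same output format as Teacher.__str__ in mymodel.py.
        return ('name =' + self.name + ',title =' + self.title
                + ',production =' + self.production)


p = TeacherRecord('a', 't', 'p')
p.name = 'aa'  # plain attribute assignment, no setter needed
text = str(p)
print(text)
```

If validation is needed later, a single field can still be turned into a property without changing the calling code.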

main.py:

#!/usr/bin/env python
#coding:utf-8
import mydb,mymodel
from urllib import request
import re

class SpiderMan:
    def __init__(self,url):
        self.url=url
        self.dbhelper=mydb.DBHelper('teacher')

    def crawl(self):
        #pattern
        #[\s\S]* matches any character including newlines; \s* skips surrounding whitespace
        pattern_div=r"<div class='sliderPlay' id='sliderPlay'>[\s\S]*div id='btnBox' class='btnBox'>"
        #(.+?) is non-greedy so trailing whitespace is not captured
        pattern_name=r'<p class="first">\s*(.+?)\s*</p>'
        pattern_title=r'<p class="second">\s*(.+?)\s*</p>'
        pattern_production=r'<p class="third">\s*(.+?)\s*</p>'
        #request
        headers={
            "User-Agent":"Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/60.0.3112.90 Safari/537.36",
            'Host':'www.maiziedu.com',
            'Referer':'www.maiziedu.com'
        }
        req=request.Request(self.url,headers=headers)
        #response
        resp=request.urlopen(req)
        html=resp.read().decode('utf-8')
        #analysis
        html_div=re.search(pattern_div,html).group()
        name_list=re.findall(pattern_name,html_div)
        title_list=re.findall(pattern_title,html_div)
        production_list=re.findall(pattern_production,html_div)
        #print("name_list:")
        #print(name_list)
        #print("title_list:")
        #print(title_list)
        #print("production_list:")
        #print(production_list)
        #save
        for i in range(len(name_list)):
            name=name_list[i]
            title=title_list[i]
            production=production_list[i]
            sql='insert into '+self.dbhelper.tableName+' values('
            sql+='"'+name+'"'+','+'"'+title+'"'+','+'"'+production+'"'
            sql+=')'
            self.dbhelper.insert(sql)
        #close
        self.dbhelper.close()

if __name__=='__main__':
    url='http://www.maiziedu.com/line/python/'
    spider=SpiderMan(url)
    spider.crawl()
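The extraction step of `crawl()` can be checked offline, without a network request or a database. The sketch below applies the same kind of patterns, written with `\s` escapes, to a small HTML fragment. The fragment and the teacher entries in it are made up for illustration and only mimic the structure the patterns expect; the real page markup may differ.

```python
import re

# Made-up fragment that mimics the structure the patterns expect.
sample_html = """
<div class='sliderPlay' id='sliderPlay'>
  <p class="first"> Teacher A </p>
  <p class="second">Senior Engineer</p>
  <p class="third">Ten years of Python experience.</p>
  <p class="first">Teacher B</p>
  <p class="second">Architect</p>
  <p class="third">Works on distributed systems.</p>
</div>
<div id='btnBox' class='btnBox'></div>
"""

pattern_div = r"<div class='sliderPlay' id='sliderPlay'>[\s\S]*div id='btnBox' class='btnBox'>"
pattern_name = r'<p class="first">\s*(.+?)\s*</p>'
pattern_title = r'<p class="second">\s*(.+?)\s*</p>'
pattern_production = r'<p class="third">\s*(.+?)\s*</p>'

# Guard against a missing block instead of calling .group() on None.
match = re.search(pattern_div, sample_html)
html_div = match.group() if match else ''

names = re.findall(pattern_name, html_div)
titles = re.findall(pattern_title, html_div)
productions = re.findall(pattern_production, html_div)

# zip() pairs the three lists and stops at the shortest one,
# so a missing field cannot raise an IndexError.
teachers = list(zip(names, titles, productions))
for teacher in teachers:
    print(teacher)
```

Running the patterns against a saved copy of the page in the same way is a quick way to notice when the site's markup has changed.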

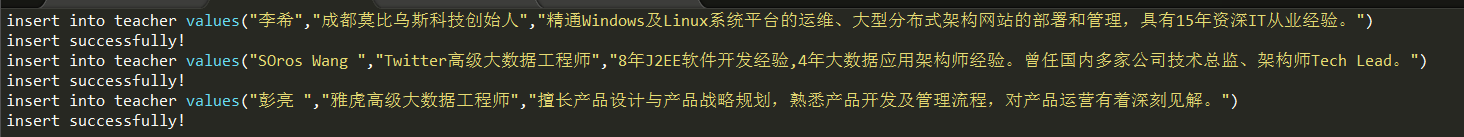

3. Result: