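view and reshape

- Both change a tensor's shape without changing the order of its elements: conceptually, the data is flattened in order and then split into the new shape. view always returns a view that shares storage with the original and needs a compatible (for example, contiguous) memory layout; reshape returns a view when it can and a copy otherwise.

- The number of elements must be the same before and after. For a tensor of shape (a, b, ..., f) reshaped to (x, y, ..., z): a·b·...·f = x·y·...·z

- reshape is PyTorch's counterpart of NumPy's reshape.

- -1 marks a dimension whose size is inferred from the total number of elements once the other dimensions are given. At most one dimension can be -1.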

import torch

a = torch.rand(2, 3, 2, 3)

a

# Output:

tensor([[[[0.4850, 0.0073, 0.8941],

[0.0208, 0.4396, 0.7841]],

[[0.0553, 0.3554, 0.0726],

[0.9669, 0.3918, 0.9356]],

[[0.6169, 0.2080, 0.1028],

[0.5816, 0.3509, 0.6983]]],

[[[0.7545, 0.8693, 0.4751],

[0.2206, 0.3384, 0.2877]],

[[0.9521, 0.6172, 0.5058],

[0.6835, 0.0624, 0.6261]],

[[0.7752, 0.3820, 0.5585],

[0.1547, 0.1420, 0.6051]]]])

# Flatten the data in order, then split it into the new shape. The element order and the total number of elements stay the same.

a.view(4, 9)

# Output:

tensor([[0.4850, 0.0073, 0.8941, 0.0208, 0.4396, 0.7841, 0.0553, 0.3554, 0.0726],

[0.9669, 0.3918, 0.9356, 0.6169, 0.2080, 0.1028, 0.5816, 0.3509, 0.6983],

[0.7545, 0.8693, 0.4751, 0.2206, 0.3384, 0.2877, 0.9521, 0.6172, 0.5058],

[0.6835, 0.0624, 0.6261, 0.7752, 0.3820, 0.5585, 0.1547, 0.1420, 0.6051]])

# reshape gives the same result as view here

a.reshape(4, 9)

# Output:

tensor([[0.4850, 0.0073, 0.8941, 0.0208, 0.4396, 0.7841, 0.0553, 0.3554, 0.0726],

[0.9669, 0.3918, 0.9356, 0.6169, 0.2080, 0.1028, 0.5816, 0.3509, 0.6983],

[0.7545, 0.8693, 0.4751, 0.2206, 0.3384, 0.2877, 0.9521, 0.6172, 0.5058],

[0.6835, 0.0624, 0.6261, 0.7752, 0.3820, 0.5585, 0.1547, 0.1420, 0.6051]])

# -1 means: whatever size is left once the other dimensions are accounted for

# Here 2*3*2*3 = 36 and 36 / 4 = 9

a.reshape(4, -1)

# Output:

tensor([[0.4850, 0.0073, 0.8941, 0.0208, 0.4396, 0.7841, 0.0553, 0.3554, 0.0726],

[0.9669, 0.3918, 0.9356, 0.6169, 0.2080, 0.1028, 0.5816, 0.3509, 0.6983],

[0.7545, 0.8693, 0.4751, 0.2206, 0.3384, 0.2877, 0.9521, 0.6172, 0.5058],

[0.6835, 0.0624, 0.6261, 0.7752, 0.3820, 0.5585, 0.1547, 0.1420, 0.6051]])

# 36 / (2*3) = 6

# So -1 stands for 6 here

a.reshape(2, 3, -1)

# Output:

tensor([[[0.4850, 0.0073, 0.8941, 0.0208, 0.4396, 0.7841],

[0.0553, 0.3554, 0.0726, 0.9669, 0.3918, 0.9356],

[0.6169, 0.2080, 0.1028, 0.5816, 0.3509, 0.6983]],

[[0.7545, 0.8693, 0.4751, 0.2206, 0.3384, 0.2877],

[0.9521, 0.6172, 0.5058, 0.6835, 0.0624, 0.6261],

[0.7752, 0.3820, 0.5585, 0.1547, 0.1420, 0.6051]]])

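# The product of the dimensions other than -1 must divide the total number of elements evenly

# For example, 36 is not divisible by 5, so this raises an error

a.reshape(5, -1)

# Output: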

---------------------------------------------------------------------------

RuntimeError Traceback (most recent call last)

<ipython-input-26-af98f63a60b8> in <module>

----> 1 a.reshape(5, -1)

RuntimeError: shape '[5, -1]' is invalid for input of size 36

# After reshaping, the tensor can be reshaped back as long as you remember the original shape

a.view(4, 9).view(2, 3, 2, 3)

# Output:

tensor([[[[0.4850, 0.0073, 0.8941],

[0.0208, 0.4396, 0.7841]],

[[0.0553, 0.3554, 0.0726],

[0.9669, 0.3918, 0.9356]],

[[0.6169, 0.2080, 0.1028],

[0.5816, 0.3509, 0.6983]]],

[[[0.7545, 0.8693, 0.4751],

[0.2206, 0.3384, 0.2877]],

[[0.9521, 0.6172, 0.5058],

[0.6835, 0.0624, 0.6261]],

[[0.7752, 0.3820, 0.5585],

[0.1547, 0.1420, 0.6051]]]])
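
The examples above all use a contiguous tensor, where view and reshape behave the same. The sketch below, using a small illustrative tensor `x` that is not part of the notes above, shows where they may differ: view shares storage and refuses a non-contiguous layout, while reshape falls back to a copy.

```python
import torch

x = torch.arange(6).reshape(2, 3)   # contiguous, values 0..5

# view never copies: the result shares storage with x.
v = x.view(3, 2)
v[0, 0] = 100
shares_storage = x[0, 0].item() == 100   # writing through v changed x

# A transposed tensor is not contiguous, so view cannot reinterpret it.
xt = x.t()
try:
    xt.view(6)
    view_failed = False
except RuntimeError:
    view_failed = True

# reshape falls back to a copy when a view is impossible.
r = xt.reshape(6)
r[0] = -1
copy_made = xt[0, 0].item() != -1        # xt is untouched by the write to r

print(shares_storage, view_failed, copy_made)
```

When it matters whether the result aliases the original, view makes that explicit; reshape is the more forgiving choice when a copy is acceptable.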

unsqueeze

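- Purpose: insert a new dimension of size 1 at the given position.

- The position (dim) argument is required; omitting it raises an error.

a = torch.rand(2, 3)

a

# Output: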

tensor([[0.3074, 0.2152, 0.0082],

[0.2831, 0.9236, 0.2705]])

# Insert a dimension at position 0.

a.unsqueeze(0)

# Negative indices are supported; this is equivalent to a.unsqueeze(-3)

# Output:

tensor([[[0.3074, 0.2152, 0.0082],

[0.2831, 0.9236, 0.2705]]])

a.unsqueeze(1)

# Equivalent to a.unsqueeze(-2)

# Output:

tensor([[[0.3074, 0.2152, 0.0082]],

[[0.2831, 0.9236, 0.2705]]])

a.unsqueeze(2)

# Equivalent to a.unsqueeze(-1)

# Output:

tensor([[[0.3074],

[0.2152],

[0.0082]],

[[0.2831],

[0.9236],

[0.2705]]])

a.unsqueeze(0).unsqueeze(-1) # Adds a dimension at position 0 and at the last position; compared with the output above, there is one more outer pair of [] brackets

# Output:

tensor([[[[0.3074],

[0.2152],

[0.0082]],

[[0.2831],

[0.9236],

[0.2705]]]])
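
As a compact check of the positive and negative positions used above, here is a minimal sketch that records the resulting shape for each valid position of a 2-D tensor. The comparison with `None` indexing is an addition for illustration, not something the notes above rely on.

```python
import torch

a = torch.rand(2, 3)

# For a 2-D tensor the valid positions are -3..2.
shapes = {d: tuple(a.unsqueeze(d).shape) for d in (0, 1, 2, -1, -2, -3)}
for d, s in shapes.items():
    print(d, s)

# Indexing with None inserts a size-1 dimension in the same way.
same_as_none = torch.equal(a.unsqueeze(1), a[:, None, :])

# The result is a view: no data is copied.
shares_memory = a.unsqueeze(0).data_ptr() == a.data_ptr()
```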

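squeeze: remove dimensions of size 1

- By default, all dimensions of size 1 are removed.

- With a dim argument, only that dimension is removed, and only if its size is 1.

a = torch.rand(2, 1, 3, 1, 2, 1)

a

# Output: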

tensor([[[[[[0.4492],

[0.6223]]],

[[[0.8522],

[0.4971]]],

[[[0.2585],

[0.6034]]]]],

[[[[[0.1904],

[0.5564]]],

[[[0.3120],

[0.2698]]],

[[[0.6620],

[0.5963]]]]]])

a.squeeze() # By default removes all size-1 dimensions: (2, 1, 3, 1, 2, 1) becomes (2, 3, 2). This returns a new tensor; a itself is unchanged.

# Output:

tensor([[[0.4492, 0.6223],

[0.8522, 0.4971],

[0.2585, 0.6034]],

[[0.1904, 0.5564],

[0.3120, 0.2698],

[0.6620, 0.5963]]])

a.squeeze(1).squeeze(2).squeeze(3) # Removes the size-1 dimensions one at a time by position; same result as above

# Output:

tensor([[[0.4492, 0.6223],

[0.8522, 0.4971],

[0.2585, 0.6034]],

[[0.1904, 0.5564],

[0.3120, 0.2698],

[0.6620, 0.5963]]])
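
Two details of squeeze are easy to miss: it returns a new tensor rather than changing `a`, and naming a dimension whose size is not 1 leaves the shape as it is. A minimal sketch with the same shape as above:

```python
import torch

a = torch.rand(2, 1, 3, 1, 2, 1)

b = a.squeeze()     # drops every size-1 dimension
c = a.squeeze(0)    # dim 0 has size 2, so nothing is removed
d = a.squeeze(1)    # only dim 1 is removed

print(tuple(a.shape))   # a keeps its original shape
print(tuple(b.shape), tuple(c.shape), tuple(d.shape))
```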

expand

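- Purpose: expand dimensions to the given sizes. The new entries repeat the existing values along that dimension; expand returns a view, so no data is actually copied.

- Only dimensions of size 1 can be expanded.

- -1 keeps a dimension's size unchanged.

a = torch.rand(2, 3, 1)

a

# Output: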

tensor([[[0.1162],

[0.6026],

[0.5674]],

[[0.7272],

[0.4351],

[0.1708]]])

# Expand the data; only dimensions of size 1 can be expanded

a.expand(2, -1, 3)

# Output:

tensor([[[0.1162, 0.1162, 0.1162],

[0.6026, 0.6026, 0.6026],

[0.5674, 0.5674, 0.5674]],

[[0.7272, 0.7272, 0.7272],

[0.4351, 0.4351, 0.4351],

[0.1708, 0.1708, 0.1708]]])
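
To see what expand does in memory, the sketch below inspects the strides of the expanded tensor and tries to expand a dimension whose size is not 1. It reuses the shape from above; the variable names are illustrative.

```python
import torch

a = torch.rand(2, 3, 1)
e = a.expand(2, -1, 3)          # shape (2, 3, 3)

# The expanded dimension has stride 0: every position reads the same element.
strides = e.stride()
shares_memory = e.data_ptr() == a.data_ptr()

# Expanding a dimension whose size is not 1 raises an error.
try:
    a.expand(4, 3, 1)
    expand_failed = False
except RuntimeError:
    expand_failed = True

print(tuple(e.shape), strides, shares_memory, expand_failed)
```

Because the expanded entries alias one another, it is usually safer to treat the result as read-only, or to call `.clone()` before writing to it in place.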

repeat

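- Each argument says how many times to tile the tensor along that dimension, so the new size is the original size times that factor.

- 1 keeps a dimension unchanged.

- Not recommended where expand is enough: repeat copies the data into newly allocated memory, so it uses more memory.

a

# Output: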

tensor([[[0.1162],

[0.6026],

[0.5674]],

[[0.7272],

[0.4351],

[0.1708]]])

a.repeat(1, 1, 3)

# Output:

tensor([[[0.1162, 0.1162, 0.1162],

[0.6026, 0.6026, 0.6026],

[0.5674, 0.5674, 0.5674]],

[[0.7272, 0.7272, 0.7272],

[0.4351, 0.4351, 0.4351],

[0.1708, 0.1708, 0.1708]]])
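
A short sketch contrasting repeat with expand on the same shape: the values agree, but repeat allocates new memory, and it can also tile dimensions whose size is not 1. The variable names are illustrative.

```python
import torch

a = torch.rand(2, 3, 1)

r = a.repeat(1, 1, 3)      # sizes multiply: (2*1, 3*1, 1*3) = (2, 3, 3)
e = a.expand(2, 3, 3)      # same values, but a view with no copy

same_values = torch.equal(r, e)
r_is_copy = r.data_ptr() != a.data_ptr()

# repeat can tile dimensions of any size, which expand cannot do.
t = a.repeat(2, 2, 1)      # (2*2, 3*2, 1*1) = (4, 6, 1)

print(tuple(r.shape), same_values, r_is_copy, tuple(t.shape))
```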

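Matrix transpose

- Swaps rows and columns.

- .t() only supports tensors with at most 2 dimensions (0-D and 1-D tensors are returned as they are).

a = torch.rand(5, 2)

a

# Output: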

tensor([[0.9841, 0.2180],

[0.5082, 0.8553],

[0.5250, 0.7228],

[0.9064, 0.0074],

[0.2752, 0.3939]])

a.t()

# Output:

tensor([[0.9841, 0.5082, 0.5250, 0.9064, 0.2752],

[0.2180, 0.8553, 0.7228, 0.0074, 0.3939]])
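
The sketch below checks the 2-D restriction directly and shows that, for a matrix, `.t()` matches `transpose(0, 1)` and returns a view. The 1-D and 3-D tensors are made up for the illustration.

```python
import torch

a = torch.rand(5, 2)
at = a.t()

same_as_transpose = torch.equal(at, a.transpose(0, 1))
shares_memory = at.data_ptr() == a.data_ptr()   # a view, not a copy

v = torch.rand(4)
v_unchanged = v.t().shape == v.shape            # a 1-D tensor is returned as is

try:
    torch.rand(2, 3, 4).t()                     # more than 2 dimensions
    t_failed = False
except RuntimeError:
    t_failed = True

print(tuple(at.shape), same_as_transpose, shares_memory, v_unchanged, t_failed)
```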

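transpose: swap two dimensions

- Equivalent to transposing the data over the two selected dimensions, that is, swapping their roles the way rows and columns are swapped in a matrix.

- It can also be pictured as rotating each row 90 degrees clockwise into a column and appending the columns one after another on the right.

a = torch.rand(2, 3, 4)

a

# Output: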

tensor([[[0.1696, 0.6733, 0.7269, 0.1066],

[0.5433, 0.2820, 0.0214, 0.9471],

[0.6922, 0.8220, 0.8422, 0.8682]],

[[0.6121, 0.8133, 0.6502, 0.4529],

[0.9810, 0.9233, 0.5279, 0.2193],

[0.1775, 0.8487, 0.4938, 0.3994]]])

a.transpose(0, 2)

# Output:

tensor([[[0.1696, 0.6121],

[0.5433, 0.9810],

[0.6922, 0.1775]],

[[0.6733, 0.8133],

[0.2820, 0.9233],

[0.8220, 0.8487]],

[[0.7269, 0.6502],

[0.0214, 0.5279],

[0.8422, 0.4938]],

[[0.1066, 0.4529],

[0.9471, 0.2193],

[0.8682, 0.3994]]])

# transpose returns a non-contiguous view, so contiguous() is needed before view

a.transpose(0, 2).contiguous().view(4, 6)

# Output:

tensor([[0.1696, 0.6121, 0.5433, 0.9810, 0.6922, 0.1775],

[0.6733, 0.8133, 0.2820, 0.9233, 0.8220, 0.8487],

[0.7269, 0.6502, 0.0214, 0.5279, 0.8422, 0.4938],

[0.1066, 0.4529, 0.9471, 0.2193, 0.8682, 0.3994]])
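
The `contiguous()` call in the last example matters: without it, view raises an error. A minimal sketch, using `torch.arange` instead of random data so the element mapping is easy to check:

```python
import torch

a = torch.arange(24).reshape(2, 3, 4)
t = a.transpose(0, 2)               # shape (4, 3, 2), still a view of a

# Element mapping: t[i, j, k] is a[k, j, i].
mapping_ok = t[3, 1, 0].item() == a[0, 1, 3].item()

contig_before = t.is_contiguous()   # the strides no longer match row-major order
try:
    t.view(4, 6)
    view_failed = False
except RuntimeError:
    view_failed = True

flat = t.contiguous().view(4, 6)    # copy into row-major order, then view
same_as_reshape = torch.equal(flat, t.reshape(4, 6))

print(mapping_ok, contig_before, view_failed, same_as_reshape)
```

`t.reshape(4, 6)` does the copy for you when one is needed, which is why it gives the same result here.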

permute

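Differences from transpose:

- transpose can only swap two dimensions at a time.

- permute can reorder several dimensions at once; in effect it performs a sequence of transposes.

- Its arguments are the indices of the original dimensions, listed in the desired new order.

a = torch.rand(2, 3, 4)

a

# Output: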

tensor([[[0.7855, 0.6239, 0.3785, 0.8138],

[0.9595, 0.5210, 0.3816, 0.1612],

[0.0152, 0.9714, 0.4245, 0.4754]],

[[0.1475, 0.4961, 0.0812, 0.3769],

[0.4279, 0.0595, 0.0717, 0.9871],

[0.9480, 0.3525, 0.3076, 0.0367]]])

a.permute(1, 2, 0)

# Output:

tensor([[[0.7855, 0.1475],

[0.6239, 0.4961],

[0.3785, 0.0812],

[0.8138, 0.3769]],

[[0.9595, 0.4279],

[0.5210, 0.0595],

[0.3816, 0.0717],

[0.1612, 0.9871]],

[[0.0152, 0.9480],

[0.9714, 0.3525],

[0.4245, 0.3076],

[0.4754, 0.0367]]])

# Two transpose calls give the same result as the permute above

a.transpose(0, 1).transpose(1, 2)

# Output:

tensor([[[0.7855, 0.1475],

[0.6239, 0.4961],

[0.3785, 0.0812],

[0.8138, 0.3769]],

[[0.9595, 0.4279],

[0.5210, 0.0595],

[0.3816, 0.0717],

[0.1612, 0.9871]],

[[0.0152, 0.9480],

[0.9714, 0.3525],

[0.4245, 0.3076],

[0.4754, 0.0367]]])
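
A common use of permute is changing the layout of an image batch. The sketch below assumes a hypothetical batch stored as (N, H, W, C) and reorders it to (N, C, H, W); the sizes are arbitrary.

```python
import torch

# Hypothetical image batch in (N, H, W, C) layout.
nhwc = torch.rand(8, 32, 48, 3)
nchw = nhwc.permute(0, 3, 1, 2)     # -> (8, 3, 32, 48)

# Output dimension i takes input dimension dims[i], so
# nchw[n, c, h, w] is nhwc[n, h, w, c].
elem_ok = bool(nchw[1, 2, 5, 7] == nhwc[1, 5, 7, 2])

# The same reordering written as a chain of transposes.
chained = nhwc.transpose(1, 3).transpose(2, 3)
same = torch.equal(nchw, chained)

print(tuple(nchw.shape), elem_ok, same)
```

Like transpose, permute returns a non-contiguous view, so a later view call would need `contiguous()` first.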

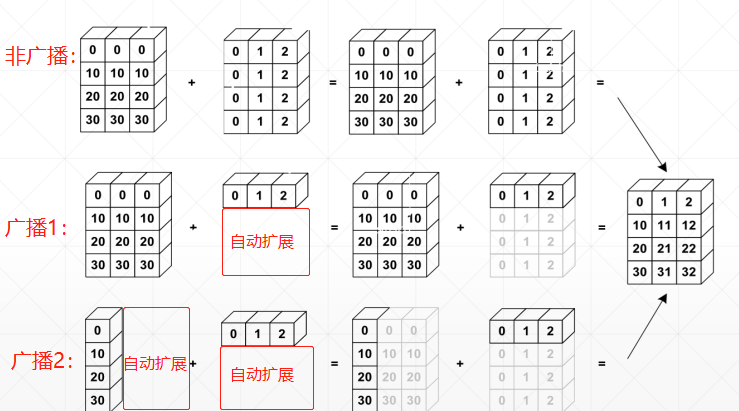

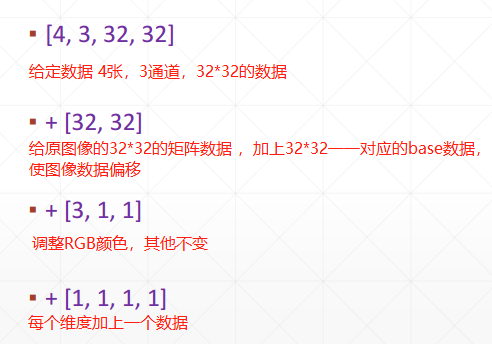

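broadcast (automatic expansion):

- Definition: when two tensors of different shapes are added, the one with the smaller shape is automatically expanded to match the larger one.

- Characteristics: dimensions are expanded automatically and without copying data, which saves memory.

- Precondition: shapes are compared starting from the last dimension. In each position, the smaller tensor's dimension must be missing, have size 1, or equal the other tensor's size; otherwise an error is raised.

a = torch.rand(3, 4, 2)

a

# Output: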

tensor([[[0.7220, 0.7834],

[0.4305, 0.6128],

[0.6032, 0.5751],

[0.2304, 0.3003]],

[[0.1044, 0.1071],

[0.1373, 0.4874],

[0.3963, 0.5231],

[0.1851, 0.8962]],

[[0.0677, 0.0587],

[0.7268, 0.8807],

[0.5445, 0.2110],

[0.8755, 0.8577]]])

b = torch.rand(1, 4, 2) # b has size 1 in its first dimension; a has size 3

b

# Output:

tensor([[[0.6676, 0.5702],

[0.3334, 0.8553],

[0.5392, 0.0754],

[0.9488, 0.7814]]])

# During the addition, b's first dimension is automatically broadcast to size 3, and the result is then added to a

a + b

# Output:

tensor([[[1.3896, 1.3536],

[0.7639, 1.4681],

[1.1424, 0.6505],

[1.1792, 1.0817]],

[[0.7720, 0.6773],

[0.4707, 1.3427],

[0.9355, 0.5985],

[1.1339, 1.6776]],

[[0.7353, 0.6289],

[1.0602, 1.7360],

[1.0837, 0.2864],

[1.8243, 1.6391]]])
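
The precondition above can be checked without doing any arithmetic. The sketch below uses `torch.broadcast_shapes` (available in recent PyTorch releases) to compute the result shape, confirms that `a + b` matches an explicit expand, and shows an incompatible pair. The tensor `c` and the (3, 2) tensor are made up for the illustration.

```python
import torch

a = torch.rand(3, 4, 2)
b = torch.rand(1, 4, 2)
c = torch.rand(2)            # missing leading dimensions are treated as size 1

# Shapes are aligned from the last dimension.
shape_ab = tuple(torch.broadcast_shapes(a.shape, b.shape))
shape_ac = tuple(torch.broadcast_shapes(a.shape, c.shape))

# Broadcasting gives the same result as expanding b by hand.
same = torch.equal(a + b, a + b.expand(3, 4, 2))

# (3, 4, 2) vs (3, 2): the middle sizes 4 and 3 differ and neither is 1.
try:
    a + torch.rand(3, 2)
    add_failed = False
except RuntimeError:
    add_failed = True

print(shape_ab, shape_ac, same, add_failed)
```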