A First Look at the GOICE Project

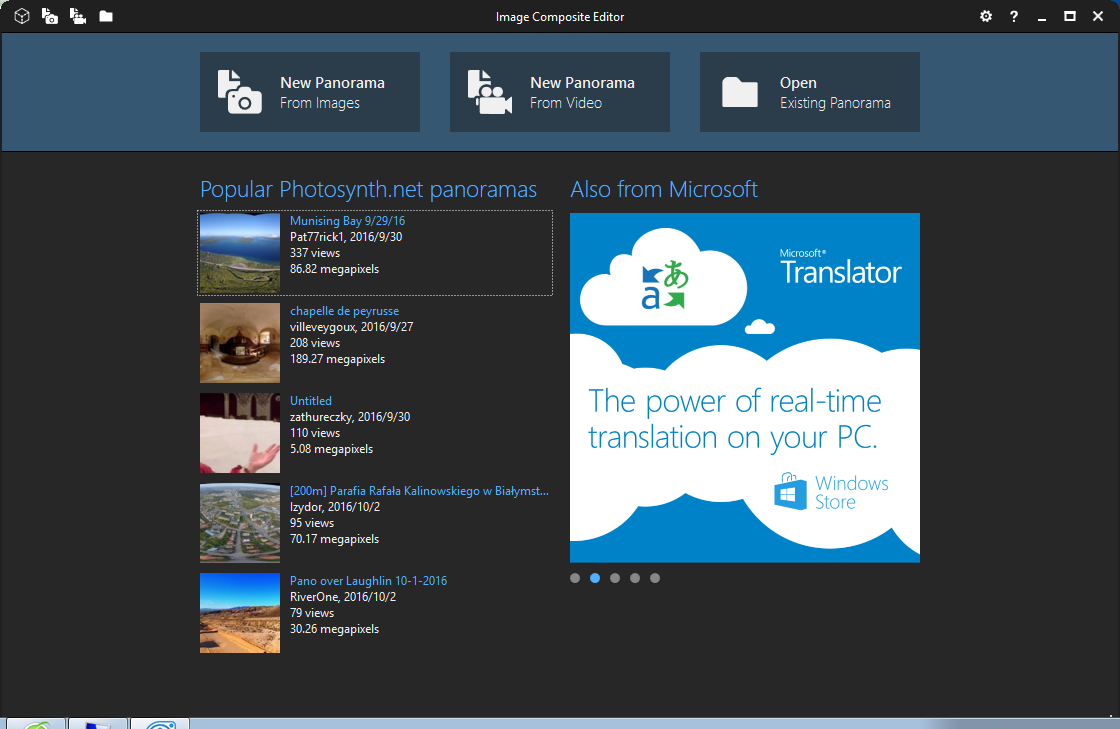

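Among the image-stitching software available on the market, Microsoft's ICE (Image Composite Editor) gives the best results and robustness, and its interface is attractive as well. There is a lot to learn from it. Although ICE is not open source, much of the actual stitching work can still be implemented with publicly available material.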

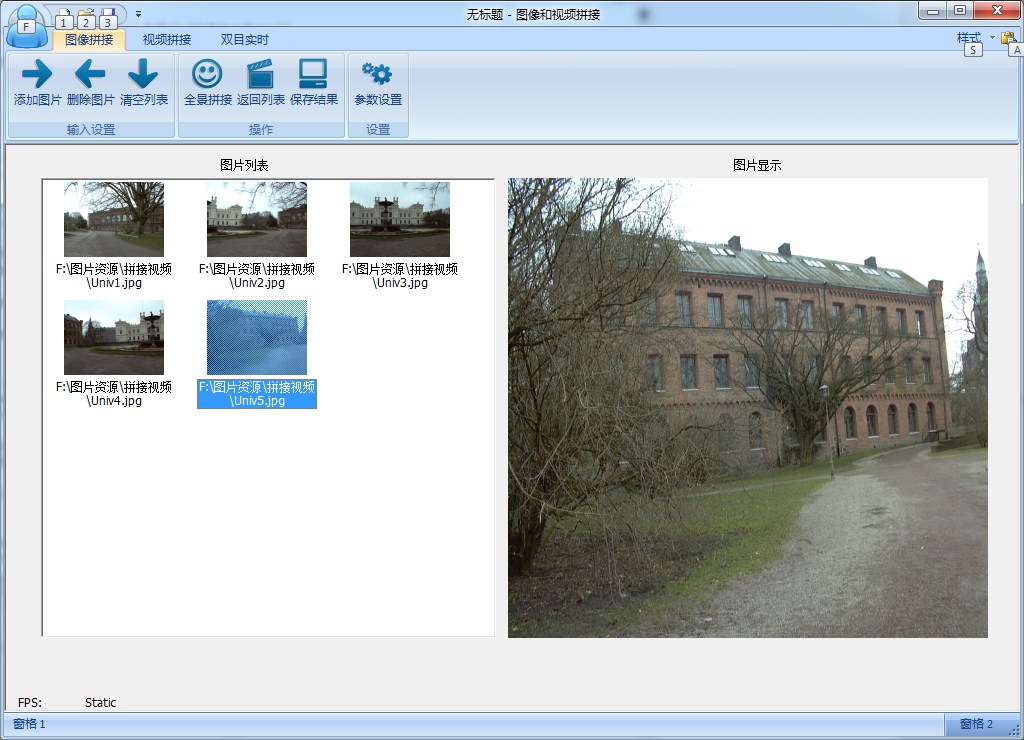

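Working from the limited resources available, mainly by modifying and wrapping the stitching_detailed sample that ships with OpenCV and building the interface on Ribbon, I have tried to implement the GOICE project. It stitches panoramic pictures and horizontally panned videos; if time permits, the next step is to port real-time binocular stitching over from my earlier code.

Below is a brief walk-through of some technical points. Criticism, corrections, and collaboration are all welcome!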

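I. Re-wrapping the existing algorithm

OpenCV's original algorithm lives mainly in the Stitching Pipeline, whose structure is fairly complex; see the OpenCV reference manual (refman) for details.

After refactoring, the algorithm looks like this: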

    // Working variables
    Ptr<FeaturesFinder> finder;
    Mat full_img, img;
    int num_images = static_cast<int>(m_ImageList.size());
    vector<ImageFeatures> features(num_images);
    vector<Mat> images(num_images);
    vector<cv::Size> full_img_sizes(num_images);
    double seam_work_aspect = 1;
    vector<MatchesInfo> pairwise_matches;
    BestOf2NearestMatcher matcher(try_gpu, match_conf);
    vector<int> indices;
    vector<Mat> img_subset;
    vector<cv::Size> full_img_sizes_subset;
    HomographyBasedEstimator estimator;
    vector<CameraParams> cameras;
    vector<cv::Point> corners(num_images);
    vector<Mat> masks_warped(num_images);
    vector<Mat> images_warped(num_images);
    vector<cv::Size> sizes(num_images);
    vector<Mat> masks(num_images);
    Mat img_warped, img_warped_s;
    Mat dilated_mask, seam_mask, mask, mask_warped;
    Ptr<Blender> blender;
    double compose_work_aspect = 1;

    // Start stitching: choose the feature detector
    if (features_type == "surf")
        finder = new SurfFeaturesFinder();
    else
        finder = new OrbFeaturesFinder();

    // Find feature points
    m_progress.SetPos(20);
    for (int i = 0; i < num_images; ++i)
    {
        full_img = m_ImageList[i].clone();
        full_img_sizes[i] = full_img.size(); // record the full-resolution size
        if (full_img.empty())
        {
            MessageBox("Failed to read an image. Please check it and try again!");
            return;
        }
        if (work_megapix < 0)
        {
            img = full_img;
            work_scale = 1;
            is_work_scale_set = true;
        }
        else
        {
            if (!is_work_scale_set)
            {
                work_scale = min(1.0, sqrt(work_megapix * 1e6 / full_img.size().area()));
                is_work_scale_set = true;
            }
            resize(full_img, img, cv::Size(), work_scale, work_scale);
        }
        if (!is_seam_scale_set)
        {
            seam_scale = min(1.0, sqrt(seam_megapix * 1e6 / full_img.size().area()));
            seam_work_aspect = seam_scale / work_scale;
            is_seam_scale_set = true;
        }
        (*finder)(img, features[i]);
        features[i].img_idx = i;
        resize(full_img, img, cv::Size(), seam_scale, seam_scale);
        images[i] = img.clone();
    }
    finder->collectGarbage();
    full_img.release();
    img.release();

    // Match features pairwise
    m_progress.SetPos(30);
    matcher(features, pairwise_matches);
    matcher.collectGarbage();
    indices = leaveBiggestComponent(features, pairwise_matches, conf_thresh);
    for (size_t i = 0; i < indices.size(); ++i)
    {
        img_subset.push_back(images[indices[i]]);
        full_img_sizes_subset.push_back(full_img_sizes[indices[i]]);
    }
    m_progress.SetPos(40);
    images = img_subset;

    // Check whether enough images remain
    num_images = static_cast<int>(img_subset.size());
    if (num_images < 2)
    {
        MessageBox("Too few image features. Try adding more images!");
        return;
    }

    // Initial camera estimation
    estimator(features, pairwise_matches, cameras);
    for (size_t i = 0; i < cameras.size(); ++i)
    {
        Mat R;
        cameras[i].R.convertTo(R, CV_32F);
        cameras[i].R = R;
        //LOGLN("Initial intrinsics #" << indices[i]+1 << ": " << cameras[i].K());
    }

    // Alignment (bundle adjustment)
    m_progress.SetPos(50);
    Ptr<detail::BundleAdjusterBase> adjuster;
    if (ba_cost_func == "reproj")
        adjuster = new detail::BundleAdjusterReproj();
    else
        adjuster = new detail::BundleAdjusterRay();
    adjuster->setConfThresh(conf_thresh);
    Mat_<uchar> refine_mask = Mat::zeros(3, 3, CV_8U);
    if (ba_refine_mask[0] == 'x') refine_mask(0,0) = 1;
    if (ba_refine_mask[1] == 'x') refine_mask(0,1) = 1;
    if (ba_refine_mask[2] == 'x') refine_mask(0,2) = 1;
    if (ba_refine_mask[3] == 'x') refine_mask(1,1) = 1;
    if (ba_refine_mask[4] == 'x') refine_mask(1,2) = 1;
    adjuster->setRefinementMask(refine_mask);
    (*adjuster)(features, pairwise_matches, cameras);

    // Find median focal length
    vector<double> focals;
    for (size_t i = 0; i < cameras.size(); ++i)
        focals.push_back(cameras[i].focal);
    sort(focals.begin(), focals.end());
    float warped_image_scale;
    if (focals.size() % 2 == 1)
        warped_image_scale = static_cast<float>(focals[focals.size() / 2]);
    else
        warped_image_scale = static_cast<float>(focals[focals.size() / 2 - 1] + focals[focals.size() / 2]) * 0.5f;

    // Wave correction
    m_progress.SetPos(60);
    if (do_wave_correct)
    {
        vector<Mat> rmats;
        for (size_t i = 0; i < cameras.size(); ++i)
            rmats.push_back(cameras[i].R);
        waveCorrect(rmats, wave_correct);
        for (size_t i = 0; i < cameras.size(); ++i)
            cameras[i].R = rmats[i];
    }

    // Warp images and masks at seam scale
    m_progress.SetPos(70);
    // Prepare image masks
    for (int i = 0; i < num_images; ++i)
    {
        masks[i].create(images[i].size(), CV_8U);
        masks[i].setTo(Scalar::all(255));
    }
    Ptr<WarperCreator> warper_creator;
    if (warp_type == "plane") warper_creator = new cv::PlaneWarper();
    else if (warp_type == "cylindrical") warper_creator = new cv::CylindricalWarper();
    else if (warp_type == "spherical") warper_creator = new cv::SphericalWarper();
    else if (warp_type == "fisheye") warper_creator = new cv::FisheyeWarper();
    else if (warp_type == "stereographic") warper_creator = new cv::StereographicWarper();
    else if (warp_type == "compressedPlaneA2B1") warper_creator = new cv::CompressedRectilinearWarper(2, 1);
    else if (warp_type == "compressedPlaneA1.5B1") warper_creator = new cv::CompressedRectilinearWarper(1.5, 1);
    else if (warp_type == "compressedPlanePortraitA2B1") warper_creator = new cv::CompressedRectilinearPortraitWarper(2, 1);
    else if (warp_type == "compressedPlanePortraitA1.5B1") warper_creator = new cv::CompressedRectilinearPortraitWarper(1.5, 1);
    else if (warp_type == "paniniA2B1") warper_creator = new cv::PaniniWarper(2, 1);
    else if (warp_type == "paniniA1.5B1") warper_creator = new cv::PaniniWarper(1.5, 1);
    else if (warp_type == "paniniPortraitA2B1") warper_creator = new cv::PaniniPortraitWarper(2, 1);
    else if (warp_type == "paniniPortraitA1.5B1") warper_creator = new cv::PaniniPortraitWarper(1.5, 1);
    else if (warp_type == "mercator") warper_creator = new cv::MercatorWarper();
    else if (warp_type == "transverseMercator") warper_creator = new cv::TransverseMercatorWarper();
    if (warper_creator.empty())
    {
        cout << "Can't create the following warper '" << warp_type << "' ";
        return;
    }
    Ptr<RotationWarper> warper = warper_creator->create(static_cast<float>(warped_image_scale * seam_work_aspect));
    for (int i = 0; i < num_images; ++i)
    {
        Mat_<float> K;
        cameras[i].K().convertTo(K, CV_32F);
        float swa = (float)seam_work_aspect;
        K(0,0) *= swa; K(0,2) *= swa;
        K(1,1) *= swa; K(1,2) *= swa;
        corners[i] = warper->warp(images[i], K, cameras[i].R, INTER_LINEAR, BORDER_REFLECT, images_warped[i]);
        sizes[i] = images_warped[i].size();
        warper->warp(masks[i], K, cameras[i].R, INTER_NEAREST, BORDER_CONSTANT, masks_warped[i]);
    }
    vector<Mat> images_warped_f(num_images);
    for (int i = 0; i < num_images; ++i)
        images_warped[i].convertTo(images_warped_f[i], CV_32F);
    Ptr<ExposureCompensator> compensator = ExposureCompensator::createDefault(expos_comp_type);
    compensator->feed(corners, images_warped, masks_warped);

    // Choose the seam finder
    m_progress.SetPos(80);
    Ptr<SeamFinder> seam_finder;
    if (seam_find_type == "no")
        seam_finder = new detail::NoSeamFinder();
    else if (seam_find_type == "voronoi")
        seam_finder = new detail::VoronoiSeamFinder();
    else if (seam_find_type == "gc_color")
        seam_finder = new detail::GraphCutSeamFinder(GraphCutSeamFinderBase::COST_COLOR);
    else if (seam_find_type == "gc_colorgrad")
        seam_finder = new detail::GraphCutSeamFinder(GraphCutSeamFinderBase::COST_COLOR_GRAD);
    else if (seam_find_type == "dp_color")
        seam_finder = new detail::DpSeamFinder(DpSeamFinder::COLOR);
    else if (seam_find_type == "dp_colorgrad")
        seam_finder = new detail::DpSeamFinder(DpSeamFinder::COLOR_GRAD);
    if (seam_finder.empty())
    {
        MessageBox("Unable to find seams for the images");
        return;
    }

    // Find the seams, then compose the final result
    m_progress.SetPos(90);
    seam_finder->find(images_warped_f, corners, masks_warped);
    // Release unused memory
    images.clear();
    images_warped.clear();
    images_warped_f.clear();
    masks.clear();
    for (int img_idx = 0; img_idx < num_images; ++img_idx)
    {
        // Read image and resize it if necessary.
        // Index through `indices` so that images dropped by
        // leaveBiggestComponent are skipped.
        full_img = m_ImageList[indices[img_idx]];
        if (!is_compose_scale_set)
        {
            if (compose_megapix > 0)
                compose_scale = min(1.0, sqrt(compose_megapix * 1e6 / full_img.size().area()));
            is_compose_scale_set = true;
            // Compute relative scales
            compose_work_aspect = compose_scale / work_scale;
            // Update warped image scale
            warped_image_scale *= static_cast<float>(compose_work_aspect);
            warper = warper_creator->create(warped_image_scale);
            // Update corners and sizes
            for (int i = 0; i < num_images; ++i)
            {
                // Update intrinsics
                cameras[i].focal *= compose_work_aspect;
                cameras[i].ppx *= compose_work_aspect;
                cameras[i].ppy *= compose_work_aspect;
                // Update corner and size (sizes of the retained images only)
                cv::Size sz = full_img_sizes_subset[i];
                if (std::abs(compose_scale - 1) > 1e-1)
                {
                    sz.width = cvRound(full_img_sizes_subset[i].width * compose_scale);
                    sz.height = cvRound(full_img_sizes_subset[i].height * compose_scale);
                }
                Mat K;
                cameras[i].K().convertTo(K, CV_32F);
                cv::Rect roi = warper->warpRoi(sz, K, cameras[i].R);
                corners[i] = roi.tl();
                sizes[i] = roi.size();
            }
        }
        if (abs(compose_scale - 1) > 1e-1)
            resize(full_img, img, cv::Size(), compose_scale, compose_scale);
        else
            img = full_img;
        full_img.release();
        cv::Size img_size = img.size();
        Mat K;
        cameras[img_idx].K().convertTo(K, CV_32F);
        // Warp the current image
        warper->warp(img, K, cameras[img_idx].R, INTER_LINEAR, BORDER_REFLECT, img_warped);
        // Warp the current image mask
        mask.create(img_size, CV_8U);
        mask.setTo(Scalar::all(255));
        warper->warp(mask, K, cameras[img_idx].R, INTER_NEAREST, BORDER_CONSTANT, mask_warped);
        // Compensate exposure
        compensator->apply(img_idx, corners[img_idx], img_warped, mask_warped);
        img_warped.convertTo(img_warped_s, CV_16S);
        img_warped.release();
        img.release();
        mask.release();
        dilate(masks_warped[img_idx], dilated_mask, Mat());
        resize(dilated_mask, seam_mask, mask_warped.size());
        mask_warped = seam_mask & mask_warped;
        if (blender.empty())
        {
            blender = Blender::createDefault(blend_type, try_gpu);
            cv::Size dst_sz = resultRoi(corners, sizes).size();
            float blend_width = sqrt(static_cast<float>(dst_sz.area())) * blend_strength / 100.f;
            if (blend_width < 1.f)
                blender = Blender::createDefault(Blender::NO, try_gpu);
            else if (blend_type == Blender::MULTI_BAND)
            {
                MultiBandBlender* mb = dynamic_cast<MultiBandBlender*>(static_cast<Blender*>(blender));
                mb->setNumBands(static_cast<int>(ceil(log(blend_width)/log(2.)) - 1.));
                LOGLN("Multi-band blender, number of bands: " << mb->numBands());
            }
            else if (blend_type == Blender::FEATHER)
            {
                FeatherBlender* fb = dynamic_cast<FeatherBlender*>(static_cast<Blender*>(blender));
                fb->setSharpness(1.f/blend_width);
                LOGLN("Feather blender, sharpness: " << fb->sharpness());
            }
            blender->prepare(corners, sizes);
        }
        // Blend the current image
        blender->feed(img_warped_s, mask_warped, corners[img_idx]);
    }
    Mat result, result_mask;
    blender->blend(result, result_mask);
    m_progress.SetPos(100);
    AfxMessageBox("Stitching succeeded!");
    m_progress.ShowWindow(false);
    m_progress.SetPos(0);
    // Convert the format for display
    result.convertTo(result, CV_8UC3);
    showImage(result, IDC_PBDST);
    // Save the result
    m_matResult = result.clone();
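Two of the numeric steps in the listing above are easy to lose in the surrounding code: the megapixel-based scale factors (work_scale, seam_scale, compose_scale) and the median focal length used as warped_image_scale. The following is a minimal stand-alone sketch of both, using only the standard library. The function names scaleForMegapixels and medianFocal are hypothetical and do not exist in OpenCV, and treating every non-positive budget as "keep full size" is an assumption that merges the listing's `work_megapix < 0` and `compose_megapix > 0` checks.

```cpp
#include <algorithm>
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// Hypothetical helper: scale factor that shrinks an image of `pixelArea`
// pixels to roughly `megapixels` MP. It never upscales (result <= 1.0).
// Assumption: a non-positive budget means "keep the full size".
inline double scaleForMegapixels(double megapixels, double pixelArea)
{
    assert(pixelArea > 0.0);
    if (megapixels <= 0.0)
        return 1.0;
    return std::min(1.0, std::sqrt(megapixels * 1e6 / pixelArea));
}

// Hypothetical helper: median of the estimated focal lengths. For an even
// count it returns the mean of the two middle values, as the listing does.
inline double medianFocal(std::vector<double> focals)
{
    assert(!focals.empty());
    std::sort(focals.begin(), focals.end());
    const std::size_t n = focals.size();
    if (n % 2 == 1)
        return focals[n / 2];
    return (focals[n / 2 - 1] + focals[n / 2]) * 0.5;
}
```

With these, seam_work_aspect would simply be the ratio of two scaleForMegapixels results. The median is used rather than the mean presumably because a single badly estimated camera then has little effect on the overall warp scale.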

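The structure of the code is essentially unchanged, but I made a few changes:

1. The original algorithm both reads file names and stores Mat variables; here I unified this by storing everything in a vector<Mat>;

2. The LOGLN output is shown through message boxes instead, with error handling added;

3. Comments were added where appropriate, and the progress bar is updated at suitable points.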
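Changes 2 and 3 can be kept separate from the stitching logic itself. The sketch below is one possible arrangement, not the code GOICE actually uses: the names StitchStage, progressFor, enterStage, StitchStatus and checkEnoughImages are all hypothetical. The percentages mirror the m_progress.SetPos calls in the listing, and the callback stands in for the MFC progress control.

```cpp
#include <cassert>
#include <functional>
#include <string>

// Hypothetical list of pipeline stages, in the order the listing runs them.
enum class StitchStage {
    FindFeatures, MatchFeatures, SelectSubset, BundleAdjust,
    WaveCorrect, WarpImages, FindSeams, Compose, Done
};

// Progress percentage for each stage (same values as the SetPos calls).
inline int progressFor(StitchStage stage)
{
    switch (stage) {
    case StitchStage::FindFeatures:  return 20;
    case StitchStage::MatchFeatures: return 30;
    case StitchStage::SelectSubset:  return 40;
    case StitchStage::BundleAdjust:  return 50;
    case StitchStage::WaveCorrect:   return 60;
    case StitchStage::WarpImages:    return 70;
    case StitchStage::FindSeams:     return 80;
    case StitchStage::Compose:       return 90;
    case StitchStage::Done:          return 100;
    }
    assert(false && "unknown stage");
    return 0;
}

// The sink would wrap m_progress.SetPos in the real application.
using ProgressSink = std::function<void(int)>;

inline void enterStage(StitchStage stage, const ProgressSink& sink)
{
    if (sink)
        sink(progressFor(stage));
}

// Result of a check; `message` is what a message box would display.
struct StitchStatus {
    bool ok;
    std::string message;
};

// Mirrors the "num_images < 2" check after leaveBiggestComponent.
inline StitchStatus checkEnoughImages(int numImages)
{
    if (numImages < 2)
        return {false, "Too few image features. Try adding more images!"};
    return {true, ""};
}
```

In the view class, the sink could be a lambda that calls m_progress.SetPos, and a failed StitchStatus could be passed to MessageBox before returning, which keeps the UI calls out of the algorithm code.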

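II. Main interface techniques

The main interface uses Ribbon, with icons generated in IconWorkshop. How to generate such images is covered in a dedicated post on my blog.

For the content area, I used a thumbnail display based on listctrl; see my other blog post, "Image Processing Interfaces: Displaying Thumbnails".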

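III. Handling video stitching

Compared with image stitching, this version adds stitching for "horizontal video". The principle is quite simple (only the easy case is considered here): for a carefully shot video, take one frame at regular intervals, stitch those frames together, and the result is a panorama of the video.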

    void CMFCApplication1View::OnButtonOpenmov()
    {
        CString pathName;
        CString szFilters = _T("*(*.*)|*.*|avi(*.avi)|*.avi|mp4(*.mp4)|*.mp4||");
        CFileDialog dlg(TRUE, NULL, NULL, NULL, szFilters, this);
        VideoCapture capture;
        Mat frame;
        int iFram = 0; // index of the current frame

        if (dlg.DoModal() == IDOK)
        {
            // Get the selected path
            pathName = dlg.GetPathName();

            // Set up the window
            m_ListThumbnail.ShowWindow(false);
            m_imagerect.ShowWindow(false);
            m_imagedst.ShowWindow(true);
            m_progress.ShowWindow(false);
            m_msg.ShowWindow(false);

            // Open the video and extract frames
            capture.open((string)pathName);
            if (!capture.isOpened())
            {
                MessageBox("Failed to open the video!");
                return;
            }
            m_VectorMovImageNames.clear();
            m_MovImageList.clear();
            while (capture.read(frame))
            {
                // Keep one frame out of every 50
                if (0 == iFram % 50)
                {
                    m_MovImageList.push_back(frame.clone());
                }
                showImage(frame, IDC_PBDST);
                iFram = iFram + 1;
            }
        }
    }
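The handler above hard-codes a step of 50 frames. The sampling rule itself can be isolated and, if desired, derived from the frame rate so that videos recorded at different rates are sampled at a similar time interval. The sketch below uses only the standard library; frameStep and sampledFrameIndices are hypothetical names, and choosing the step from a time interval is an assumption rather than something the handler does.

```cpp
#include <cassert>
#include <cmath>
#include <vector>

// Hypothetical helper: number of frames between two samples for a given
// frame rate and a desired interval in seconds. Always at least 1.
inline int frameStep(double fps, double secondsBetweenSamples)
{
    assert(fps > 0.0 && secondsBetweenSamples > 0.0);
    const int step = static_cast<int>(std::lround(fps * secondsBetweenSamples));
    return step < 1 ? 1 : step;
}

// Hypothetical helper: indices of the frames that the rule
// "keep a frame when index % step == 0" retains: 0, step, 2*step, ...
inline std::vector<int> sampledFrameIndices(int totalFrames, int step)
{
    assert(totalFrames >= 0 && step >= 1);
    std::vector<int> kept;
    for (int i = 0; i < totalFrames; i += step)
        kept.push_back(i);
    return kept;
}
```

For a 25 fps video sampled every 2 seconds, frameStep gives the same step of 50 used in the handler. Consecutive samples still need enough overlap for feature matching, so a slow pan tolerates a larger step than a fast one.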

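IV. Reflections and summary

1) Although the OpenCV algorithm is now integrated, its principles are still intricate; the next step is to understand it thoroughly by studying more complex problems;

2) I am now fairly familiar with designing programs with Ribbon. Real mastery means knowing which problems a tool solves well, knowing which it handles poorly, and being able to implement things quickly.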