//顶帽去光差,radius为模板半径

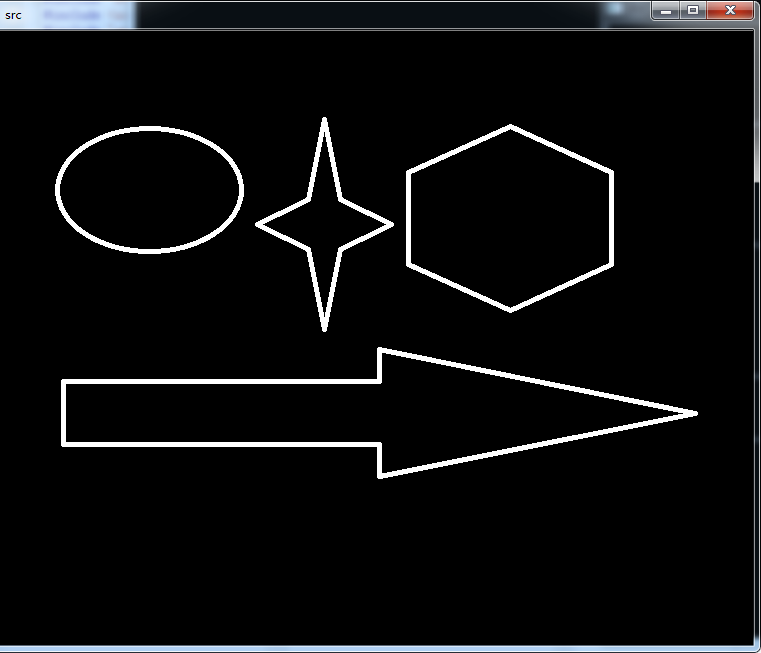

Mat moveLightDiff(Mat src,int radius){

Mat dst;

Mat srcclone = src.clone();

Mat mask = Mat::zeros(radius*2,radius*2,CV_8U);

circle(mask,Point(radius,radius),radius,Scalar(255),-1);

//顶帽

erode(srcclone,srcclone,mask);

dilate(srcclone,srcclone,mask);

dst = src - srcclone;

return dst;

}

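The algorithm comes from the morphology chapter of Gonzalez's *Digital Image Processing* (《数字图像处理教程》) and is implemented exactly as described there; it is reasonably effective. The top-hat keeps bright details smaller than the structuring element and removes everything the opening can reproduce, so `radius` should be chosen larger than the objects you want to keep.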
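To see why subtracting the opening flattens the background, here is a minimal dependency-free sketch of the same erode, dilate, subtract steps on a small integer image, using `std::vector` instead of `cv::Mat`. The helper names `morph` and `topHat` are hypothetical. The disk test `dx*dx + dy*dy <= r*r` is an idealised disk that may differ by a pixel from what `cv::circle` rasterises, and out-of-range neighbours are simply skipped, which is assumed to approximate OpenCV's default border handling for `erode`/`dilate`.

```cpp
#include <algorithm>
#include <cassert>
#include <vector>

using Image = std::vector<std::vector<int>>;

// Flat grayscale erosion (isMin = true) or dilation (isMin = false) with an
// idealised disk of the given radius; neighbours outside the image are skipped.
Image morph(const Image &img, int radius, bool isMin) {
    assert(!img.empty() && radius >= 0);
    const int rows = static_cast<int>(img.size());
    const int cols = static_cast<int>(img[0].size());
    Image out(rows, std::vector<int>(cols));
    for (int y = 0; y < rows; ++y)
        for (int x = 0; x < cols; ++x) {
            int best = img[y][x];
            for (int dy = -radius; dy <= radius; ++dy)
                for (int dx = -radius; dx <= radius; ++dx) {
                    if (dx * dx + dy * dy > radius * radius) continue;  // outside the disk
                    const int yy = y + dy, xx = x + dx;
                    if (yy < 0 || yy >= rows || xx < 0 || xx >= cols) continue;
                    best = isMin ? std::min(best, img[yy][xx]) : std::max(best, img[yy][xx]);
                }
            out[y][x] = best;
        }
    return out;
}

// Top-hat = src - opening, where opening = dilate(erode(src)).
Image topHat(const Image &src, int radius) {
    const Image opened = morph(morph(src, radius, true), radius, false);
    Image dst = src;
    for (size_t y = 0; y < src.size(); ++y)
        for (size_t x = 0; x < src[y].size(); ++x)
            dst[y][x] = std::max(0, src[y][x] - opened[y][x]);  // saturating, like 8-bit Mat subtraction
    return dst;
}
```

For example, on a 12×12 image whose background steps from 50 (left half) to 100 (right half), with two single-pixel spots 80 brighter than their surroundings, `topHat(img, 2)` returns 80 at both spots and 0 everywhere else: both plateaus are wider than the disk, so the opening reproduces the step exactly, while the spots are too small to survive the erosion.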

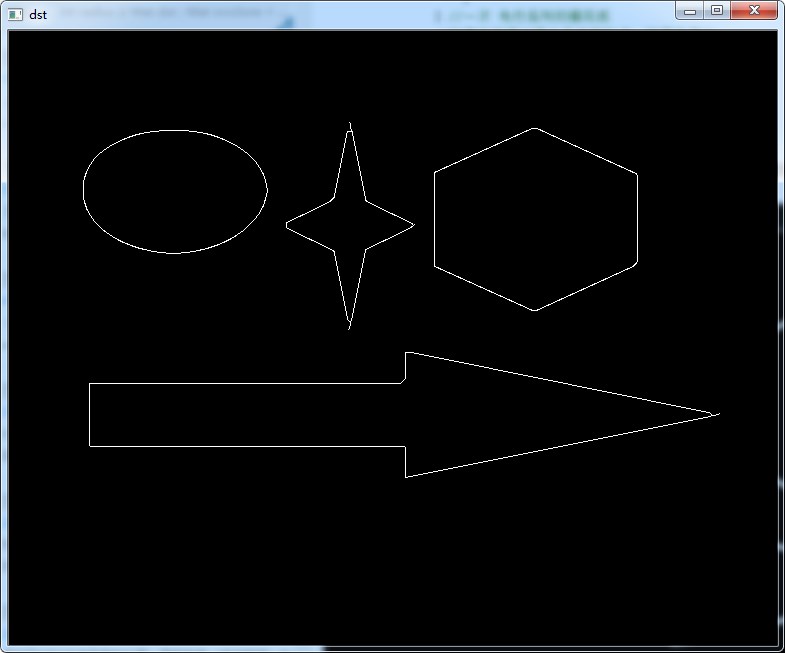

```cpp
#include <opencv2/opencv.hpp>
using namespace cv;

// Thinning: thin a binary CV_8U image (foreground > 0, background 0) with the
// classic Zhang (Zhang-Suen) fast parallel thinning algorithm.
// iterations: upper bound on the number of passes; the loop stops early once
// a full pass removes no pixel.
void thin(const Mat &src, Mat &dst, const int iterations) {
    CV_Assert(src.type() == CV_8UC1);
    const int height = src.rows - 1;
    const int width = src.cols - 1;

    // Copy src into dst unless both already share the same buffer
    if (src.data != dst.data)
        src.copyTo(dst);

    Mat tmpImg;
    const uchar *pU, *pC, *pD;   // rows above, at and below the current pixel

    for (int n = 0; n < iterations; n++) {
        bool changed = false;    // true if this pass removed at least one pixel

        // Sub-iteration 1 (row-major scan): test on a snapshot, delete in dst
        dst.copyTo(tmpImg);
        for (int i = 1; i < height; i++) {
            pU = tmpImg.ptr<uchar>(i - 1);
            pC = tmpImg.ptr<uchar>(i);
            pD = tmpImg.ptr<uchar>(i + 1);
            for (int j = 1; j < width; j++) {
                if (pC[j] == 0)
                    continue;
                // Neighbours P2..P9, clockwise starting from north
                int p2 = (pU[j] > 0), p3 = (pU[j + 1] > 0), p4 = (pC[j + 1] > 0), p5 = (pD[j + 1] > 0);
                int p6 = (pD[j] > 0), p7 = (pD[j - 1] > 0), p8 = (pC[j - 1] > 0), p9 = (pU[j - 1] > 0);
                // A(P1): number of 0->1 transitions in the cyclic sequence P2, P3, ..., P9, P2
                int ap = (p2 == 0 && p3 == 1) + (p3 == 0 && p4 == 1) + (p4 == 0 && p5 == 1)
                       + (p5 == 0 && p6 == 1) + (p6 == 0 && p7 == 1) + (p7 == 0 && p8 == 1)
                       + (p8 == 0 && p9 == 1) + (p9 == 0 && p2 == 1);
                // B(P1): number of foreground neighbours
                int bp = p2 + p3 + p4 + p5 + p6 + p7 + p8 + p9;
                if (bp >= 2 && bp <= 6 && ap == 1 && p2 * p4 * p6 == 0 && p4 * p6 * p8 == 0) {
                    dst.ptr<uchar>(i)[j] = 0;
                    changed = true;
                }
            }
        }   // end of sub-iteration 1

        // Sub-iteration 2: same tests, different last two conditions
        dst.copyTo(tmpImg);
        for (int i = 1; i < height; i++) {
            pU = tmpImg.ptr<uchar>(i - 1);
            pC = tmpImg.ptr<uchar>(i);
            pD = tmpImg.ptr<uchar>(i + 1);
            for (int j = 1; j < width; j++) {
                if (pC[j] == 0)
                    continue;
                int p2 = (pU[j] > 0), p3 = (pU[j + 1] > 0), p4 = (pC[j + 1] > 0), p5 = (pD[j + 1] > 0);
                int p6 = (pD[j] > 0), p7 = (pD[j - 1] > 0), p8 = (pC[j - 1] > 0), p9 = (pU[j - 1] > 0);
                int ap = (p2 == 0 && p3 == 1) + (p3 == 0 && p4 == 1) + (p4 == 0 && p5 == 1)
                       + (p5 == 0 && p6 == 1) + (p6 == 0 && p7 == 1) + (p7 == 0 && p8 == 1)
                       + (p8 == 0 && p9 == 1) + (p9 == 0 && p2 == 1);
                int bp = p2 + p3 + p4 + p5 + p6 + p7 + p8 + p9;
                if (bp >= 2 && bp <= 6 && ap == 1 && p2 * p4 * p8 == 0 && p2 * p6 * p8 == 0) {
                    dst.ptr<uchar>(i)[j] = 0;
                    changed = true;
                }
            }
        }   // end of sub-iteration 2: one full pass done

        // If no pixel was removed during this pass, stop early
        if (!changed)
            break;
    }
}   // end of thin
```
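The two sub-iterations differ only in their last two product conditions. The following minimal sketch is not the author's code: it uses hypothetical names `zsSubIteration` and `zhangSuenThin` and a plain `std::vector` grid instead of `cv::Mat`. It expresses the same rules with a `step` flag, which makes it easy to check them on a tiny grid without OpenCV. Like the OpenCV version, it decides on a snapshot (so all pixels are tested in parallel) and skips the one-pixel border.

```cpp
#include <cassert>
#include <vector>

using Grid = std::vector<std::vector<int>>;

// One Zhang-Suen sub-iteration. step 0 requires P2*P4*P6 == 0 and P4*P6*P8 == 0;
// step 1 requires P2*P4*P8 == 0 and P2*P6*P8 == 0. Returns true if a pixel was removed.
bool zsSubIteration(Grid &img, int step) {
    const Grid snap = img;  // decisions use the state before this sub-iteration
    const int rows = static_cast<int>(img.size());
    const int cols = static_cast<int>(img[0].size());
    bool removed = false;
    for (int i = 1; i < rows - 1; ++i)
        for (int j = 1; j < cols - 1; ++j) {
            if (snap[i][j] == 0) continue;
            // P2..P9, clockwise starting from north
            const int p[8] = {snap[i - 1][j] > 0, snap[i - 1][j + 1] > 0, snap[i][j + 1] > 0,
                              snap[i + 1][j + 1] > 0, snap[i + 1][j] > 0, snap[i + 1][j - 1] > 0,
                              snap[i][j - 1] > 0, snap[i - 1][j - 1] > 0};
            int b = 0, a = 0;
            for (int k = 0; k < 8; ++k) {
                b += p[k];                                   // B(P1)
                if (p[k] == 0 && p[(k + 1) % 8] == 1) ++a;   // A(P1), cyclic 0->1 transitions
            }
            const int p2 = p[0], p4 = p[2], p6 = p[4], p8 = p[6];
            const bool c3 = (step == 0) ? (p2 * p4 * p6 == 0) : (p2 * p4 * p8 == 0);
            const bool c4 = (step == 0) ? (p4 * p6 * p8 == 0) : (p2 * p6 * p8 == 0);
            if (b >= 2 && b <= 6 && a == 1 && c3 && c4) {
                img[i][j] = 0;
                removed = true;
            }
        }
    return removed;
}

// Runs both sub-iterations per pass until a pass removes nothing or maxIterations is hit.
void zhangSuenThin(Grid &img, int maxIterations) {
    assert(!img.empty());
    for (int n = 0; n < maxIterations; ++n) {
        const bool r1 = zsSubIteration(img, 0);
        const bool r2 = zsSubIteration(img, 1);
        if (!r1 && !r2) break;
    }
}
```

On a 3-pixel-thick, 7-pixel-long bar (rows 1 to 3, columns 1 to 7 of a 5×9 grid) this leaves a 4-pixel line along the middle row, columns 2 to 5: the bar collapses to one pixel wide, and, as is typical for Zhang-Suen, its ends are also shortened.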

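This thinning algorithm works very well on images such as brush-written (calligraphy) characters. When using it, make sure the part you want to keep is white, i.e. `Scalar(255)`, on a black (0) background; strictly speaking, the code treats any nonzero pixel as foreground.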
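Scanned brush strokes are usually dark ink on light paper, so they have to be binarized and inverted before thinning. A minimal dependency-free sketch of that step, with a hypothetical helper `inkToWhiteForeground` and a threshold chosen by the caller, is shown here; with OpenCV, the same mapping is what `cv::threshold(gray, bin, thresh, 255, cv::THRESH_BINARY_INV)` produces.

```cpp
#include <cassert>
#include <vector>

using Gray = std::vector<std::vector<int>>;

// Dark ink on light paper -> white (255) strokes on a black (0) background,
// the polarity the thinning code expects. Pixels <= thresh are treated as ink.
Gray inkToWhiteForeground(const Gray &gray, int thresh) {
    assert(!gray.empty());
    Gray out = gray;
    for (auto &row : out)
        for (int &v : row)
            v = (v <= thresh) ? 255 : 0;
    return out;
}
```

The threshold itself is left to the caller here; for unevenly lit scans it may help to apply the top-hat correction first, or to let OpenCV pick it automatically with `THRESH_OTSU`.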