Add the following dependencies to the pom file:

    <dependencies>
        <dependency>
            <groupId>org.slf4j</groupId>
            <artifactId>slf4j-simple</artifactId>
            <version>1.7.25</version>
            <scope>compile</scope>
        </dependency>
        <dependency>
            <groupId>org.apache.logging.log4j</groupId>
            <artifactId>log4j-core</artifactId>
            <version>2.8.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-common</artifactId>
            <version>2.7.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-client</artifactId>
            <version>2.7.2</version>
        </dependency>
        <dependency>
            <groupId>org.apache.hadoop</groupId>
            <artifactId>hadoop-hdfs</artifactId>
            <version>2.7.2</version>
        </dependency>
        <dependency>
            <groupId>jdk.tools</groupId>
            <artifactId>jdk.tools</artifactId>
            <version>1.8</version>
            <scope>system</scope>
            <systemPath>C:/Java/jdk1.8/lib/tools.jar</systemPath>
        </dependency>
        <dependency>
            <groupId>junit</groupId>
            <artifactId>junit</artifactId>
            <version>4.11</version>
            <scope>compile</scope>
        </dependency>
    </dependencies>

Mapper class:

    package com.kpwong.mr;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.LongWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Mapper;

    import java.io.IOException;

    // Map phase
    // KEYIN:    type of the input key (LongWritable)
    // VALUEIN:  type of the input value (Text, one line of the file)
    // KEYOUT:   type of the output key (Text, the word)
    // VALUEOUT: type of the output value (IntWritable, the count 1)
    public class WordCountMapper extends Mapper<LongWritable, Text, Text, IntWritable> {

        // Declared as fields so the same objects are reused for every word,
        // instead of creating a new Text and IntWritable inside the loop.
        Text k = new Text();
        IntWritable v = new IntWritable(1);

        @Override
        protected void map(LongWritable key, Text value, Context context)
                throws IOException, InterruptedException {
            // Get one line of input
            String line = value.toString();

            // Split the line into words
            String[] words = line.split(" ");

            // Emit (word, 1) for each word
            for (String word : words) {
                k.set(word);
                context.write(k, v);
            }
        }
    }
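The mapper splits each line on exactly one space, so a line with consecutive or leading spaces produces empty tokens, and those would be counted as words too. The following is a minimal plain-Java sketch (no Hadoop needed) that compares this with a whitespace-tolerant split. The class `TokenizeSketch` and its two methods are illustrative names, not part of the original project.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Illustrative helper, not part of the original project.
final class TokenizeSketch {

    // Same tokenization as the mapper: split on exactly one space.
    static List<String> splitOnSingleSpace(String line) {
        return Arrays.asList(line.split(" "));
    }

    // Alternative: trim first, then split on runs of whitespace.
    // A blank line yields no tokens at all.
    static List<String> splitOnWhitespace(String line) {
        String trimmed = line.trim();
        if (trimmed.isEmpty()) {
            return new ArrayList<>();
        }
        return Arrays.asList(trimmed.split("\\s+"));
    }

    public static void main(String[] args) {
        String line = "hello  world"; // two spaces between the words
        System.out.println(splitOnSingleSpace(line)); // [hello, , world]
        System.out.println(splitOnWhitespace(line));  // [hello, world]
    }
}
```

If the input files are known to use single spaces only, the original `split(" ")` is sufficient; otherwise the second variant could replace it inside `map`.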

Reducer class:

    package com.kpwong.mr;

    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Reducer;

    import java.io.IOException;

    public class WordCountReducer extends Reducer<Text, IntWritable, Text, IntWritable> {

        // Reused for every key instead of allocating a new object per call
        IntWritable v = new IntWritable();

        @Override
        protected void reduce(Text key, Iterable<IntWritable> values, Context context)
                throws IOException, InterruptedException {
            // Sum the counts for this word
            int sum = 0;
            for (IntWritable value : values) {
                sum += value.get();
            }

            // Write out (word, total)
            v.set(sum);
            context.write(key, v);
        }
    }
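To see how the Mapper and Reducer fit together, the data flow can be imitated in plain Java without any Hadoop classes. This is only a rough sketch: the class `WordCountFlowSketch` and its methods are hypothetical, and a `TreeMap` stands in for the framework's shuffle, which groups the mapper output by key and hands the reducer one key with all of its values.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

// Plain-Java imitation of map -> shuffle -> reduce; not Hadoop code.
final class WordCountFlowSketch {

    // "Map" + "shuffle": emit 1 for every word and group the 1s by word.
    static Map<String, List<Integer>> mapAndShuffle(List<String> lines) {
        Map<String, List<Integer>> grouped = new TreeMap<>(); // keys kept sorted
        for (String line : lines) {
            for (String word : line.split(" ")) {
                grouped.computeIfAbsent(word, k -> new ArrayList<>()).add(1);
            }
        }
        return grouped;
    }

    // "Reduce": sum the grouped values of each key.
    static Map<String, Integer> reduce(Map<String, List<Integer>> grouped) {
        Map<String, Integer> counts = new TreeMap<>();
        for (Map.Entry<String, List<Integer>> entry : grouped.entrySet()) {
            int sum = 0;
            for (int value : entry.getValue()) {
                sum += value;
            }
            counts.put(entry.getKey(), sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<String> lines = Arrays.asList("hello world", "hello hadoop");
        System.out.println(reduce(mapAndShuffle(lines))); // {hadoop=1, hello=2, world=1}
    }
}
```

In the real job the grouping happens across machines and the values arrive as an `Iterable<IntWritable>`, but the summing loop is the same as in `WordCountReducer.reduce`.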

Driver class (note: the Driver, Mapper, and Reducer all follow a fixed template):

    package com.kpwong.mr;

    import org.apache.hadoop.conf.Configuration;
    import org.apache.hadoop.fs.Path;
    import org.apache.hadoop.io.IntWritable;
    import org.apache.hadoop.io.Text;
    import org.apache.hadoop.mapreduce.Job;
    import org.apache.hadoop.mapreduce.lib.input.FileInputFormat;
    import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat;

    import java.io.IOException;

    public class WordCountDriver {

        public static void main(String[] args)
                throws IOException, ClassNotFoundException, InterruptedException {
            // 1. Get the Job object
            Configuration conf = new Configuration();
            Job job = Job.getInstance(conf);

            // 2. Set the jar location (found via the driver class)
            job.setJarByClass(WordCountDriver.class);

            // 3. Associate the Mapper and Reducer classes
            job.setMapperClass(WordCountMapper.class);
            job.setReducerClass(WordCountReducer.class);

            // 4. Set the key/value types of the Mapper output
            job.setMapOutputKeyClass(Text.class);
            job.setMapOutputValueClass(IntWritable.class);

            // 5. Set the key/value types of the final output
            job.setOutputKeyClass(Text.class);
            job.setOutputValueClass(IntWritable.class);

            // 6. Set the input and output paths
            FileInputFormat.setInputPaths(job, new Path(args[0]));
            FileOutputFormat.setOutputPath(job, new Path(args[1]));

            // 7. Submit the job and wait for it to finish
            boolean result = job.waitForCompletion(true);
            System.exit(result ? 0 : 1);
        }
    }

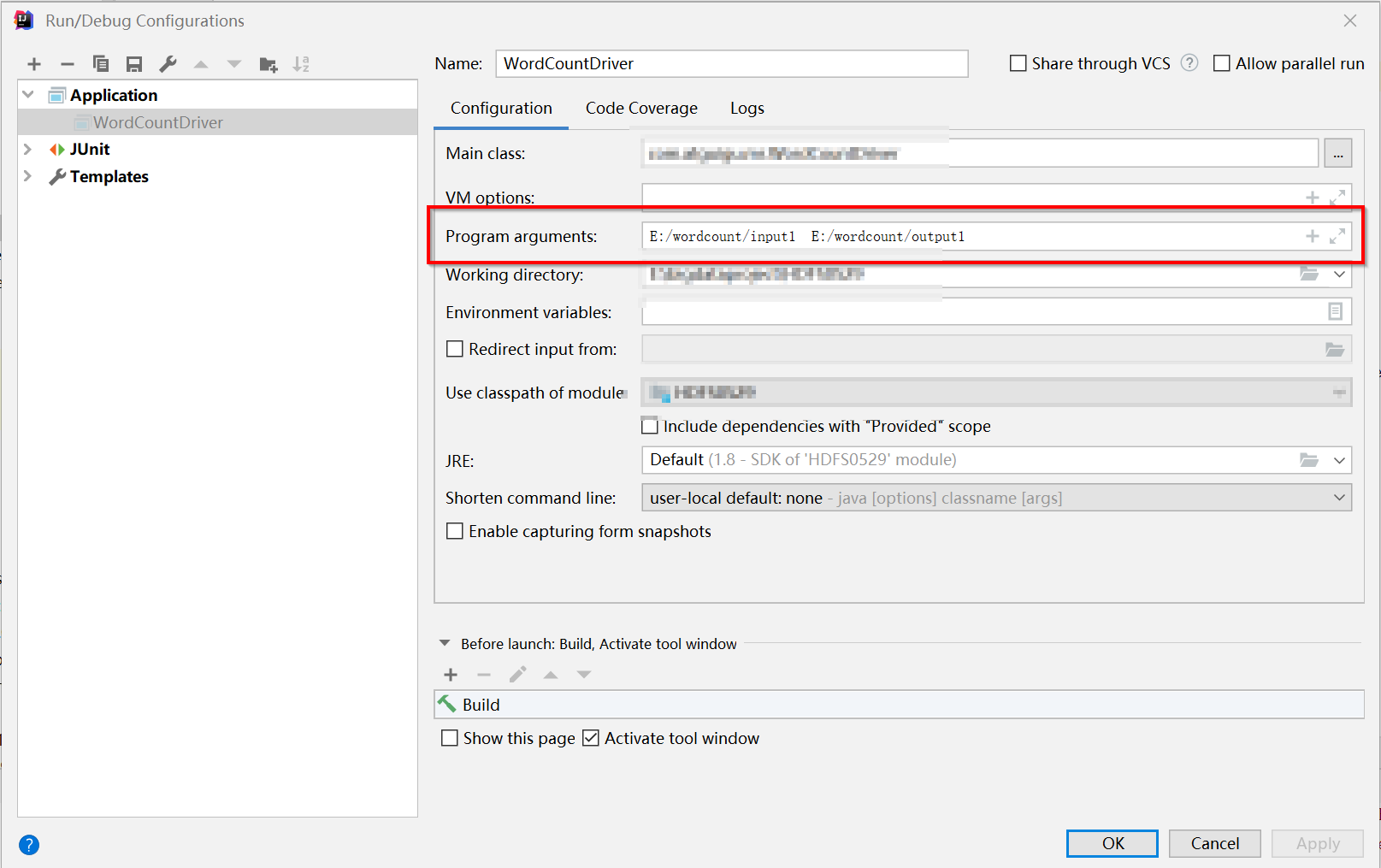

Add the run arguments args[0] (input path) and args[1] (output path):

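Put the txt files to be counted into the input1 folder. Do not create the output1 folder yourself: the job creates it, and it fails with a FileAlreadyExistsException if the folder already exists.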
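For a local run, the two arguments can be sanity-checked before the job is submitted. The sketch below is a hypothetical helper (the class `LocalPathCheck` and the method `problemWith` are not from the original project), and it assumes both arguments are local filesystem paths. For HDFS paths, Hadoop's own `FileSystem` API would be needed instead.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical pre-flight check for local runs; not part of the original project.
final class LocalPathCheck {

    // Returns null when both paths look usable, otherwise a short description of the problem.
    static String problemWith(String input, String output) {
        Path in = Paths.get(input);
        Path out = Paths.get(output);
        if (!Files.exists(in)) {
            return "input path does not exist: " + in;
        }
        if (Files.exists(out)) {
            return "output path already exists: " + out;
        }
        return null;
    }

    public static void main(String[] args) {
        if (args.length != 2) {
            System.err.println("expected two arguments: <input path> <output path>");
            return;
        }
        String problem = problemWith(args[0], args[1]);
        System.out.println(problem == null ? "paths look fine" : problem);
    }
}
```

A call such as `LocalPathCheck.problemWith(args[0], args[1])` at the top of the driver's `main` would be one place to use it, printing the message and exiting early when it is not null.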