Environment setup

pip install lxml

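How parsing works:

- Fetch the page source.

- Instantiate an etree object and load the page source into it.

- Call the object's xpath method to locate the target tags.

- Note: the xpath method must be given an XPath expression, which both locates the tags and captures their content (text or attribute values).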
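The steps above can be sketched on an inline HTML string, so the example runs without any network request. The markup, class names, and values here are invented for illustration; in a real crawl the page source would come from `requests.get(...).text`.

```python
from lxml import etree

# Hypothetical page source standing in for a downloaded page
page_text = """
<html><body>
  <ul class="house-list">
    <li><h2><a href="/a">Flat A</a></h2><p class="price">100</p></li>
    <li><h2><a href="/b">Flat B</a></h2><p class="price">200</p></li>
  </ul>
</body></html>
"""

# Load the page source into an etree object
tree = etree.HTML(page_text)

# Locate tags with an XPath expression; the result is a list of elements
li_list = tree.xpath('//ul[@class="house-list"]/li')

# Expressions starting with ./ are evaluated relative to each element.
# text() captures text content, @href captures an attribute value.
titles = [li.xpath('./h2/a/text()')[0] for li in li_list]
links = [li.xpath('./h2/a/@href')[0] for li in li_list]
prices = [li.xpath('./p[@class="price"]/text()')[0] for li in li_list]
print(titles, links, prices)
```

Every call to `xpath` returns a list, even when only one node matches, which is why the examples index with `[0]`.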

Examples

1. Scraping second-hand housing listings from 58

Code:

    import requests
    from lxml import etree

    url = 'https://bj.58.com/shahe/ershoufang/?utm_source=market&spm=u-2d2yxv86y3v43nkddh1.BDPCPZ_BT&PGTID=0d30000c-0047-e4e6-f587-683307ca570e&ClickID=1'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.119 Safari/537.36'
    }
    page_text = requests.get(url=url, headers=headers).text

    tree = etree.HTML(page_text)  # create an etree object from the page source
    li_list = tree.xpath('//ul[@class="house-list-wrap"]/li')

    # Write one "title:price" line per listing; the with block closes the file
    with open('58.csv', 'w', encoding='utf-8') as fp:
        for li in li_list:
            title = li.xpath('./div[2]/h2/a/text()')[0]
            # The price is spread over several text nodes, so join them
            price = ''.join(li.xpath('./div[3]//text()'))
            fp.write(title + ':' + price + '\n')
    print('over')
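The script above indexes `[0]` directly, which raises `IndexError` if a listing lacks a title, and it writes `title:price` lines rather than real CSV rows. The following is a minimal sketch of one way to handle both, not the original author's method. The helper `first` and the sample markup are hypothetical, and an in-memory buffer stands in for the output file.

```python
import csv
import io
from lxml import etree

def first(node, path, default=''):
    """Return the first text/attribute XPath result, stripped, or default if nothing matched."""
    result = node.xpath(path)
    return result[0].strip() if result else default

# Hypothetical markup that mimics the list structure targeted above
html = """
<ul class="house-list-wrap">
  <li><div></div><div><h2><a> Two-bedroom flat </a></h2></div><div><b>350</b><span>万</span></div></li>
  <li><div></div><div></div><div><b>280</b></div></li>
</ul>
"""
tree = etree.HTML(html)

# Stands in for open('58.csv', 'w', newline='', encoding='utf-8')
buffer = io.StringIO()
writer = csv.writer(buffer)
writer.writerow(['title', 'price'])
for li in tree.xpath('//ul[@class="house-list-wrap"]/li'):
    title = first(li, './div[2]/h2/a/text()')               # '' when the title is missing
    price = ''.join(li.xpath('./div[3]//text()')).strip()   # join the split price text nodes
    writer.writerow([title, price])

# Read the buffer back to check what was written
rows = list(csv.reader(io.StringIO(buffer.getvalue())))
print(rows)
```

Using `csv.writer` also takes care of quoting when a title itself contains a comma.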

2. Scraping high-resolution images

Here we use urllib to save the images quickly.

    import os
    import urllib.request  # importing only "urllib" does not guarantee urllib.request is loaded

    import requests
    from lxml import etree

    url = 'http://pic.netbian.com/4kmeinv/'
    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.119 Safari/537.36'
    }
    response = requests.get(url=url, headers=headers)
    # response.encoding = 'utf-8'
    if not os.path.exists('./imgs'):
        os.mkdir('./imgs')
    page_text = response.text

    tree = etree.HTML(page_text)
    li_list = tree.xpath('//div[@class="slist"]/ul/li')
    for li in li_list:
        img_name = li.xpath('./a/b/text()')[0]
        # Fix garbled Chinese: undo the wrong ISO-8859-1 decoding, then decode as GBK
        img_name = img_name.encode('iso-8859-1').decode('gbk')
        img_url = 'http://pic.netbian.com' + li.xpath('./a/img/@src')[0]
        img_path = './imgs/' + img_name + '.jpg'
        urllib.request.urlretrieve(url=img_url, filename=img_path)
        print(img_path, 'downloaded!')
    print('over!!!')
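The `encode('iso-8859-1').decode('gbk')` round trip used for the image names can be checked in isolation. The sketch below assumes the page is GBK-encoded but was decoded as ISO-8859-1, which is what the code above implies; the sample string is made up.

```python
# Hypothetical image title as it exists on the server
original = '高清壁纸'

# Bytes as the server sends them (assumed GBK)
raw = original.encode('gbk')

# What the client sees if the body is decoded with the wrong charset
garbled = raw.decode('iso-8859-1')

# ISO-8859-1 maps every byte to exactly one character, so encoding the
# garbled text back recovers the original bytes, which then decode as GBK
repaired = garbled.encode('iso-8859-1').decode('gbk')

print(repr(garbled))
print(repaired)
```

If the whole page is known to be GBK, setting `response.encoding = 'gbk'` before reading `response.text` should avoid the garbling in the first place.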

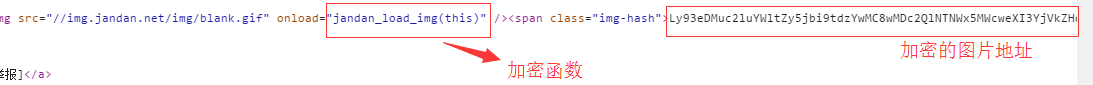

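3. Downloading images from jandan.net

This site uses a common anti-scraping measure: the image addresses are encoded rather than written in plain form in the page source.

Open the response returned for the request and find the encoded image addresses there.

The HTML shown in the browser's Elements panel holds the image addresses as they appear after the page has finished loading, so those addresses cannot be scraped directly from the raw response.

Code: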

    import base64
    import urllib.request  # importing only "urllib" does not guarantee urllib.request is loaded

    import requests
    from lxml import etree

    headers = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.119 Safari/537.36'
    }
    url = 'http://jandan.net/ooxx'
    page_text = requests.get(url=url, headers=headers).text

    tree = etree.HTML(page_text)
    img_hash_list = tree.xpath('//span[@class="img-hash"]/text()')
    for img_hash in img_hash_list:
        # Base64-decode the hash to recover the original (protocol-relative) image address
        img_url = 'http:' + base64.b64decode(img_hash).decode()
        img_name = img_url.split('/')[-1]
        urllib.request.urlretrieve(url=img_url, filename=img_name)
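The decoding step can be tried without touching the site. The sketch below builds a hypothetical hash from a made-up protocol-relative URL and then recovers it the same way the loop above does; `decode_img_hash` is an illustrative helper, not part of any library.

```python
import base64

def decode_img_hash(img_hash, scheme='http:'):
    """Base64-decode a string holding a protocol-relative URL and prepend a scheme."""
    return scheme + base64.b64decode(img_hash).decode('utf-8')

# Hypothetical address; real values come from the span.img-hash nodes
sample_url = '//img.example.com/large/abc123.jpg'
sample_hash = base64.b64encode(sample_url.encode('utf-8')).decode('ascii')

img_url = decode_img_hash(sample_hash)
img_name = img_url.split('/')[-1]   # last path segment becomes the file name
print(sample_hash)
print(img_url, img_name)
```

Base64 is a reversible encoding with no key, so once the scheme is recognized a single `b64decode` call is enough.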

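4. Downloading résumé templates

When many requests are sent in quick succession, the site may ban the IP address. Two ways to reduce this are to use proxy IPs or to close the connection as soon as each request finishes.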

    import random

    import requests
    from lxml import etree

    headers = {
        # Close the connection as soon as the request completes (frees pooled resources promptly)
        'Connection': 'close',
        'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/72.0.3626.119 Safari/537.36'
    }
    url = 'http://sc.chinaz.com/jianli/free_%d.html'
    for page in range(1, 4):
        # The first page has no numeric suffix
        if page == 1:
            new_url = 'http://sc.chinaz.com/jianli/free.html'
        else:
            new_url = url % page

        response = requests.get(url=new_url, headers=headers)
        response.encoding = 'utf-8'
        page_text = response.text

        tree = etree.HTML(page_text)
        div_list = tree.xpath('//div[@id="container"]/div')
        for div in div_list:
            detail_url = div.xpath('./a/@href')[0]
            name = div.xpath('./a/img/@alt')[0]

            # Parse the detail page with its own tree so the listing tree is not overwritten
            detail_page = requests.get(url=detail_url, headers=headers).text
            detail_tree = etree.HTML(detail_page)
            download_list = detail_tree.xpath('//div[@class="clearfix mt20 downlist"]/ul/li/a/@href')
            # Pick one of the download mirrors at random
            download_url = random.choice(download_list)
            data = requests.get(url=download_url, headers=headers).content
            fileName = name + '.rar'
            with open(fileName, 'wb') as fp:
                fp.write(data)
            print(fileName, 'downloaded')
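The pagination rule and the proxy idea mentioned above can be sketched as two small helpers. This is an illustration under assumptions: the proxy addresses are placeholders, not working proxies, and `page_url` and `random_proxies` are hypothetical names. The dictionary shape matches what the `proxies` argument of `requests.get` accepts.

```python
import random

FIRST_PAGE = 'http://sc.chinaz.com/jianli/free.html'
PAGE_TEMPLATE = 'http://sc.chinaz.com/jianli/free_%d.html'

# Placeholder addresses for illustration only
PROXY_POOL = ['http://10.0.0.1:8080', 'http://10.0.0.2:8080']

def page_url(page):
    """Return the listing URL for a 1-based page number (page 1 has no suffix)."""
    return FIRST_PAGE if page == 1 else PAGE_TEMPLATE % page

def random_proxies(pool):
    """Pick one proxy at random and return it as a scheme-to-address mapping."""
    address = random.choice(pool)
    return {'http': address, 'https': address}

urls = [page_url(page) for page in range(1, 4)]
proxies = random_proxies(PROXY_POOL)
print(urls)
print(proxies)

# A request through the chosen proxy would then look like:
# requests.get(url=urls[0], headers=headers, proxies=proxies)
```

Choosing a fresh proxy per request spreads the traffic over several addresses, while the `Connection: close` header addresses the separate problem of connections lingering in the pool.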