1. org.apache.pig.backend.executionengine.ExecException: ERROR 4010: Cannot find hadoop configurations in classpath (neither hadoop-site.xml nor core-site.xml was found in the classpath).If you plan to use local mode, please put -x local option in command line

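The message says plainly that no Hadoop configuration file could be found. The fix used here is to add the `conf` subdirectory of the Hadoop installation to the environment, and the profile below appends it to the PATH variable. Note that Pig actually looks for core-site.xml or hadoop-site.xml on the Java classpath rather than on PATH. If this alone does not help, also export `PIG_CLASSPATH=$HADOOP_HOME/conf`. The environment settings used here: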

```bash
# Set Java and Hadoop-ecosystem environment variables
PIG_HOME=/home/hadoop/pig-0.9.2
HBASE_HOME=/home/hadoop/hbase-0.94.3
HIVE_HOME=/home/hadoop/hive-0.9.0
HADOOP_HOME=/home/hadoop/hadoop-1.1.1
JAVA_HOME=/home/hadoop/jdk1.7.0
PATH=$JAVA_HOME/bin:$PIG_HOME/bin:$HBASE_HOME/bin:$HIVE_HOME/bin:$HADOOP_HOME/bin:$HADOOP_HOME/conf:$PATH
CLASSPATH=.:$JAVA_HOME/lib/dt.jar:$HBASE_HOME/lib:$PIG_HOME/lib:$HIVE_HOME/lib:$JAVA_HOME/lib/tools.jar
export PIG_HOME
export HBASE_HOME
export HADOOP_HOME
export JAVA_HOME
export HIVE_HOME
export PATH
export CLASSPATH
```
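Because the error message talks about the classpath, here is a minimal sketch of setting `PIG_CLASSPATH` defensively. It assumes the Hadoop 1.x layout where core-site.xml sits in `$HADOOP_HOME/conf`. The helper name `use_hadoop_conf` is hypothetical. It exports `PIG_CLASSPATH`, which Pig's launcher script reads for extra classpath entries, only when the directory really contains one of the two files the error mentions.

```bash
#!/usr/bin/env bash
# Sketch: point Pig at a Hadoop configuration directory via PIG_CLASSPATH.
# use_hadoop_conf is a hypothetical helper name, not part of Pig or Hadoop.

use_hadoop_conf() {
  local conf_dir=$1
  # ERROR 4010 means neither of these files was found on the classpath.
  if [ -f "$conf_dir/core-site.xml" ] || [ -f "$conf_dir/hadoop-site.xml" ]; then
    # Prepend the directory, keeping any entries that were already set.
    export PIG_CLASSPATH="$conf_dir${PIG_CLASSPATH:+:$PIG_CLASSPATH}"
    return 0
  fi
  echo "no core-site.xml or hadoop-site.xml in $conf_dir" >&2
  return 1
}

# Example: use the conf directory of the installation configured above, if any.
if [ -n "${HADOOP_HOME:-}" ]; then
  use_hadoop_conf "$HADOOP_HOME/conf" || echo "PIG_CLASSPATH left unchanged" >&2
fi
```

Checking for the files first gives a clear message when `HADOOP_HOME` points at the wrong place, instead of the same ERROR 4010 again.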

2. Fix for "could only be replicated to 0 nodes, instead of 1"

```text
hadoop@ubuntu:~/hadoop-1.1.1/bin$ hadoop fs -put /home/hadoop/file/* input
12/12/27 18:15:30 WARN hdfs.DFSClient: Error Recovery for block null bad datanode[0] nodes == null
12/12/27 18:15:30 WARN hdfs.DFSClient: Could not get block locations. Source file "/user/hadoop/input/file1.txt" - Aborting...
put: java.io.IOException: File /user/hadoop/input/file1.txt could only be replicated to 0 nodes, instead of 1
12/12/27 18:15:30 ERROR hdfs.DFSClient: Failed to close file /user/hadoop/input/file1.txt
org.apache.hadoop.ipc.RemoteException: java.io.IOException: File /user/hadoop/input/file1.txt could only be replicated to 0 nodes, instead of 1
```

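When the exception occurs

It is thrown when uploading a file to HDFS, i.e. by `hadoop fs -put [local path] [HDFS directory]`.

There are many answers to this problem online. In summary, check the following: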

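1. Whether the local system and HDFS have enough free space (in my case, insufficient disk space caused the exception).

2. Whether the number of live datanodes is normal.

3. Whether the namenode is in safe mode.

4. Whether the firewall is turned off.

5. Stop Hadoop, reformat HDFS, and restart Hadoop (reformatting erases all data stored in HDFS).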

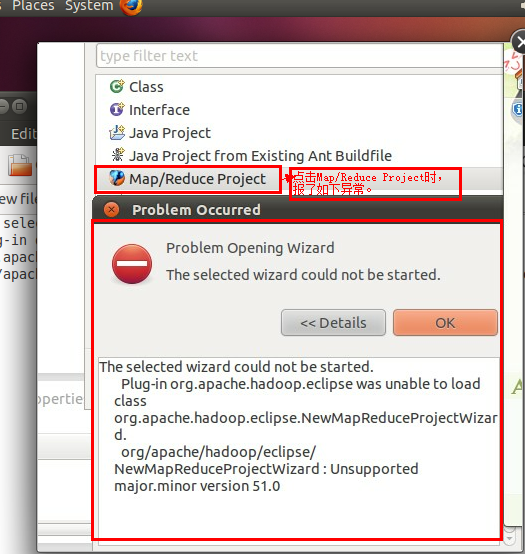
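The first four checks can be scripted. This is a rough sketch for a single-node Hadoop 1.x setup. The helper names are hypothetical. The parsed strings (`Datanodes available: N ...` and `Safe mode is ON`) are assumed to match what `hadoop dfsadmin -report` and `hadoop dfsadmin -safemode get` print on that version. The firewall check is left out because it depends on the distribution.

```bash
#!/usr/bin/env bash
# Sketch of the checklist above. Helper names are hypothetical; the parsed
# strings are assumed to match Hadoop 1.x dfsadmin output.

# Number of live datanodes, read from `hadoop dfsadmin -report` on stdin.
live_datanodes() {
  sed -n 's/^Datanodes available: \([0-9][0-9]*\).*/\1/p' | head -n 1
}

# Succeeds if `hadoop dfsadmin -safemode get` output on stdin says safe mode is ON.
in_safemode() {
  grep -q 'Safe mode is ON'
}

# Free space in KB on the filesystem holding the given path (POSIX df format).
free_kb() {
  df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Only query the cluster if the hadoop command is available.
if command -v hadoop >/dev/null 2>&1; then
  live=$(hadoop dfsadmin -report 2>/dev/null | live_datanodes)
  echo "live datanodes: ${live:-unknown}"   # 0 means no block can be placed
  if hadoop dfsadmin -safemode get 2>/dev/null | in_safemode; then
    echo "namenode is in safe mode"
  fi
fi
echo "free space on /: $(free_kb /) KB"
```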

3. Fix for "org/apache/hadoop/eclipse/NewMapReduceProjectWizard : Unsupported major.minor version 51.0"

```text
The selected wizard could not be started.
Plug-in org.apache.hadoop.eclipse was unable to load class org.apache.hadoop.eclipse.NewMapReduceProjectWizard.
org/apache/hadoop/eclipse/NewMapReduceProjectWizard : Unsupported major.minor version 51.0
```

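As shown in the figure below:

This error appears when integrating Eclipse with Hadoop and is caused by a JDK that is too old. Class-file version 51.0 corresponds to Java 7, so the plugin was compiled for Java 7 while Eclipse was running on an older JVM. My fix was simply to switch to a newer JDK (Java 7 or later).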
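To confirm which Java release a class was compiled for, you can read the version fields from the class file header. In this sketch the helper names are hypothetical. Bytes 6 and 7 of a class file hold the major version (big-endian). For major versions 49 and above, the Java release is the major version minus 44, so 51 means Java 7.

```bash
#!/usr/bin/env bash
# Sketch: read the class-file version behind "Unsupported major.minor version".
# class_major_version and java_release_for_major are hypothetical helper names.

# After the 4-byte 0xCAFEBABE magic, bytes 4-5 hold the minor version and
# bytes 6-7 the major version, both big-endian.
class_major_version() {
  # Unquoted on purpose: split od's output into the two byte values.
  set -- $(od -An -j6 -N2 -tu1 "$1")
  echo $(( $1 * 256 + $2 ))
}

# Valid for major versions >= 49: 49 = Java 5, 50 = Java 6, 51 = Java 7.
java_release_for_major() {
  echo $(( $1 - 44 ))
}
```

To check the plugin, first extract the class, e.g. `unzip -p <plugin jar> org/apache/hadoop/eclipse/NewMapReduceProjectWizard.class > /tmp/Wizard.class` (the jar name is a placeholder). Then compare `class_major_version /tmp/Wizard.class` with the Java release that Eclipse runs on.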

4. Fix for "hadoop-hadoop-*-ubuntu.out: Permission denied"

```text
This script is Deprecated. Instead use start-dfs.sh and start-mapred.sh
starting namenode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-namenode-ubuntu.out
/home/hadoop/hadoop-0.21.0/bin/../bin/hadoop-daemon.sh: line 126: /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-namenode-ubuntu.out: Permission denied
head: cannot open `/home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-namenode-ubuntu.out' for reading: No such file or directory
localhost: starting datanode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-datanode-ubuntu.out
localhost: /home/hadoop/hadoop-0.21.0/bin/hadoop-daemon.sh: line 126: /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-datanode-ubuntu.out: Permission denied
localhost: head: cannot open `/home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-datanode-ubuntu.out' for reading: No such file or directory
localhost: starting secondarynamenode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-secondarynamenode-ubuntu.out
localhost: /home/hadoop/hadoop-0.21.0/bin/hadoop-daemon.sh: line 126: /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-secondarynamenode-ubuntu.out: Permission denied
localhost: head: cannot open `/home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-secondarynamenode-ubuntu.out' for reading: No such file or directory
starting jobtracker, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-jobtracker-ubuntu.out
/home/hadoop/hadoop-0.21.0/bin/../bin/hadoop-daemon.sh: line 126: /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-jobtracker-ubuntu.out: Permission denied
head: cannot open `/home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-jobtracker-ubuntu.out' for reading: No such file or directory
localhost: starting tasktracker, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-tasktracker-ubuntu.out
localhost: /home/hadoop/hadoop-0.21.0/bin/hadoop-daemon.sh: line 126: /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-tasktracker-ubuntu.out: Permission denied
localhost: head: cannot open `/home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-tasktracker-ubuntu.out' for reading: No such file or directory
```

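The output shows that the following files could not be created in /home/hadoop/hadoop-0.21.0/logs/ ("Permission denied"), so they do not exist. The fix is to create them: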

hadoop-hadoop-namenode-ubuntu.out

hadoop-hadoop-datanode-ubuntu.out

hadoop-hadoop-jobtracker-ubuntu.out

hadoop-hadoop-tasktracker-ubuntu.out

hadoop-hadoop-secondarynamenode-ubuntu.out

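Then change both the group and the owner of the logs directory (/home/hadoop/hadoop-0.21.0/logs/) to hadoop. Run the commands from the parent directory, /home/hadoop/hadoop-0.21.0, so that `logs` refers to the directory itself:

```text
root@ubuntu:/home/hadoop/hadoop-0.21.0# chgrp -R hadoop logs
root@ubuntu:/home/hadoop/hadoop-0.21.0# chown -R hadoop logs
```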
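Before and after running these commands, it helps to see which entries are actually affected. Here is a minimal sketch using `find`. `not_owned_by` is a hypothetical helper name, and the user, group and path in the example come from this section.

```bash
#!/usr/bin/env bash
# Sketch: list entries under a directory whose owner or group differs from the
# expected ones, i.e. what `chown -R` / `chgrp -R` would change.
# not_owned_by is a hypothetical helper name.
not_owned_by() {
  local user=$1 group=$2 dir=$3
  find "$dir" \( ! -user "$user" -o ! -group "$group" \) -print
}

# Example (paths from this section):
# not_owned_by hadoop hadoop /home/hadoop/hadoop-0.21.0/logs
```

Empty output after the fix means every file in the logs directory now belongs to the hadoop user and group.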

5. Fix for "java.io.FileNotFoundException: .../SecurityAuth.audit (Permission denied)"

```text
hadoop@ubuntu:~/hadoop-0.21.0/bin$ ./start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-mapred.sh
starting namenode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-namenode-ubuntu.out
log4j:ERROR setFile(null,true) call failed.
java.io.FileNotFoundException: /home/hadoop/hadoop-0.21.0/logs/SecurityAuth.audit (Permission denied)
at java.io.FileOutputStream.openAppend(Native Method)
at java.io.FileOutputStream.<init>(FileOutputStream.java:192)
at java.io.FileOutputStream.<init>(FileOutputStream.java:116)
at org.apache.log4j.FileAppender.setFile(FileAppender.java:290)
at org.apache.log4j.FileAppender.activateOptions(FileAppender.java:164)
at org.apache.log4j.DailyRollingFileAppender.activateOptions(DailyRollingFileAppender.java:216)
at org.apache.log4j.config.PropertySetter.activate(PropertySetter.java:257)
at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:133)
localhost: starting datanode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-datanode-ubuntu.out
localhost: log4j:ERROR setFile(null,true) call failed.
localhost: java.io.FileNotFoundException: /home/hadoop/hadoop-0.21.0/logs/SecurityAuth.audit (Permission denied)
localhost: at java.io.FileOutputStream.openAppend(Native Method)
localhost: at java.io.FileOutputStream.<init>(FileOutputStream.java:192)
localhost: at java.io.FileOutputStream.<init>(FileOutputStream.java:116)
localhost: at org.apache.log4j.FileAppender.setFile(FileAppender.java:290)
localhost: at org.apache.log4j.FileAppender.activateOptions(FileAppender.java:164)
localhost: at org.apache.log4j.DailyRollingFileAppender.activateOptions(DailyRollingFileAppender.java:216)
localhost: at org.apache.log4j.config.PropertySetter.activate(PropertySetter.java:257)
localhost: at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:133)
localhost: starting secondarynamenode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-secondarynamenode-ubuntu.out
localhost: log4j:ERROR setFile(null,true) call failed.
localhost: java.io.FileNotFoundException: /home/hadoop/hadoop-0.21.0/logs/SecurityAuth.audit (Permission denied)
localhost: at java.io.FileOutputStream.openAppend(Native Method)
localhost: at java.io.FileOutputStream.<init>(FileOutputStream.java:192)
localhost: at java.io.FileOutputStream.<init>(FileOutputStream.java:116)
localhost: at org.apache.log4j.FileAppender.setFile(FileAppender.java:290)
localhost: at org.apache.log4j.FileAppender.activateOptions(FileAppender.java:164)
localhost: at org.apache.log4j.DailyRollingFileAppender.activateOptions(DailyRollingFileAppender.java:216)
localhost: at org.apache.log4j.config.PropertySetter.activate(PropertySetter.java:257)
localhost: at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:133)
starting jobtracker, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-jobtracker-ubuntu.out
log4j:ERROR setFile(null,true) call failed.
java.io.FileNotFoundException: /home/hadoop/hadoop-0.21.0/logs/SecurityAuth.audit (Permission denied)
at java.io.FileOutputStream.openAppend(Native Method)
at java.io.FileOutputStream.<init>(FileOutputStream.java:192)
at java.io.FileOutputStream.<init>(FileOutputStream.java:116)
at org.apache.log4j.FileAppender.setFile(FileAppender.java:290)
at org.apache.log4j.FileAppender.activateOptions(FileAppender.java:164)
at org.apache.log4j.DailyRollingFileAppender.activateOptions(DailyRollingFileAppender.java:216)
at org.apache.log4j.config.PropertySetter.activate(PropertySetter.java:257)
at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:133)
localhost: starting tasktracker, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-tasktracker-ubuntu.out
localhost: log4j:ERROR setFile(null,true) call failed.
localhost: java.io.FileNotFoundException: /home/hadoop/hadoop-0.21.0/logs/SecurityAuth.audit (Permission denied)
localhost: at java.io.FileOutputStream.openAppend(Native Method)
localhost: at java.io.FileOutputStream.<init>(FileOutputStream.java:192)
localhost: at java.io.FileOutputStream.<init>(FileOutputStream.java:116)
localhost: at org.apache.log4j.FileAppender.setFile(FileAppender.java:290)
localhost: at org.apache.log4j.FileAppender.activateOptions(FileAppender.java:164)
localhost: at org.apache.log4j.DailyRollingFileAppender.activateOptions(DailyRollingFileAppender.java:216)
localhost: at org.apache.log4j.config.PropertySetter.activate(PropertySetter.java:257)
localhost: at org.apache.log4j.config.PropertySetter.setProperties(PropertySetter.java:133)
hadoop@ubuntu:~/hadoop-0.21.0/bin$
```

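The messages show that the hadoop user cannot write SecurityAuth.audit. Neither it nor the history directory next to it belongs to the hadoop group or the hadoop user. The fix is to give both to the hadoop group and the hadoop user: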

```text
root@ubuntu:/home/hadoop/hadoop-0.21.0/logs# chgrp -R hadoop history
root@ubuntu:/home/hadoop/hadoop-0.21.0/logs# chgrp -R hadoop SecurityAuth.audit
root@ubuntu:/home/hadoop/hadoop-0.21.0/logs# chown -R hadoop history
root@ubuntu:/home/hadoop/hadoop-0.21.0/logs# chown -R hadoop SecurityAuth.audit
```
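log4j opens SecurityAuth.audit for appending. What matters, then, is whether the user starting the daemons can write to that file, and to the logs directory if the file has to be created. Here is a sketch of that check, meant to be run as the hadoop user. `unwritable_files` is a hypothetical helper, and it only looks one level deep.

```bash
#!/usr/bin/env bash
# Sketch: print the directory itself and any direct entries in it that the
# current user cannot write. unwritable_files is a hypothetical helper name.
unwritable_files() {
  local dir=$1 f
  # Creating a missing log file needs write access to the directory.
  [ -w "$dir" ] || printf '%s\n' "$dir"
  for f in "$dir"/*; do
    [ -e "$f" ] || continue          # empty directory: glob stays literal
    [ -w "$f" ] || printf '%s\n' "$f"
  done
}

# Example, as the hadoop user:
# unwritable_files /home/hadoop/hadoop-0.21.0/logs
```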

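6. Fix for "InconsistentFSStateException: ... checkpoint directory does not exist or is not accessible"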

```text
hadoop@ubuntu:~/hadoop-0.21.0/bin$ ./start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-mapred.sh
starting namenode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-namenode-ubuntu.out
localhost: starting datanode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-datanode-ubuntu.out
localhost: starting secondarynamenode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-secondarynamenode-ubuntu.out
localhost: Exception in thread "main" org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /home/hadoop/hadoop-datastore/dfs/namesecondary is in an inconsistent state: checkpoint directory does not exist or is not accessible.
localhost: at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode$CheckpointStorage.recoverCreate(SecondaryNameNode.java:534)
localhost: at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.initialize(SecondaryNameNode.java:149)
localhost: at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.<init>(SecondaryNameNode.java:119)
localhost: at org.apache.hadoop.hdfs.server.namenode.SecondaryNameNode.main(SecondaryNameNode.java:481)
starting jobtracker, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-jobtracker-ubuntu.out
localhost: starting tasktracker, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-tasktracker-ubuntu.out
hadoop@ubuntu:~/hadoop-0.21.0/bin$
```

This is a directory permission problem. Giving the data store directory to the hadoop user and group fixes it:

```text
root@ubuntu:/home/hadoop# chgrp -R hadoop hadoop-datastore
root@ubuntu:/home/hadoop# chown -R hadoop hadoop-datastore
```
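The exception names two possible causes: the directory is missing, or it is not accessible. The sketch below uses the hypothetical helper `check_storage_dir` to tell them apart for the current user. Run it as the hadoop user against the path printed in the exception.

```bash
#!/usr/bin/env bash
# Sketch: classify a storage directory the way the exception message does.
# check_storage_dir is a hypothetical helper name.
check_storage_dir() {
  if [ ! -d "$1" ]; then
    echo "missing"
  elif [ ! -r "$1" ] || [ ! -w "$1" ] || [ ! -x "$1" ]; then
    echo "not accessible"      # wrong owner or mode for the current user
  else
    echo "ok"
  fi
}

# Example, as the hadoop user:
# check_storage_dir /home/hadoop/hadoop-datastore/dfs/namesecondary
```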

7. Fix for "... running as process N. Stop it first."

```text
hadoop@ubuntu:~/hadoop-0.21.0/bin$ ./start-all.sh
This script is Deprecated. Instead use start-dfs.sh and start-mapred.sh
starting namenode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-namenode-ubuntu.out
localhost: starting datanode, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-datanode-ubuntu.out
localhost: secondarynamenode running as process 9524. Stop it first.
starting jobtracker, logging to /home/hadoop/hadoop-0.21.0/logs/hadoop-hadoop-jobtracker-ubuntu.out
localhost: tasktracker running as process 9814. Stop it first.
secondarynamenode running as process 9524. Stop it first.
tasktracker running as process 9814. Stop it first.
```

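The messages say that secondarynamenode and tasktracker are still running and must be stopped first. Stop everything, then run ./start-all.sh again:

```text
hadoop@ubuntu:~/hadoop-0.21.0/bin$ ./stop-all.sh
```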
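The start scripts are assumed here to record each daemon's PID in a pid file and to refuse to start a daemon whose recorded process is still alive. The sketch below reproduces that test with `kill -0`. `daemon_running` is a hypothetical helper. The pid file in the example is also an assumption: it presumes Hadoop's default location, `/tmp`, and the naming pattern `hadoop-<user>-<daemon>.pid`. Verify it against your `HADOOP_PID_DIR` setting. The JDK's `jps` tool is another way to list the running daemons.

```bash
#!/usr/bin/env bash
# Sketch: succeed if the process whose PID is stored in the given file is alive.
# daemon_running is a hypothetical helper name.
daemon_running() {
  local pidfile=$1
  # kill -0 sends no signal; it only checks that the process exists.
  [ -f "$pidfile" ] && kill -0 "$(cat "$pidfile")" 2>/dev/null
}

# Example (assumed default pid file location and name):
# daemon_running /tmp/hadoop-hadoop-tasktracker.pid && echo "tasktracker still running"
```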

8. Fix for "Incompatible namespaceIDs"

```text
2012-12-19 23:22:49,130 INFO org.apache.hadoop.security.Groups: Group mapping impl=org.apache.hadoop.security.ShellBasedUnixGroupsMapping; cacheTimeout=300000
2012-12-19 23:22:57,959 ERROR org.apache.hadoop.hdfs.server.datanode.DataNode: java.io.IOException: Incompatible namespaceIDs in /home/hadoop/hadoop-datastore/dfs/data: namenode namespaceID = 1198306651; datanode namespaceID = 159487754
at org.apache.hadoop.hdfs.server.datanode.DataStorage.doTransition(DataStorage.java:237)
at org.apache.hadoop.hdfs.server.datanode.DataStorage.recoverTransitionRead(DataStorage.java:152)
at org.apache.hadoop.hdfs.server.datanode.DataNode.startDataNode(DataNode.java:336)
at org.apache.hadoop.hdfs.server.datanode.DataNode.<init>(DataNode.java:260)
at org.apache.hadoop.hdfs.server.datanode.DataNode.<init>(DataNode.java:237)
at org.apache.hadoop.hdfs.server.datanode.DataNode.makeInstance(DataNode.java:1440)
at org.apache.hadoop.hdfs.server.datanode.DataNode.instantiateDataNode(DataNode.java:1393)
at org.apache.hadoop.hdfs.server.datanode.DataNode.createDataNode(DataNode.java:1407)
at org.apache.hadoop.hdfs.server.datanode.DataNode.main(DataNode.java:1552)
2012-12-19 23:22:58,141 INFO org.apache.hadoop.hdfs.server.datanode.DataNode: SHUTDOWN_MSG:
/************************************************************
SHUTDOWN_MSG: Shutting down DataNode at ubuntu/192.168.11.156
************************************************************/
```

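The datanode's stored namespaceID no longer matches the namenode's. This typically happens when the namenode has been reformatted but the datanode kept its old data directory. The fix used here is to empty the whole data store. Be aware that this deletes all HDFS data and the namenode metadata as well, which leads directly to problem 9:

```text
hadoop@ubuntu:~/hadoop-datastore/dfs/data$ cd /home/hadoop/hadoop-datastore/
hadoop@ubuntu:~/hadoop-datastore$ rm -rf *
```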
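Wiping everything is the bluntest fix. To confirm the diagnosis first, you can compare the two namespaceIDs directly: each storage directory keeps its ID as a `namespaceID=` line in `current/VERSION`. A commonly suggested, less destructive alternative is to change the datanode's value to match the namenode's instead of deleting the data. The sketch below only performs the comparison, and its helper names are hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: compare the namespaceID of a namenode and a datanode storage directory.
# namespace_id and ids_match are hypothetical helper names.

# Print the namespaceID stored in <storage dir>/current/VERSION.
namespace_id() {
  sed -n 's/^namespaceID=//p' "$1/current/VERSION"
}

# Succeed if both storage directories carry the same namespaceID.
ids_match() {
  [ "$(namespace_id "$1")" = "$(namespace_id "$2")" ]
}

# Example (paths from the log above):
# ids_match /home/hadoop/hadoop-datastore/dfs/name /home/hadoop/hadoop-datastore/dfs/data
```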

9. Fix for "InconsistentFSStateException: ... storage directory does not exist or is not accessible"

```text
2012-12-19 23:48:31,449 ERROR org.apache.hadoop.hdfs.server.namenode.NameNode: org.apache.hadoop.hdfs.server.common.InconsistentFSStateException: Directory /home/hadoop/hadoop-datastore/dfs/name is in an inconsistent state: storage directory does not exist or is not accessible.
at org.apache.hadoop.hdfs.server.namenode.FSImage.recoverTransitionRead(FSImage.java:407)
at org.apache.hadoop.hdfs.server.namenode.FSDirectory.loadFSImage(FSDirectory.java:110)
at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.initialize(FSNamesystem.java:291)
at org.apache.hadoop.hdfs.server.namenode.FSNamesystem.<init>(FSNamesystem.java:270)
at org.apache.hadoop.hdfs.server.namenode.NameNode.loadNamesystem(NameNode.java:271)
at org.apache.hadoop.hdfs.server.namenode.NameNode.initialize(NameNode.java:303)
at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:433)
at org.apache.hadoop.hdfs.server.namenode.NameNode.<init>(NameNode.java:421)
at org.apache.hadoop.hdfs.server.namenode.NameNode.createNameNode(NameNode.java:1359)
at org.apache.hadoop.hdfs.server.namenode.NameNode.main(NameNode.java:1368)
```

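The namenode's storage directory was removed together with the rest of the data store in problem 8, so HDFS has to be formatted again:

```text
hadoop@ubuntu:~/hadoop-datastore$ hadoop namenode -format
```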
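Formatting assigns a new namespaceID, so any datanode that kept its old data directory will run into problem 8 again. Below is a small guard against reformatting by accident. It assumes that a formatted name directory contains `current/VERSION`. `needs_format` is a hypothetical helper, and the format command is left commented out.

```bash
#!/usr/bin/env bash
# Sketch: succeed only if the namenode storage directory looks unformatted,
# i.e. has no current/VERSION file. needs_format is a hypothetical helper name.
needs_format() {
  [ ! -f "$1/current/VERSION" ]
}

# Example (path from the log above):
# if needs_format /home/hadoop/hadoop-datastore/dfs/name; then
#   hadoop namenode -format
# fi
```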