09-5. Deploying the EFK Add-on

The EFK manifests live in the directory kubernetes/cluster/addons/fluentd-elasticsearch:

$ cd /opt/k8s/kubernetes/cluster/addons/fluentd-elasticsearch

$ ls *.yaml

es-service.yaml es-statefulset.yaml fluentd-es-configmap.yaml fluentd-es-ds.yaml kibana-deployment.yaml kibana-service.yaml

Modify the manifest files

$ cp es-statefulset.yaml{,.orig}

$ diff es-statefulset.yaml{,.orig}

76c76

< - image: longtds/elasticsearch:v5.6.4

---

> - image: k8s.gcr.io/elasticsearch:v5.6.4

$ cp fluentd-es-ds.yaml{,.orig}

$ diff fluentd-es-ds.yaml{,.orig}

79c79

< image: netonline/fluentd-elasticsearch:v2.0.4

---

> image: k8s.gcr.io/fluentd-elasticsearch:v2.0.4
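The two image edits shown in the diffs can also be scripted. The following is a minimal sketch, assuming a POSIX shell with `sed` and that each manifest names its image on an `image:` line; `swap_image` is a hypothetical helper name used only for illustration, not part of the addon.

```shell
# swap_image FILE OLD NEW
# Hypothetical helper: keep a FILE.orig backup (like `cp file{,.orig}`),
# then replace OLD with NEW on every line of FILE that contains "image:".
swap_image() {
  file=$1
  old=$2
  new=$3
  cp "$file" "$file.orig"
  # '|' is the sed delimiter because image names contain '/'.
  # The dots in OLD act as regex wildcards, which is harmless here.
  sed "/image:/ s|$old|$new|" "$file.orig" > "$file"
}

# Usage, run inside the fluentd-elasticsearch directory:
# swap_image es-statefulset.yaml k8s.gcr.io/elasticsearch:v5.6.4 longtds/elasticsearch:v5.6.4
# swap_image fluentd-es-ds.yaml k8s.gcr.io/fluentd-elasticsearch:v2.0.4 netonline/fluentd-elasticsearch:v2.0.4
```

Running `diff es-statefulset.yaml{,.orig}` afterwards should show the same one-line change as above.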

Label the Nodes

The fluentd-es DaemonSet is only scheduled onto Nodes that carry the label beta.kubernetes.io/fluentd-ds-ready=true, so set this label on every Node where fluentd should run.

$ kubectl get nodes

NAME STATUS ROLES AGE VERSION

kube-node1 Ready <none> 3d v1.10.4

kube-node2 Ready <none> 3d v1.10.4

kube-node3 Ready <none> 3d v1.10.4

$ kubectl label nodes kube-node3 beta.kubernetes.io/fluentd-ds-ready=true

node "kube-node3" labeled
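Before creating the DaemonSet, it can be worth confirming which Nodes actually carry the label. A small sketch using a label selector; `list_fluentd_nodes` is a hypothetical wrapper name, while `-l` and `-o jsonpath` are standard kubectl options.

```shell
# list_fluentd_nodes
# Hypothetical wrapper: print the names of all Nodes that carry the label
# the fluentd-es DaemonSet selects on.
list_fluentd_nodes() {
  kubectl get nodes -l beta.kubernetes.io/fluentd-ds-ready=true \
    -o jsonpath='{.items[*].metadata.name}'
}

# Usage:
# list_fluentd_nodes
```

With only kube-node3 labeled as above, only that Node should be listed, which matches the single fluentd-es Pod the DaemonSet creates.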

Apply the manifest files

$ pwd

/opt/k8s/kubernetes/cluster/addons/fluentd-elasticsearch

$ ls *.yaml

es-service.yaml es-statefulset.yaml fluentd-es-configmap.yaml fluentd-es-ds.yaml kibana-deployment.yaml kibana-service.yaml

$ kubectl create -f .

Check the result

$ kubectl get pods -n kube-system -o wide|grep -E 'elasticsearch|fluentd|kibana'

elasticsearch-logging-0 1/1 Running 0 5m 172.30.81.7 kube-node1

elasticsearch-logging-1 1/1 Running 0 2m 172.30.39.8 kube-node3

fluentd-es-v2.0.4-hntfp 1/1 Running 0 5m 172.30.39.6 kube-node3

kibana-logging-7445dc9757-pvpcv 1/1 Running 0 5m 172.30.39.7 kube-node3

$ kubectl get service -n kube-system|grep -E 'elasticsearch|kibana'

elasticsearch-logging ClusterIP 10.254.50.198 <none> 9200/TCP 5m

kibana-logging ClusterIP 10.254.255.190 <none> 5601/TCP 5m

The first time the kibana Pod starts, it spends a fairly long time (up to 20 minutes) optimizing and caching the status page bundles. Follow the Pod's logs to watch the progress:

$ kubectl logs kibana-logging-7445dc9757-pvpcv -n kube-system -f

{"type":"log","@timestamp":"2018-06-16T11:36:18Z","tags":["info","optimize"],"pid":1,"message":"Optimizing and caching bundles for graph, ml, kibana, stateSessionStorageRedirect, timelion and status_page. This may take a few minutes"}

{"type":"log","@timestamp":"2018-06-16T11:40:03Z","tags":["info","optimize"],"pid":1,"message":"Optimization of bundles for graph, ml, kibana, stateSessionStorageRedirect, timelion and status_page complete in 224.57 seconds"}

Note: the Kibana dashboard is only reachable once the Kibana Pod has finished starting; before that, the connection is refused.
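Instead of watching the log by eye, the completion message can be checked for in a script. A minimal sketch, assuming the log format shown above; `kibana_optimized` is a hypothetical helper name, and the Pod name in the usage comes from the listing earlier and will differ in other clusters.

```shell
# kibana_optimized
# Hypothetical helper: read Kibana log lines on stdin and succeed if the
# "Optimization of bundles ... complete" message is among them.
kibana_optimized() {
  grep -q 'Optimization of bundles.*complete'
}

# Usage: poll the Pod log every 10 seconds until optimization has finished.
# until kubectl logs kibana-logging-7445dc9757-pvpcv -n kube-system | kibana_optimized; do
#   sleep 10
# done
# echo "Kibana finished optimizing"
```

Polling a snapshot of the log, rather than piping `kubectl logs -f` into `grep -q`, avoids leaving a follow process waiting after the match.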

Access Kibana

- Access through kube-apiserver:

$ kubectl cluster-info|grep -E 'Elasticsearch|Kibana'

Elasticsearch is running at https://192.168.1.106:6443/api/v1/namespaces/kube-system/services/elasticsearch-logging/proxy

Kibana is running at https://192.168.1.106:6443/api/v1/namespaces/kube-system/services/kibana-logging/proxy

Open this URL in a browser:

https://192.168.1.106:6443/api/v1/namespaces/kube-system/services/kibana-logging/proxy

If the cluster runs in VirtualBox with port forwarding configured: https://127.0.0.1:8080/api/v1/namespaces/kube-system/services/kibana-logging/proxy

- Access through kubectl proxy:

Start the proxy:

$ kubectl proxy --address='192.168.1.106' --port=8086 --accept-hosts='^*$'

Starting to serve on 192.168.1.106:8086

Open this URL in a browser (kubectl proxy serves plain HTTP, so the scheme is http://):

http://192.168.1.106:8086/api/v1/namespaces/kube-system/services/kibana-logging/proxy

If the cluster runs in VirtualBox with port forwarding configured: http://127.0.0.1:8086/api/v1/namespaces/kube-system/services/kibana-logging/proxy
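All of the URLs above follow one pattern: a base address followed by /api/v1/namespaces/NAMESPACE/services/SERVICE/proxy. The sketch below only assembles that string; `service_proxy_url` is a hypothetical helper name, and the addresses in the usage lines are the ones from this walkthrough.

```shell
# service_proxy_url BASE NAMESPACE SERVICE
# Hypothetical helper: print the apiserver proxy URL for a Service.
# BASE is either the kube-apiserver address or the kubectl proxy address.
service_proxy_url() {
  printf '%s/api/v1/namespaces/%s/services/%s/proxy\n' "$1" "$2" "$3"
}

# Usage:
# service_proxy_url https://192.168.1.106:6443 kube-system kibana-logging
# service_proxy_url http://192.168.1.106:8086 kube-system kibana-logging
```

The same helper covers the elasticsearch-logging Service by changing the last argument.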

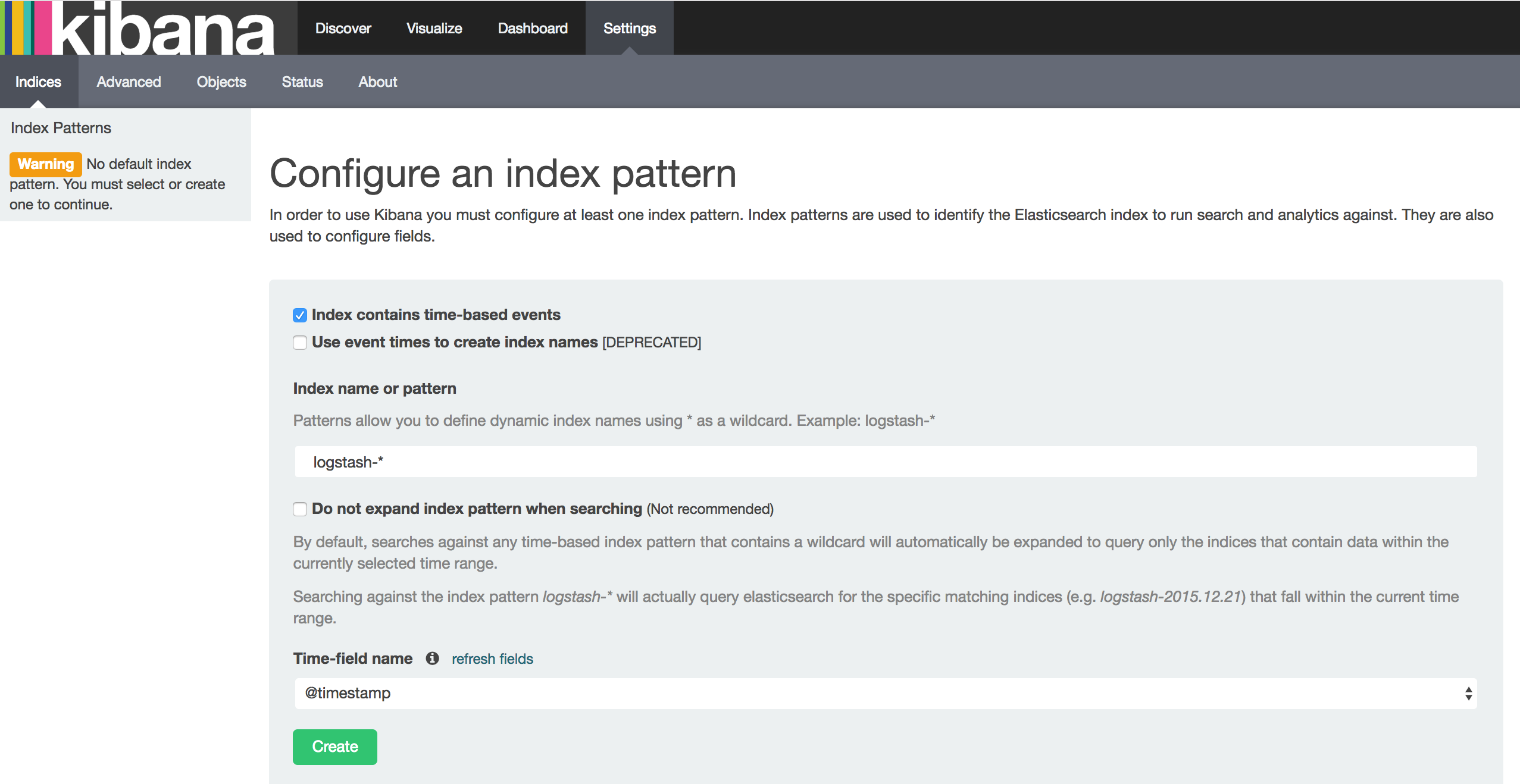

On the Settings -> Indices page, create an index pattern (an index is roughly analogous to a database in MySQL): check "Index contains time-based events", keep the default logstash-* pattern, and click Create.

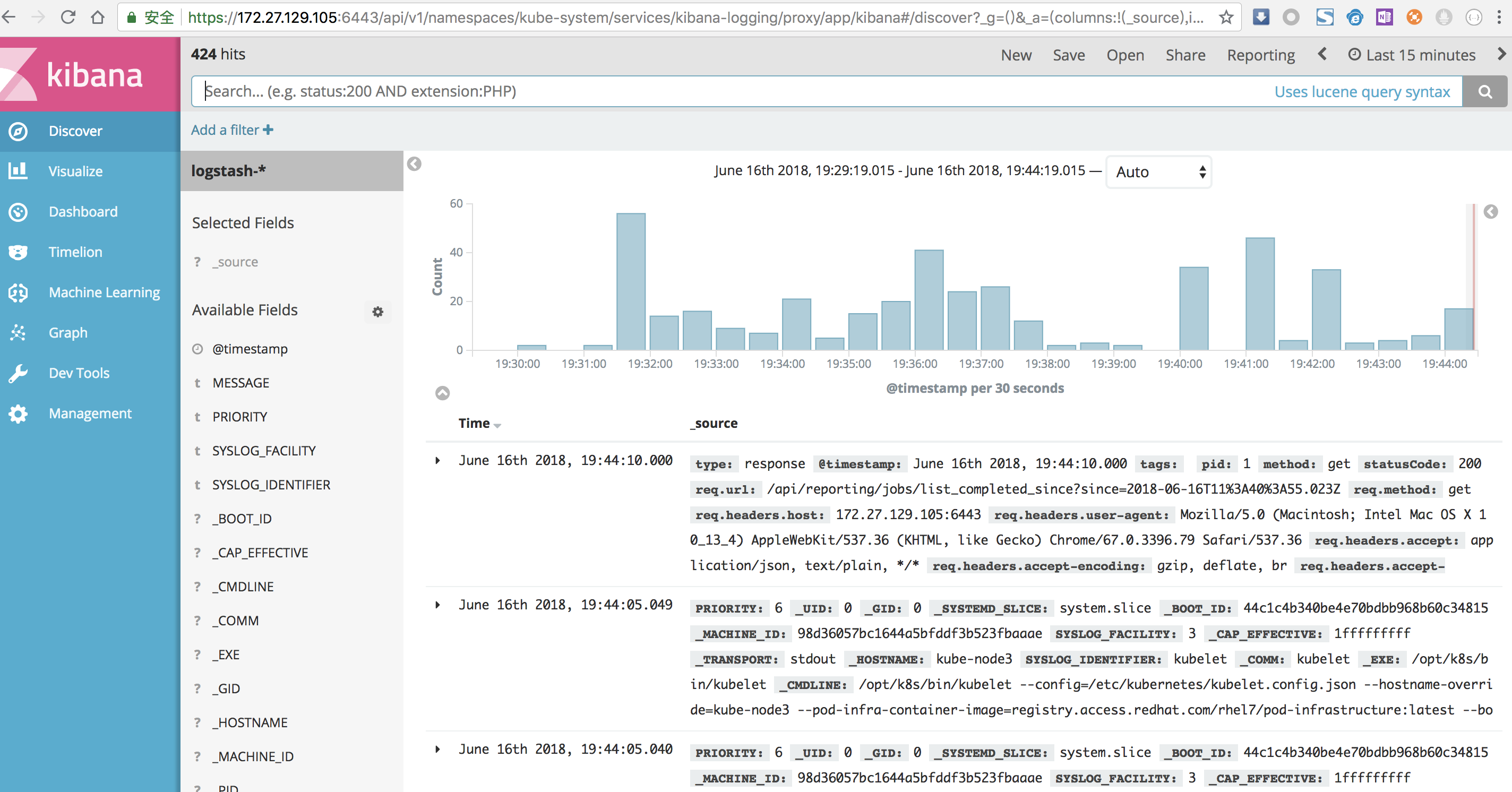

After the index pattern is created, wait a few minutes and the logs aggregated in Elasticsearch will appear under the Discover menu.
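If nothing shows up in Discover, one thing to check is whether any logstash-* indices exist in Elasticsearch yet. A rough sketch, assuming the kubectl proxy from the previous step is still running at 192.168.1.106:8086 and that `curl` is installed; `logstash_indices` is a hypothetical filter name, and `_cat/indices` is the Elasticsearch cat API.

```shell
# logstash_indices
# Hypothetical filter: read index names on stdin (one per line) and keep
# only those matching the default logstash-* pattern.
logstash_indices() {
  grep '^logstash-'
}

# Usage, through the kubectl proxy (address assumed from the step above):
# curl -s 'http://192.168.1.106:8086/api/v1/namespaces/kube-system/services/elasticsearch-logging/proxy/_cat/indices?h=index' \
#   | logstash_indices
```

An empty result suggests fluentd has not shipped any logs yet, in which case the fluentd-es Pod's logs are the next place to look.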

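Source: https://www.orchome.com/662

Copyright belongs to the author. For commercial reuse, contact the author for permission; for non-commercial reuse, credit the source.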