Reference: https://www.cnblogs.com/xiangsikai/p/11433276.html

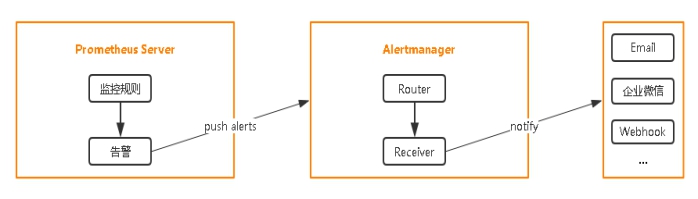

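The main steps for setting up alerting and notifications are as follows:

I. Deploy Alertmanager

Official reference: https://github.com/prometheus/alertmanager

Configuration files

1. Alertmanager main configuration file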

```
[root@localhost alertmanager]# cat alertmanager-configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  # Name of the ConfigMap
  name: alertmanager-config
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
data:
  alertmanager.yml: |
    global:
      resolve_timeout: 1m
      # SMTP settings for alert e-mails
      smtp_smarthost: 'smtp.sina.com.cn:25'
      smtp_from: 'xxx@sina.com'
      smtp_auth_username: 'xxx@sina.com'
      smtp_auth_password: '123456789'
      smtp_require_tls: false

    receivers:
    - name: default-receiver
      email_configs:
      - to: "xxx@qq.com"

    route:
      group_interval: 1m
      group_wait: 10s
      receiver: default-receiver
      repeat_interval: 1m
```
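The alert rules defined later in this post label alerts with `severity: error` or `severity: warning`. As a hedged sketch (not part of the original setup), the single default route could be extended so that `error` alerts go to a separate mailbox and notifications repeat less often than the 1m demo value. The receiver name `oncall-email`, the address `oncall@example.com`, the `group_by` labels, and the interval values are all assumptions for illustration; `match` is the label-equality form that Alertmanager configs of the v0.14.0 era accept.

```yaml
# Hypothetical variant of the route/receivers part of alertmanager.yml.
# Receiver names, addresses, and intervals are placeholders, not from the original.
route:
  receiver: default-receiver          # fallback when no child route matches
  group_by: ['alertname', 'instance'] # assumed grouping labels
  group_wait: 10s
  group_interval: 1m
  repeat_interval: 1h                 # assumed; the demo config uses 1m
  routes:
  - match:
      severity: error                 # label set by the InstanceDown rule
    receiver: oncall-email
    repeat_interval: 30m

receivers:
- name: default-receiver
  email_configs:
  - to: "xxx@qq.com"
- name: oncall-email                  # hypothetical receiver
  email_configs:
  - to: "oncall@example.com"
```

With a layout like this, `warning` alerts would still fall through to `default-receiver`, while `error` alerts take the child route.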

2. Deploy the core component

```
[root@localhost alertmanager]# cat alertmanager-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    k8s-app: alertmanager
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v0.14.0
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: alertmanager
      version: v0.14.0
  template:
    metadata:
      labels:
        k8s-app: alertmanager
        version: v0.14.0
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
        - name: prometheus-alertmanager
          image: "prom/alertmanager:v0.14.0"
          imagePullPolicy: "IfNotPresent"
          args:
            - --config.file=/etc/config/alertmanager.yml
            - --storage.path=/data
            - --web.external-url=/
          ports:
            - containerPort: 9093
          readinessProbe:
            httpGet:
              path: /#/status
              port: 9093
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: storage-volume
              mountPath: "/data"
          resources:
            limits:
              cpu: 10m
              memory: 50Mi
            requests:
              cpu: 10m
              memory: 50Mi
        - name: prometheus-alertmanager-configmap-reload
          image: "jimmidyson/configmap-reload:v0.1"
          imagePullPolicy: "IfNotPresent"
          args:
            - --volume-dir=/etc/config
            - --webhook-url=http://localhost:9093/-/reload
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
              readOnly: true
          resources:
            limits:
              cpu: 10m
              memory: 10Mi
            requests:
              cpu: 10m
              memory: 10Mi
      volumes:
        - name: config-volume
          configMap:
            name: alertmanager-config
        - name: storage-volume
          emptyDir: {}
```

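The Deployment above does not use dynamically provisioned PV storage; it uses an emptyDir volume instead. For reference, the original YAML files that use a PersistentVolumeClaim are shown below.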

```
# alertmanager-deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    k8s-app: alertmanager
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    version: v0.14.0
spec:
  replicas: 1
  selector:
    matchLabels:
      k8s-app: alertmanager
      version: v0.14.0
  template:
    metadata:
      labels:
        k8s-app: alertmanager
        version: v0.14.0
      annotations:
        scheduler.alpha.kubernetes.io/critical-pod: ''
    spec:
      priorityClassName: system-cluster-critical
      containers:
        - name: prometheus-alertmanager
          image: "prom/alertmanager:v0.14.0"
          imagePullPolicy: "IfNotPresent"
          args:
            - --config.file=/etc/config/alertmanager.yml
            - --storage.path=/data
            - --web.external-url=/
          ports:
            - containerPort: 9093
          readinessProbe:
            httpGet:
              path: /#/status
              port: 9093
            initialDelaySeconds: 30
            timeoutSeconds: 30
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
            - name: storage-volume
              mountPath: "/data"
              subPath: ""
          resources:
            limits:
              cpu: 10m
              memory: 50Mi
            requests:
              cpu: 10m
              memory: 50Mi
        - name: prometheus-alertmanager-configmap-reload
          image: "jimmidyson/configmap-reload:v0.1"
          imagePullPolicy: "IfNotPresent"
          args:
            - --volume-dir=/etc/config
            - --webhook-url=http://localhost:9093/-/reload
          volumeMounts:
            - name: config-volume
              mountPath: /etc/config
              readOnly: true
          resources:
            limits:
              cpu: 10m
              memory: 10Mi
            requests:
              cpu: 10m
              memory: 10Mi
      volumes:
        - name: config-volume
          configMap:
            name: alertmanager-config
        - name: storage-volume
          persistentVolumeClaim:
            # Uses the dynamically provisioned PV storage
            claimName: alertmanager
```

```
# alertmanager-pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: EnsureExists
spec:
  # Use your own dynamic PV storage class
  storageClassName: managed-nfs-storage
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: "2Gi"
```

3. Expose the port

```
[root@localhost alertmanager]# cat alertmanager-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: alertmanager
  namespace: kube-system
  labels:
    kubernetes.io/cluster-service: "true"
    addonmanager.kubernetes.io/mode: Reconcile
    kubernetes.io/name: "Alertmanager"
spec:
  ports:
    - name: http
      port: 80
      protocol: TCP
      targetPort: 9093
  selector:
    k8s-app: alertmanager
  type: NodePort
```

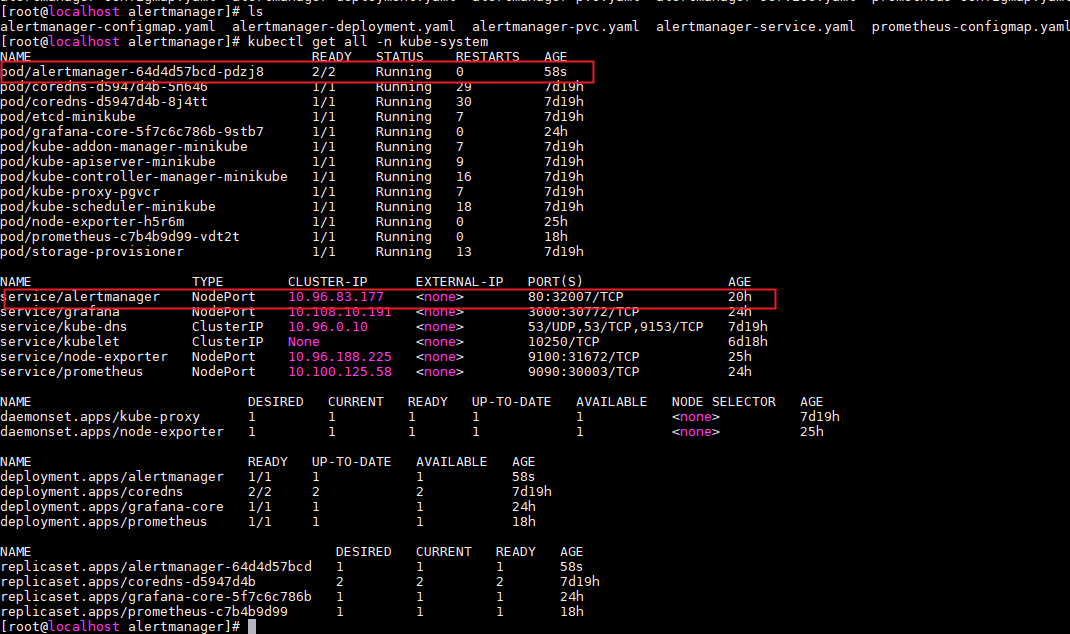

Deployment

1. Create the ConfigMap, Deployment, and Service

```
kubectl create -f alertmanager-configmap.yaml
kubectl apply -f alertmanager-deployment.yaml
kubectl apply -f alertmanager-service.yaml
```

2. Check the result

II. Configure Prometheus to communicate with Alertmanager

1. Edit configmap.yaml in the prometheus directory and add the Alertmanager binding

```
[root@localhost alertmanager]# cd ../prometheus/
[root@localhost prometheus]# ls
configmap.yaml  configmap.yaml.bak  prometheus.deploy.yml  prometheus.deploy.yml.bak  prometheus-rules.yaml  prometheus.svc.yml  rbac-setup.yaml
[root@localhost prometheus]# cat configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: prometheus-config
  namespace: kube-system
data:
  prometheus.yml: |
    global:
      scrape_interval: 15s
      evaluation_interval: 15s
    scrape_configs:

    - job_name: 'kubernetes-apiservers'
      kubernetes_sd_configs:
      - role: endpoints
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - source_labels: [__meta_kubernetes_namespace, __meta_kubernetes_service_name, __meta_kubernetes_endpoint_port_name]
        action: keep
        regex: default;kubernetes;https

    - job_name: 'kubernetes-nodes'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics

    - job_name: 'kubernetes-cadvisor'
      kubernetes_sd_configs:
      - role: node
      scheme: https
      tls_config:
        ca_file: /var/run/secrets/kubernetes.io/serviceaccount/ca.crt
      bearer_token_file: /var/run/secrets/kubernetes.io/serviceaccount/token
      relabel_configs:
      - action: labelmap
        regex: __meta_kubernetes_node_label_(.+)
      - target_label: __address__
        replacement: kubernetes.default.svc:443
      - source_labels: [__meta_kubernetes_node_name]
        regex: (.+)
        target_label: __metrics_path__
        replacement: /api/v1/nodes/${1}/proxy/metrics/cadvisor

    - job_name: 'kubernetes-service-endpoints'
      kubernetes_sd_configs:
      - role: endpoints
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_scheme]
        action: replace
        target_label: __scheme__
        regex: (https?)
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_service_annotation_prometheus_io_port]
        action: replace
        target_label: __address__
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        action: replace
        target_label: kubernetes_name

    - job_name: 'kubernetes-services'
      kubernetes_sd_configs:
      - role: service
      metrics_path: /probe
      params:
        module: [http_2xx]
      relabel_configs:
      - source_labels: [__meta_kubernetes_service_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__address__]
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_service_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_service_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-ingresses'
      kubernetes_sd_configs:
      - role: ingress
      relabel_configs:
      - source_labels: [__meta_kubernetes_ingress_annotation_prometheus_io_probe]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_ingress_scheme,__address__,__meta_kubernetes_ingress_path]
        regex: (.+);(.+);(.+)
        replacement: ${1}://${2}${3}
        target_label: __param_target
      - target_label: __address__
        replacement: blackbox-exporter.example.com:9115
      - source_labels: [__param_target]
        target_label: instance
      - action: labelmap
        regex: __meta_kubernetes_ingress_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_ingress_name]
        target_label: kubernetes_name

    - job_name: 'kubernetes-pods'
      kubernetes_sd_configs:
      - role: pod
      relabel_configs:
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_scrape]
        action: keep
        regex: true
      - source_labels: [__meta_kubernetes_pod_annotation_prometheus_io_path]
        action: replace
        target_label: __metrics_path__
        regex: (.+)
      - source_labels: [__address__, __meta_kubernetes_pod_annotation_prometheus_io_port]
        action: replace
        regex: ([^:]+)(?::\d+)?;(\d+)
        replacement: $1:$2
        target_label: __address__
      - action: labelmap
        regex: __meta_kubernetes_pod_label_(.+)
      - source_labels: [__meta_kubernetes_namespace]
        action: replace
        target_label: kubernetes_namespace
      - source_labels: [__meta_kubernetes_pod_name]
        action: replace
        target_label: kubernetes_pod_name

    - job_name: 'Linux'
      static_configs:
      - targets: ['node-exporter:9100']
        labels:
          instance: Linux-node

    alerting:
      # Alertmanager configuration
      alertmanagers:
      # Changed: use a static binding
      - static_configs:
        # Changed: targets, the Alertmanager address and port
        - targets: ["alertmanager:80"]
    rule_files:
    - "/opt/rules/*.rules"
```

2. Apply the configuration file

```
kubectl apply -f configmap.yaml
```

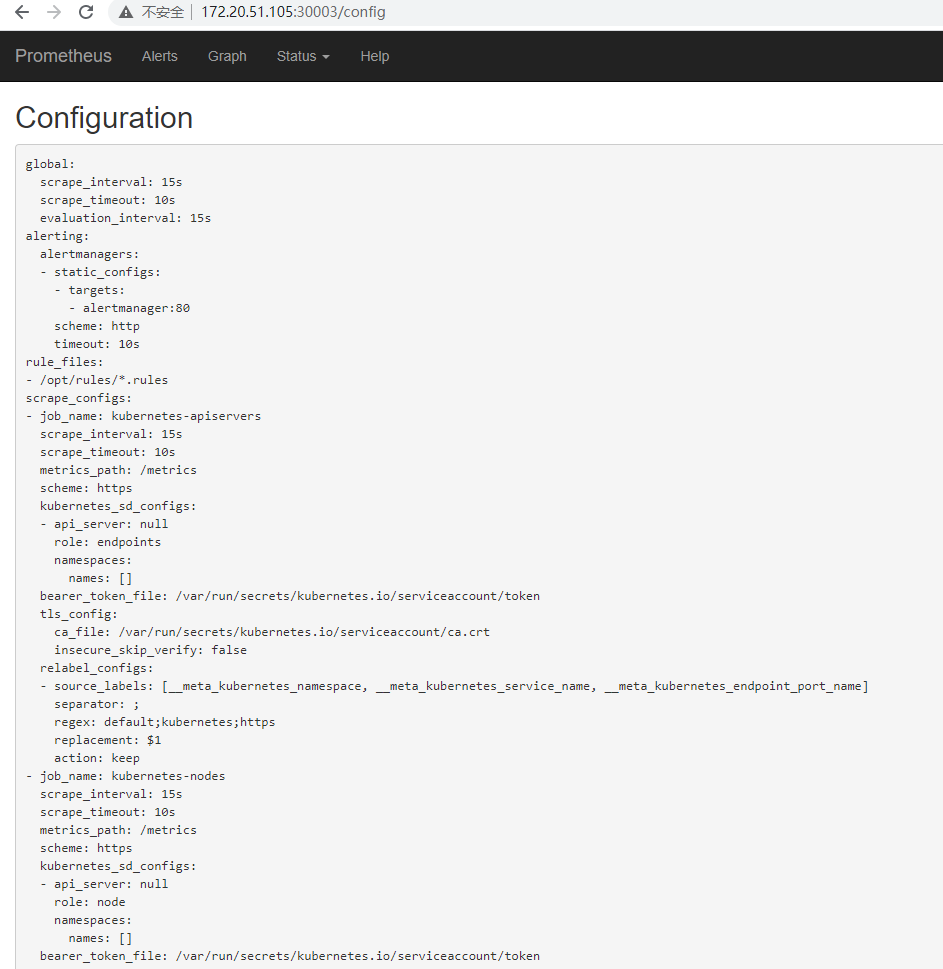

3. Check in the Prometheus web console that the configuration has taken effect

http://172.20.51.105:30003/config

III. Configure alerting rules

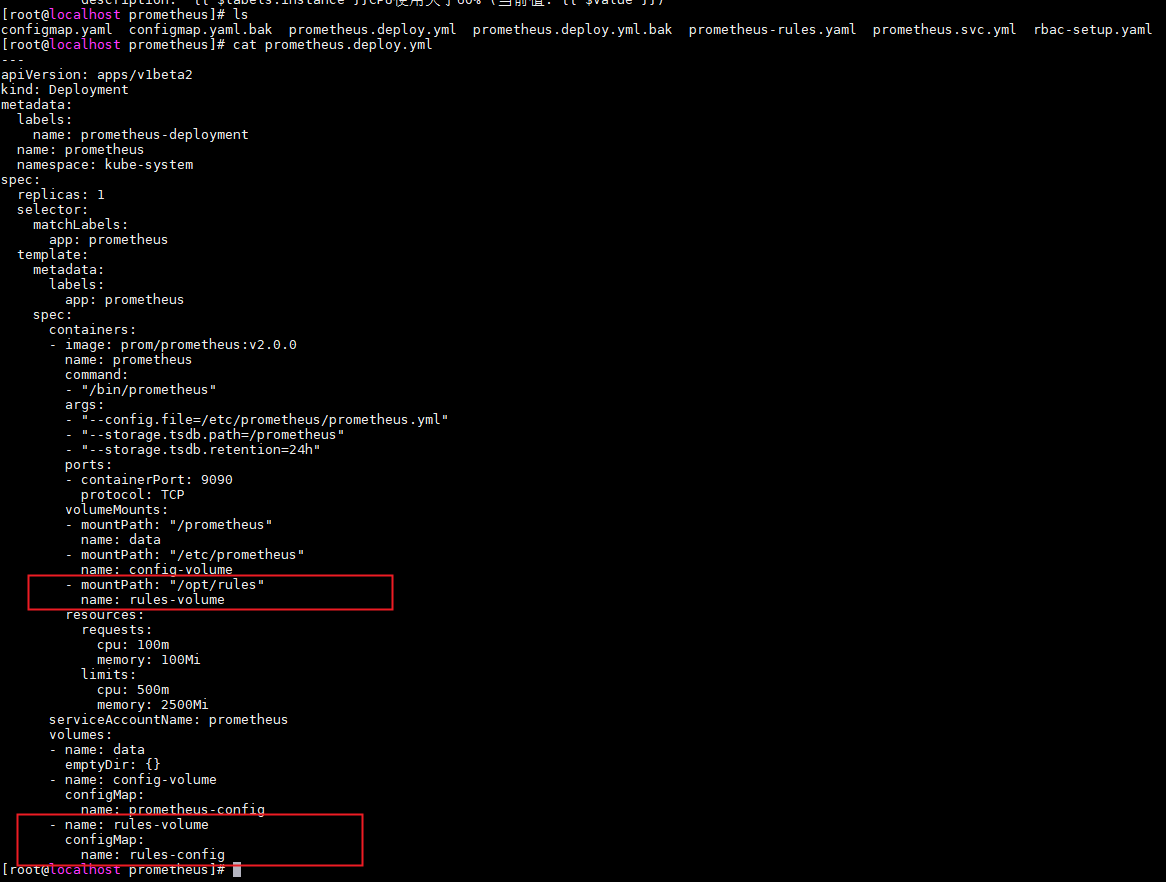

1. Point Prometheus at the rules directory

2. Store the alerting rules in a ConfigMap

```
[root@localhost prometheus]# ls
configmap.yaml  configmap.yaml.bak  prometheus.deploy.yml  prometheus.deploy.yml.bak  prometheus-rules.yaml  prometheus.svc.yml  rbac-setup.yaml
[root@localhost prometheus]# cat prometheus-rules.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: rules-config
  namespace: kube-system
data:
  # General rules
  general.rules: |
    groups:
    - name: general.rules
      rules:
      - alert: InstanceDown
        expr: up == 0
        for: 1m
        labels:
          severity: error
        annotations:
          summary: "Instance {{ $labels.instance }} is down"
          description: "{{ $labels.instance }} of job {{ $labels.job }} has been down for more than 1 minute."
  # Node resource monitoring
  node.rules: |
    groups:
    - name: node.rules
      rules:
      - alert: NodeFilesystemUsage
        expr: 100 - (node_filesystem_free_bytes{fstype=~"ext4|xfs"} / node_filesystem_size_bytes{fstype=~"ext4|xfs"} * 100) > 80
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} : {{ $labels.mountpoint }} partition usage is too high"
          description: "{{ $labels.instance }}: {{ $labels.mountpoint }} partition usage is above 80% (current value: {{ $value }})"
      - alert: NodeMemoryUsage
        # The threshold is deliberately set to 1 so that the alert fires for the demo
        expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 1
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} memory usage is too high"
          description: "{{ $labels.instance }} memory usage is above 80% (current value: {{ $value }})"
      - alert: NodeCPUUsage
        expr: 100 - (avg(irate(node_cpu_seconds_total{mode="idle"}[5m])) by (instance) * 100) > 60
        for: 1m
        labels:
          severity: warning
        annotations:
          summary: "Instance {{ $labels.instance }} CPU usage is too high"
          description: "{{ $labels.instance }} CPU usage is above 60% (current value: {{ $value }})"
```

3. Mount the ConfigMap into the container's rules directory
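The Prometheus Deployment manifest is not reproduced in this post, so the following is only a sketch of what such a mount usually looks like. It assumes the container is named `prometheus` and uses a hypothetical volume name `prometheus-rules`; the ConfigMap name `rules-config` and the path `/opt/rules` are taken from the files shown earlier.

```yaml
# Fragment of the pod template in prometheus.deploy.yml (assumed layout).
spec:
  template:
    spec:
      containers:
      - name: prometheus              # assumed container name
        volumeMounts:
        - name: prometheus-rules      # hypothetical volume name
          mountPath: /opt/rules       # matches rule_files: "/opt/rules/*.rules"
      volumes:
      - name: prometheus-rules
        configMap:
          name: rules-config          # ConfigMap defined in prometheus-rules.yaml
```

Each key under `data` in the ConfigMap (`general.rules`, `node.rules`) appears as a file in the mounted directory, which is why the `*.rules` glob in `rule_files` picks them up.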

4. Apply the configuration

```
kubectl apply -f prometheus-rules.yaml
kubectl apply -f prometheus.deploy.yml
```

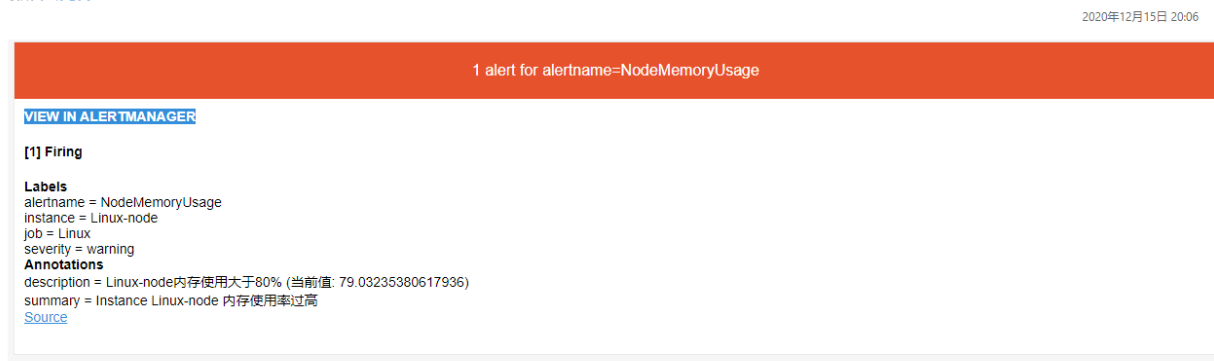

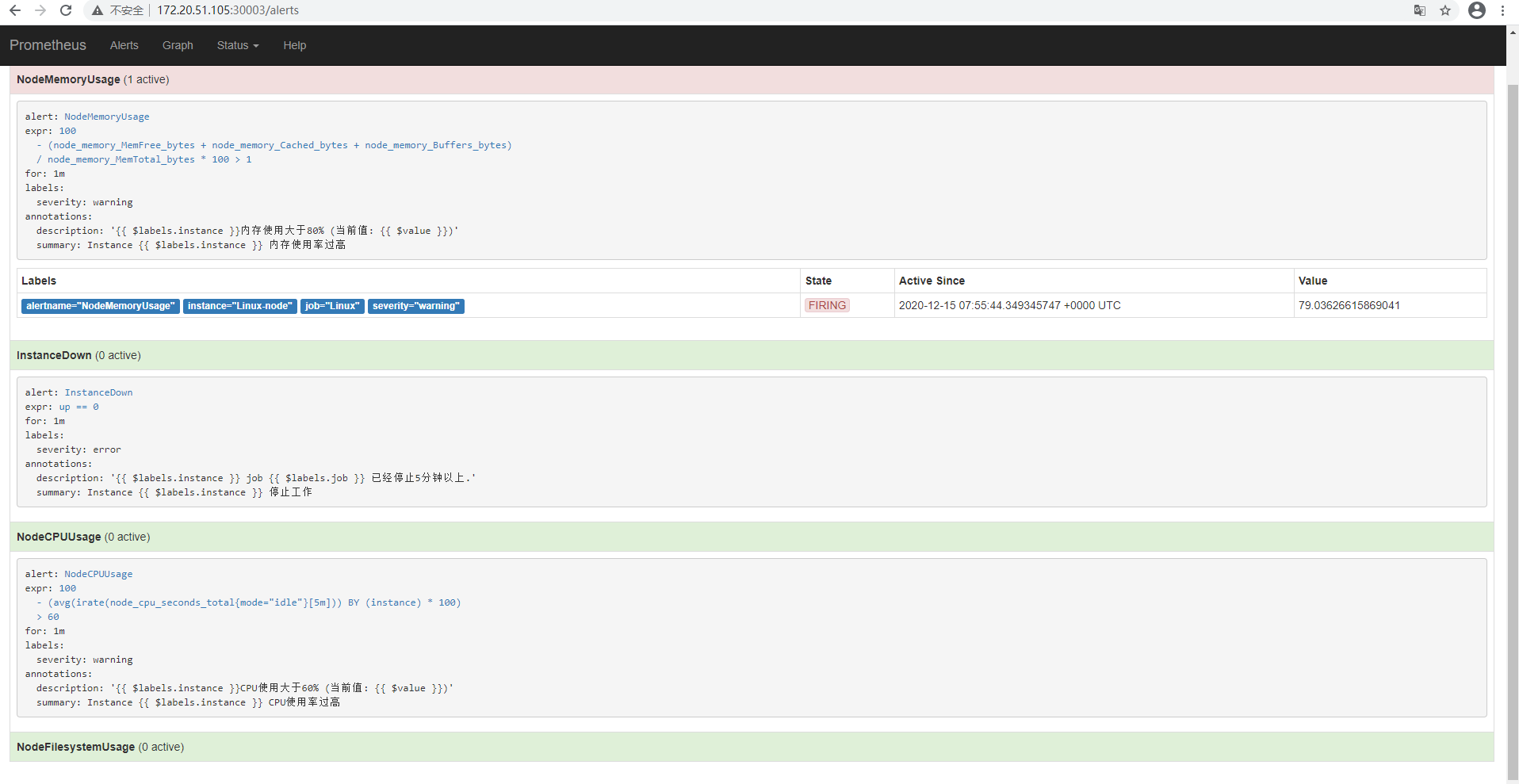

IV. View the alert results

The memory threshold is deliberately set low so that an alert fires for the demonstration.
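For comparison, a version of the memory rule whose threshold matches its own description might look like the sketch below. Only the comparison value changes; the figure of 80 is taken from the rule's description text, and whether it suits a particular cluster is an assumption.

```yaml
# Sketch: NodeMemoryUsage with the threshold restored from the demo value (1) to 80.
- alert: NodeMemoryUsage
  expr: 100 - (node_memory_MemFree_bytes+node_memory_Cached_bytes+node_memory_Buffers_bytes) / node_memory_MemTotal_bytes * 100 > 80
  for: 1m
  labels:
    severity: warning
  annotations:
    summary: "Instance {{ $labels.instance }} memory usage is too high"
    description: "{{ $labels.instance }} memory usage is above 80% (current value: {{ $value }})"
```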

Alert shown in Alertmanager

Alert shown in Prometheus

Alert e-mail