4. Introducing Redis

4.1 Lab environment

| Hostname | IP address | Role |
|---|---|---|
| ES1 | 192.168.200.191 | elasticsearch-node1 |
| ES2 | 192.168.200.192 | elasticsearch-node2 |
| ES3 | 192.168.200.193 | elasticsearch-node3 |
| Logstash-Kibana | 192.168.200.194 | Log visualization server |
| WebServer | 192.168.200.195 | Simulates the various clients whose logs are collected |

4.2 Install and deploy Redis on Logstash-Kibana

    # Install the EPEL repository
    [root@Logstash-Kibana ~]# yum -y install epel-release
    # Install Redis with yum
    [root@Logstash-Kibana ~]# yum -y install redis
    [root@Logstash-Kibana ~]# redis-server --version
    Redis server v=3.2.12 sha=00000000:0 malloc=jemalloc-3.6.0 bits=64 build=3dc3425a3049d2ef
    # Edit the Redis configuration file
    [root@Logstash-Kibana ~]# cp /etc/redis.conf{,.bak}
    [root@Logstash-Kibana ~]# cat -n /etc/redis.conf.bak | sed -n '61p;480p'
    61 bind 127.0.0.1
    480 # requirepass foobared
    [root@Logstash-Kibana ~]# cat -n /etc/redis.conf | sed -n '61p;480p'
    61 bind 0.0.0.0
    480 requirepass yunjisuan
    # Start redis-server
    [root@Logstash-Kibana ~]# systemctl start redis
    [root@Logstash-Kibana ~]# netstat -antup | grep redis
    tcp 0 0 0.0.0.0:6379 0.0.0.0:* LISTEN 15822/redis-server
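The listing above shows redis.conf before and after the change but not the edit itself. One way to make the same two changes non-interactively is sketched below with sed. It assumes the stock file contains exactly the lines `bind 127.0.0.1` and `# requirepass foobared`; the helper name `configure_redis` is hypothetical, and the demo runs against a temporary file rather than /etc/redis.conf.

```shell
# Hypothetical helper: in the redis.conf given as $1, switch the bind
# address to 0.0.0.0 and enable requirepass with the password given as $2.
configure_redis() {
    conf=$1
    password=$2
    tmp=$(mktemp)
    sed -e 's/^bind 127\.0\.0\.1$/bind 0.0.0.0/' \
        -e "s/^# requirepass foobared\$/requirepass ${password}/" \
        "$conf" > "$tmp" && cat "$tmp" > "$conf"   # keep the original file's owner and mode
    rm -f "$tmp"
}

# Demo on a throwaway file that contains only the two stock lines.
demo_conf=$(mktemp)
printf 'bind 127.0.0.1\n# requirepass foobared\n' > "$demo_conf"
configure_redis "$demo_conf" yunjisuan
cat "$demo_conf"
```

A password containing `/` or `&` would need escaping before being passed to sed in this sketch.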

4.3 Install Logstash on WebServer

    # Install JDK 1.8 with yum
    [root@WebServer ~]# yum -y install java-1.8.0-openjdk
    # Add the yum repository file for ELK
    [root@WebServer ~]# vim /etc/yum.repos.d/elastic.repo
    [root@WebServer ~]# cat /etc/yum.repos.d/elastic.repo
    [elastic-6.x]
    name=Elastic repository for 6.x packages
    baseurl=https://artifacts.elastic.co/packages/6.x/yum
    gpgcheck=1
    gpgkey=https://artifacts.elastic.co/GPG-KEY-elasticsearch
    enabled=1
    autorefresh=1
    type=rpm-md
    # Install logstash and filebeat with yum
    [root@WebServer ~]# yum -y install logstash filebeat
    # Create the Logstash configuration that collects data and writes it to Redis
    [root@WebServer ~]# vim /etc/logstash/conf.d/logstash-to-redis.conf
    [root@WebServer ~]# cat /etc/logstash/conf.d/logstash-to-redis.conf
    input {
        file {
            path => ["/var/log/messages"]
            type => "system"
            tags => ["syslog","test"]
            start_position => "beginning"
        }
        file {
            path => ["/var/log/audit/audit.log"]
            type => "system"
            tags => ["auth","test"]
            start_position => "beginning"
        }
    }
    filter {
    }
    output {
        redis {
            host => ["192.168.200.194:6379"]
            password => "yunjisuan"
            db => "0"
            data_type => "list"
            key => "logstash"
        }
    }
    # Start Logstash on WebServer
    [root@WebServer ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-to-redis.conf
    # Verify that Logstash wrote the data to Redis
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan info Keyspace
    # Keyspace
    db0:keys=1,expires=0,avg_ttl=0
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan scan 0
    1) "0"
    2) 1) "logstash"
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan lrange logstash 0 1
    1) "{"host":"WebServer","message":"Jul 3 03:50:54 localhost journal: Runtime journal is using 6.0M (max allowed 48.7M, trying to leave 73.0M free of 481.1M available \xe2\x86\x92 current limit 48.7M).","type":"system","@version":"1","@timestamp":"2018-08-24T13:03:55.486Z","path":"/var/log/messages","tags":["syslog","test"]}"
    2) "{"host":"WebServer","message":"type=DAEMON_START msg=audit(1530561057.301:6300): op=start ver=2.8.1 format=raw kernel=3.10.0-862.el7.x86_64 auid=4294967295 pid=625 uid=0 ses=4294967295 subj=system_u:system_r:auditd_t:s0 res=success","type":"system","@version":"1","@timestamp":"2018-08-24T13:03:55.478Z","path":"/var/log/audit/audit.log","tags":["auth","test"]}"
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen logstash
    (integer) 9068

4.4 Configure Logstash on the Logstash-Kibana server to read data from Redis

    # Run the following on Logstash-Kibana
    [root@Logstash-Kibana ~]# vim /etc/logstash/conf.d/logstash-from-redis.conf
    [root@Logstash-Kibana ~]# cat /etc/logstash/conf.d/logstash-from-redis.conf
    input {
        redis {
            host => "192.168.200.194"
            port => 6379
            password => "yunjisuan"
            db => "0"
            data_type => "list"
            key => "logstash"
        }
    }
    filter {
    }
    output {
        if [type] == "system" {
            if [tags][0] == "syslog" {
                elasticsearch {
                    hosts => ["http://192.168.200.191:9200","http://192.168.200.192:9200","http://192.168.200.193:9200"]
                    index => "logstash-mr_chen-syslog-%{+YYYY.MM.dd}"
                }
                stdout { codec => rubydebug }
            } else if [tags][0] == "auth" {
                elasticsearch {
                    hosts => ["http://192.168.200.191:9200","http://192.168.200.192:9200","http://192.168.200.193:9200"]
                    index => "logstash-mr_chen-auth-%{+YYYY.MM.dd}"
                }
                stdout { codec => rubydebug }
            }
        }
    }

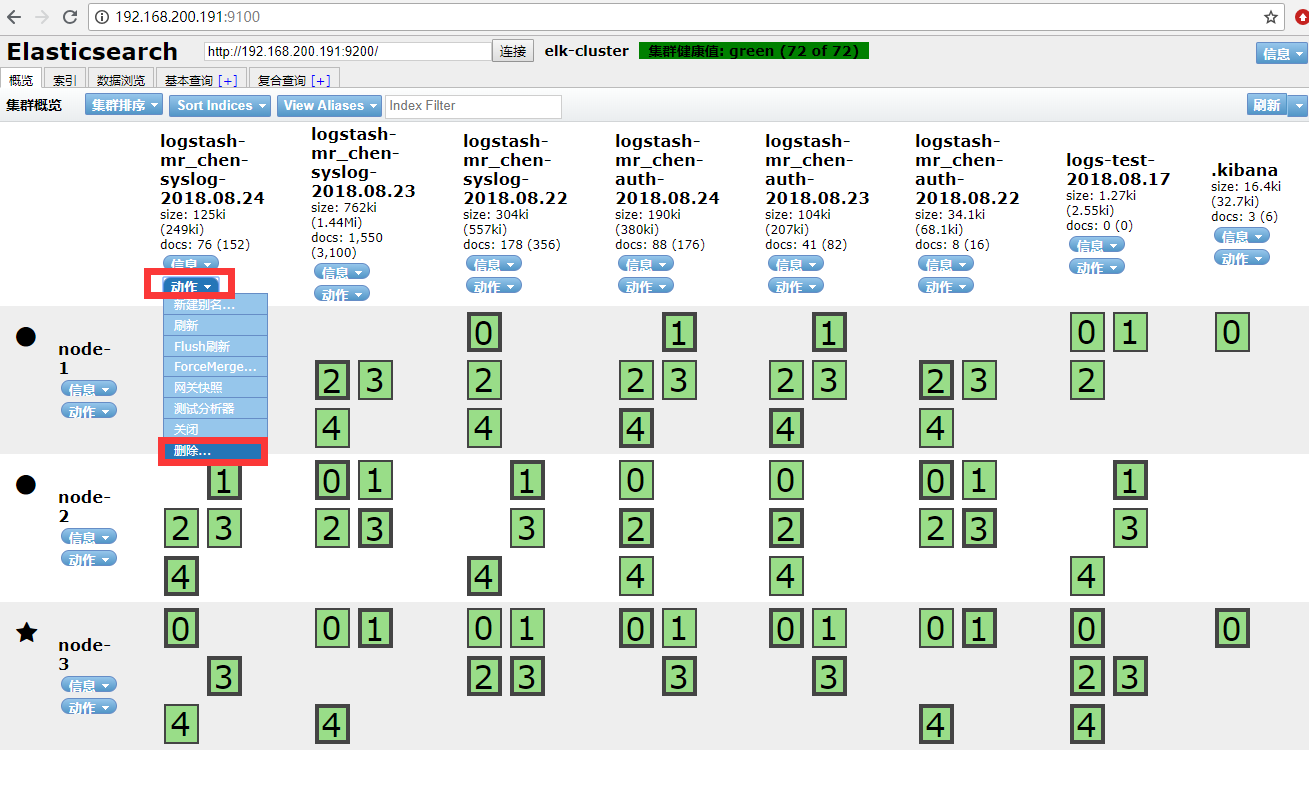

4.5 Start the graphical ES plugin (elasticsearch-head) on ES1 and clear all indices in ES

    [root@ES1 ~]# cd elasticsearch-head/
    [root@ES1 elasticsearch-head]# npm run start
    > elasticsearch-head@0.0.0 start /root/elasticsearch-head
    > grunt server
    >> Local Npm module "grunt-contrib-jasmine" not found. Is it installed?
    Running "connect:server" (connect) task
    Waiting forever...
    Started connect web server on http://localhost:9100

4.6 Start Logstash on the Logstash-Kibana server and check Kibana

    # Start Logstash
    [root@Logstash-Kibana ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-from-redis.conf
    # Check the keys in Redis
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan info Keyspace
    # Keyspace
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen logstash
    (integer) 0

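The keys in Redis are now all gone: the keyspace is empty and the length of the logstash list is 0.

This is because Redis acts here as a lightweight message queue.

The Logstash instance that writes to Redis is the producer.

The Logstash instance that reads from Redis is the consumer. It removes each entry from the list as it reads it, and Redis deletes a list key once the list is empty.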
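The producer/consumer behaviour can be tried without a Redis server. The sketch below is only an illustration: a temporary file stands in for the Redis list, and the helper names `rpush`, `lpop` and `llen` are borrowed from the Redis commands they imitate. The exact commands the Logstash plugins issue are not shown in this article, so treat the mapping as an assumption.

```shell
# A file stands in for the Redis list; each line is one queued event.
queue_file=$(mktemp)

# Producer side: append an event to the tail of the list (like RPUSH).
rpush() {
    printf '%s\n' "$1" >> "$queue_file"
}

# Consumer side: print the head of the list and remove it (like LPOP).
lpop() {
    head -n 1 "$queue_file"
    rest=$(mktemp)
    tail -n +2 "$queue_file" > "$rest"
    cat "$rest" > "$queue_file"
    rm -f "$rest"
}

# Number of events still waiting in the list (like LLEN).
llen() {
    wc -l < "$queue_file" | tr -d ' '
}

rpush 'event 1'
rpush 'event 2'
rpush 'event 3'
echo "queued: $(llen)"

# Consume until the list is empty, as the reading Logstash does.
while [ "$(llen)" -gt 0 ]; do
    echo "consumed: $(lpop)"
done
echo "queued: $(llen)"
```

Events leave the queue in the order they were written, and the length drops to 0 once the consumer has caught up, which matches the llen output above.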

重新创建好索引后,如下图

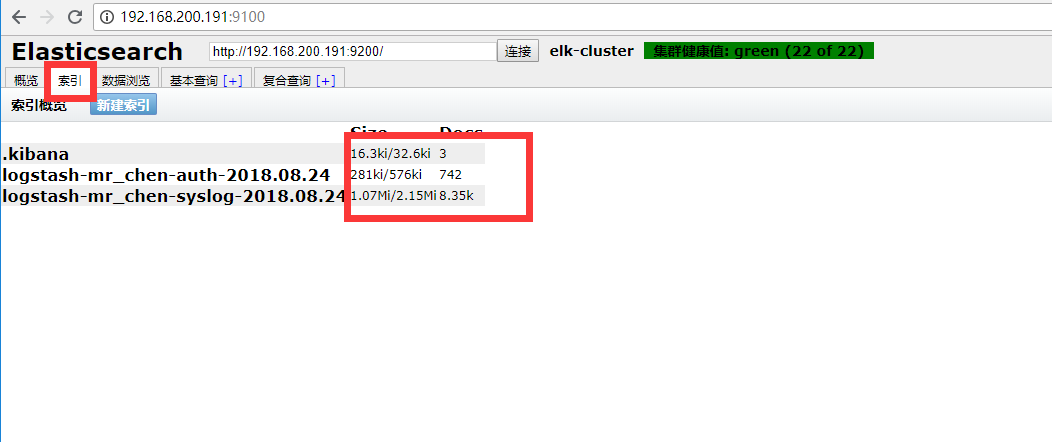

查看elasticsearch里索引的数据大小

5. Introducing Filebeat

Filebeat advantage: lightweight. Disadvantage: no regex-based field extraction.

Logstash advantage: supports regex-based field extraction. Disadvantage: comparatively heavy, and it depends on Java.

5.1 Install Filebeat on WebServer with yum

    # Install Filebeat
    [root@WebServer ~]# yum -y install filebeat
    # Edit the Filebeat configuration file
    [root@WebServer ~]# cp /etc/filebeat/filebeat.yml{,.bak}
    [root@WebServer ~]# egrep -v "#|^$" /etc/filebeat/filebeat.yml.bak > /etc/filebeat/filebeat.yml
    # Change the configuration file to the following
    [root@WebServer ~]# vim /etc/filebeat/filebeat.yml
    [root@WebServer ~]# cat /etc/filebeat/filebeat.yml
    filebeat.inputs:
    - type: log
      paths:
        - /var/log/messages
      tags: ["syslog","test"]
      fields:
        type: system
      fields_under_root: true
    - type: log
      paths:
        - /var/log/audit/audit.log
      tags: ["auth","test"]
      fields:
        type: system
      fields_under_root: true
    output.redis:
      hosts: ["192.168.200.194"]
      password: "yunjisuan"
      key: "filebeat"
      db: 0
      datatype: list
    # Start Filebeat to test data collection
    [root@WebServer ~]# systemctl start filebeat
    # Check whether Redis on the Logstash-Kibana server has received data
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 9109

Use the graphical tool to clear the indices in ES, then start Logstash so that it reads the data from Redis and writes it to ES.

    # Edit the Logstash configuration file
    [root@Logstash-Kibana ~]# vim /etc/logstash/conf.d/logstash-from-redis.conf
    [root@Logstash-Kibana ~]# cat /etc/logstash/conf.d/logstash-from-redis.conf
    input {
        redis {
            host => "192.168.200.194"
            port => 6379
            password => "yunjisuan"
            db => "0"
            data_type => "list"
            key => "filebeat"    # only this line changes: the Redis key to read
        }
    }
    filter {
    }
    output {
        if [type] == "system" {
            if [tags][0] == "syslog" {
                elasticsearch {
                    hosts => ["http://192.168.200.191:9200","http://192.168.200.192:9200","http://192.168.200.193:9200"]
                    index => "logstash-mr_chen-syslog-%{+YYYY.MM.dd}"
                }
                stdout { codec => rubydebug }
            } else if [tags][0] == "auth" {
                elasticsearch {
                    hosts => ["http://192.168.200.191:9200","http://192.168.200.192:9200","http://192.168.200.193:9200"]
                    index => "logstash-mr_chen-auth-%{+YYYY.MM.dd}"
                }
                stdout { codec => rubydebug }
            }
        }
    }
    # After clearing the ES data, start Logstash to read the data from Redis
    [root@Logstash-Kibana ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-from-redis.conf
    # Watch the Redis key being consumed
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 8359
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 8109
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 7984
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 7859
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 7359
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 5234
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 4484
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 3734
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 2984
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 2484
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 1984
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 1609
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 984
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 0
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 0

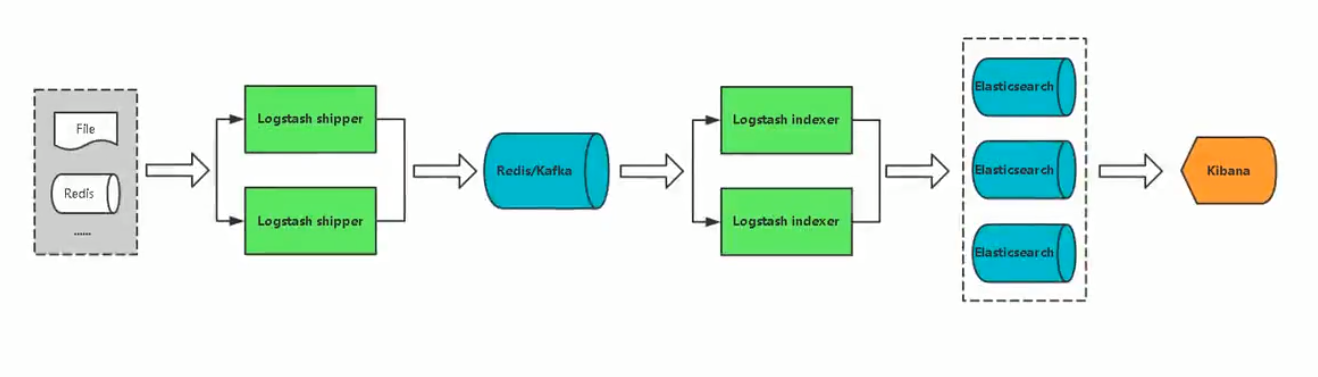

6. Production use case (Filebeat + Redis + ELK)

| Hostname | IP address | Role |
|---|---|---|
| ES1 | 192.168.200.191 | elasticsearch-node1 |
| ES2 | 192.168.200.192 | elasticsearch-node2 |
| ES3 | 192.168.200.193 | elasticsearch-node3 |
| Logstash-Kibana | 192.168.200.194 | Log visualization server |
| WebServer | 192.168.200.195 | Simulates the various clients whose logs are collected |

6.1 Collecting Nginx logs

6.1.1 Deploy the Nginx web server

    [root@WebServer ~]# yum -y install pcre-devel openssl-devel
    [root@WebServer ~]# tar xf nginx-1.10.2.tar.gz -C /usr/src/
    [root@WebServer ~]# cd /usr/src/nginx-1.10.2/
    [root@WebServer nginx-1.10.2]# useradd -s /sbin/nologin -M nginx
    [root@WebServer nginx-1.10.2]# ./configure --user=nginx --group=nginx --prefix=/usr/local/nginx --with-http_stub_status_module --with-http_ssl_module
    [root@WebServer nginx-1.10.2]# make && make install
    [root@WebServer nginx-1.10.2]# ln -s /usr/local/nginx/sbin/* /usr/local/sbin/
    [root@WebServer nginx-1.10.2]# which nginx
    /usr/local/sbin/nginx
    [root@WebServer nginx-1.10.2]# nginx -v
    nginx version: nginx/1.10.2
    [root@WebServer ~]# cd /usr/local/nginx/
    [root@WebServer nginx]# egrep -v "#|^$" conf/nginx.conf.default > conf/nginx.conf
    [root@WebServer nginx]# vim conf/nginx.conf
    [root@WebServer nginx]# cat conf/nginx.conf
    worker_processes 1;
    events {
        worker_connections 1024;
    }
    http {
        include mime.types;
        default_type application/octet-stream;
        log_format main '$remote_addr - $remote_user [$time_local] "$request" '
                        '$status $body_bytes_sent "$http_referer" '
                        '"$http_user_agent" "$http_x_forwarded_for"';
        log_format json '{ "@timestamp":"$time_iso8601", '
                        '"remote_addr":"$remote_addr",'
                        '"remote_user":"$remote_user",'
                        '"body_bytes_sent":"$body_bytes_sent",'
                        '"request_time":"$request_time",'
                        '"status":"$status",'
                        '"request_uri":"$request_uri",'
                        '"request_method":"$request_method",'
                        '"http_referer":"$http_referer",'
                        '"body_bytes_sent":"$body_bytes_sent",'
                        '"http_x_forwarded_for":"$http_x_forwarded_for",'
                        '"http_user_agent":"$http_user_agent"}';
        access_log logs/access_main.log main;    # enable the main-format access log
        access_log logs/access_json.log json;    # enable the JSON-format access log
        sendfile on;
        keepalive_timeout 65;
        server {
            listen 80;
            server_name www.yunjisuan.com;
            location / {
                root html/www;
                index index.html index.htm;
            }
        }
    }
    [root@WebServer nginx]# mkdir -p html/www
    [root@WebServer nginx]# echo "welcome to yunjisuan" > html/www/index.html
    [root@WebServer nginx]# cat html/www/index.html
    welcome to yunjisuan
    [root@WebServer nginx]# /usr/local/nginx/sbin/nginx
    [root@WebServer nginx]# netstat -antup | grep nginx
    tcp 0 0 0.0.0.0:80 0.0.0.0:* LISTEN 14716/nginx: master
    [root@WebServer nginx]# curl 192.168.200.195
    welcome to yunjisuan
    [root@WebServer nginx]# curl 192.168.200.195
    welcome to yunjisuan
    [root@WebServer nginx]# cat logs/access_main.log    # view the main-format access log
    192.168.200.195 - - [25/Aug/2018:23:42:44 +0800] "GET / HTTP/1.1" 200 21 "-" "curl/7.29.0" "-"
    192.168.200.195 - - [25/Aug/2018:23:42:45 +0800] "GET / HTTP/1.1" 200 21 "-" "curl/7.29.0" "-"
    [root@WebServer nginx]# cat logs/access_json.log    # view the JSON-format access log
    { "@timestamp":"2018-08-25T23:42:44+08:00", "remote_addr":"192.168.200.195","remote_user":"-","body_bytes_sent":"21","request_time":"0.000","status":"200","request":"GET / HTTP/1.1","request_method":"GET","http_referer":"-","body_bytes_sent":"21","http_x_forwarded_for":"-","http_user_agent":"curl/7.29.0"}
    { "@timestamp":"2018-08-25T23:42:45+08:00", "remote_addr":"192.168.200.195","remote_user":"-","body_bytes_sent":"21","request_time":"0.000","status":"200","request":"GET / HTTP/1.1","request_method":"GET","http_referer":"-","body_bytes_sent":"21","http_x_forwarded_for":"-","http_user_agent":"curl/7.29.0"}

6.1.2 Modify the Filebeat configuration on WebServer

    # Change the Filebeat configuration file to the following
    [root@WebServer nginx]# cat /etc/filebeat/filebeat.yml
    filebeat.inputs:
    - type: log
      paths:
        - /usr/local/nginx/logs/access_json.log    # collect the JSON-format access log
      tags: ["access"]
      fields:
        app: www
        type: nginx-access-json
      fields_under_root: true
    - type: log
      paths:
        - /usr/local/nginx/logs/access_main.log    # collect the main-format access log
      tags: ["access"]
      fields:
        app: www
        type: nginx-access
      fields_under_root: true
    - type: log
      paths:
        - /usr/local/nginx/logs/error.log    # collect the error log
      tags: ["error"]
      fields:
        app: www
        type: nginx-error
      fields_under_root: true
    output.redis:    # output to Redis
      hosts: ["192.168.200.194"]
      password: "yunjisuan"
      key: "filebeat"
      db: 0
      datatype: list
    # Start Filebeat
    [root@WebServer nginx]# systemctl start filebeat
    # Check the key stored in Redis on the Logstash-Kibana server
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 7

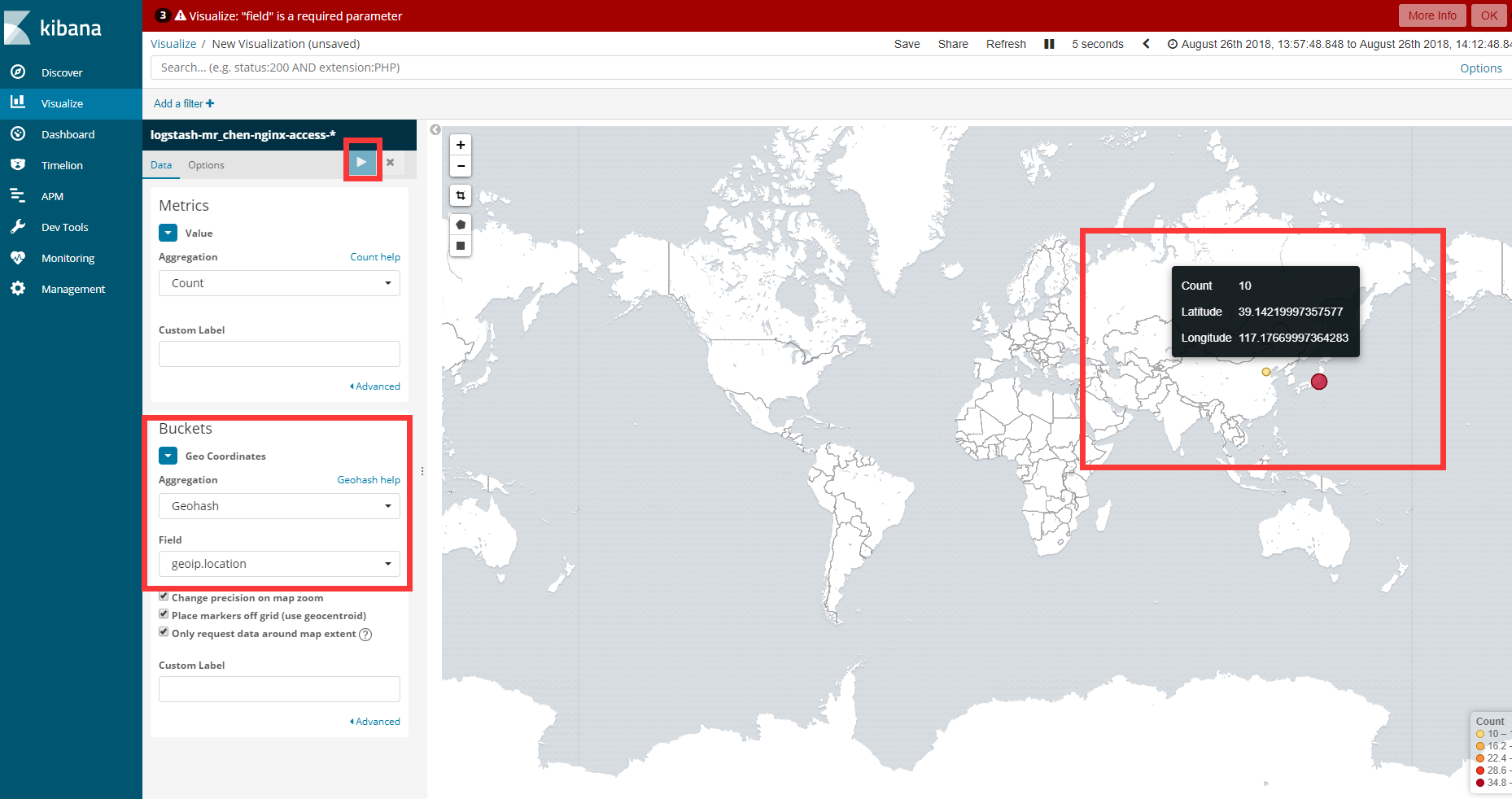

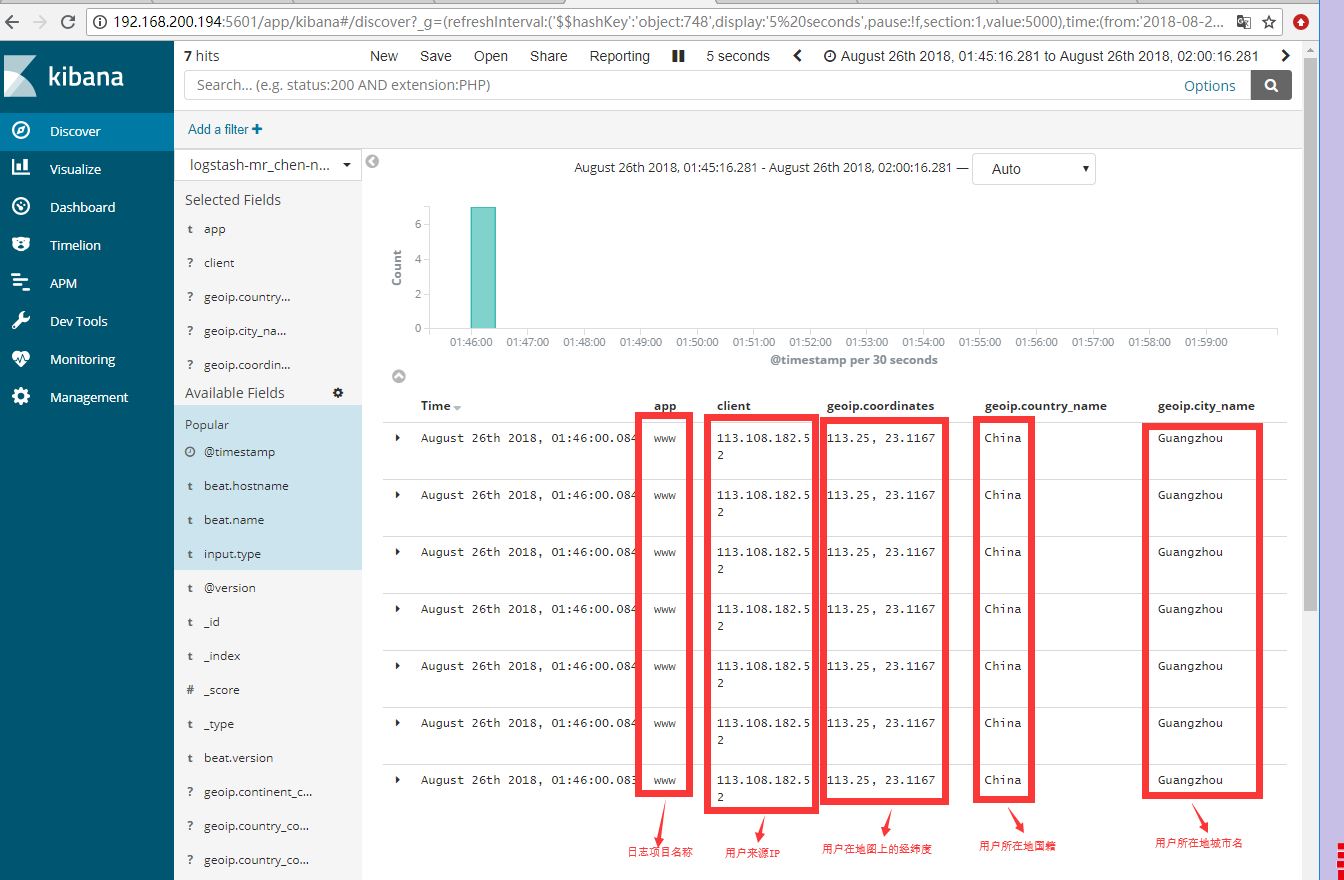

6.1.3 Modify the Logstash configuration on the Logstash-Kibana server

    # Change the Logstash configuration file to the following
    [root@Logstash-Kibana ~]# vim /etc/logstash/conf.d/logstash-from-redis.conf
    [root@Logstash-Kibana ~]# cat /etc/logstash/conf.d/logstash-from-redis.conf
    input {
        redis {
            host => "192.168.200.194"
            port => 6379
            password => "yunjisuan"
            db => "0"
            data_type => "list"
            key => "filebeat"
        }
    }
    filter {
        if [app] == "www" {    # if the log belongs to the www application
            if [type] == "nginx-access-json" {    # if the data is in JSON format
                json {
                    source => "message"    # parse the JSON held in the message field
                    remove_field => ["message"]    # remove the message field
                }
                geoip {
                    source => "remote_addr"    # look up the origin of remote_addr
                    target => "geoip"    # write the lookup result to the geoip field
                    database => "/opt/GeoLite2-City.mmdb"    # location of the GeoIP database file
                    add_field => ["[geoip][coordinates]","%{[geoip][longitude]}"]    # append to a list-valued field
                    add_field => ["[geoip][coordinates]","%{[geoip][latitude]}"]    # append to a list-valued field
                }
                mutate {
                    convert => ["[geoip][coordinates]","float"]    # convert the coordinate values to float
                }
            }
            if [type] == "nginx-access" {    # if the data is in main format
                grok {
                    match => {
                        "message" => '(?<client>[0-9.]+).*'    # capture the client field from message
                    }
                }
                geoip {
                    source => "client"    # look up the origin of the client field
                    target => "geoip"
                    database => "/opt/GeoLite2-City.mmdb"
                    add_field => ["[geoip][coordinates]","%{[geoip][longitude]}"]
                    add_field => ["[geoip][coordinates]","%{[geoip][latitude]}"]
                }
                mutate {
                    convert => ["[geoip][coordinates]","float"]
                }
            }
        }
    }
    output {
        elasticsearch {
            hosts => ["http://192.168.200.191:9200","http://192.168.200.192:9200","http://192.168.200.193:9200"]
            index => "logstash-mr_chen-%{type}-%{+YYYY.MM.dd}"    # write to a different index depending on the value of type
        }
        stdout { codec => rubydebug }
    }
    # Start the Logstash process
    [root@Logstash-Kibana ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-from-redis.conf
    # Check how far the Redis key has been consumed
    [root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat
    (integer) 0
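To see what the grok expression captures from a main-format line, the same character class can be tried in the shell. This is only a sketch: `extract_client` is a hypothetical helper, and grep stands in for grok's regex engine, which is an approximation rather than the same implementation.

```shell
# Hypothetical helper: print the first run of digits and dots in a log
# line, which is what the (?<client>[0-9.]+) capture picks up.
extract_client() {
    printf '%s\n' "$1" | grep -oE '[0-9.]+' | head -n 1
}

line='192.168.200.195 - - [25/Aug/2018:23:42:44 +0800] "GET / HTTP/1.1" 200 21 "-" "curl/7.29.0" "-"'
extract_client "$line"
```

The class `[0-9.]+` accepts any mix of digits and dots, so it relies on the IP being the first such run in the line. Grok's predefined `%{IPORHOST:client}` pattern is a stricter alternative if that assumption does not hold.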

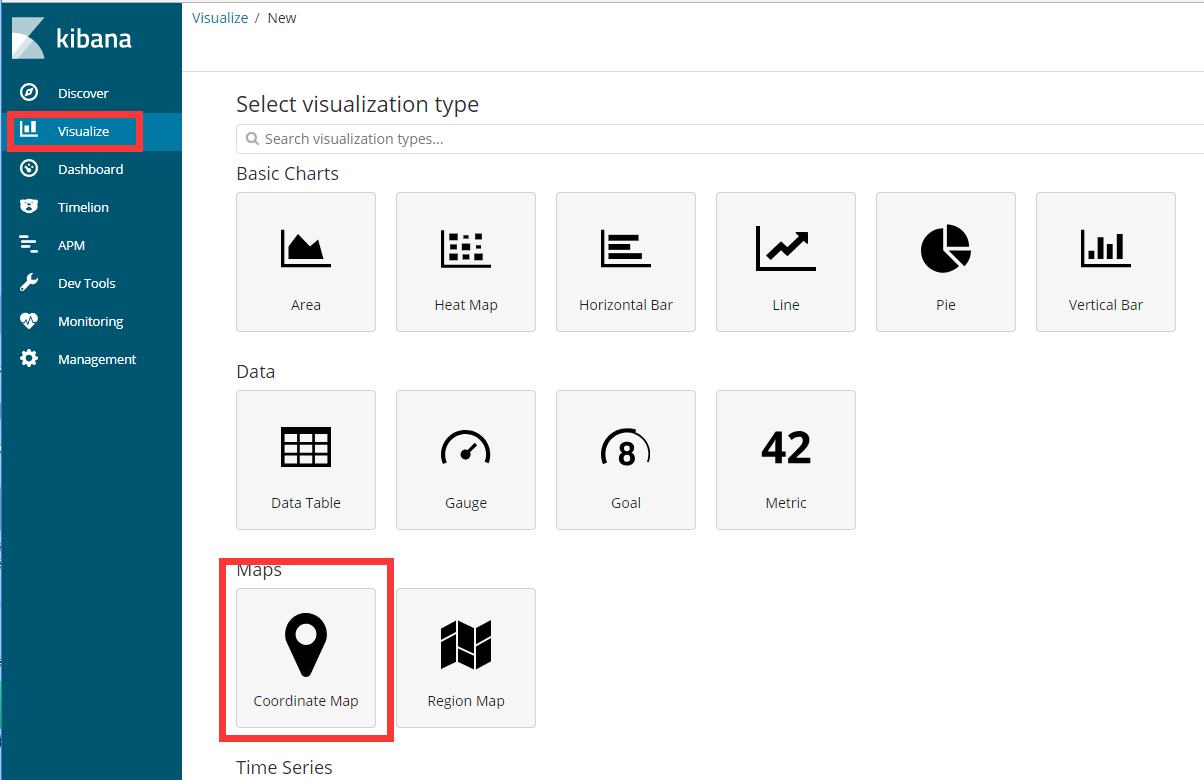

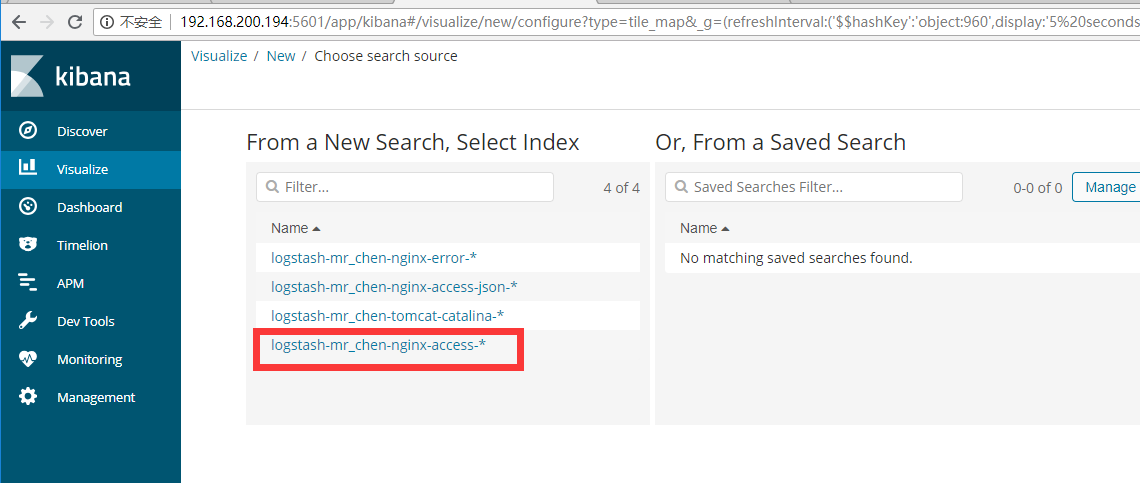

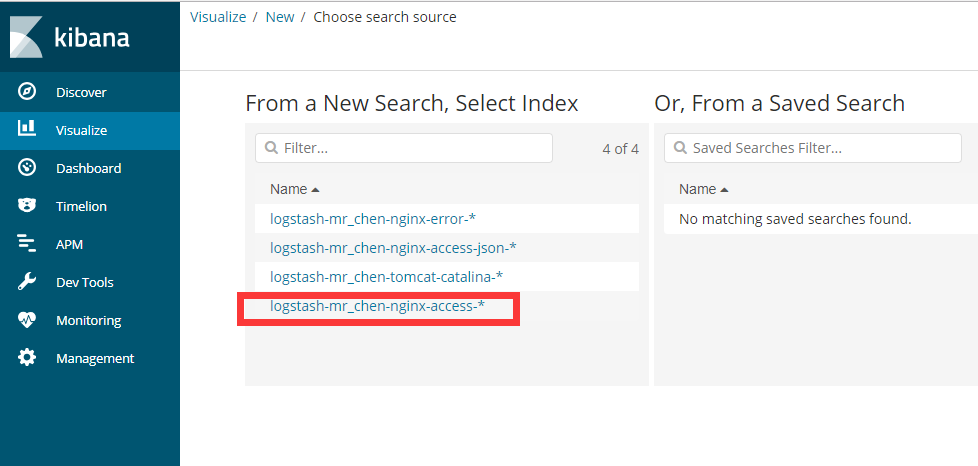

6.1.4 创建kibana的索引

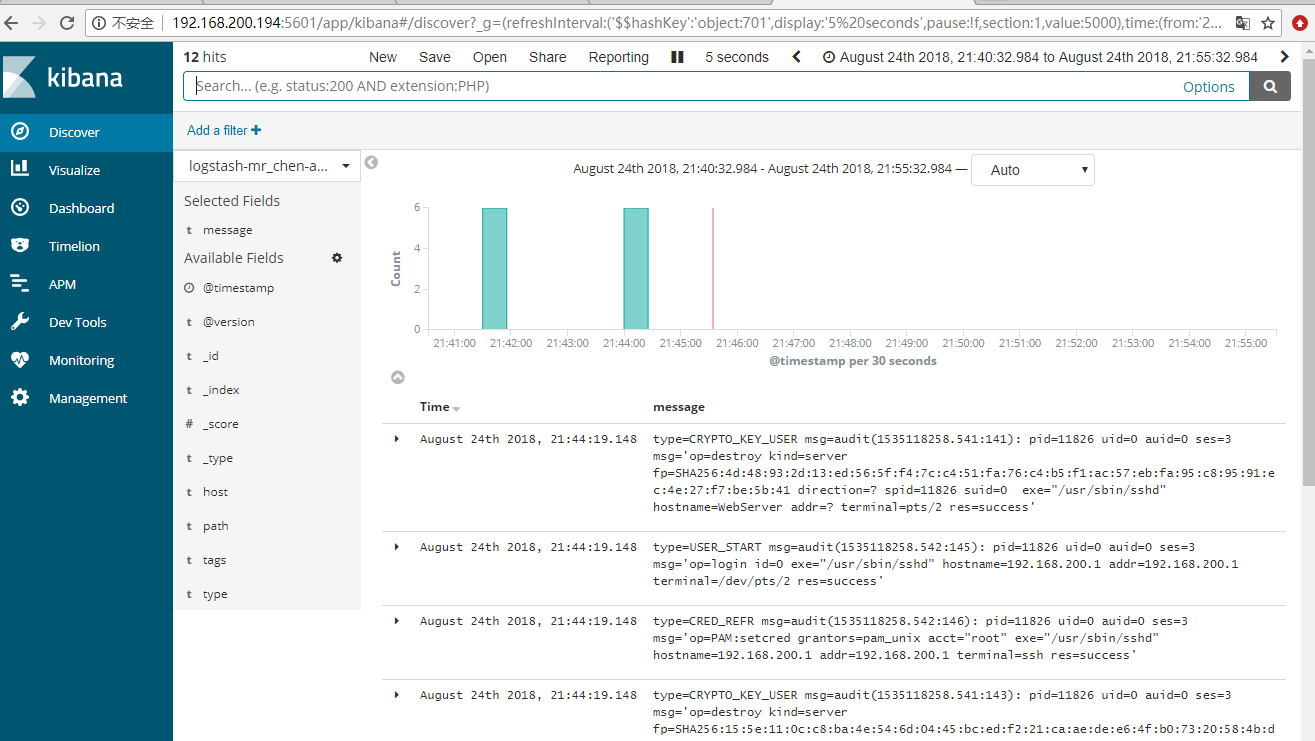

在kibana上关联索引,进行数据收集的展示
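When choosing a wildcard for the Kibana index pattern, it helps to check which daily indices it would cover. The sketch below builds index names in the form used by the Logstash output (logstash-mr_chen-TYPE-YYYY.MM.dd) and tests them against a wildcard with shell globbing, which is assumed here to behave like the `*` in a Kibana index pattern. Both helper names are hypothetical.

```shell
# Hypothetical helper: index name for an event type ($1) and a date
# given as YYYY-MM-DD ($2).
index_name() {
    printf 'logstash-mr_chen-%s-%s\n' "$1" "$(printf '%s\n' "$2" | sed 's/-/./g')"
}

# Hypothetical helper: succeed if index name $1 matches wildcard $2.
matches_pattern() {
    case "$1" in
        $2) return 0 ;;
        *)  return 1 ;;
    esac
}

for t in nginx-access nginx-access-json nginx-error; do
    idx=$(index_name "$t" 2018-08-26)
    if matches_pattern "$idx" 'logstash-mr_chen-nginx-access-*'; then
        echo "$idx: matched"
    else
        echo "$idx: not matched"
    fi
done
```

Note that the wildcard logstash-mr_chen-nginx-access-* also matches the nginx-access-json indices, because their names share the same prefix. A narrower wildcard such as logstash-mr_chen-nginx-access-2* would keep the two apart.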

6.2 Collecting Java stack-trace logs

6.2.1 Deploy Tomcat

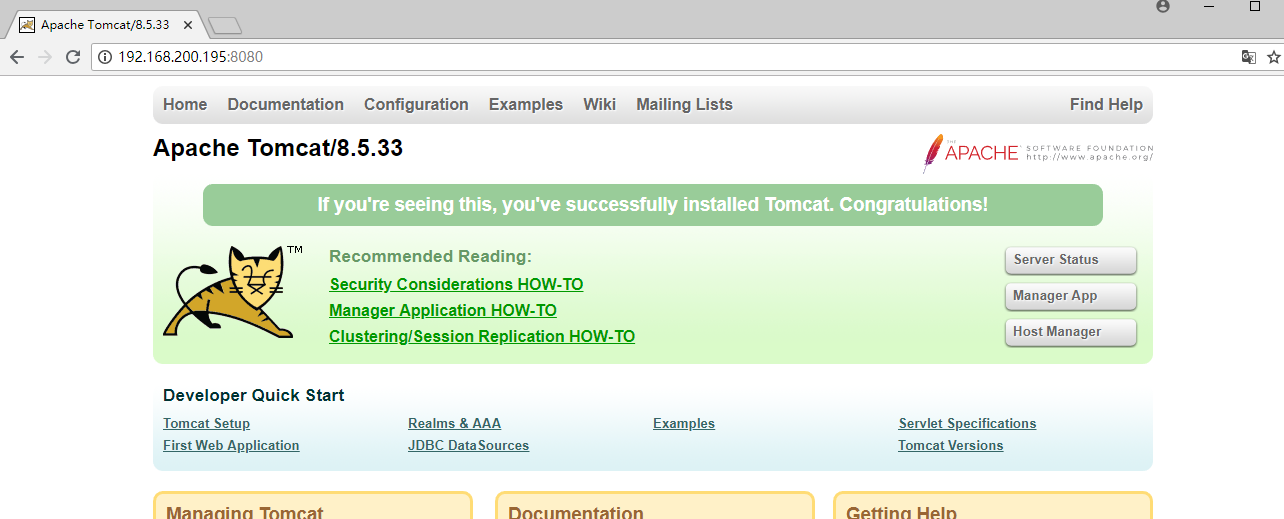

    [root@WebServer ~]# wget http://mirror.bit.edu.cn/apache/tomcat/tomcat-8/v8.5.33/bin/apache-tomcat-8.5.33.tar.gz
    [root@WebServer ~]# tar xf apache-tomcat-8.5.33.tar.gz -C /usr/local/
    [root@WebServer ~]# mv /usr/local/apache-tomcat-8.5.33 /usr/local/tomcat
    [root@WebServer ~]# /usr/local/tomcat/bin/startup.sh
    Using CATALINA_BASE: /usr/local/tomcat
    Using CATALINA_HOME: /usr/local/tomcat
    Using CATALINA_TMPDIR: /usr/local/tomcat/temp
    Using JRE_HOME: /usr
    Using CLASSPATH: /usr/local/tomcat/bin/bootstrap.jar:/usr/local/tomcat/bin/tomcat-juli.jar
    Tomcat started.
    [root@WebServer ~]# tail -f /usr/local/tomcat/logs/catalina.out    # view the log
    26-Aug-2018 11:53:53.432 信息 [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/docs] has finished in [22] ms
    26-Aug-2018 11:53:53.432 信息 [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/usr/local/tomcat/webapps/examples]
    26-Aug-2018 11:53:53.717 信息 [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/examples] has finished in [285] ms
    26-Aug-2018 11:53:53.718 信息 [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/usr/local/tomcat/webapps/host-manager]
    26-Aug-2018 11:53:53.742 信息 [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/host-manager] has finished in [24] ms
    26-Aug-2018 11:53:53.742 信息 [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deploying web application directory [/usr/local/tomcat/webapps/manager]
    26-Aug-2018 11:53:53.764 信息 [localhost-startStop-1] org.apache.catalina.startup.HostConfig.deployDirectory Deployment of web application directory [/usr/local/tomcat/webapps/manager] has finished in [22] ms
    26-Aug-2018 11:53:53.778 信息 [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["http-nio-8080"]
    26-Aug-2018 11:53:53.796 信息 [main] org.apache.coyote.AbstractProtocol.start Starting ProtocolHandler ["ajp-nio-8009"]
    26-Aug-2018 11:53:53.800 信息 [main] org.apache.catalina.startup.Catalina.start Server startup in 903 ms

Access Tomcat in a browser.

6.2.2 配置filebeat收集日志

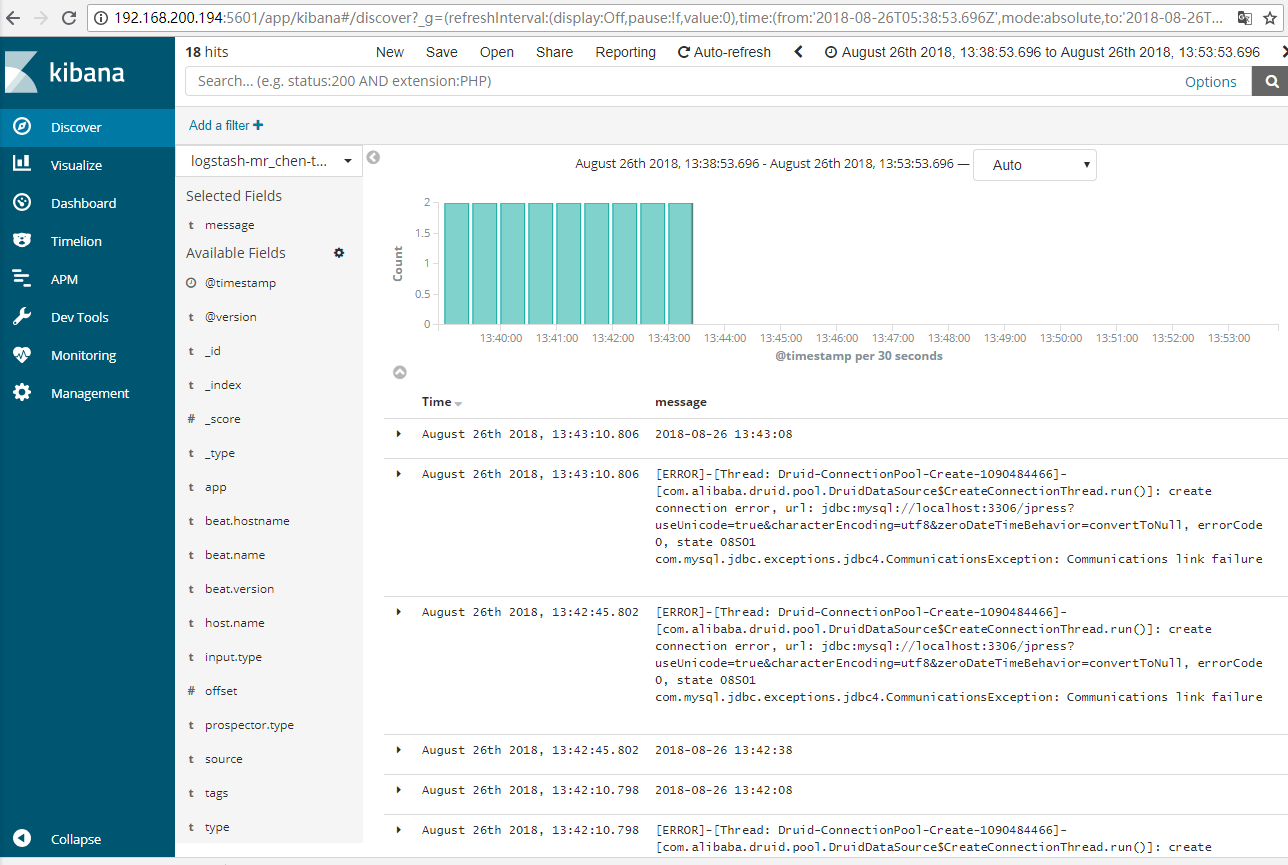

catalina.out就是tomcat的堆栈日志

#catalina.out的堆栈报错示例2018-08-26 13:20:08[ERROR]-[Thread: Druid-ConnectionPool-Create-1090484466]-[com.alibaba.druid.pool.DruidDataSource$CreateConnectionThread.run()]: create connection error, url: jdbc:mysql://localhost:3306/jpress?useUnicode=true&characterEncoding=utf8&zeroDateTimeBehavior=convertToNull, errorCode 0, state 08S01com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failureThe last packet sent successfully to the server was 0 milliseconds ago. The driver has not received any packets from the server.at sun.reflect.GeneratedConstructorAccessor25.newInstance(Unknown Source)at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)at java.lang.reflect.Constructor.newInstance(Constructor.java:423)at com.mysql.jdbc.Util.handleNewInstance(Util.java:411)at com.mysql.jdbc.SQLError.createCommunicationsException(SQLError.java:1117)at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:350)at com.mysql.jdbc.ConnectionImpl.coreConnect(ConnectionImpl.java:2393)at com.mysql.jdbc.ConnectionImpl.connectOneTryOnly(ConnectionImpl.java:2430)at com.mysql.jdbc.ConnectionImpl.createNewIO(ConnectionImpl.java:2215)at com.mysql.jdbc.ConnectionImpl.<init>(ConnectionImpl.java:813)at com.mysql.jdbc.JDBC4Connection.<init>(JDBC4Connection.java:47)at sun.reflect.GeneratedConstructorAccessor22.newInstance(Unknown Source)at sun.reflect.DelegatingConstructorAccessorImpl.newInstance(DelegatingConstructorAccessorImpl.java:45)at java.lang.reflect.Constructor.newInstance(Constructor.java:423)at com.mysql.jdbc.Util.handleNewInstance(Util.java:411)at com.mysql.jdbc.ConnectionImpl.getInstance(ConnectionImpl.java:399)at com.mysql.jdbc.NonRegisteringDriver.connect(NonRegisteringDriver.java:334)at com.alibaba.druid.filter.FilterChainImpl.connection_connect(FilterChainImpl.java:148)at com.alibaba.druid.filter.stat.StatFilter.connection_connect(StatFilter.java:211)at 
com.alibaba.druid.filter.FilterChainImpl.connection_connect(FilterChainImpl.java:142)at com.alibaba.druid.pool.DruidAbstractDataSource.createPhysicalConnection(DruidAbstractDataSource.java:1423)at com.alibaba.druid.pool.DruidAbstractDataSource.createPhysicalConnection(DruidAbstractDataSource.java:1477)at com.alibaba.druid.pool.DruidDataSource$CreateConnectionThread.run(DruidDataSource.java:2001)Caused by: java.net.ConnectException: 拒绝连接 (Connection refused)at java.net.PlainSocketImpl.socketConnect(Native Method)at java.net.AbstractPlainSocketImpl.doConnect(AbstractPlainSocketImpl.java:350)at java.net.AbstractPlainSocketImpl.connectToAddress(AbstractPlainSocketImpl.java:206)at java.net.AbstractPlainSocketImpl.connect(AbstractPlainSocketImpl.java:188)at java.net.SocksSocketImpl.connect(SocksSocketImpl.java:392)at java.net.Socket.connect(Socket.java:589)at java.net.Socket.connect(Socket.java:538)at java.net.Socket.<init>(Socket.java:434)at java.net.Socket.<init>(Socket.java:244)at com.mysql.jdbc.StandardSocketFactory.connect(StandardSocketFactory.java:257)at com.mysql.jdbc.MysqlIO.<init>(MysqlIO.java:300)... 
17 more#修改filebeat配置文件加入对tomcat的堆栈报错的数据收集[root@WebServer ~]# cat /etc/filebeat/filebeat.ymlfilebeat.inputs:- type: logpaths:- /usr/local/nginx/logs/access_json.logtags: ["access"]fields:app: wwwtype: nginx-access-jsonfields_under_root: true- type: logpaths:- /usr/local/nginx/logs/access_main.logtags: ["access"]fields:app: wwwtype: nginx-accessfields_under_root: true- type: logpaths:- /usr/local/nginx/logs/error.logtags: ["error"]fields:app: wwwtype: nginx-errorfields_under_root: true- type: logpaths:- /usr/local/tomcat/logs/catalina.outtags: ["tomcat"]fields:app: wwwtype: tomcat-catalinafields_under_root: truemultiline:pattern: '^['negate: truematch: afteroutput.redis:hosts: ["192.168.200.194"]password: "yunjisuan"key: "filebeat"db: 0datatype: list#启动filebeat[root@WebServer ~]# systemctl start filebeat#查看redis的数据队列数[root@Logstash-Kibana ~]# redis-cli -a yunjisuan llen filebeat(integer) 11#启动logstash-kibana服务器下的logstash进程[root@Logstash-Kibana ~]# /usr/share/logstash/bin/logstash -f /etc/logstash/conf.d/logstash-from-redis.conf
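With negate: true and match: after, every line that does not match the multiline pattern is appended to the preceding line that does. The sketch below imitates that joining rule with awk. `join_multiline` and the " | " separator are illustrative only (Filebeat itself keeps the line breaks inside the event). The date-based start pattern is an assumption chosen to fit the timestamped sample trace above; it is not the pattern from the configuration. The input lines are shortened from that sample, and the last one is made up.

```shell
# Hypothetical helper: merge continuation lines into the preceding event.
# $1 is a regex matching the first line of an event; lines that do not
# match it are appended to the current event.
join_multiline() {
    START_RE=$1 awk '
        $0 ~ ENVIRON["START_RE"] {
            if (seen) print event    # flush the previous event
            event = $0
            seen = 1
            next
        }
        {
            event = seen ? (event " | " $0) : $0
            seen = 1
        }
        END { if (seen) print event }
    '
}

join_multiline '^[0-9][0-9][0-9][0-9]-[0-9][0-9]-[0-9][0-9] ' <<'EOF'
2018-08-26 13:20:08[ERROR]-[Thread: Druid-ConnectionPool-Create-1090484466]: create connection error
com.mysql.jdbc.exceptions.jdbc4.CommunicationsException: Communications link failure
at com.mysql.jdbc.Util.handleNewInstance(Util.java:411)
Caused by: java.net.ConnectException: Connection refused
2018-08-26 13:20:09[INFO]-[main]: hypothetical next event
EOF
```

The five input lines come out as two events. Whichever pattern is used in filebeat.yml, it has to match the first line of each log entry; if it never matches, all lines are treated as continuations and merged.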

6.2.3 Create the Kibana index pattern and view the data

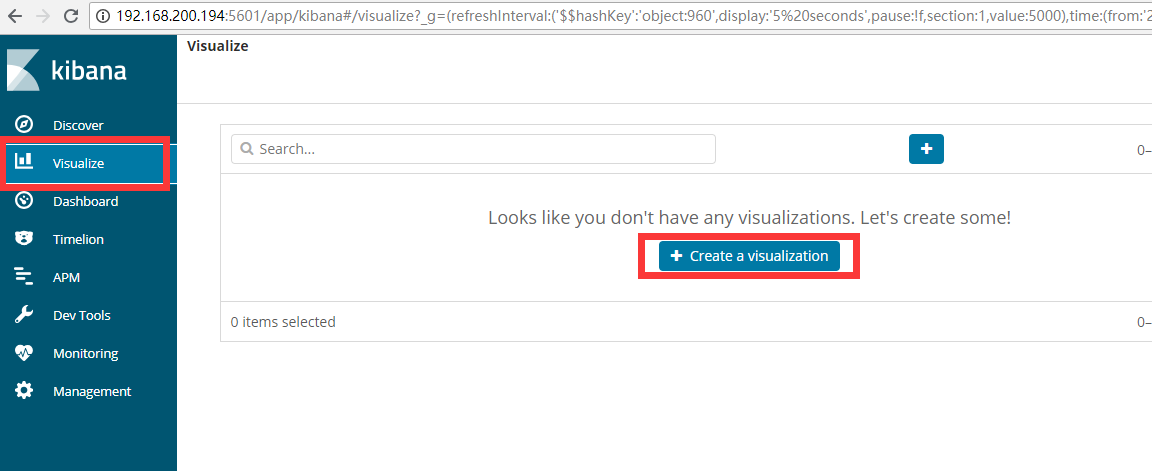

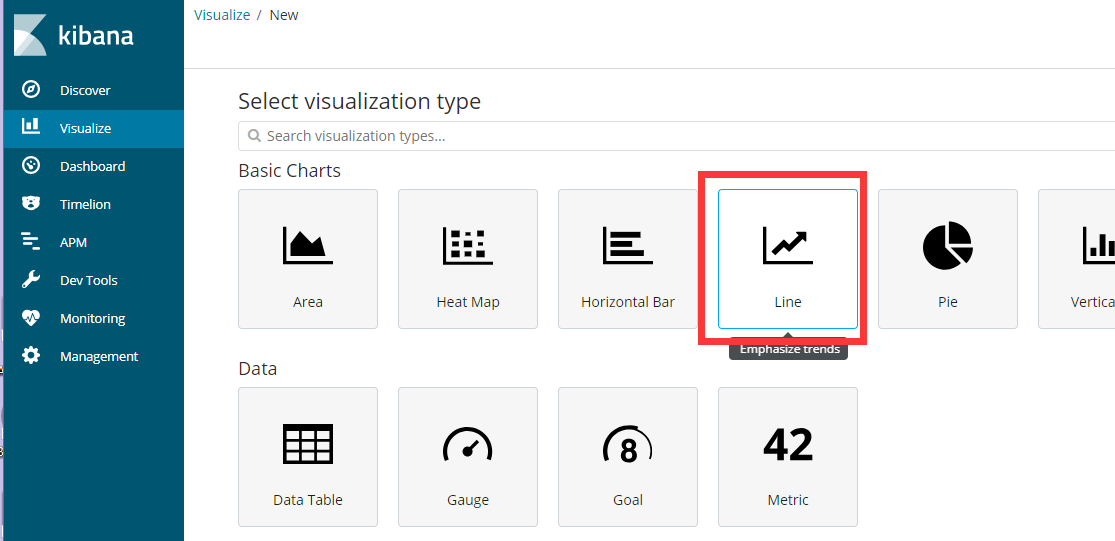

6.3 Kibana visualizations and dashboards

Simulate some different client IPs in the main-format Nginx access log.

    113.108.182.52
    123.150.187.130
    203.186.145.250
    114.80.166.240
    119.147.146.189
    58.89.67.152
    [root@WebServer nginx]# a='58.89.67.152 - - [26/Aug/2018:14:17:33 +0800] "GET / HTTP/1.1" 200 21 "-" "curl/7.29.0" "-"'
    [root@WebServer nginx]# for i in `seq 50`;do echo "$a" >> /usr/local/nginx/logs/access_main.log ;done
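The loop above writes entries for a single IP. A sketch that repeats it for every IP in the list follows. `append_fake_hits` is a hypothetical helper, and the demo writes to a temporary file; to feed the pipeline it would be pointed at /usr/local/nginx/logs/access_main.log instead.

```shell
# Hypothetical helper: append $2 copies of a main-format access line for
# client IP $1 to the log file $3.
append_fake_hits() {
    ip=$1
    count=$2
    log=$3
    i=0
    while [ "$i" -lt "$count" ]; do
        printf '%s - - [26/Aug/2018:14:17:33 +0800] "GET / HTTP/1.1" 200 21 "-" "curl/7.29.0" "-"\n' "$ip" >> "$log"
        i=$((i + 1))
    done
}

# Demo against a temporary file: 50 entries for each of the six IPs.
demo_log=$(mktemp)
for ip in 113.108.182.52 123.150.187.130 203.186.145.250 \
          114.80.166.240 119.147.146.189 58.89.67.152; do
    append_fake_hits "$ip" 50 "$demo_log"
done
wc -l < "$demo_log"
```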

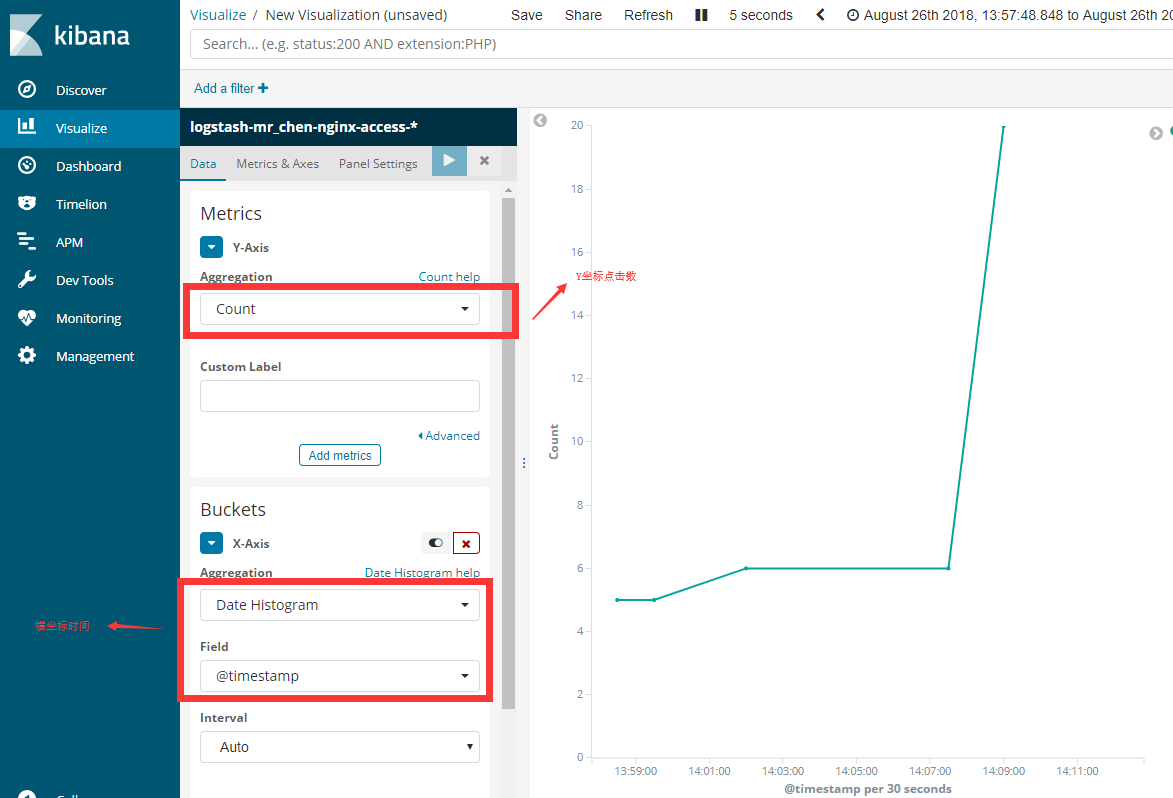

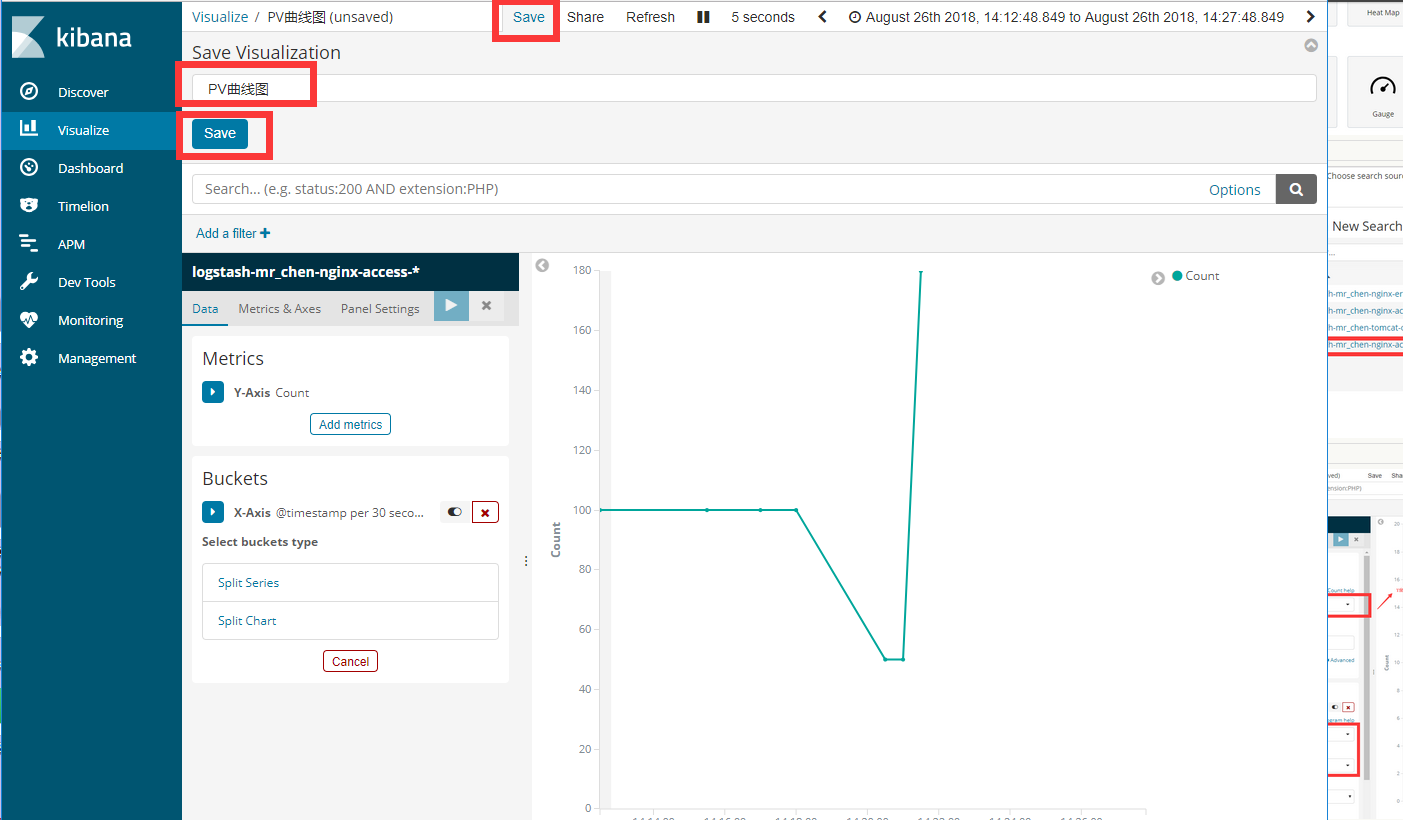

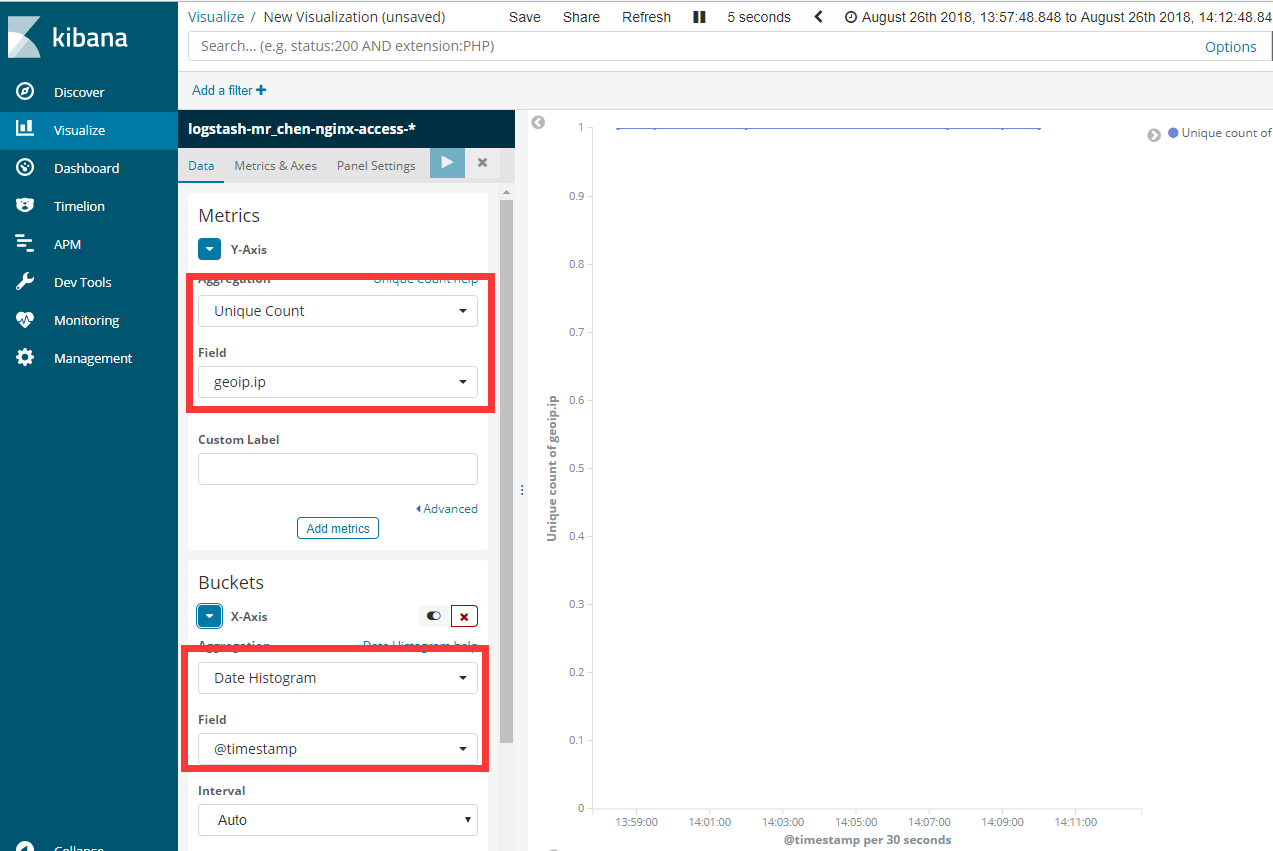

6.3.1 PV/IP

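Counting PV (page views) means counting the number of requests within a unit of time.

Counting IPs means counting the number of distinct client IPs after deduplication.

6.3.2 Geographic distribution of users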
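Both numbers can be cross-checked directly against the main-format access log before building the Kibana visualization. A minimal sketch, assuming the client IP is the first whitespace-separated field of each line; the helper names are hypothetical, and the whole file is treated as one time window.

```shell
# Hypothetical helper: PV is the number of request lines in the log.
count_pv() {
    awk 'END { print NR }' "$1"
}

# Hypothetical helper: number of distinct client IPs in the log.
count_unique_ips() {
    awk '{ print $1 }' "$1" | sort -u | awk 'END { print NR }'
}

# Demo on a temporary file with three requests from two clients.
demo_log=$(mktemp)
cat > "$demo_log" <<'EOF'
192.168.200.195 - - [25/Aug/2018:23:42:44 +0800] "GET / HTTP/1.1" 200 21 "-" "curl/7.29.0" "-"
192.168.200.195 - - [25/Aug/2018:23:42:45 +0800] "GET / HTTP/1.1" 200 21 "-" "curl/7.29.0" "-"
58.89.67.152 - - [26/Aug/2018:14:17:33 +0800] "GET / HTTP/1.1" 200 21 "-" "curl/7.29.0" "-"
EOF
echo "PV: $(count_pv "$demo_log")"
echo "unique IPs: $(count_unique_ips "$demo_log")"
```

For the demo file this reports a PV of 3 and 2 unique IPs.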