一.smote相关理论

(1).

SMOTE是一种对普通过采样(oversampling)的一个改良。普通的过采样会使得训练集中有很多重复的样本。

SMOTE的全称是Synthetic Minority Over-Sampling Technique,译为“人工少数类过采样法”。

SMOTE没有直接对少数类进行重采样,而是设计了算法来人工合成一些新的少数类的样本。

为了叙述方便,就假设阳性为少数类,阴性为多数类

合成新少数类的阳性样本的算法如下:

- 选定一个阳性样本ss

- 找到ss最近的kk个样本,kk可以取5,10之类。这kk个样本可能有阳性的也有阴性的。

- 从这kk个样本中随机挑选一个样本,记为rr。

- 合成一个新的阳性样本s′s′,s′=λs+(1−λ)rs′=λs+(1−λ)r,λλ是(0,1)(0,1)之间的随机数。换句话说,新生成的点在rr与ss之间的连线上。

重复以上步骤,就可以生成很多阳性样本。

=======画了几张图,更新一下======

用图的形式说明一下SMOTE的步骤:

1.先选定一个阳性样本(假设阳性为少数类)

2.找出这个阳性样本的k近邻(假设k=5)。5个近邻已经被圈出。

3.随机从这k个近邻中选出一个样本(用绿色圈出来了)。

4.在阳性样本和被选出的这个近邻之间的连线上,随机找一点。这个点就是人工合成的新的阳性样本(绿色正号标出)。

以上来自http://sofasofa.io/forum_main_post.php?postid=1000817中的叙述

(2).

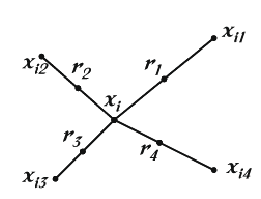

With this approach, the positive class is over-sampled by taking each minority class sample and introducing synthetic examples along the line segments joining any/all of the k minority class nearest neighbours. Depending upon the amount of over-sampling required, neighbours from the k nearest neighbours are randomly chosen. This process is illustrated in the following Figure, where xixi is the selected point, xi1xi1 to xi4xi4are some selected nearest neighbours and r1r1 to r4r4 the synthetic data points created by the randomized interpolation. The implementation of this work uses only one nearest neighbour with the euclidean distance, and balances both classes to 50% distribution.

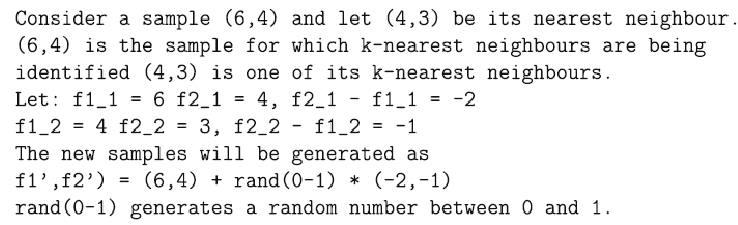

Synthetic samples are generated in the following way: Take the difference between the feature vector (sample) under consideration and its nearest neighbour. Multiply this difference by a random number between 0 and 1, and add it to the feature vector under consideration. This causes the selection of a random point along the line segment between two specific features. This approach effectively forces the decision region of the minority class to become more general. An example is detailed in the next Figure.

In short, the main idea is to form new minority class examples by interpolating between several minority class examples that lie together. In contrast with the common replication techniques (for example random oversampling), in which the decision region usually become more specific, with SMOTE the overfitting problem is somehow avoided by causing the decision boundaries for the minority class to be larger and to spread further into the majority class space, since it provides related minority class samples to learn from. Specifically, selecting a small k-value could also avoid the risk of including some noise in the data.

以上来自https://sci2s.ugr.es/multi-imbalanced中的叙述

二.spark实现smote

核心代码如下,完整代码https://github.com/jiangnanboy/spark-smote/blob/master/spark%20smote.txt

1 /** 2 * (1) 对于少数类(X)中每一个样本x,计算它到少数类样本集(X)中所有样本的距离,得到其k近邻。 3 * (2) 根据样本不平衡比例设置一个采样比例以确定采样倍率sampling_rate,对于每一个少数类样本x, 4 * 从其k近邻中随机选择sampling_rate个近邻,假设选择的近邻为 x(1),x(2),...,x(sampling_rate)。 5 * (3) 对于每一个随机选出的近邻 x(i)(i=1,2,...,sampling_rate),分别与原样本按照如下的公式构建新的样本 6 * xnew=x+rand(0,1)?(x(i)?x) 7 * 8 * http://sofasofa.io/forum_main_post.php?postid=1000817 9 * http://sci2s.ugr.es/multi-imbalanced 10 * @param session 11 * @param labelFeatures 12 * @param knn 样本相似近邻 13 * @param samplingRate 近邻采样率 (knn * samplingRate),从knn中选择几个近邻 14 * @parm rationToMax 采样比率(与最多类样本数的比率) 0.1表示与最多样本的比率是 -> (1:10),即达到最多样本的比率 15 * @return 16 */ 17 public static Dataset<Row> smote(SparkSession session, Dataset<Row> labelFeatures, int knn, double samplingRate, double rationToMax) { 18 19 Dataset<Row> labelCountDataset = labelFeatures.groupBy("label").agg(count("label").as("keyCount")); 20 List<Row> listRow = labelCountDataset.collectAsList(); 21 ConcurrentMap<String, Long> keyCountConMap = new ConcurrentHashMap<>(); //每个label对应的样本数 22 for(Row row : listRow) 23 keyCountConMap.put(row.getString(0), row.getLong(1)); 24 Row maxSizeRow = labelCountDataset.select(max("keyCount").as("maxSize")).first(); 25 long maxSize = maxSizeRow.getAs("maxSize");//最大样本数 26 27 JavaPairRDD<String, SparseVector> sparseVectorJPR = labelFeatures.toJavaRDD().mapToPair(row -> { 28 String label = row.getString(0); 29 SparseVector features = (SparseVector) row.get(1); 30 return new Tuple2<String, SparseVector>(label, features); 31 }); 32 33 JavaPairRDD<String, List<SparseVector>> combineByKeyPairRDD = sparseVectorJPR.combineByKey(sparseVector -> { 34 List<SparseVector> list = new ArrayList<>(); 35 list.add(sparseVector); 36 return list; 37 }, (list, sparseVector) -> {list.add(sparseVector);return list;}, 38 (list_A, list_B) -> {list_A.addAll(list_B);return list_A;}); 39 40 41 JavaSparkContext jsc = JavaSparkContext.fromSparkContext(session.sparkContext()); 42 final Broadcast<ConcurrentMap<String, Long>> keyCountBroadcast = jsc.broadcast(keyCountConMap); 43 final Broadcast<Long> maxSizeBroadcast = jsc.broadcast(maxSize); 44 final Broadcast<Integer> knnBroadcast = jsc.broadcast(knn); 45 final Broadcast<Double> samplingRateBroadcast = jsc.broadcast(samplingRate); 46 final Broadcast<Double> rationToMaxBroadcast = jsc.broadcast(rationToMax); 47 48 /** 49 * JavaPairRDD<String, List<SparseVector>> 50 * JavaPairRDD<String, String> 51 * JavaRDD<Row> 52 */ 53 JavaPairRDD<String, List<SparseVector>> pairRDD = combineByKeyPairRDD 54 .filter(slt -> { 55 return slt._2().size() > 1; 56 }) 57 .mapToPair(slt -> { 58 String label = slt._1(); 59 ConcurrentMap<String, Long> keySizeConMap = keyCountBroadcast.getValue(); 60 long oldSampleSize = keySizeConMap.get(label); 61 long max = maxSizeBroadcast.getValue(); 62 double ration = rationToMaxBroadcast.getValue(); 63 int Knn = knnBroadcast.getValue(); 64 double rate = samplingRateBroadcast.getValue(); 65 if (oldSampleSize < maxSize * rationToMax) { 66 int needSampleSize = (int) (max * ration - oldSampleSize); 67 List<SparseVector> list = generateSample(slt._2(), needSampleSize, Knn, rate); 68 return new Tuple2<String, List<SparseVector>>(label, list); 69 } else { 70 return slt; 71 } 72 }); 73 74 JavaRDD<Row> javaRowRDD = pairRDD.flatMapToPair(slt -> { 75 List<Tuple2<String, SparseVector>> floatPairList = new ArrayList<>(); 76 String label = slt._1(); 77 for(SparseVector sv : slt._2()) 78 floatPairList.add(new Tuple2<String, SparseVector>(label, sv)); 79 return floatPairList.iterator(); 80 }).map(svt->{ 81 return RowFactory.create(svt._1(), svt._2()); 82 }); 83 84 Dataset<Row> resultDataset = session.createDataset(javaRowRDD.rdd(), EncoderInit.getlabelFeaturesRowEncoder()); 85 return resultDataset; 86 }