Deploying a RAID 10 disk array requires at least 4 hard disks.

On Linux, software RAID arrays are created and managed with the mdadm command.

Test: use mdadm to create a RAID 10 array named /dev/md0.

1. Create the RAID 10 array

[root@linuxprobe dev]# pwd ## show the current directory

/dev

[root@linuxprobe dev]# find md* ## look for files whose names start with md

find: ‘md*’: No such file or directory

[root@linuxprobe dev]# find sd* ## list the hard disks

sda

sda1

sda2

sdb

sdc

sdd

sde

[root@linuxprobe dev]# mdadm -Cv /dev/md0 -a yes -n 4 -l 10 /dev/sdb /dev/sdc /dev/sdd /dev/sde ## create the RAID 10 array /dev/md0

## -C creates an array, -v shows verbose output, -a yes creates the device file automatically, -n 4 builds the array from 4 disks, -l 10 selects the RAID 10 level

mdadm: layout defaults to n2

mdadm: layout defaults to n2

mdadm: chunk size defaults to 512K

mdadm: size set to 20954624K

mdadm: Defaulting to version 1.2 metadata

mdadm: array /dev/md0 started.
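To guard against running the creation step with too few disks, the command can be wrapped in a small check. The sketch below assumes bash; `build_raid10_cmd` is a hypothetical helper, not part of mdadm. It only prints the same mdadm command used above, so the result can be reviewed before it is run as root, and it refuses to build it with fewer than the 4 disks stated at the start.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print (do not execute) the mdadm command used above,
# refusing to build it when fewer than 4 member disks are given.
build_raid10_cmd() {
    local md_dev="$1"
    shift
    if [ "$#" -lt 4 ]; then
        echo "error: RAID 10 needs at least 4 disks, got $#" >&2
        return 1
    fi
    # -C create, -v verbose, -a yes create the device node,
    # -n number of member disks, -l RAID level
    echo "mdadm -Cv $md_dev -a yes -n $# -l 10 $*"
}

build_raid10_cmd /dev/md0 /dev/sdb /dev/sdc /dev/sdd /dev/sde
```

With the four disks from this example it prints exactly the command shown in step 1.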

2. Verify that the array device exists

[root@linuxprobe dev]# pwd

/dev

[root@linuxprobe dev]# find md*

md0
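Besides checking that the /dev/md0 node exists, the kernel's /proc/mdstat file reports whether the array is active and whether all members are up (`[UUUU]` for four healthy members; an underscore marks a missing one). The helpers below (`md_is_active`, `md_health`) are hypothetical and assume the usual mdstat layout, where the line carrying the `[UUUU]` token directly follows the `md0 : active ...` line; that layout may vary between kernel versions.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for reading /proc/mdstat (path overridable for testing).

# Succeeds if the named array (e.g. md0) is listed as active.
md_is_active() {
    local name="$1" mdstat="${2:-/proc/mdstat}"
    grep -Eq "^${name} : active" "$mdstat"
}

# Prints the member-status token such as [UUUU] from the line after the array header.
md_health() {
    local name="$1" mdstat="${2:-/proc/mdstat}"
    awk -v n="$name" '
        $1 == n { found = 1; next }
        found {
            for (i = 1; i <= NF; i++) if ($i ~ /^\[[U_]+]$/) print $i
            exit
        }
    ' "$mdstat"
}

# Usage (on the host with the array):
#   md_is_active md0 && md_health md0
```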

3. Format the new RAID 10 array with the ext4 file system

[root@linuxprobe dev]# mkfs.ext4 /dev/md0

mke2fs 1.42.9 (28-Dec-2013)

Filesystem label=

OS type: Linux

Block size=4096 (log=2)

Fragment size=4096 (log=2)

Stride=128 blocks, Stripe width=256 blocks

2621440 inodes, 10477312 blocks

523865 blocks (5.00%) reserved for the super user

First data block=0

Maximum filesystem blocks=2157969408

320 block groups

32768 blocks per group, 32768 fragments per group

8192 inodes per group

Superblock backups stored on blocks:

32768, 98304, 163840, 229376, 294912, 819200, 884736, 1605632, 2654208,

4096000, 7962624

Allocating group tables: done

Writing inode tables: done

Creating journal (32768 blocks): done

Writing superblocks and filesystem accounting information: done

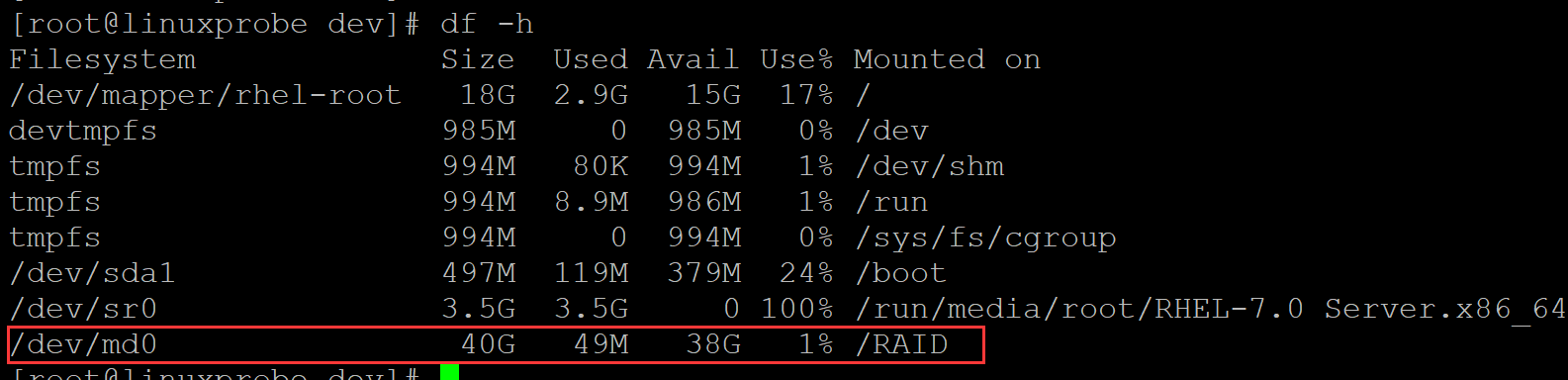
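The `Stride` and `Stripe width` values in the mkfs.ext4 output follow from the array geometry: stride is the chunk size divided by the file-system block size (512 KiB / 4 KiB = 128 blocks), and stripe width is the stride multiplied by the number of disks holding distinct data (4 disks / 2 copies = 2, giving 256 blocks). mke2fs appears to have detected these from the md device on its own; the sketch below simply redoes the arithmetic with the values from this transcript, assuming the n2 layout reported by mdadm.

```shell
#!/usr/bin/env bash
# Recompute the Stride / Stripe width reported by mkfs.ext4 above.
chunk_kib=512   # "mdadm: chunk size defaults to 512K"
block_kib=4     # "Block size=4096"
disks=4         # mdadm -n 4
copies=2        # "layout defaults to n2": every chunk is stored twice

stride=$(( chunk_kib / block_kib ))      # file-system blocks per chunk
data_disks=$(( disks / copies ))         # disks' worth of distinct data per stripe
stripe_width=$(( stride * data_disks ))  # file-system blocks per full stripe

echo "stride=$stride stripe_width=$stripe_width"
```

If the values ever need to be set by hand, mke2fs accepts them as extended options (`-E stride=128,stripe_width=256`).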

4. Mount the ext4-formatted array

[root@linuxprobe dev]# df -h ## show the currently mounted file systems

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 18G 2.9G 15G 17% /

devtmpfs 985M 0 985M 0% /dev

tmpfs 994M 80K 994M 1% /dev/shm

tmpfs 994M 8.9M 986M 1% /run

tmpfs 994M 0 994M 0% /sys/fs/cgroup

/dev/sda1 497M 119M 379M 24% /boot

/dev/sr0 3.5G 3.5G 0 100% /run/media/root/RHEL-7.0 Server.x86_64

[root@linuxprobe dev]# mkdir /RAID ## create the mount point

[root@linuxprobe dev]# mount /dev/md0 /RAID/ ## mount the array

[root@linuxprobe dev]# df -h ## check the mounted file systems again

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/rhel-root 18G 2.9G 15G 17% /

devtmpfs 985M 0 985M 0% /dev

tmpfs 994M 80K 994M 1% /dev/shm

tmpfs 994M 8.9M 986M 1% /run

tmpfs 994M 0 994M 0% /sys/fs/cgroup

/dev/sda1 497M 119M 379M 24% /boot

/dev/sr0 3.5G 3.5G 0 100% /run/media/root/RHEL-7.0 Server.x86_64

/dev/md0 40G 49M 38G 1% /RAID
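To make the mount step safe to re-run in a script, it helps to check whether /RAID is already mounted before calling mount. util-linux provides `mountpoint -q` for this; the hypothetical `is_mounted` helper below shows the same check by reading /proc/mounts, where the second field of each line is the mount point. It assumes a mount point without spaces, since the kernel escapes spaces there (as `\040`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if MOUNTPOINT appears as field 2 of the mounts file.
is_mounted() {
    local mountpoint="$1" mounts="${2:-/proc/mounts}"
    awk -v mp="$mountpoint" '$2 == mp { found = 1 } END { exit !found }' "$mounts"
}

# Idempotent version of step 4 (run as root):
#   mkdir -p /RAID
#   is_mounted /RAID || mount /dev/md0 /RAID
```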

5. View detailed information about the /dev/md0 array

[root@linuxprobe /]# mdadm -D /dev/md0

/dev/md0:

Version : 1.2

Creation Time : Wed Oct 28 21:58:25 2020

Raid Level : raid10

Array Size : 41909248 (39.97 GiB 42.92 GB)

Used Dev Size : 20954624 (19.98 GiB 21.46 GB)

Raid Devices : 4

Total Devices : 4

Persistence : Superblock is persistent

Update Time : Wed Oct 28 22:28:17 2020

State : clean

Active Devices : 4

Working Devices : 4

Failed Devices : 0

Spare Devices : 0

Layout : near=2

Chunk Size : 512K

Name : linuxprobe.com:0 (local to host linuxprobe.com)

UUID : 468018e0:1f90d057:a9944b8e:ab4e3e85

Events : 17

Number Major Minor RaidDevice State

0 8 16 0 active sync /dev/sdb

1 8 32 1 active sync /dev/sdc

2 8 48 2 active sync /dev/sdd

3 8 64 3 active sync /dev/sde
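The `Array Size` above can be checked by hand: with the near=2 layout every chunk is stored twice, so usable capacity is the number of member disks times the per-disk size, divided by 2. The sketch below plugs in the values from the `mdadm -D` output (sizes in KiB).

```shell
#!/usr/bin/env bash
# Check "Array Size" against the RAID 10 (near=2) capacity formula:
#   usable = raid devices * per-disk size / copies
used_dev_kib=20954624   # "Used Dev Size" from mdadm -D, in KiB
raid_devices=4          # "Raid Devices"
copies=2                # "Layout : near=2"

array_kib=$(( raid_devices * used_dev_kib / copies ))
echo "array size: ${array_kib} KiB"
awk -v k="$array_kib" 'BEGIN { printf "%.2f GiB\n", k / 1048576 }'
```

This gives 41909248 KiB (39.97 GiB), matching the `Array Size` line: half of the 4 × 20 GB raw capacity. The same figure accounts for the df output in step 4, where `df -h` shows roughly 40G, and Avail is about 2G below Size mainly because mkfs.ext4 reserved 523865 blocks (5%, about 2 GiB) for the super user.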

6. Mount the array automatically at boot

[root@linuxprobe dev]# cat /etc/fstab ## view the current configuration file

#

# /etc/fstab

# Created by anaconda on Wed Oct 28 20:19:08 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/rhel-root / xfs defaults 1 1

UUID=2f2c5a2f-df13-4b36-99c3-9edd1b976d40 /boot xfs defaults 1 2

/dev/mapper/rhel-swap swap swap defaults 0 0

[root@linuxprobe dev]# echo -e "/dev/md0\t/RAID\text4\tdefaults\t0\t0" >> /etc/fstab ## append the entry to the configuration file (editing it with vim also works)

[root@linuxprobe dev]# cat /etc/fstab ## view the modified configuration file

#

# /etc/fstab

# Created by anaconda on Wed Oct 28 20:19:08 2020

#

# Accessible filesystems, by reference, are maintained under '/dev/disk'

# See man pages fstab(5), findfs(8), mount(8) and/or blkid(8) for more info

#

/dev/mapper/rhel-root / xfs defaults 1 1

UUID=2f2c5a2f-df13-4b36-99c3-9edd1b976d40 /boot xfs defaults 1 2

/dev/mapper/rhel-swap swap swap defaults 0 0

/dev/md0 /RAID ext4 defaults 0 0
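Running the `echo ... >> /etc/fstab` line twice would leave a duplicate entry. The hypothetical `add_fstab_entry` helper below appends the same tab-separated line only when no uncommented entry already uses that mount point. As a suggestion beyond the original setup: md device names are not guaranteed to stay the same across reboots (an array can reassemble as, for example, /dev/md127), so referencing the file system as `UUID=...` (the value reported by `blkid /dev/md0`) is a common, more robust choice.

```shell
#!/usr/bin/env bash
# Hypothetical helper: append an fstab line unless the mount point is already listed.
add_fstab_entry() {
    local dev="$1" mp="$2" fstype="$3" fstab="${4:-/etc/fstab}"
    # Field 2 of every non-comment line is the mount point.
    if awk -v mp="$mp" '!/^[ \t]*#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
        echo "entry for $mp already present in $fstab" >&2
        return 0
    fi
    printf '%s\t%s\t%s\tdefaults\t0\t0\n' "$dev" "$mp" "$fstype" >> "$fstab"
}

# Usage (as root):
#   add_fstab_entry /dev/md0 /RAID ext4
#   mount -a    # mounts all fstab entries; a quick check before rebooting
```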