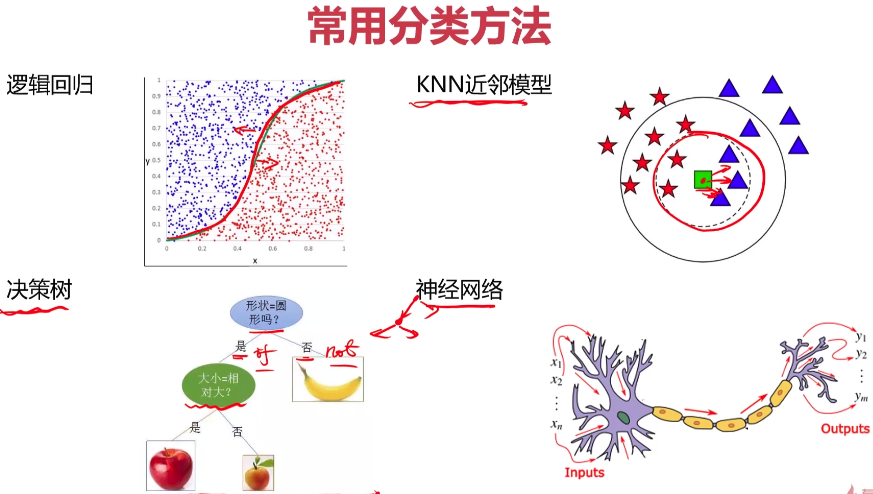

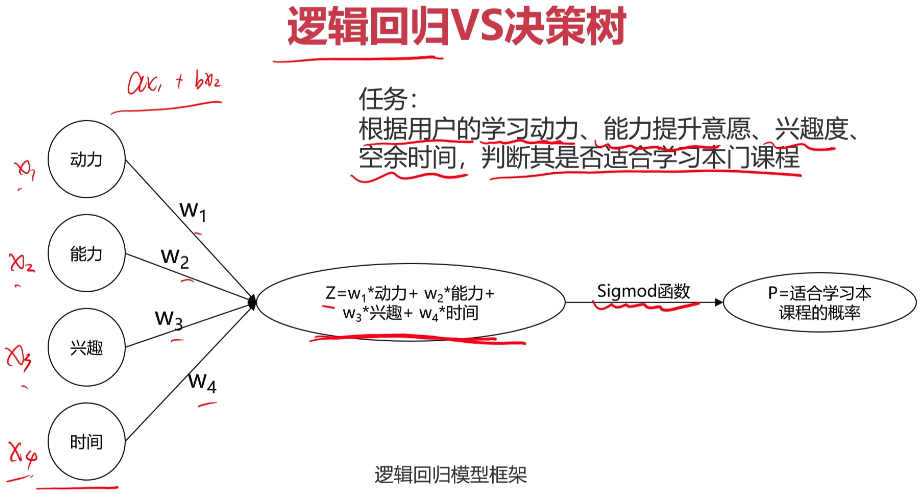

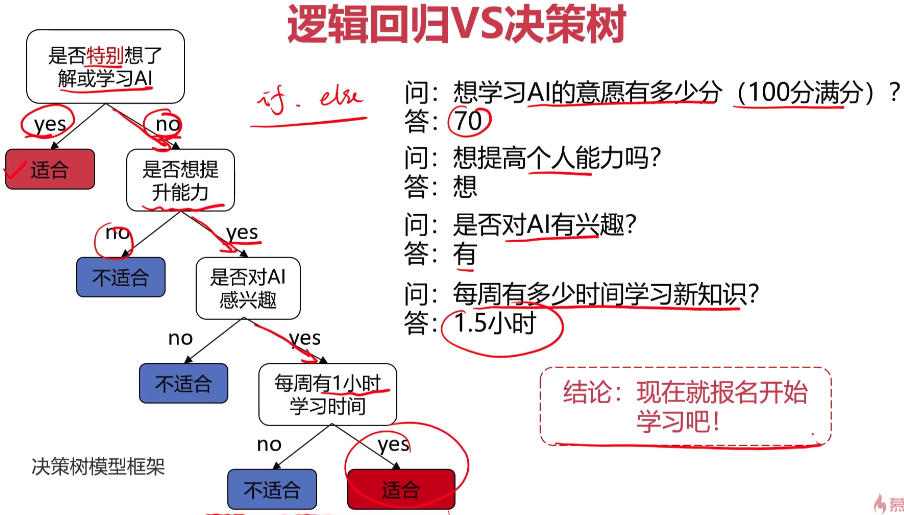

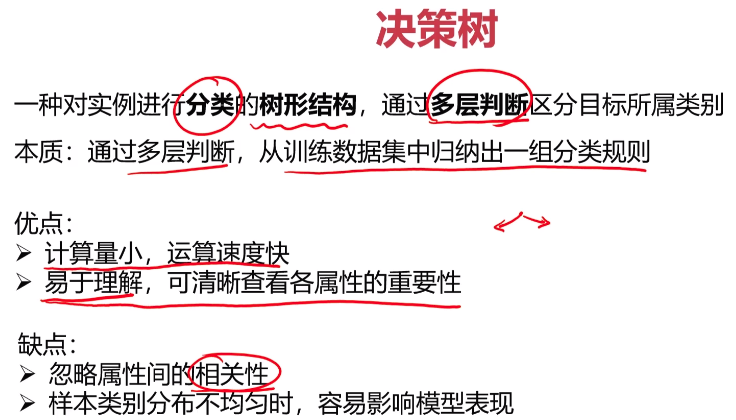

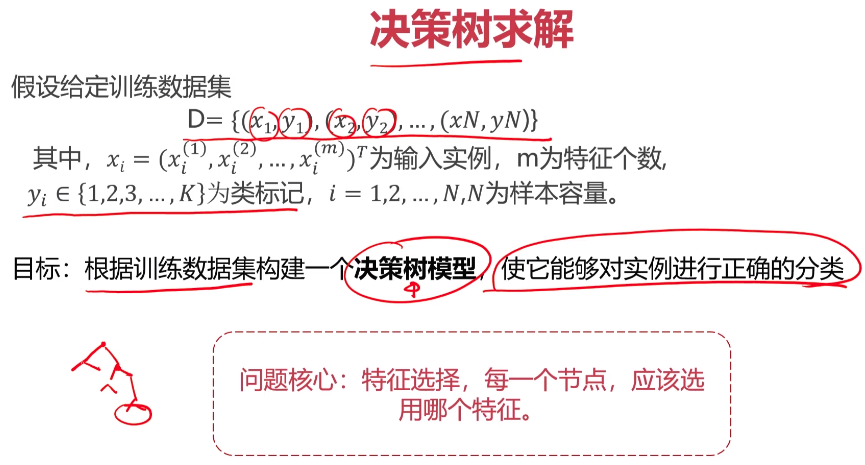

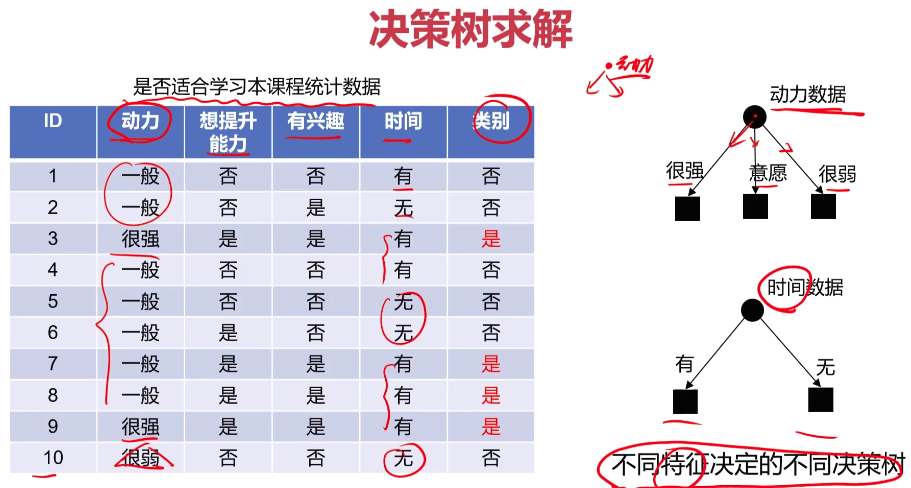

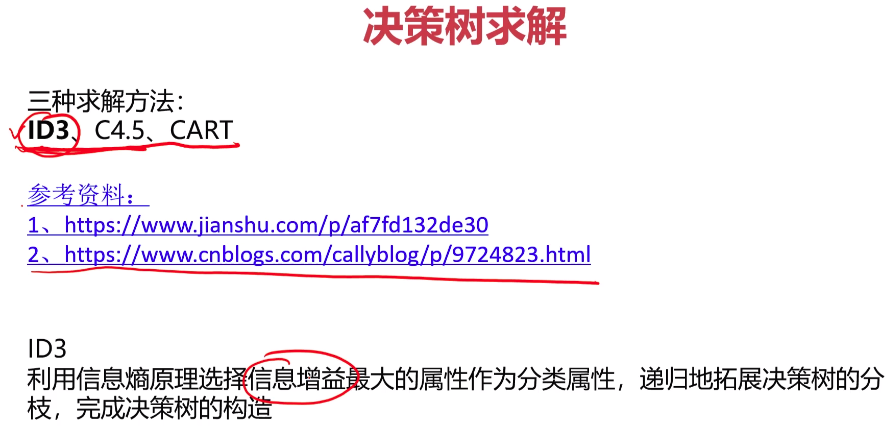

- Decision trees

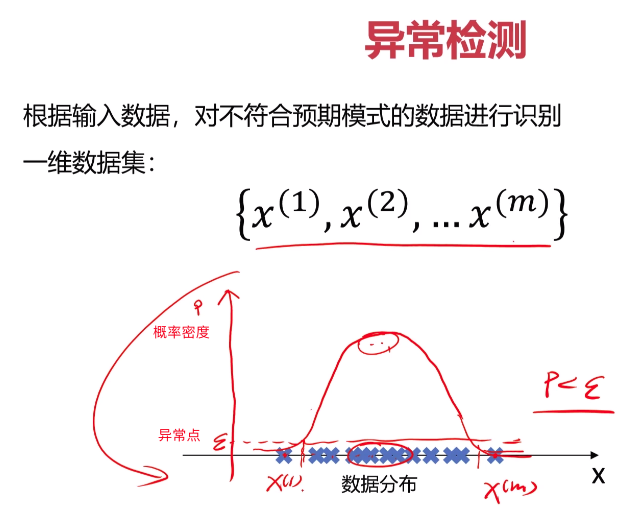

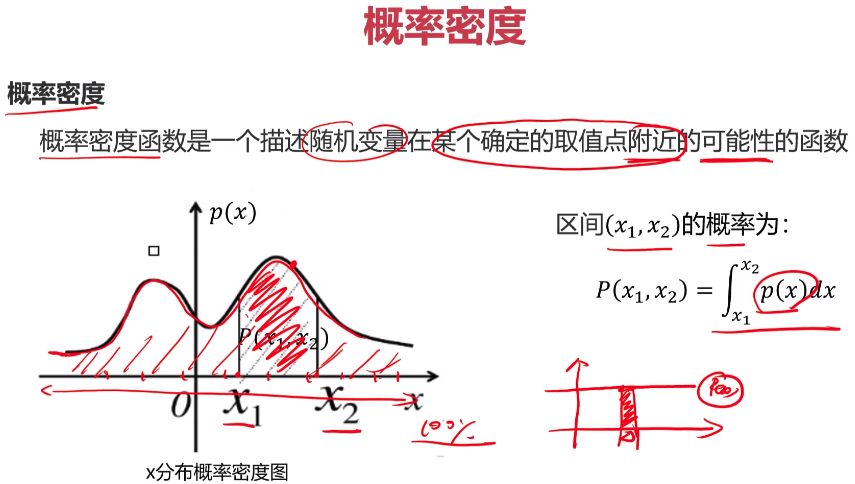

- Anomaly detection

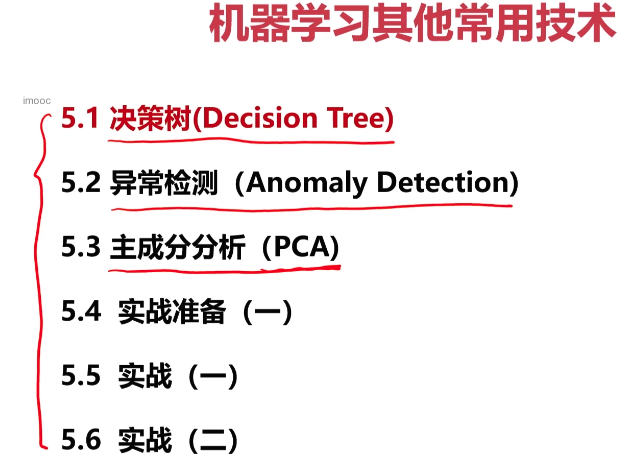

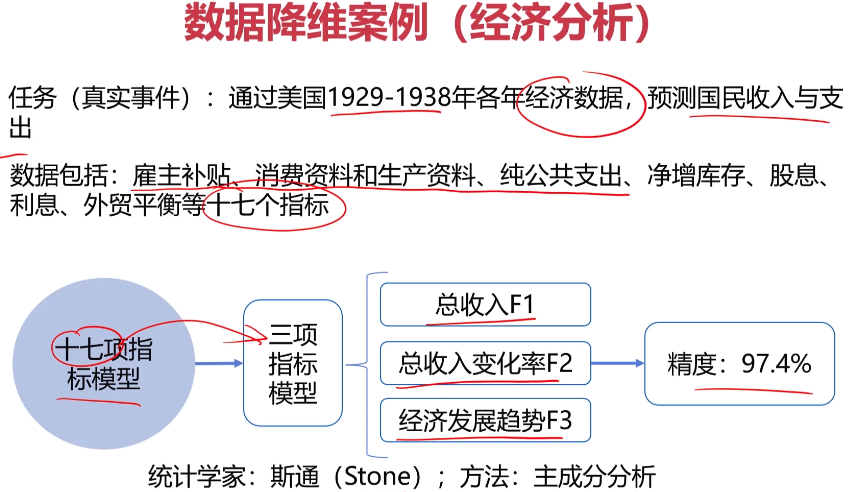

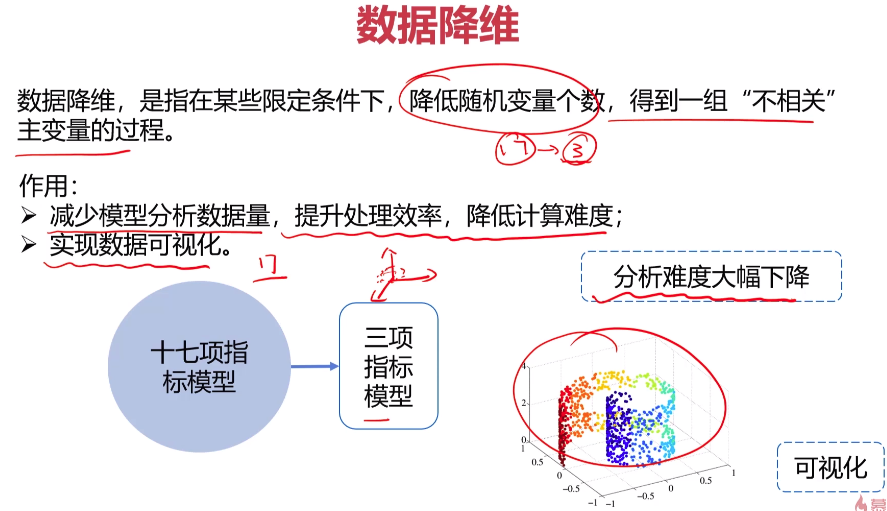

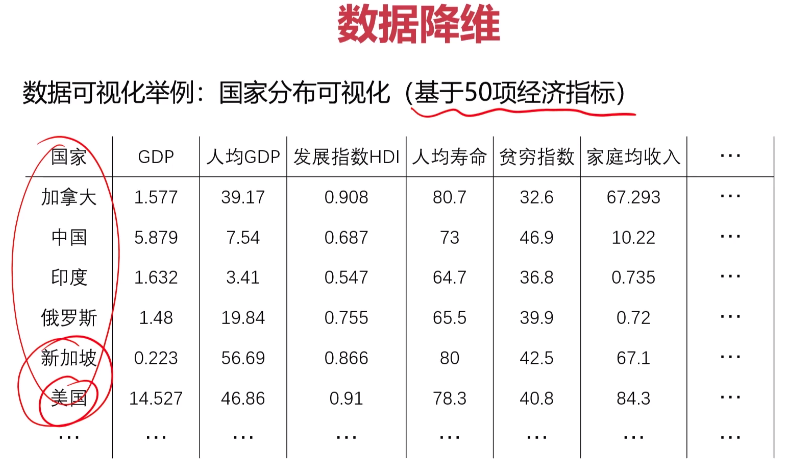

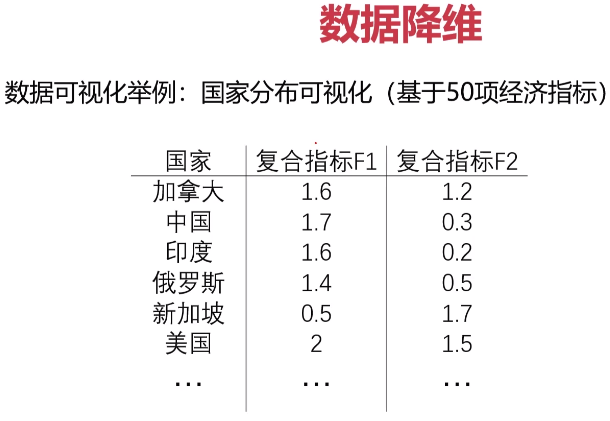

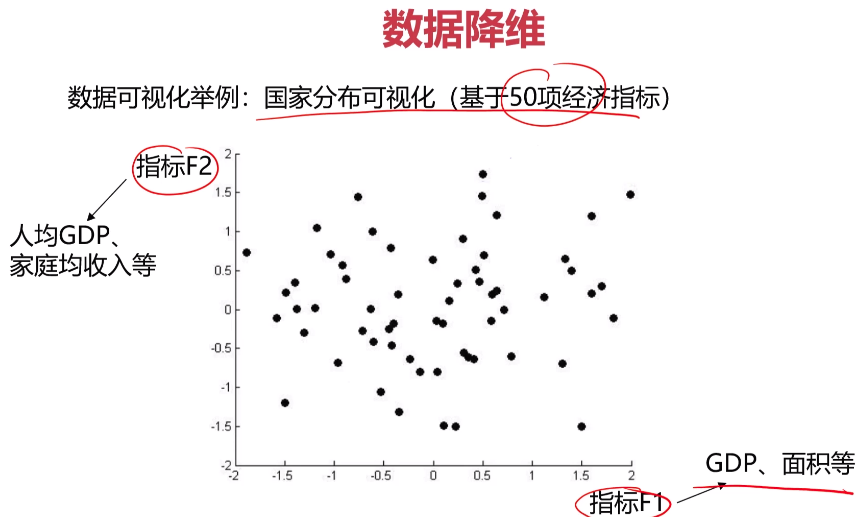

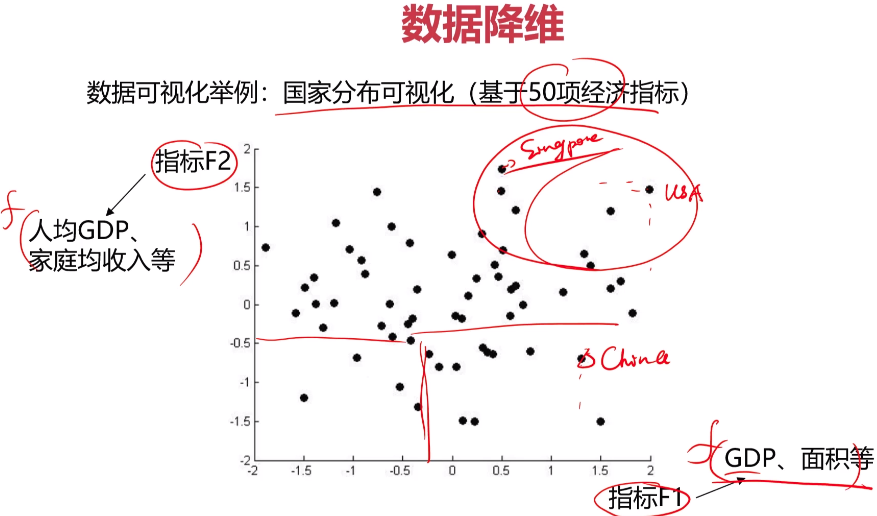

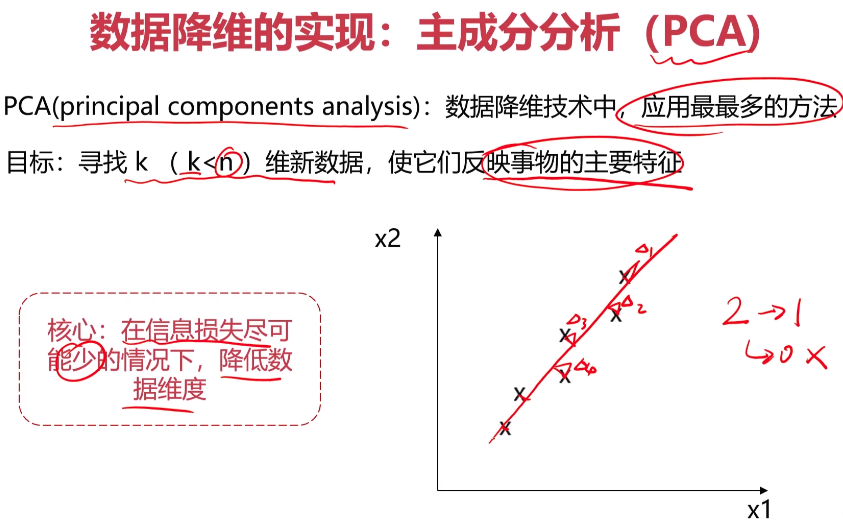

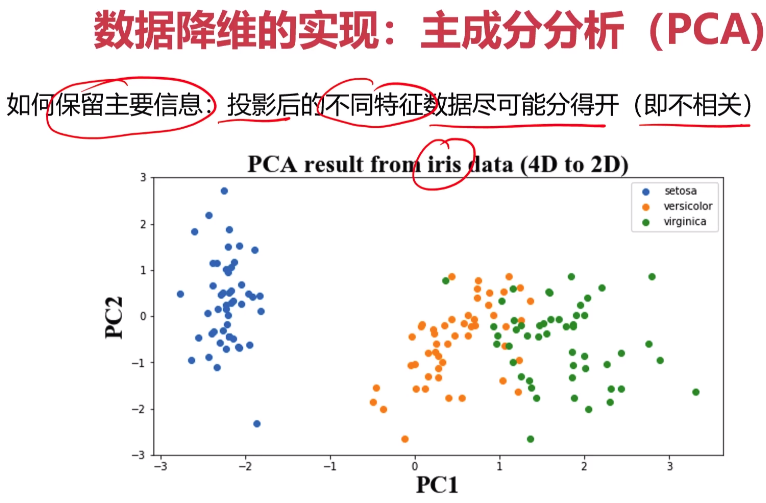

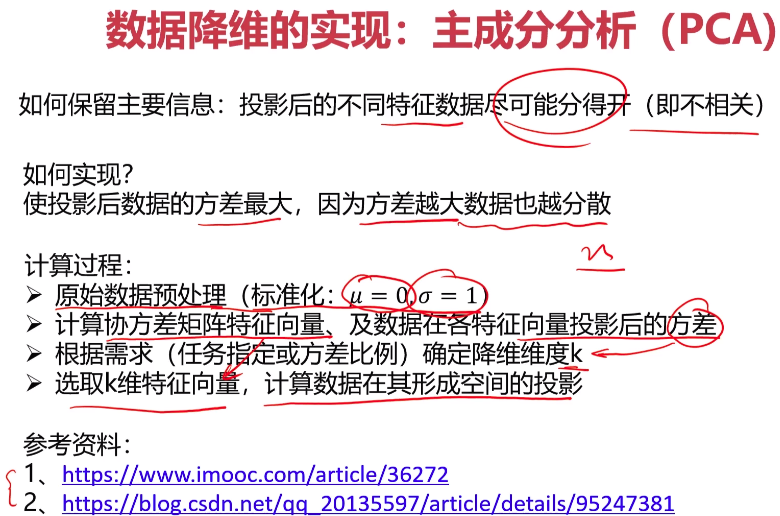

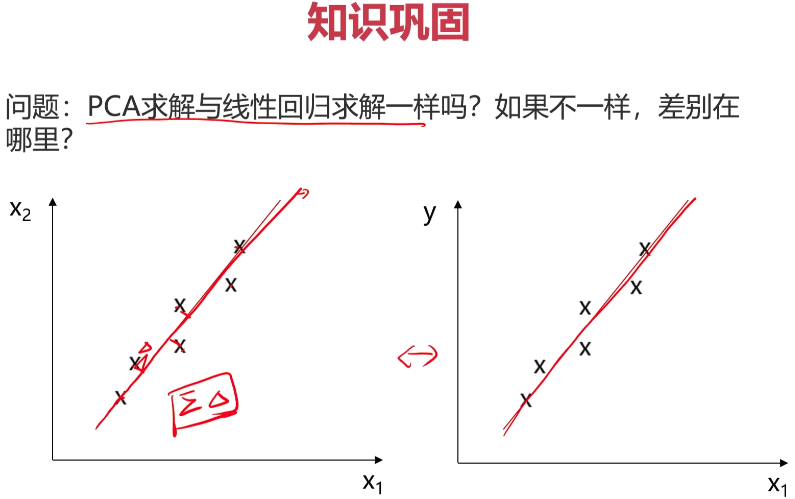

- Principal component analysis (PCA)

- Hands-on preparation

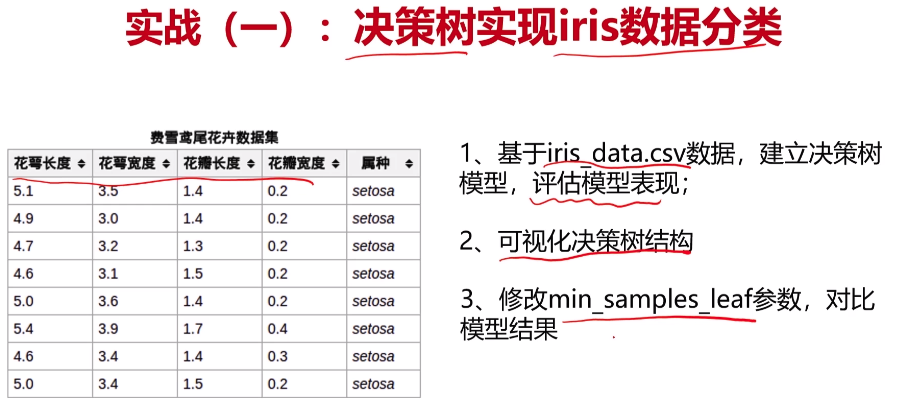

- Hands-on exercise 1

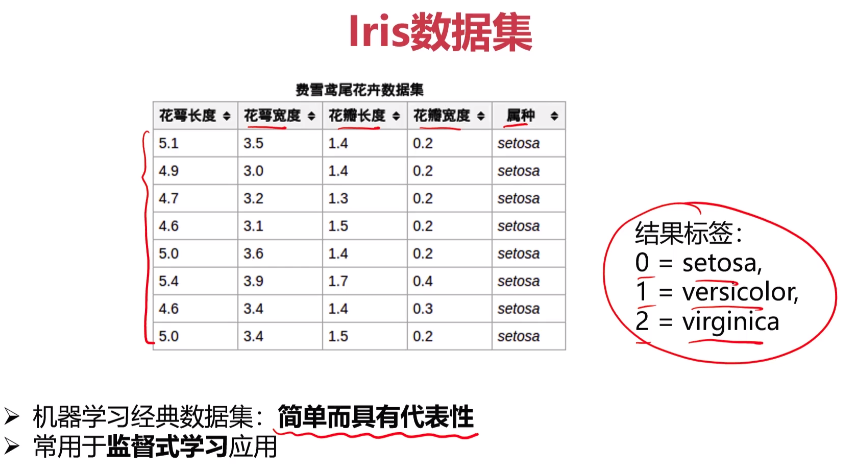

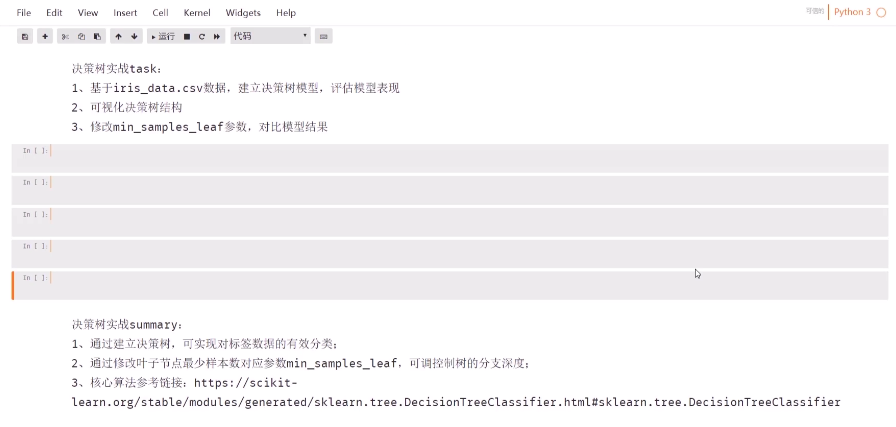

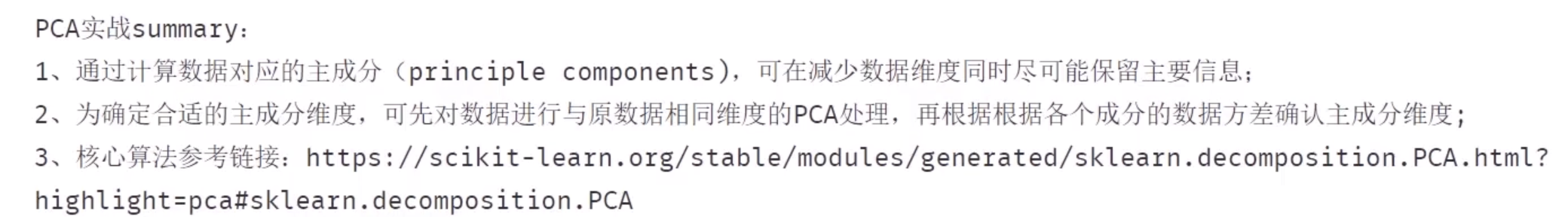

Data file: the contents of iris_data.csv are:

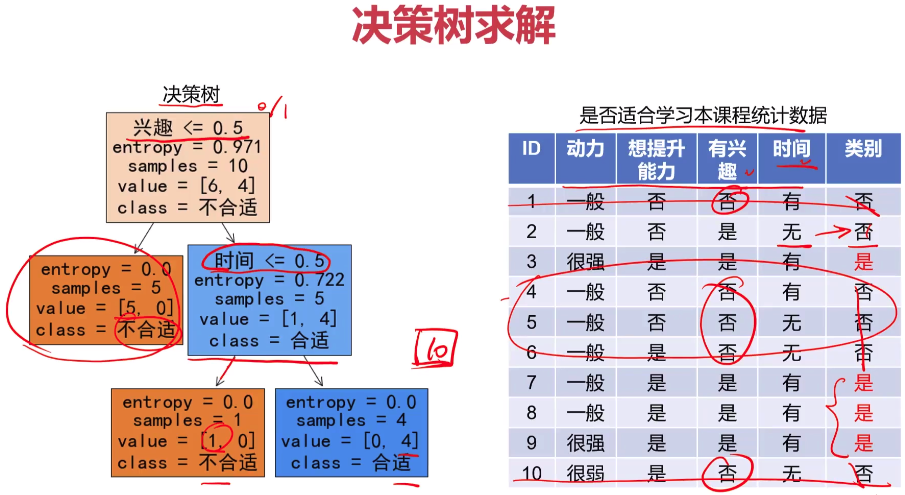

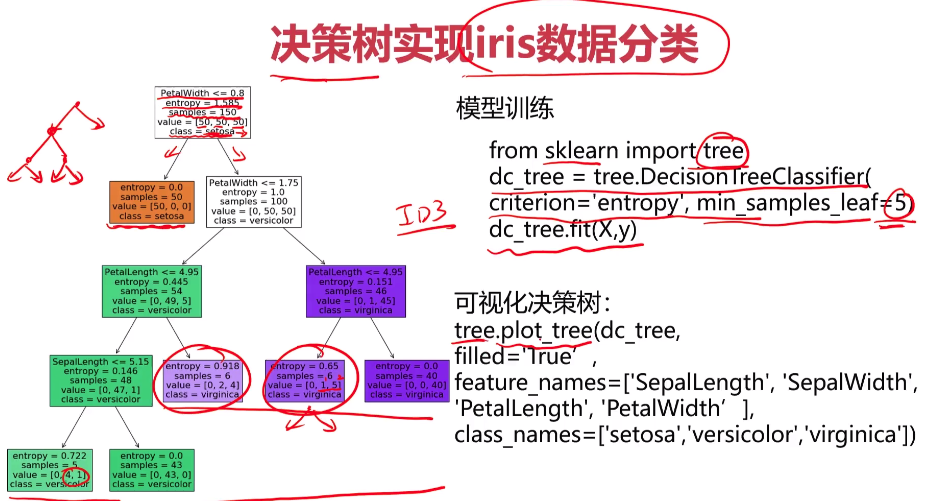

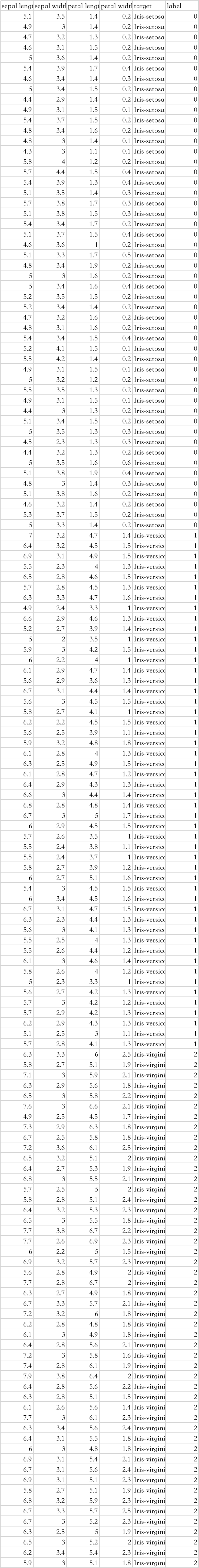

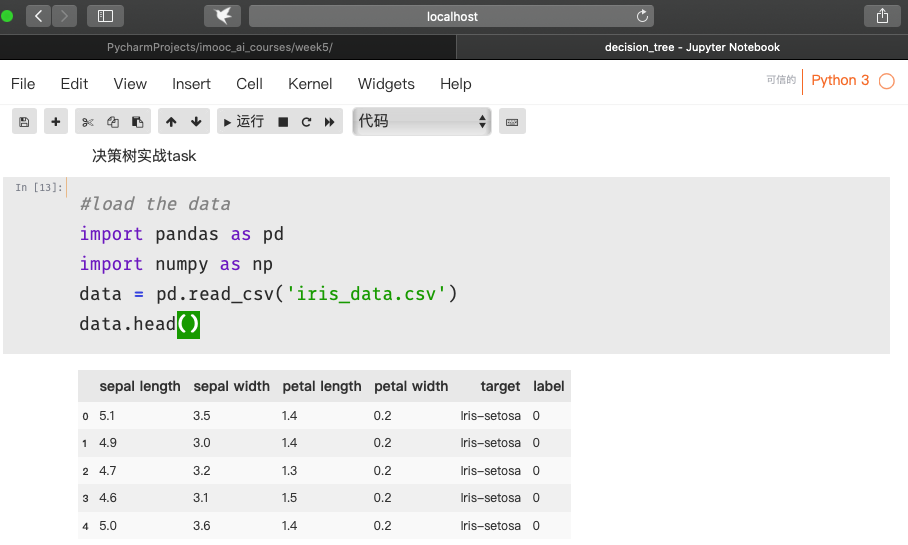

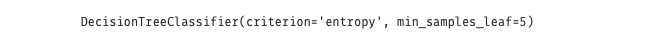

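```python
# Establish the decision tree model
from sklearn import tree

# criterion='entropy': choose splits by information gain (the library default is 'gini')
# min_samples_leaf=5: every leaf must contain at least 5 samples
dc_tree = tree.DecisionTreeClassifier(criterion='entropy', min_samples_leaf=5)
dc_tree.fit(x, y)
```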
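The cell above relies on `x` and `y` that were prepared in earlier cells (shown only as screenshots). As a minimal, self-contained sketch, the same model can be fitted on scikit-learn's bundled copy of the Iris data. Using `load_iris` instead of `iris_data.csv`, and fixing `random_state=0`, are assumptions made here so that the snippet runs on its own.

```python
from sklearn import tree
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score

# Assumption: load_iris stands in for iris_data.csv (150 samples, 4 features, 3 classes).
iris = load_iris()
x, y = iris.data, iris.target

# Same hyperparameters as in the notes; random_state is added only for repeatability.
dc_tree = tree.DecisionTreeClassifier(
    criterion="entropy", min_samples_leaf=5, random_state=0
)
dc_tree.fit(x, y)

# Accuracy on the training data itself (not a held-out estimate).
train_accuracy = accuracy_score(y, dc_tree.predict(x))
print(f"training accuracy: {train_accuracy:.3f}")
print(f"leaves: {dc_tree.get_n_leaves()}, depth: {dc_tree.get_depth()}")
```

Because the score is computed on the data the tree was trained on, it should be read as an upper bound on how well the model generalizes.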

```python
# Visualize the tree (%matplotlib inline is a Jupyter magic)
%matplotlib inline
from matplotlib import pyplot as plt

fig = plt.figure(figsize=(20, 20))  # figure size
# Arguments: fitted model, fill nodes with class colors, feature names, class names
tree.plot_tree(dc_tree, filled=True,
               feature_names=['SepalLength', 'SepalWidth', 'PetalLength', 'PetalWidth'],
               class_names=['setosa', 'versicolor', 'virginica'])
```

```
[Text(496.0, 978.48, 'PetalWidth <= 0.8 entropy = 1.585 samples = 150 value = [50, 50, 50] class = setosa'),
 Text(372.0, 761.0400000000001, 'entropy = 0.0 samples = 50 value = [50, 0, 0] class = setosa'),
 Text(620.0, 761.0400000000001, 'PetalWidth <= 1.75 entropy = 1.0 samples = 100 value = [0, 50, 50] class = versicolor'),
 Text(372.0, 543.6, 'PetalLength <= 4.95 entropy = 0.445 samples = 54 value = [0, 49, 5] class = versicolor'),
 Text(248.0, 326.1600000000001, 'SepalLength <= 5.15 entropy = 0.146 samples = 48 value = [0, 47, 1] class = versicolor'),
 Text(124.0, 108.72000000000003, 'entropy = 0.722 samples = 5 value = [0, 4, 1] class = versicolor'),
 Text(372.0, 108.72000000000003, 'entropy = 0.0 samples = 43 value = [0, 43, 0] class = versicolor'),
 Text(496.0, 326.1600000000001, 'entropy = 0.918 samples = 6 value = [0, 2, 4] class = virginica'),
 Text(868.0, 543.6, 'PetalLength <= 4.95 entropy = 0.151 samples = 46 value = [0, 1, 45] class = virginica'),
 Text(744.0, 326.1600000000001, 'entropy = 0.65 samples = 6 value = [0, 1, 5] class = virginica'),
 Text(992.0, 326.1600000000001, 'entropy = 0.0 samples = 40 value = [0, 0, 40] class = virginica')]
```
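The `entropy` figures in the node descriptions above can be reproduced by hand from the `value` class counts. The sketch below uses a small helper, `entropy`, which is a hypothetical name introduced here for illustration and not part of scikit-learn. It also computes the information gain of the root split `PetalWidth <= 0.8`.

```python
import math


def entropy(counts):
    """Shannon entropy (base 2) of a list of per-class sample counts."""
    total = sum(counts)
    probs = [c / total for c in counts if c > 0]  # empty classes contribute nothing
    return sum(-p * math.log2(p) for p in probs)


# Class counts copied from the node descriptions printed by plot_tree.
root = entropy([50, 50, 50])   # root node
left = entropy([50, 0, 0])     # PetalWidth <= 0.8
right = entropy([0, 50, 50])   # PetalWidth > 0.8

# Information gain = parent entropy minus the sample-weighted child entropies.
gain = root - (50 / 150) * left - (100 / 150) * right

print(f"root entropy: {root:.3f}")
print(f"entropy of [0, 49, 5]: {entropy([0, 49, 5]):.3f}")
print(f"information gain of the first split: {gain:.3f}")
```

A pure node such as `[50, 0, 0]` has entropy 0, which is why the tree stops splitting there.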

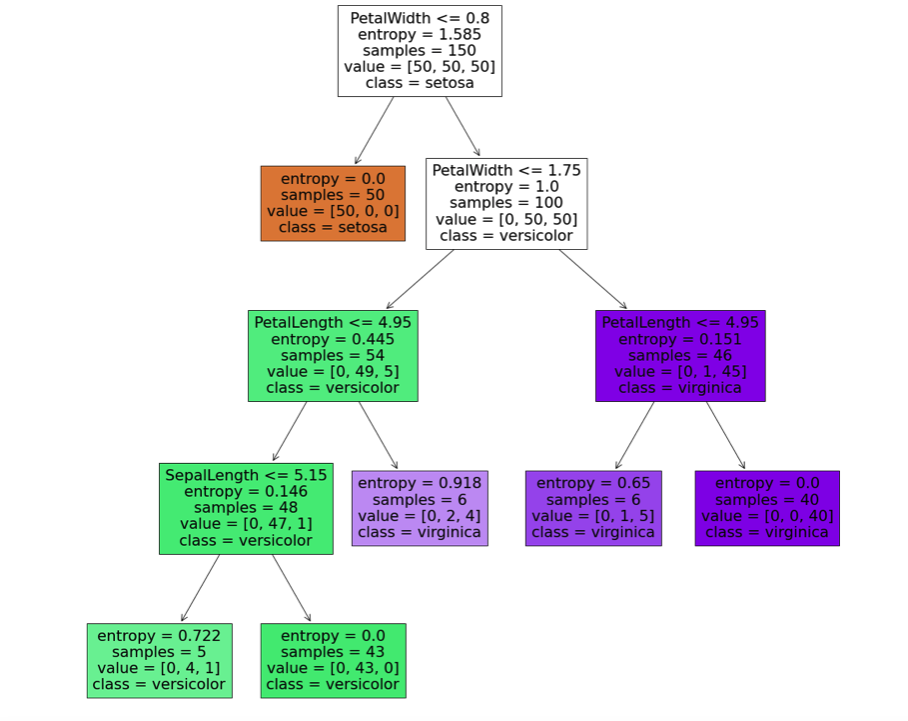

```python
# Rebuild the classifier with min_samples_leaf=1 (a leaf may hold a single sample)
# criterion='entropy': choose splits by information gain (the library default is 'gini')
dc_tree = tree.DecisionTreeClassifier(criterion='entropy', min_samples_leaf=1)
dc_tree.fit(x, y)

fig = plt.figure(figsize=(20, 20))  # figure size
# Arguments: fitted model, fill nodes with class colors, feature names, class names
tree.plot_tree(dc_tree, filled=True,
               feature_names=['SepalLength', 'SepalWidth', 'PetalLength', 'PetalWidth'],
               class_names=['setosa', 'versicolor', 'virginica'])
```

```
[Text(558.0, 996.6, 'PetalWidth <= 0.8 entropy = 1.585 samples = 150 value = [50, 50, 50] class = setosa'),
 Text(472.15384615384613, 815.4000000000001, 'entropy = 0.0 samples = 50 value = [50, 0, 0] class = setosa'),
 Text(643.8461538461538, 815.4000000000001, 'PetalWidth <= 1.75 entropy = 1.0 samples = 100 value = [0, 50, 50] class = versicolor'),
 Text(343.38461538461536, 634.2, 'PetalLength <= 4.95 entropy = 0.445 samples = 54 value = [0, 49, 5] class = versicolor'),
 Text(171.69230769230768, 453.0, 'PetalWidth <= 1.65 entropy = 0.146 samples = 48 value = [0, 47, 1] class = versicolor'),
 Text(85.84615384615384, 271.79999999999995, 'entropy = 0.0 samples = 47 value = [0, 47, 0] class = versicolor'),
 Text(257.53846153846155, 271.79999999999995, 'entropy = 0.0 samples = 1 value = [0, 0, 1] class = virginica'),
 Text(515.0769230769231, 453.0, 'PetalWidth <= 1.55 entropy = 0.918 samples = 6 value = [0, 2, 4] class = virginica'),
 Text(429.23076923076917, 271.79999999999995, 'entropy = 0.0 samples = 3 value = [0, 0, 3] class = virginica'),
 Text(600.9230769230769, 271.79999999999995, 'SepalLength <= 6.95 entropy = 0.918 samples = 3 value = [0, 2, 1] class = versicolor'),
 Text(515.0769230769231, 90.59999999999991, 'entropy = 0.0 samples = 2 value = [0, 2, 0] class = versicolor'),
 Text(686.7692307692307, 90.59999999999991, 'entropy = 0.0 samples = 1 value = [0, 0, 1] class = virginica'),
 Text(944.3076923076923, 634.2, 'PetalLength <= 4.85 entropy = 0.151 samples = 46 value = [0, 1, 45] class = virginica'),
 Text(858.4615384615383, 453.0, 'SepalLength <= 5.95 entropy = 0.918 samples = 3 value = [0, 1, 2] class = virginica'),
 Text(772.6153846153845, 271.79999999999995, 'entropy = 0.0 samples = 1 value = [0, 1, 0] class = versicolor'),
 Text(944.3076923076923, 271.79999999999995, 'entropy = 0.0 samples = 2 value = [0, 0, 2] class = virginica'),
 Text(1030.1538461538462, 453.0, 'entropy = 0.0 samples = 43 value = [0, 0, 43] class = virginica')]
```
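Lowering `min_samples_leaf` from 5 to 1 lets the tree keep splitting until every leaf is pure, which is why the second printout has more nodes. A rough way to see the effect without reading the plots is to compare leaf counts and depth directly. The sketch below assumes scikit-learn's bundled Iris data as a stand-in for `iris_data.csv`, and the `results` dictionary is a name chosen here for illustration.

```python
from sklearn import tree
from sklearn.datasets import load_iris

# Assumption: load_iris stands in for iris_data.csv.
x, y = load_iris(return_X_y=True)

results = {}
for leaf_size in (5, 1):
    model = tree.DecisionTreeClassifier(
        criterion="entropy", min_samples_leaf=leaf_size, random_state=0
    ).fit(x, y)
    # Record tree size and accuracy on the training data for each setting.
    results[leaf_size] = {
        "leaves": model.get_n_leaves(),
        "depth": model.get_depth(),
        "train_accuracy": model.score(x, y),
    }
    print(leaf_size, results[leaf_size])
```

The larger tree fits the training data more closely, but the extra single-sample leaves are a common sign of overfitting, so a higher training score here does not by itself mean a better model.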

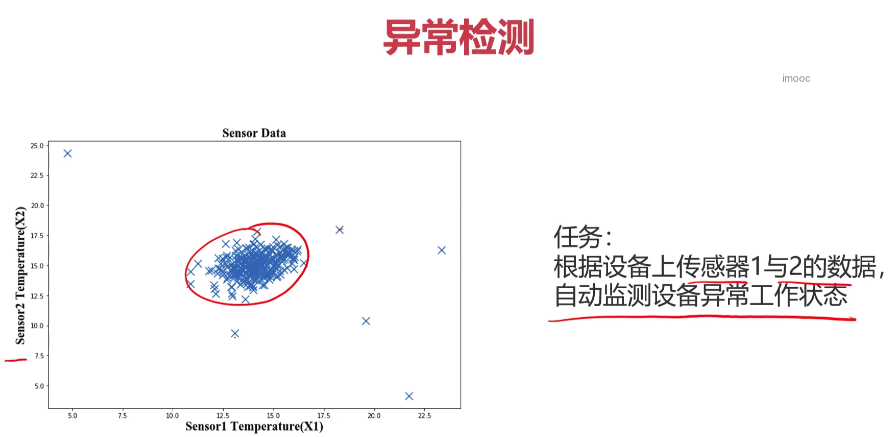

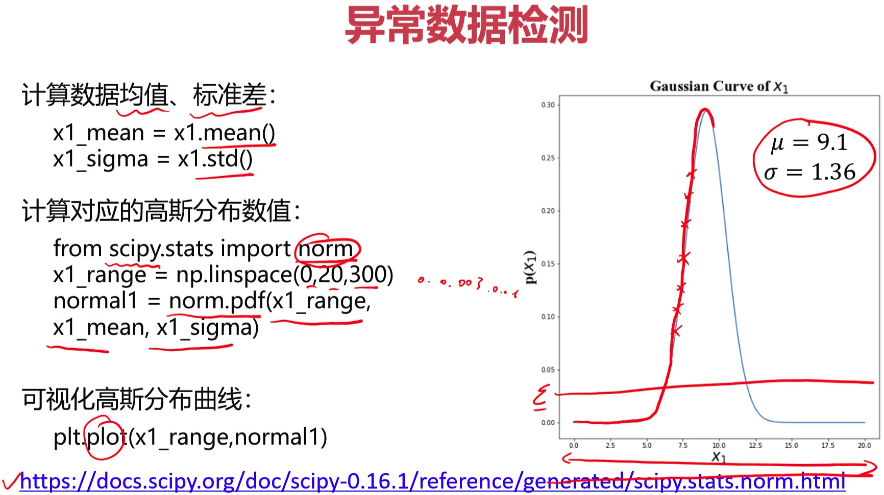

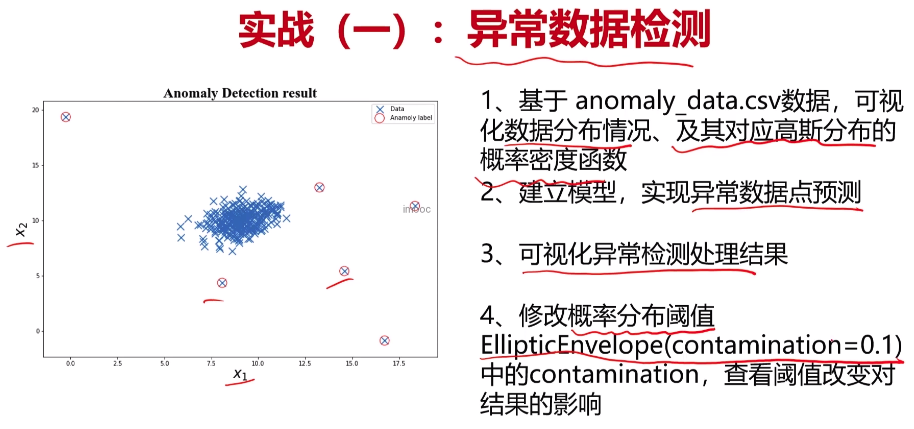

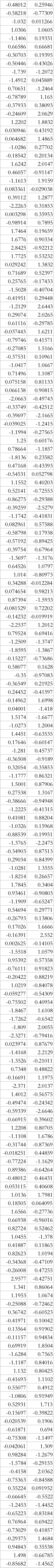

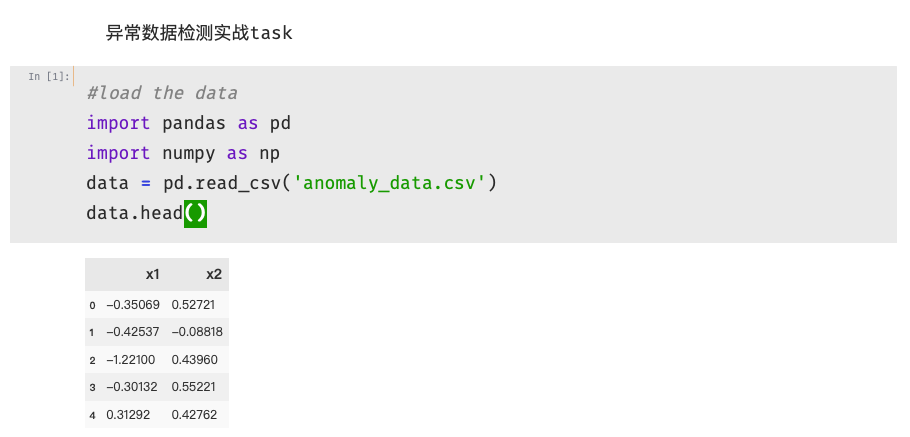

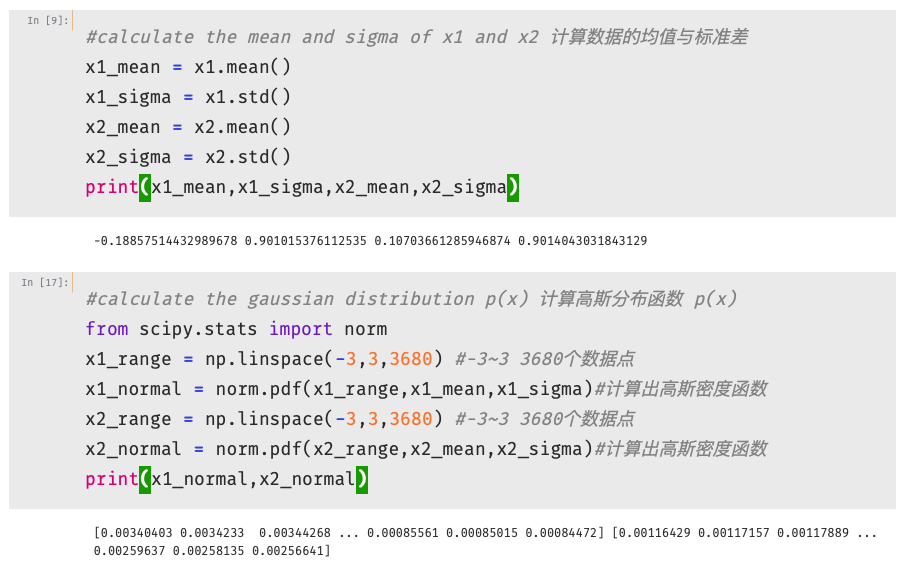

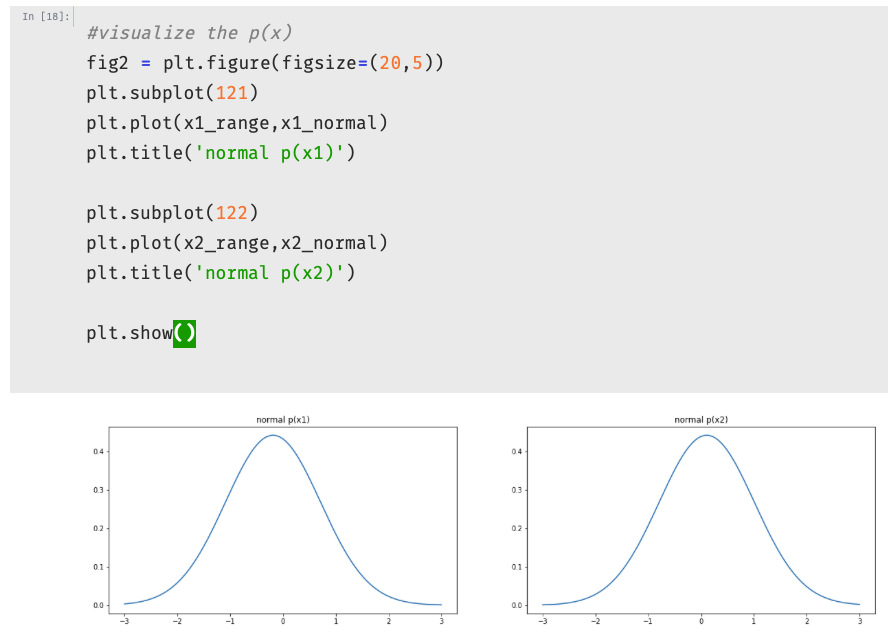

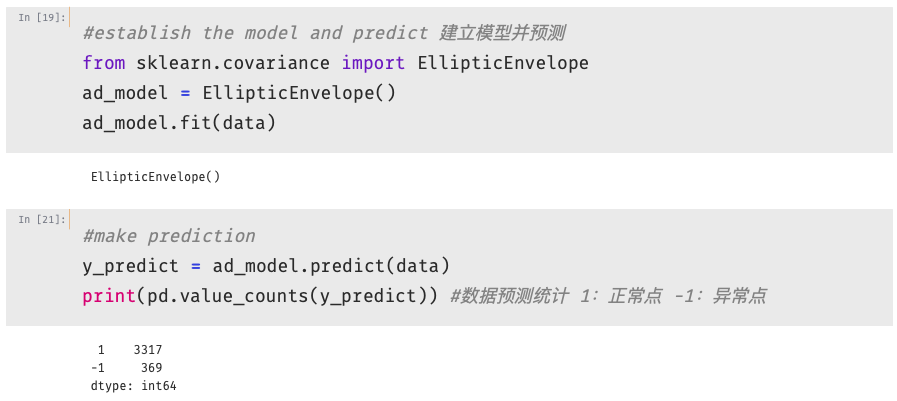

- Anomaly detection in practice

Data file: the contents of anomaly_data.csv are:

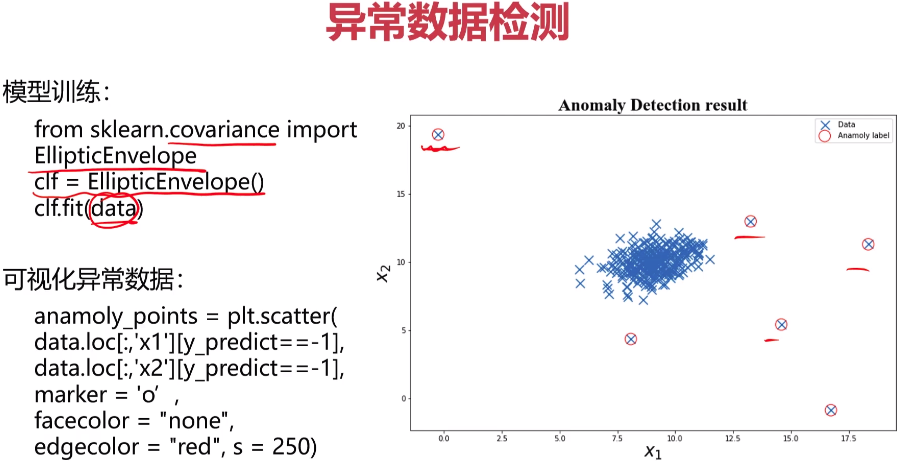

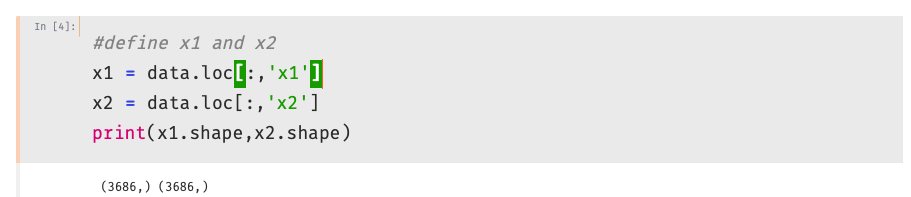

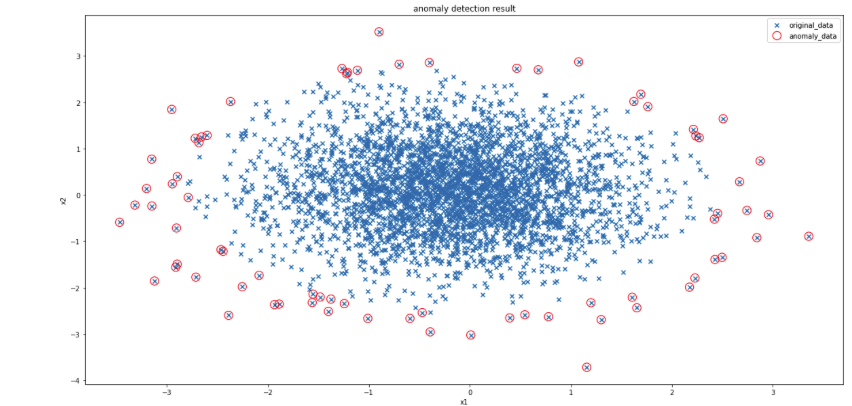

```python
# Visualize the prediction result
fig4 = plt.figure(figsize=(20, 10))
# All data points, drawn with 'x' markers
original_data = plt.scatter(data.loc[:, 'x1'], data.loc[:, 'x2'], marker='x')
# Points predicted as anomalies (label -1): hollow red circles, marker size 150
anomaly_data = plt.scatter(data.loc[:, 'x1'][y_predict == -1], data.loc[:, 'x2'][y_predict == -1],
                           marker='o', facecolor='none', edgecolor='red', s=150)
plt.title('anomaly detection result')
plt.xlabel('x1')
plt.ylabel('x2')
plt.legend((original_data, anomaly_data), ('original_data', 'anomaly_data'))  # legend entries
plt.show()
```

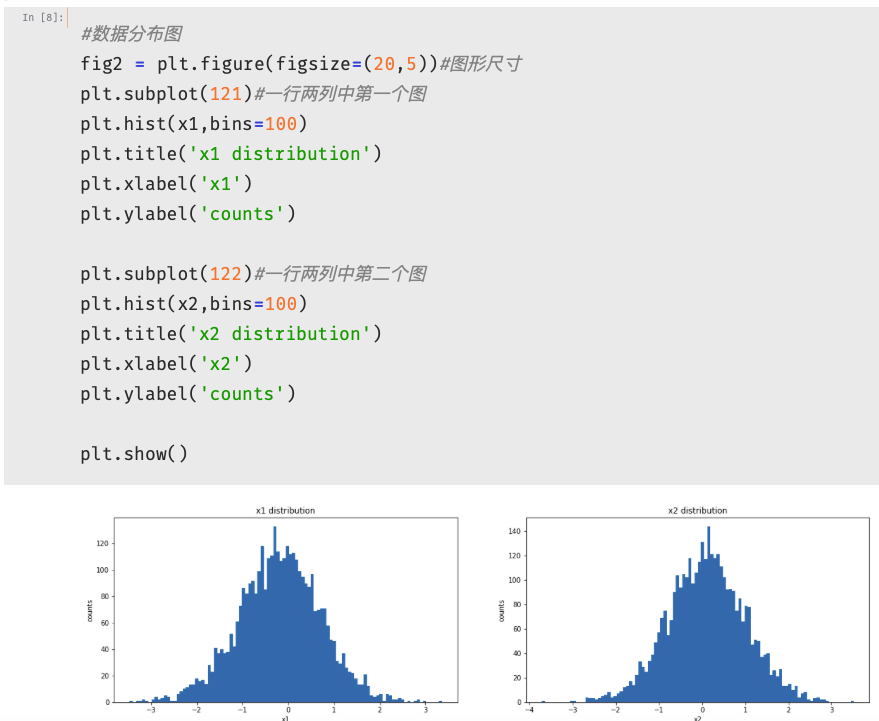

```python
# Establish the model with a different contamination level and predict
from sklearn.covariance import EllipticEnvelope

# contamination: expected fraction of anomalies in the data (the library default is 0.1)
ad_model = EllipticEnvelope(contamination=0.02)
ad_model.fit(data)
y_predict = ad_model.predict(data)

# Visualize the prediction result after changing the contamination level
fig5 = plt.figure(figsize=(20, 10))
# All data points, drawn with 'x' markers
original_data = plt.scatter(data.loc[:, 'x1'], data.loc[:, 'x2'], marker='x')
# Points predicted as anomalies (label -1): hollow red circles, marker size 150
anomaly_data = plt.scatter(data.loc[:, 'x1'][y_predict == -1], data.loc[:, 'x2'][y_predict == -1],
                           marker='o', facecolor='none', edgecolor='red', s=150)
plt.title('anomaly detection result')
plt.xlabel('x1')
plt.ylabel('x2')
plt.legend((original_data, anomaly_data), ('original_data', 'anomaly_data'))  # legend entries
plt.show()
```
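The `contamination` parameter sets the share of training points that `EllipticEnvelope` labels as anomalies (`-1`), so lowering it from the default to 0.02 flags fewer points. The sketch below illustrates this on synthetic data. The generated `points` array, the cluster center, and `random_state=0` are assumptions made here, since the contents of `anomaly_data.csv` are only shown as screenshots.

```python
import numpy as np
from sklearn.covariance import EllipticEnvelope

# Assumption: synthetic 2-D data stands in for anomaly_data.csv.
rng = np.random.default_rng(0)
inliers = rng.normal(loc=[8.0, 8.0], scale=1.0, size=(300, 2))  # dense Gaussian cluster
outliers = rng.uniform(low=0.0, high=16.0, size=(6, 2))         # a few scattered points
points = np.vstack([inliers, outliers])

flagged = {}
for contamination in (0.1, 0.02):
    model = EllipticEnvelope(contamination=contamination, random_state=0)
    y_predict = model.fit(points).predict(points)  # 1 = normal, -1 = anomaly
    flagged[contamination] = int((y_predict == -1).sum())
    print(f"contamination={contamination}: {flagged[contamination]} of {len(points)} flagged")
```

Since the threshold is chosen from the training scores, the number of flagged points tracks `contamination` rather than the true number of anomalies, so the value is best set from prior knowledge of the data.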

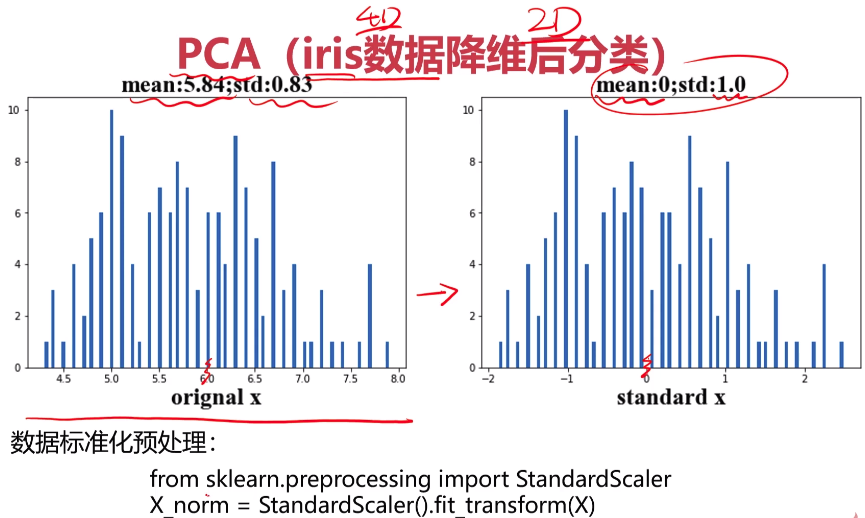

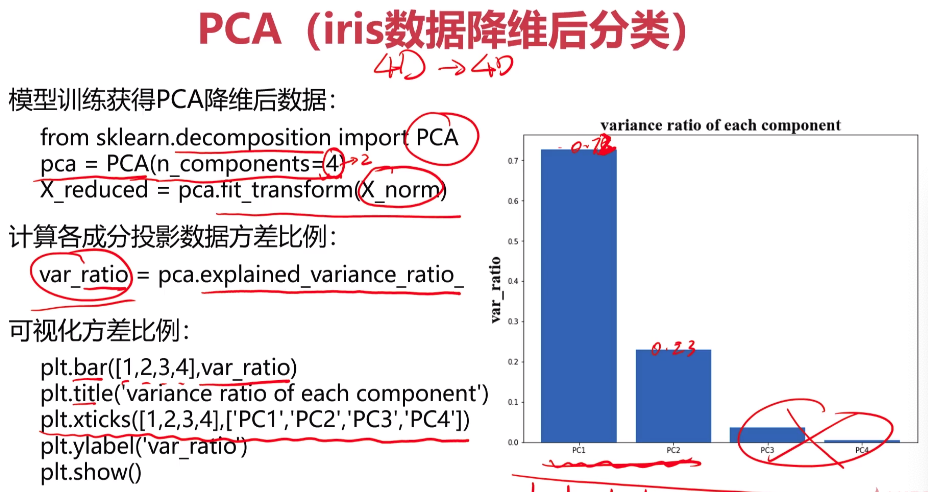

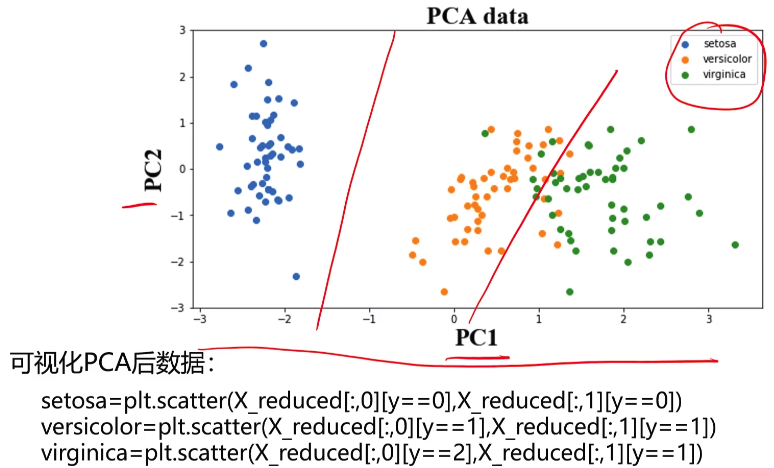

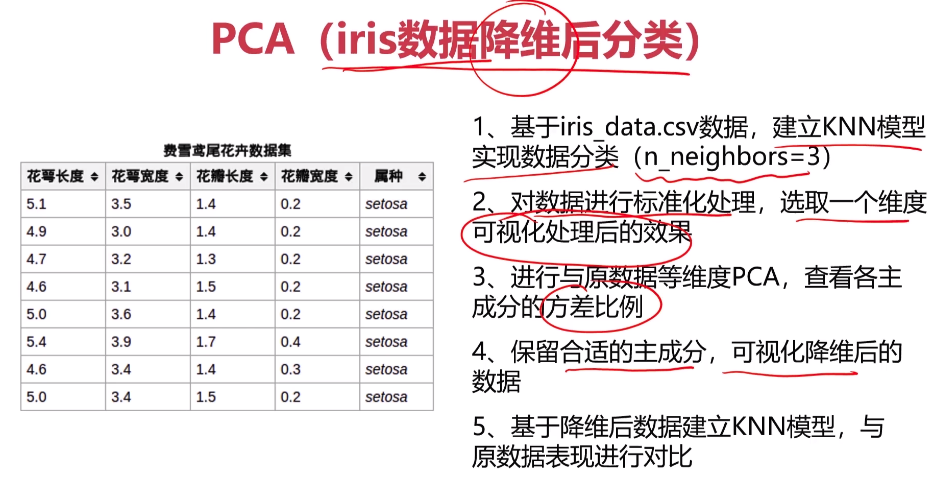

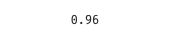

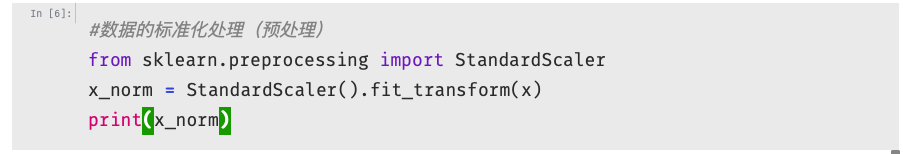

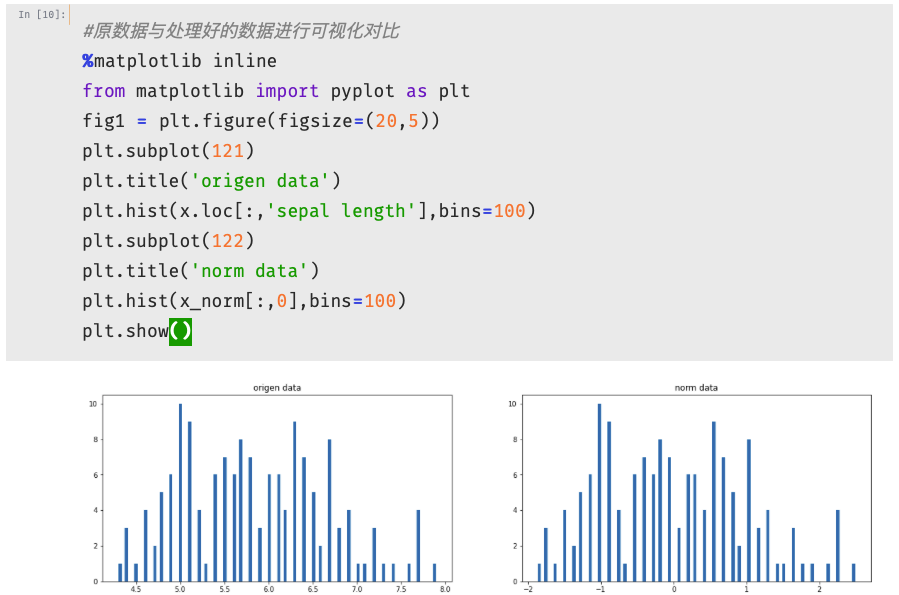

- PCA in practice

```python
# Establish the KNN model, train it, and calculate the accuracy
from sklearn.neighbors import KNeighborsClassifier
from sklearn.metrics import accuracy_score

KNN = KNeighborsClassifier(n_neighbors=3)  # classify by the 3 nearest neighbors
KNN.fit(x, y)
y_predict = KNN.predict(x)
accuracy = accuracy_score(y, y_predict)  # accuracy on the training data
print(accuracy)
```
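The accuracy above is measured on the same samples the model was fitted on. As a hedged complement, the sketch below holds out part of the data for evaluation. The 70/30 split, `random_state=0`, and the use of scikit-learn's bundled Iris data in place of `iris_data.csv` are all assumptions introduced here.

```python
from sklearn.datasets import load_iris
from sklearn.metrics import accuracy_score
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier

# Assumption: load_iris stands in for iris_data.csv.
x, y = load_iris(return_X_y=True)

# Keep 30% of the samples aside; stratify so each class is equally represented.
x_train, x_test, y_train, y_test = train_test_split(
    x, y, test_size=0.3, random_state=0, stratify=y
)

knn = KNeighborsClassifier(n_neighbors=3).fit(x_train, y_train)
train_accuracy = accuracy_score(y_train, knn.predict(x_train))
test_accuracy = accuracy_score(y_test, knn.predict(x_test))
print(f"train accuracy: {train_accuracy:.3f}, test accuracy: {test_accuracy:.3f}")
```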

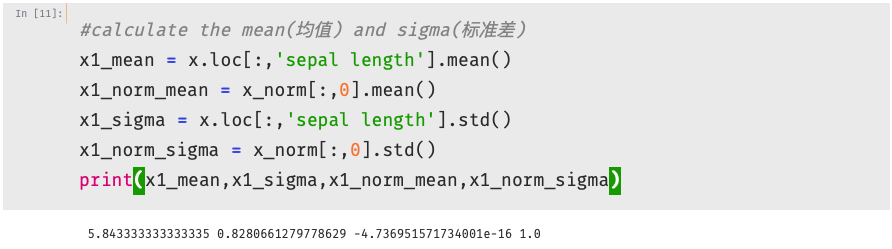

```
[[-9.00681170e-01 1.03205722e+00 -1.34127240e+00 -1.31297673e+00]
 [-1.14301691e+00 -1.24957601e-01 -1.34127240e+00 -1.31297673e+00]
 [-1.38535265e+00 3.37848329e-01 -1.39813811e+00 -1.31297673e+00]
 [-1.50652052e+00 1.06445364e-01 -1.28440670e+00 -1.31297673e+00]
 [-1.02184904e+00 1.26346019e+00 -1.34127240e+00 -1.31297673e+00]
 [-5.37177559e-01 1.95766909e+00 -1.17067529e+00 -1.05003079e+00]
 [-1.50652052e+00 8.00654259e-01 -1.34127240e+00 -1.18150376e+00]
 [-1.02184904e+00 8.00654259e-01 -1.28440670e+00 -1.31297673e+00]
 [-1.74885626e+00 -3.56360566e-01 -1.34127240e+00 -1.31297673e+00]
 [-1.14301691e+00 1.06445364e-01 -1.28440670e+00 -1.44444970e+00]
 [-5.37177559e-01 1.49486315e+00 -1.28440670e+00 -1.31297673e+00]
 [-1.26418478e+00 8.00654259e-01 -1.22754100e+00 -1.31297673e+00]
 [-1.26418478e+00 -1.24957601e-01 -1.34127240e+00 -1.44444970e+00]
 [-1.87002413e+00 -1.24957601e-01 -1.51186952e+00 -1.44444970e+00]
 [-5.25060772e-02 2.18907205e+00 -1.45500381e+00 -1.31297673e+00]
 [-1.73673948e-01 3.11468391e+00 -1.28440670e+00 -1.05003079e+00]
 [-5.37177559e-01 1.95766909e+00 -1.39813811e+00 -1.05003079e+00]
 [-9.00681170e-01 1.03205722e+00 -1.34127240e+00 -1.18150376e+00]
 [-1.73673948e-01 1.72626612e+00 -1.17067529e+00 -1.18150376e+00]
 [-9.00681170e-01 1.72626612e+00 -1.28440670e+00 -1.18150376e+00]
 [-5.37177559e-01 8.00654259e-01 -1.17067529e+00 -1.31297673e+00]
 [-9.00681170e-01 1.49486315e+00 -1.28440670e+00 -1.05003079e+00]
 [-1.50652052e+00 1.26346019e+00 -1.56873522e+00 -1.31297673e+00]
 [-9.00681170e-01 5.69251294e-01 -1.17067529e+00 -9.18557817e-01]
 [-1.26418478e+00 8.00654259e-01 -1.05694388e+00 -1.31297673e+00]
 [-1.02184904e+00 -1.24957601e-01 -1.22754100e+00 -1.31297673e+00]
 [-1.02184904e+00 8.00654259e-01 -1.22754100e+00 -1.05003079e+00]
 [-7.79513300e-01 1.03205722e+00 -1.28440670e+00 -1.31297673e+00]
 [-7.79513300e-01 8.00654259e-01 -1.34127240e+00 -1.31297673e+00]
 [-1.38535265e+00 3.37848329e-01 -1.22754100e+00 -1.31297673e+00]
 [-1.26418478e+00 1.06445364e-01 -1.22754100e+00 -1.31297673e+00]
 [-5.37177559e-01 8.00654259e-01 -1.28440670e+00 -1.05003079e+00]
 [-7.79513300e-01 2.42047502e+00 -1.28440670e+00 -1.44444970e+00]
 [-4.16009689e-01 2.65187798e+00 -1.34127240e+00 -1.31297673e+00]
 [-1.14301691e+00 1.06445364e-01 -1.28440670e+00 -1.44444970e+00]
 [-1.02184904e+00 3.37848329e-01 -1.45500381e+00 -1.31297673e+00]
 [-4.16009689e-01 1.03205722e+00 -1.39813811e+00 -1.31297673e+00]
 [-1.14301691e+00 1.06445364e-01 -1.28440670e+00 -1.44444970e+00]
 [-1.74885626e+00 -1.24957601e-01 -1.39813811e+00 -1.31297673e+00]
 [-9.00681170e-01 8.00654259e-01 -1.28440670e+00 -1.31297673e+00]
 [-1.02184904e+00 1.03205722e+00 -1.39813811e+00 -1.18150376e+00]
 [-1.62768839e+00 -1.74477836e+00 -1.39813811e+00 -1.18150376e+00]
 [-1.74885626e+00 3.37848329e-01 -1.39813811e+00 -1.31297673e+00]
 [-1.02184904e+00 1.03205722e+00 -1.22754100e+00 -7.87084847e-01]
 [-9.00681170e-01 1.72626612e+00 -1.05694388e+00 -1.05003079e+00]
 [-1.26418478e+00 -1.24957601e-01 -1.34127240e+00 -1.18150376e+00]
 [-9.00681170e-01 1.72626612e+00 -1.22754100e+00 -1.31297673e+00]
 [-1.50652052e+00 3.37848329e-01 -1.34127240e+00 -1.31297673e+00]
 [-6.58345429e-01 1.49486315e+00 -1.28440670e+00 -1.31297673e+00]
 [-1.02184904e+00 5.69251294e-01 -1.34127240e+00 -1.31297673e+00]
 [ 1.40150837e+00 3.37848329e-01 5.35295827e-01 2.64698913e-01]
 [ 6.74501145e-01 3.37848329e-01 4.21564419e-01 3.96171883e-01]
 [ 1.28034050e+00 1.06445364e-01 6.49027235e-01 3.96171883e-01]
 [-4.16009689e-01 -1.74477836e+00 1.37235899e-01 1.33225943e-01]
 [ 7.95669016e-01 -5.87763531e-01 4.78430123e-01 3.96171883e-01]
 [-1.73673948e-01 -5.87763531e-01 4.21564419e-01 1.33225943e-01]
 [ 5.53333275e-01 5.69251294e-01 5.35295827e-01 5.27644853e-01]
 [-1.14301691e+00 -1.51337539e+00 -2.60824029e-01 -2.61192967e-01]
 [ 9.16836886e-01 -3.56360566e-01 4.78430123e-01 1.33225943e-01]
 [-7.79513300e-01 -8.19166497e-01 8.03701950e-02 2.64698913e-01]
 [-1.02184904e+00 -2.43898725e+00 -1.47092621e-01 -2.61192967e-01]
 [ 6.86617933e-02 -1.24957601e-01 2.50967307e-01 3.96171883e-01]
 [ 1.89829664e-01 -1.97618132e+00 1.37235899e-01 -2.61192967e-01]
 [ 3.10997534e-01 -3.56360566e-01 5.35295827e-01 2.64698913e-01]
 [-2.94841818e-01 -3.56360566e-01 -9.02269170e-02 1.33225943e-01]
 [ 1.03800476e+00 1.06445364e-01 3.64698715e-01 2.64698913e-01]
 [-2.94841818e-01 -1.24957601e-01 4.21564419e-01 3.96171883e-01]
 [-5.25060772e-02 -8.19166497e-01 1.94101603e-01 -2.61192967e-01]
 [ 4.32165405e-01 -1.97618132e+00 4.21564419e-01 3.96171883e-01]
 [-2.94841818e-01 -1.28197243e+00 8.03701950e-02 -1.29719997e-01]
 [ 6.86617933e-02 3.37848329e-01 5.92161531e-01 7.90590793e-01]
 [ 3.10997534e-01 -5.87763531e-01 1.37235899e-01 1.33225943e-01]
 [ 5.53333275e-01 -1.28197243e+00 6.49027235e-01 3.96171883e-01]
 [ 3.10997534e-01 -5.87763531e-01 5.35295827e-01 1.75297293e-03]
 [ 6.74501145e-01 -3.56360566e-01 3.07833011e-01 1.33225943e-01]
 [ 9.16836886e-01 -1.24957601e-01 3.64698715e-01 2.64698913e-01]
 [ 1.15917263e+00 -5.87763531e-01 5.92161531e-01 2.64698913e-01]
 [ 1.03800476e+00 -1.24957601e-01 7.05892939e-01 6.59117823e-01]
 [ 1.89829664e-01 -3.56360566e-01 4.21564419e-01 3.96171883e-01]
 [-1.73673948e-01 -1.05056946e+00 -1.47092621e-01 -2.61192967e-01]
 [-4.16009689e-01 -1.51337539e+00 2.35044910e-02 -1.29719997e-01]
 [-4.16009689e-01 -1.51337539e+00 -3.33612130e-02 -2.61192967e-01]
 [-5.25060772e-02 -8.19166497e-01 8.03701950e-02 1.75297293e-03]
 [ 1.89829664e-01 -8.19166497e-01 7.62758643e-01 5.27644853e-01]
 [-5.37177559e-01 -1.24957601e-01 4.21564419e-01 3.96171883e-01]
 [ 1.89829664e-01 8.00654259e-01 4.21564419e-01 5.27644853e-01]
 [ 1.03800476e+00 1.06445364e-01 5.35295827e-01 3.96171883e-01]
 [ 5.53333275e-01 -1.74477836e+00 3.64698715e-01 1.33225943e-01]
 [-2.94841818e-01 -1.24957601e-01 1.94101603e-01 1.33225943e-01]
 [-4.16009689e-01 -1.28197243e+00 1.37235899e-01 1.33225943e-01]
 [-4.16009689e-01 -1.05056946e+00 3.64698715e-01 1.75297293e-03]
 [ 3.10997534e-01 -1.24957601e-01 4.78430123e-01 2.64698913e-01]
 [-5.25060772e-02 -1.05056946e+00 1.37235899e-01 1.75297293e-03]
 [-1.02184904e+00 -1.74477836e+00 -2.60824029e-01 -2.61192967e-01]
 [-2.94841818e-01 -8.19166497e-01 2.50967307e-01 1.33225943e-01]
 [-1.73673948e-01 -1.24957601e-01 2.50967307e-01 1.75297293e-03]
 [-1.73673948e-01 -3.56360566e-01 2.50967307e-01 1.33225943e-01]
 [ 4.32165405e-01 -3.56360566e-01 3.07833011e-01 1.33225943e-01]
 [-9.00681170e-01 -1.28197243e+00 -4.31421141e-01 -1.29719997e-01]
 [-1.73673948e-01 -5.87763531e-01 1.94101603e-01 1.33225943e-01]
 [ 5.53333275e-01 5.69251294e-01 1.27454998e+00 1.71090158e+00]
 [-5.25060772e-02 -8.19166497e-01 7.62758643e-01 9.22063763e-01]
 [ 1.52267624e+00 -1.24957601e-01 1.21768427e+00 1.18500970e+00]
 [ 5.53333275e-01 -3.56360566e-01 1.04708716e+00 7.90590793e-01]
 [ 7.95669016e-01 -1.24957601e-01 1.16081857e+00 1.31648267e+00]
 [ 2.12851559e+00 -1.24957601e-01 1.61574420e+00 1.18500970e+00]
 [-1.14301691e+00 -1.28197243e+00 4.21564419e-01 6.59117823e-01]
 [ 1.76501198e+00 -3.56360566e-01 1.44514709e+00 7.90590793e-01]
 [ 1.03800476e+00 -1.28197243e+00 1.16081857e+00 7.90590793e-01]
 [ 1.64384411e+00 1.26346019e+00 1.33141568e+00 1.71090158e+00]
 [ 7.95669016e-01 3.37848329e-01 7.62758643e-01 1.05353673e+00]
 [ 6.74501145e-01 -8.19166497e-01 8.76490051e-01 9.22063763e-01]
 [ 1.15917263e+00 -1.24957601e-01 9.90221459e-01 1.18500970e+00]
 [-1.73673948e-01 -1.28197243e+00 7.05892939e-01 1.05353673e+00]
 [-5.25060772e-02 -5.87763531e-01 7.62758643e-01 1.57942861e+00]
 [ 6.74501145e-01 3.37848329e-01 8.76490051e-01 1.44795564e+00]
 [ 7.95669016e-01 -1.24957601e-01 9.90221459e-01 7.90590793e-01]
 [ 2.24968346e+00 1.72626612e+00 1.67260991e+00 1.31648267e+00]
 [ 2.24968346e+00 -1.05056946e+00 1.78634131e+00 1.44795564e+00]
 [ 1.89829664e-01 -1.97618132e+00 7.05892939e-01 3.96171883e-01]
 [ 1.28034050e+00 3.37848329e-01 1.10395287e+00 1.44795564e+00]
 [-2.94841818e-01 -5.87763531e-01 6.49027235e-01 1.05353673e+00]
 [ 2.24968346e+00 -5.87763531e-01 1.67260991e+00 1.05353673e+00]
 [ 5.53333275e-01 -8.19166497e-01 6.49027235e-01 7.90590793e-01]
 [ 1.03800476e+00 5.69251294e-01 1.10395287e+00 1.18500970e+00]
 [ 1.64384411e+00 3.37848329e-01 1.27454998e+00 7.90590793e-01]
 [ 4.32165405e-01 -5.87763531e-01 5.92161531e-01 7.90590793e-01]
 [ 3.10997534e-01 -1.24957601e-01 6.49027235e-01 7.90590793e-01]
 [ 6.74501145e-01 -5.87763531e-01 1.04708716e+00 1.18500970e+00]
 [ 1.64384411e+00 -1.24957601e-01 1.16081857e+00 5.27644853e-01]
 [ 1.88617985e+00 -5.87763531e-01 1.33141568e+00 9.22063763e-01]
 [ 2.49201920e+00 1.72626612e+00 1.50201279e+00 1.05353673e+00]
 [ 6.74501145e-01 -5.87763531e-01 1.04708716e+00 1.31648267e+00]
 [ 5.53333275e-01 -5.87763531e-01 7.62758643e-01 3.96171883e-01]
 [ 3.10997534e-01 -1.05056946e+00 1.04708716e+00 2.64698913e-01]
 [ 2.24968346e+00 -1.24957601e-01 1.33141568e+00 1.44795564e+00]
 [ 5.53333275e-01 8.00654259e-01 1.04708716e+00 1.57942861e+00]
 [ 6.74501145e-01 1.06445364e-01 9.90221459e-01 7.90590793e-01]
 [ 1.89829664e-01 -1.24957601e-01 5.92161531e-01 7.90590793e-01]
 [ 1.28034050e+00 1.06445364e-01 9.33355755e-01 1.18500970e+00]
 [ 1.03800476e+00 1.06445364e-01 1.04708716e+00 1.57942861e+00]
 [ 1.28034050e+00 1.06445364e-01 7.62758643e-01 1.44795564e+00]
 [-5.25060772e-02 -8.19166497e-01 7.62758643e-01 9.22063763e-01]
 [ 1.15917263e+00 3.37848329e-01 1.21768427e+00 1.44795564e+00]
 [ 1.03800476e+00 5.69251294e-01 1.10395287e+00 1.71090158e+00]
 [ 1.03800476e+00 -1.24957601e-01 8.19624347e-01 1.44795564e+00]
 [ 5.53333275e-01 -1.28197243e+00 7.05892939e-01 9.22063763e-01]
 [ 7.95669016e-01 -1.24957601e-01 8.19624347e-01 1.05353673e+00]
 [ 4.32165405e-01 8.00654259e-01 9.33355755e-01 1.44795564e+00]
 [ 6.86617933e-02 -1.24957601e-01 7.62758643e-01 7.90590793e-01]]
```
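The printed matrix has four columns of values centered around zero, which is what per-feature standardization produces: each column has its mean subtracted and is divided by its standard deviation. The sketch below shows that this manual formula matches `StandardScaler`. It assumes scikit-learn's bundled Iris data, whose values differ slightly from those printed above, and the names `x_norm` and `x_manual` are chosen here for illustration.

```python
import numpy as np
from sklearn.datasets import load_iris
from sklearn.preprocessing import StandardScaler

# Assumption: load_iris stands in for iris_data.csv.
x, _ = load_iris(return_X_y=True)

# Library version: zero mean and unit variance per feature column.
x_norm = StandardScaler().fit_transform(x)

# Manual version: z = (x - mean) / std, using the population std (ddof=0).
x_manual = (x - x.mean(axis=0)) / x.std(axis=0)

print("matches StandardScaler:", np.allclose(x_norm, x_manual))
print("column means:", np.round(x_norm.mean(axis=0), 6))
print("column stds: ", np.round(x_norm.std(axis=0), 6))
```

Standardizing first matters for PCA because otherwise features measured on larger scales dominate the principal components.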

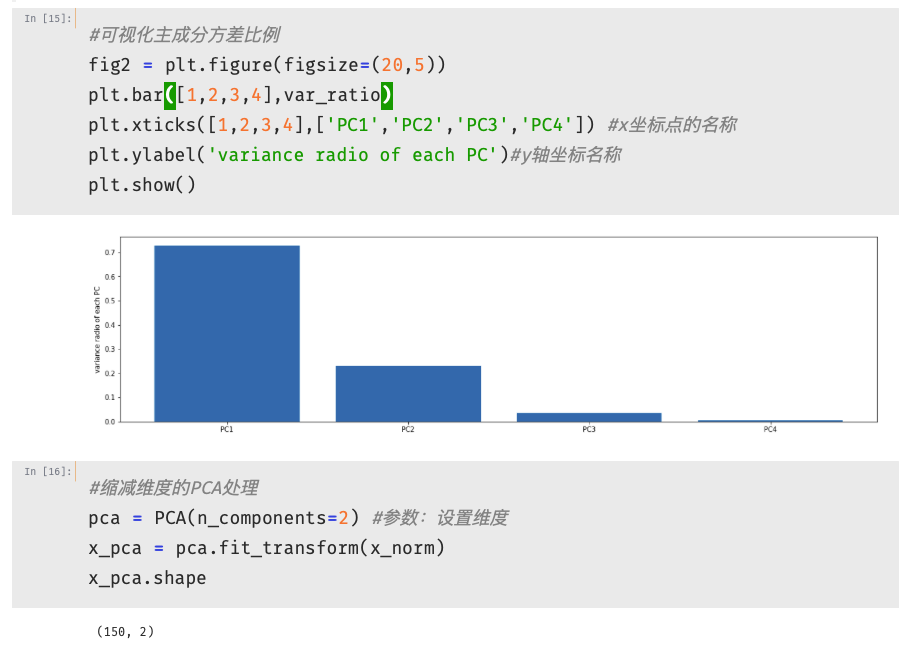

```python
# Visualize the PCA result: the data after dimensionality reduction
fig3 = plt.figure(figsize=(10, 10))
# One scatter series per class, plotted on the first two principal components
setosa = plt.scatter(x_pca[:, 0][y == 0], x_pca[:, 1][y == 0])
versicolor = plt.scatter(x_pca[:, 0][y == 1], x_pca[:, 1][y == 1])
virginica = plt.scatter(x_pca[:, 0][y == 2], x_pca[:, 1][y == 2])
plt.legend((setosa, versicolor, virginica), ('setosa', 'versicolor', 'virginica'))
plt.show()
```

```python
# Establish the KNN model on the reduced data and check its performance
from sklearn.metrics import accuracy_score

KNN = KNeighborsClassifier(n_neighbors=3)  # classify by the 3 nearest neighbors
KNN.fit(x_pca, y)
y_predict = KNN.predict(x_pca)
accuracy = accuracy_score(y, y_predict)  # accuracy on the training data
print(accuracy)
```
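Several steps of this exercise (standardization, the PCA fit itself) appear only as screenshots, so the following is a minimal end-to-end sketch of the workflow rather than the notebook's exact code. It assumes scikit-learn's bundled Iris data in place of `iris_data.csv` and two retained components, matching the two-dimensional scatter plot. The variable names `x_norm`, `retained`, and `accuracy_pca` are introduced here.

```python
from sklearn.datasets import load_iris
from sklearn.decomposition import PCA
from sklearn.metrics import accuracy_score
from sklearn.neighbors import KNeighborsClassifier
from sklearn.preprocessing import StandardScaler

# Assumption: load_iris stands in for iris_data.csv.
x, y = load_iris(return_X_y=True)

# 1. Standardize each feature to zero mean and unit variance.
x_norm = StandardScaler().fit_transform(x)

# 2. Project the 4-D data onto its first two principal components.
pca = PCA(n_components=2)
x_pca = pca.fit_transform(x_norm)
retained = pca.explained_variance_ratio_.sum()  # share of variance kept

# 3. Fit KNN on the reduced data and score it on the same samples.
knn = KNeighborsClassifier(n_neighbors=3).fit(x_pca, y)
accuracy_pca = accuracy_score(y, knn.predict(x_pca))

print(f"variance retained by 2 components: {retained:.3f}")
print(f"training accuracy after PCA: {accuracy_pca:.3f}")
```

Inspecting `explained_variance_ratio_` is a common way to judge how many components to keep: if two components retain most of the variance, little information is lost by halving the dimensionality.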