Web Crawlers

Reference blogs: http://www.cnblogs.com/wupeiqi/articles/5354900.html

http://www.cnblogs.com/wupeiqi/articles/6283017.html

- Basic operations

Overview:

- Sending HTTP requests: Python HTTP requests, requests

- Extracting specific information: Python regular expressions, beautifulsoup

- Data persistence

Two Python modules

- requests

- beautifulsoup

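HTTP request basics

- Request:

Request headers:

- cookie

Request body:

- the content being sent

- Response:

Response headers

- read by the browser

Response body

- the content the user sees

Special items:

- cookie

- csrftoken

- content-type:

content-type: application/x-www-form-urlencoded

name=alex&age=18

content-type: application/json

{"name": "alex", "age": 18}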
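
The two content-type cases above can be reproduced with the standard library alone. A minimal sketch, assuming the same `name`/`age` fields as in the notes; `payload`, `form_body` and `json_body` are illustrative names, not part of any library.

```python
import json
from urllib.parse import urlencode

payload = {"name": "alex", "age": 18}

# application/x-www-form-urlencoded: key=value pairs joined by "&"
form_body = urlencode(payload)

# application/json: the same data serialized as a JSON document
json_body = json.dumps(payload)

print(form_body)  # name=alex&age=18
print(json_body)  # {"name": "alex", "age": 18}
```

The receiving server relies on the content-type header to decide which of the two parsers to apply to the body.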

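- Performance

- Serial: 1 person handles the tasks one at a time and sits idle while waiting.

- Threads: 10 people each handle tasks one at a time and sit idle while waiting.

- Processes: 10 households each handle tasks one at a time and sit idle while waiting.

- [Coroutines] Asynchronous, non-blocking: 1 person who makes full use of the waiting time.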
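
The comparison above can be tried out without touching the network. A rough sketch, assuming each "request" is just a 0.2 second wait (`DELAY`) and that the URLs are placeholders; `fetch_blocking`, `fetch_async` and the `run_*` helpers are made-up names. Processes are left out to keep the sketch short.

```python
import asyncio
import time
from concurrent.futures import ThreadPoolExecutor

DELAY = 0.2  # simulated network latency per request (assumption)
URLS = [f"http://example.com/page/{i}" for i in range(5)]  # placeholder URLs


def fetch_blocking(url):
    """Pretend to download url; the sleep stands in for waiting on I/O."""
    time.sleep(DELAY)
    return url


async def fetch_async(url):
    """Coroutine version: awaiting hands control back while 'waiting'."""
    await asyncio.sleep(DELAY)
    return url


def run_serial():
    # One worker, one task after another
    return [fetch_blocking(u) for u in URLS]


def run_threads():
    # One thread per task; each thread still idles during its own wait
    with ThreadPoolExecutor(max_workers=len(URLS)) as pool:
        return list(pool.map(fetch_blocking, URLS))


async def _gather_all():
    return await asyncio.gather(*(fetch_async(u) for u in URLS))


def run_coroutines():
    # A single thread that switches tasks whenever one is waiting
    return asyncio.run(_gather_all())


def timed(func):
    """Run func and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = func()
    return result, time.perf_counter() - start


if __name__ == "__main__":
    for runner in (run_serial, run_threads, run_coroutines):
        _, elapsed = timed(runner)
        print(f"{runner.__name__}: {elapsed:.2f}s")
```

With these numbers the serial run should take about five times as long as the other two, which is the point of the "makes full use of the waiting time" remark.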

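- scrapy framework

- Rules

- scrapy-redis component

Details:

- Basic operations: use Python to impersonate a browser, send a request, and retrieve the specified content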

pip3 install requests

import requests

response = requests.get('http://www.baidu.com')

response.text

pip3 install beautifulsoup4

from bs4 import BeautifulSoup

soup = BeautifulSoup(response.text,'html.parser')

soup.find(name='h3',attrs={'class':'t'})

soup.find_all(name='h3')
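
The parsing calls above can be tried offline. A small sketch, assuming beautifulsoup4 is installed; the inline `html` string is made-up content standing in for `response.text`.

```python
from bs4 import BeautifulSoup

# A small inline document standing in for response.text (made-up content)
html = """
<div id="results">
  <h3 class="t"><a href="/a">First</a></h3>
  <h3 class="t"><a href="/b">Second</a></h3>
  <h3>Untagged</h3>
</div>
"""

soup = BeautifulSoup(html, "html.parser")

# find returns the first matching tag (or None if nothing matches)
first = soup.find(name="h3", attrs={"class": "t"})

# find_all returns a list of every matching tag
all_h3 = soup.find_all(name="h3")

print(first.text)                   # text inside the tag
print(first.find("a").get("href"))  # value of a single attribute
print(len(all_h3))                  # number of <h3> tags found
```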

Example: scraping news from Autohome (汽车之家)

- Modules

requests

GET:

requests.get(url="http://www.oldboyedu.com")

# data="GET / HTTP/1.1

Host: www.oldboyedu.com

....

"

requests.get(url="http://www.oldboyedu.com/index.html?p=1")

# data="GET /index.html?p=1 HTTP/1.1

Host: www.oldboyedu.com

....

"

requests.get(url="http://www.oldboyedu.com/index.html",params={'p':1})

# data="GET /index.html?p=1 HTTP/1.1

Host: www.oldboyedu.com

....

"

POST:

requests.post(url="http://www.oldboyedu.com",data={'name':'alex','age':18}) # default Content-Type: application/x-www-form-urlencoded

data="POST / HTTP/1.1

Host: www.oldboyedu.com

....

name=alex&age=18"

requests.post(url="http://www.oldboyedu.com",json={'name':'alex','age':18}) # default Content-Type: application/json

data="POST / HTTP/1.1

Host: www.oldboyedu.com

....

{"name": "alex", "age": 18}"

requests.post(

url="http://www.oldboyedu.com",

params={'p':1},

json={'name':'alex','age':18}

) # default Content-Type: application/json

data="POST /?p=1 HTTP/1.1

Host: www.oldboyedu.com

....

{"name": "alex", "age": 18}"
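
The mapping from `params`, `data` and `json` to the URL, body and Content-Type can be checked without sending anything. A sketch using `requests.Request(...).prepare()`, which builds the outgoing request in memory; the `prepare` wrapper is a helper name made up for this note.

```python
import json

import requests


def prepare(method, url, **kwargs):
    """Build the request that requests would send, without sending it."""
    return requests.Request(method, url, **kwargs).prepare()


get_req = prepare("GET", "http://www.oldboyedu.com/index.html", params={"p": 1})
form_req = prepare("POST", "http://www.oldboyedu.com",
                   data={"name": "alex", "age": 18})
json_req = prepare("POST", "http://www.oldboyedu.com",
                   params={"p": 1}, json={"name": "alex", "age": 18})

# params end up in the query string
print(get_req.url)

# data= is form-encoded, json= is serialized as JSON
print(form_req.headers["Content-Type"], form_req.body)
print(json_req.url, json_req.headers["Content-Type"], json.loads(json_req.body))
```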

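Note (Django):

request.body: always contains the raw request body

request.POST: may be empty, since it is only populated for form-encoded bodies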

beautifulsoup

soup = BeautifulSoup('HTML string','html.parser')

tag = soup.find(name='div',attrs={})

tags = soup.find_all(name='div',attrs={})

tag.find('h3').text

tag.find('h3').get('attribute name')

tag.find('h3').attrs

HTTP requests:

GET request:

data="GET /index?page=1 HTTP/1.1

Host: baidu.com

....

"

POST request:

data="POST /index?page=1 HTTP/1.1

Host: baidu.com

....

name=alex&age=18"

socket.sendall(data)
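
The raw request text above can be assembled and sent by hand. A minimal sketch, assuming plain HTTP on port 80; `build_request` and `send_request` are hypothetical helper names, and the network call is left commented out so the snippet runs offline.

```python
import socket


def build_request(method, host, path="/", body="",
                  content_type="application/x-www-form-urlencoded"):
    """Assemble a minimal HTTP/1.1 request as bytes."""
    payload = body.encode("utf-8")
    lines = [f"{method} {path} HTTP/1.1", f"Host: {host}", "Connection: close"]
    if payload:
        lines.append(f"Content-Type: {content_type}")
        lines.append(f"Content-Length: {len(payload)}")
    # Header lines end with CRLF; an empty line separates headers from body
    head = "\r\n".join(lines) + "\r\n\r\n"
    return head.encode("ascii") + payload


def send_request(host, data, port=80, timeout=5):
    """Send the raw bytes over TCP and return the raw response bytes."""
    with socket.create_connection((host, port), timeout=timeout) as sock:
        sock.sendall(data)
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:  # server closed the connection
                break
            chunks.append(chunk)
    return b"".join(chunks)


get_data = build_request("GET", "baidu.com", "/index?page=1")
post_data = build_request("POST", "baidu.com", "/index?page=1",
                          body="name=alex&age=18")
print(get_data.decode())
print(post_data.decode())
# raw_response = send_request("baidu.com", get_data)  # needs network access
```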

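Example [GitHub and Chouti]: log in automatically from code to any site that does not require a CAPTCHA

1. The conventional flow: the login response sets the session cookie

r1 = requests.get(url='https://github.com/login')

s1 = BeautifulSoup(r1.text,'html.parser')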

val = s1.find(attrs={'name':'authenticity_token'}).get('value')

r2 = requests.post(

url= 'https://github.com/session',

data={

'commit': 'Sign in',

'utf8': '✓',

'authenticity_token': val,

'login':'xxxxx',

'password': 'xxxx',

}

)

r2_cookie_dict = r2.cookies.get_dict() # {'session_id':'asdfasdfksdfoiuljksdf'}

Keep the login state and visit any URL:

r3 = requests.get(

url='xxxxxxxx',

cookies=r2_cookie_dict

)

print(r3.text) # a page that is only visible after a successful login

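2. The unconventional flow: the cookie is issued on the first GET and authorized at login

r1 = requests.get(url='https://github.com/login')

s1 = BeautifulSoup(r1.text,'html.parser')

val = s1.find(attrs={'name':'authenticity_token'}).get('value')

# the cookie is returned on this first response

r1_cookie_dict = r1.cookies.get_dict()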

r2 = requests.post(

url= 'https://github.com/session',

data={

'commit': 'Sign in',

'utf8': '✓',

'authenticity_token': val,

'login':'xxxxx',

'password': 'xxxx',

},

cookies=r1_cookie_dict

)

# the login authorizes the cookie issued earlier

r2_cookie_dict = r2.cookies.get_dict() # {}

Keep the login state and visit any URL:

r3 = requests.get(

url='xxxxxxxx',

cookies=r1_cookie_dict

)

print(r3.text) # a page that is only visible after a successful login
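
The second flow can be imitated end to end on localhost. This is only a toy sketch with the standard library: the server, its paths (`/login`, `/session`, `/profile`) and the cookie name `sid` are invented for illustration and have nothing to do with how GitHub or Chouti are actually implemented. The cookie jar plays the role of passing `cookies=` by hand.

```python
import threading
import urllib.request
from http.cookiejar import CookieJar
from http.server import BaseHTTPRequestHandler, HTTPServer
from urllib.parse import urlencode

AUTHORIZED = set()  # session ids that have logged in (server-side state)


class Handler(BaseHTTPRequestHandler):
    def _reply(self, text, cookie=None):
        body = text.encode("utf-8")
        self.send_response(200)
        if cookie:
            self.send_header("Set-Cookie", cookie)
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)

    def _session(self):
        # Extract the value of the sid cookie, or "" if it is absent
        header = self.headers.get("Cookie", "")
        return header.partition("sid=")[2].split(";")[0]

    def do_GET(self):
        if self.path == "/login":
            # The cookie is handed out before any credentials are sent
            self._reply("login form", cookie="sid=abc123; Path=/")
        else:
            ok = self._session() in AUTHORIZED
            self._reply("private page" if ok else "please log in")

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        self.rfile.read(length)  # credentials are ignored by this toy server
        sid = self._session()
        if sid:
            AUTHORIZED.add(sid)  # authorize the cookie issued earlier
        self._reply("ok")

    def log_message(self, *args):  # keep the output quiet
        pass


def demo():
    server = HTTPServer(("127.0.0.1", 0), Handler)  # port 0: pick a free port
    threading.Thread(target=server.serve_forever, daemon=True).start()
    base = f"http://127.0.0.1:{server.server_port}"
    opener = urllib.request.build_opener(
        urllib.request.ProxyHandler({}),  # talk to localhost directly
        urllib.request.HTTPCookieProcessor(CookieJar()),
    )
    try:
        before = opener.open(base + "/profile").read().decode()
        opener.open(base + "/login").read()               # r1: receive cookie
        form = urlencode({"login": "xxxxx", "password": "xxxx"}).encode()
        opener.open(base + "/session", data=form).read()  # r2: authorize it
        after = opener.open(base + "/profile").read().decode()  # r3: reuse it
    finally:
        server.shutdown()
        server.server_close()
    return before, after


before, after = demo()
print(before, "->", after)
```

The cookie that unlocks the private page is the one received on the first GET, which matches the use of `r1_cookie_dict` rather than `r2_cookie_dict` in the notes.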

- requests

"""

1. method

2. url

3. params

4. data

5. json

6. headers

7. cookies

8. files

9. auth

10. timeout

11. allow_redirects

12. proxies

13. stream

14. cert

================ session: keeps request-related state across requests (not recommended) ===================

import requests

session = requests.Session()

i1 = session.get(url="http://dig.chouti.com/help/service")

i2 = session.post(

url="http://dig.chouti.com/login",

data={

'phone': "8615131255089",

'password': "xxooxxoo",

'oneMonth': ""

}

)

i3 = session.post(

url="http://dig.chouti.com/link/vote?linksId=8589523"

)

print(i3.text)

"""

- beautifulsoup

- find()

- find_all()

- get()

- attrs

- text

Contents:

1. Example: Autohome

2. Example: GitHub and Chouti

3. requests and beautifulsoup

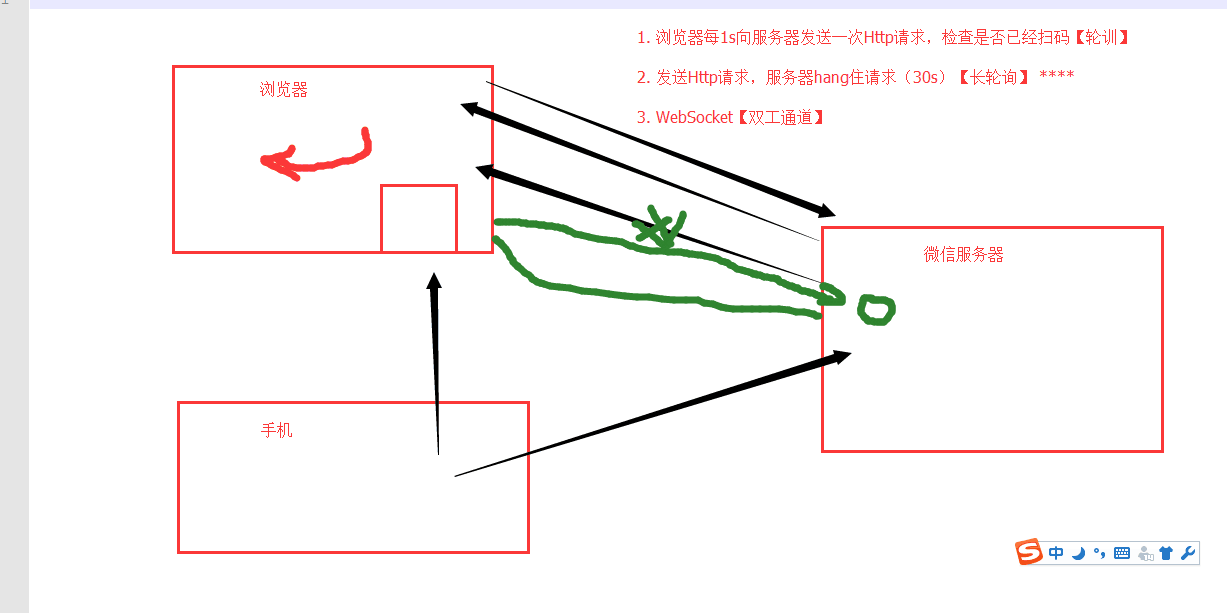

4. Polling and long polling

5. Django

request.POST

request.body

# content-type:xxxx
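
Why `request.POST` can be empty while `request.body` is not comes down to the content-type. The following is a simplified imitation of that behavior using only the standard library, not Django's actual code (Django also handles multipart/form-data, which is ignored here); `parse_post` is a made-up name.

```python
import json
from urllib.parse import parse_qs


def parse_post(content_type, body):
    """Return form fields for form-encoded bodies, otherwise an empty dict."""
    media_type = content_type.split(";")[0].strip()
    if media_type == "application/x-www-form-urlencoded":
        fields = parse_qs(body.decode("utf-8"))
        # parse_qs returns lists; keep the last value of each key
        return {key: values[-1] for key, values in fields.items()}
    return {}


form_body = b"name=alex&age=18"
json_body = b'{"name": "alex", "age": 18}'

# Form-encoded: the body is parsed into fields (values are strings)
print(parse_post("application/x-www-form-urlencoded", form_body))

# JSON: nothing is parsed, so the raw body has to be decoded by hand
print(parse_post("application/json", json_body))
print(json.loads(json_body))
```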

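Homework: web WeChat

Features:

1. Display the QR code

2. Long polling: check_login

3.

- Detect whether the QR code has been scanned

- After scanning: 201, with the avatar as base64:.....

- After tapping confirm: 200, with response.text containing redirect_ur=....

4. Optional: fetch the recent contacts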
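
The check_login loop from the feature list can be sketched without a real server. The 201 and 200 codes come from the notes; treating 408 as "timed out, ask again" is an assumption, and `make_fake_check_login` and `wait_for_login` are hypothetical names standing in for the real long-polling request.

```python
def make_fake_check_login(statuses):
    """Return a stand-in for the check_login request that replays statuses."""
    replay = iter(statuses)

    def check_login():
        return next(replay)

    return check_login


def wait_for_login(check_login, max_rounds=10):
    """Poll until the server reports 200, recording each state change."""
    events = []
    for _ in range(max_rounds):
        # In real code this call would block until the server answers
        status = check_login()
        if status == 201:
            events.append("scanned")    # QR code scanned, avatar available
        elif status == 200:
            events.append("confirmed")  # user confirmed, redirect follows
            return events
        # Any other status (408 assumed here): nothing happened, ask again
    events.append("gave up")
    return events


print(wait_for_login(make_fake_check_login([408, 408, 201, 200])))
```

The difference from plain polling is that each request is held open by the server until something changes or a timeout is reached, so the client loops without sleeping in between.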

Install:

Twisted

scrapy framework

Class code: https://github.com/liyongsan/git_class/tree/master/day36