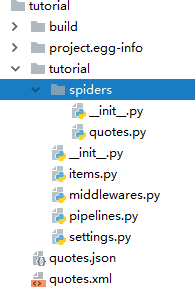

1. Create the crawler project:

scrapy startproject tutorial

2. Create the spider:

cd tutorial

scrapy genspider quotes quotes.toscrape.com

As shown in the figure:

3. Write the code for each file:

quotes.py

___________________________________________________________________________

    # -*- coding: utf-8 -*-
    import scrapy
    from tutorial.items import TutorialItem


    class QuotesSpider(scrapy.Spider):
        name = 'quotes'
        allowed_domains = ['quotes.toscrape.com']
        start_urls = ['http://quotes.toscrape.com/']

        def parse(self, response):
            # Each quote on the page sits in an element with class "quote".
            for quote in response.css('.quote'):
                item = TutorialItem()
                # Quote text
                item['text'] = quote.css('.text::text').extract_first()
                # Author
                item['author'] = quote.css('.author::text').extract_first()
                # Tags (extract_first() keeps only the first tag of each quote)
                item['tags'] = quote.css('.tags .tag::text').extract_first()
                yield item

            # Next page: follow the link only when one exists
            next_page = response.css('.pager .next a::attr(href)').extract_first()
            if next_page is not None:
                yield scrapy.Request(url=response.urljoin(next_page), callback=self.parse)
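The pagination step in `parse` depends on `response.urljoin` turning the relative `href` of the next-page link into an absolute URL. As a rough illustration only, the standard library's `urllib.parse.urljoin` resolves relative links in the same general way. The helper name `next_page_url` and the `/page/2/` value are assumptions made for this sketch, not output captured from the site.

```python
from urllib.parse import urljoin


def next_page_url(base_url, next_href):
    """Hypothetical helper: build the absolute URL of the next page.

    Returns None when the page has no next link, so the caller can stop.
    """
    if next_href is None:
        return None
    # Relative links are resolved against the URL of the current page.
    return urljoin(base_url, next_href)


# Base URL taken from start_urls; the href is an assumed example value.
resolved = next_page_url('http://quotes.toscrape.com/', '/page/2/')
print(resolved)

# A page without a ".pager .next a" link yields None from extract_first().
print(next_page_url('http://quotes.toscrape.com/', None))
```

Returning `None` for a missing link gives the spider an explicit stop condition on the last page.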

items.py

________________________________________________________________________

    # -*- coding: utf-8 -*-

    # Define here the models for your scraped items
    #
    # See documentation in:
    # https://doc.scrapy.org/en/latest/topics/items.html

    import scrapy


    class TutorialItem(scrapy.Item):
        # define the fields for your item here like:
        # name = scrapy.Field()
        text = scrapy.Field()
        author = scrapy.Field()
        tags = scrapy.Field()

pipelines.py

_________________________________________________________________________

    # -*- coding: utf-8 -*-

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://doc.scrapy.org/en/latest/topics/item-pipeline.html
    from scrapy.exceptions import DropItem

    import pymysql


    class TutorialPipeline(object):
        # Earlier version, kept for reference: truncate long quotes and
        # drop items that have no text.
        # def __init__(self):
        #     self.limit = 50
        # def process_item(self, item, spider):
        #     if item['text']:
        #         if len(item['text']) > self.limit:
        #             item['text'] = item['text'][0:self.limit].rstrip() + '...'
        #         return item
        #     else:
        #         raise DropItem('Missing Text')

        def open_spider(self, spider):
            # Open one MySQL connection when the spider starts.
            self.my_conn = pymysql.connect(
                host='192.168.113.129',
                port=3306,
                database='datas',
                user='root',
                password='123456',
                charset='utf8'
            )
            self.my_cursor = self.my_conn.cursor()

        def process_item(self, item, spider):
            # Parameterized insert: pymysql escapes the values itself.
            insert_sql = "insert into quotes(author,tags,text) values(%s,%s,%s)"
            self.my_cursor.execute(insert_sql, [item['author'], item['tags'], item['text']])
            return item

        def close_spider(self, spider):
            # Commit all inserts, then release the cursor and the connection.
            self.my_conn.commit()
            self.my_cursor.close()
            self.my_conn.close()
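The pipeline follows an open / process / close lifecycle, and it assumes that a `quotes` table already exists in the database. The post does not show that table, so the sketch below is a stand-in under stated assumptions: it swaps MySQL for the standard library's `sqlite3` so it runs without a server, the class name `SqliteQuotesPipeline` is hypothetical, and the three `TEXT` columns are a guess at the schema. The item is placeholder data, not scraped output.

```python
import sqlite3


class SqliteQuotesPipeline:
    """Hypothetical stand-in for TutorialPipeline, using SQLite instead of MySQL."""

    def open_spider(self, spider):
        # In-memory database so the sketch needs no server.
        self.conn = sqlite3.connect(':memory:')
        self.cursor = self.conn.cursor()
        # Assumed schema: the original post does not show the table definition.
        self.cursor.execute(
            'CREATE TABLE quotes (author TEXT, tags TEXT, text TEXT)'
        )

    def process_item(self, item, spider):
        # sqlite3 uses "?" placeholders where pymysql uses "%s".
        self.cursor.execute(
            'INSERT INTO quotes (author, tags, text) VALUES (?, ?, ?)',
            [item['author'], item['tags'], item['text']],
        )
        return item

    def close_spider(self, spider):
        self.conn.commit()
        self.cursor.close()
        self.conn.close()


# Drive the three hooks by hand with a placeholder item.
pipeline = SqliteQuotesPipeline()
pipeline.open_spider(spider=None)
pipeline.process_item(
    {'author': 'sample author', 'tags': 'sample-tag', 'text': 'sample text'},
    spider=None,
)
row_count = pipeline.cursor.execute('SELECT COUNT(*) FROM quotes').fetchone()[0]
print(row_count)
pipeline.close_spider(spider=None)
```

Scrapy calls these hooks itself during a crawl; calling them by hand here only makes the order of operations visible.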

settings.py

___________________________________________________________________________

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = True

    ITEM_PIPELINES = {
        'tutorial.pipelines.TutorialPipeline': 200,
    }
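The number attached to each entry in `ITEM_PIPELINES` sets the order in which pipelines run: items pass through lower values first, and values are customarily chosen in the 0 to 1000 range. A minimal sketch of that ordering in plain Python follows; the second entry, `HypotheticalCleanupPipeline`, does not exist in this project and is added only to make the ordering visible.

```python
# Same shape as ITEM_PIPELINES in settings.py. The second entry is a
# hypothetical pipeline added only to illustrate ordering.
ITEM_PIPELINES = {
    'tutorial.pipelines.TutorialPipeline': 200,
    'tutorial.pipelines.HypotheticalCleanupPipeline': 100,
}

# Sort the class paths by their priority value, lowest first.
run_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
for path in run_order:
    print(ITEM_PIPELINES[path], path)
```

With these values the hypothetical cleanup step would see each item before the database pipeline does.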

Once the code is configured:

Run the crawl and save the results in the file format you want:

scrapy crawl quotes -o quotes.xml

scrapy crawl quotes -o quotes.csv
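To check an exported file, the CSV can be read back with the standard library's `csv` module. This is a small sketch, assuming the export has a header row with the item field names `author`, `tags` and `text`; the column order in a real export may differ, which is why `DictReader` is used. The row below is placeholder data rather than real scraped output.

```python
import csv
import io

# Placeholder standing in for the contents of quotes.csv.
sample_csv = 'author,tags,text\nsample author,sample-tag,sample text\n'

# For the real file, replace io.StringIO(sample_csv) with
# open('quotes.csv', newline='', encoding='utf-8').
with io.StringIO(sample_csv) as handle:
    rows = list(csv.DictReader(handle))

# Each row is a dict keyed by the header names.
for row in rows:
    print(row['author'], row['tags'], row['text'])
```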