前言

hadoop是分布式系统,运行在linux之上,配置起来相对复杂。对于hadoop1,很多同学就因为不能搭建正确的运行环境,导致学习兴趣锐减。不过,我有免费的学习视频下载,请点击这里。

hadoop2出来后,解决了hadoop1的几个固有缺陷,比如单点故障、资源利用率低、支持作业类型少等问题,结构发生了很大变化,是hadoop未来使用的一个趋势。当然,配置也更加复杂,网上也没有一篇详细的教程来知道大家可以轻轻松松搭建起这个环境的。我应该算是第一个吧。

hadoop2体系结构

要想理解本节内容,首先需要了解hadoop1的体系结构。在本博客中和我的视频中都有相关内容,这里不再重复,只讲hadoop2的内容。

hadoop1的核心组成是两部分,即HDFS和MapReduce。在hadoop2中变为HDFS和Yarn。

新的HDFS中的NameNode不再是只有一个了,可以有多个(目前只支持2个)。每一个都有相同的职能。

这两个NameNode的地位如何哪?答:一个是active状态的,一个是standby状态的。当集群运行时,只有active状态的NameNode是正常工作的,standby状态的NameNode是处于待命状态的,时刻同步active状态NameNode的数据。一旦active状态的NameNode不能工作,通过手工或者自动切换,standby状态的NameNode就可以转变为active状态的,就可以继续工作了。这就是高可靠。

当NameNode发生故障时,他们的数据如何保持一致哪?在这里,2个NameNode的数据其实是实时共享的。新HDFS采用了一种共享机制,JournalNode集群或者NFS进行共享。NFS是操作系统层面的,JournalNode是hadoop层面的,我们这里使用JournalNode集群进行数据共享。

如何实现NameNode的自动切换哪?这就需要使用ZooKeeper集群进行选择了。HDFS集群中的两个NameNode都在ZooKeeper中注册,当active状态的NameNode出故障时,ZooKeeper能检测到这种情况,它就会自动把standby状态的NameNode切换为active状态。

HDFS Federation(HDFS联盟)是怎么回事?答:联盟的出现是有原因的。我们知道NameNode是核心节点,维护着整个HDFS中的元数据信息,那么其容量是有限的,受制于服务器的内存空间。当NameNode服务器的内存装不下数据后,那么HDFS集群就装不下数据了,寿命也就到头了。因此其扩展性是受限的。HDFS联盟指的是有多个HDFS集群同时工作,那么其容量理论上就不受限了,夸张点说就是无限扩展。

配置过程详述

大家从官网下载的apache hadoop2.2.0的代码是32位操作系统下编译的,不能使用64位的jdk。我下面部署的hadoop代码是自己的64位机器上重新编译过的。服务器都是64位的,本配置尽量模拟真实环境。大家可以以32位的操作系统做练习,这是没关系的。关于基本环境的详细配置,大家可以观看我的视频,或者浏览吴超沉思录的相关文章。

在这里我们选用4台机器进行示范,各台机器的职责如下表格所示

| hadoop101 | hadoop102 | hadoop103 | hadoop104 | |

| 是NameNode吗? | 是,属集群c1 | 是,属集群c1 | 是,属集群c2 | 是,属集群c2 |

| 是DataNode吗? | 是 | 是 | 是 | 是 |

| 是JournalNode吗? | 是 | 是 | 是 | 不是 |

| 是ZooKeeper吗? | 是 | 是 | 是 | 不是 |

| 是ZKFC吗? | 是 | 是 | 是 | 是 |

配置文件一共包括6个,分别是hadoop-env.sh、core-site.xml、hdfs-site.xml、mapred-site.xml、yarn-site.xml和slaves。除了hdfs-site.xml文件在不同集群配置不同外,其余文件在四个节点的配置是完全一样的,可以复制。

文件hadoop-env.sh

就是修改这一行内容,修改后的结果如下

export JAVA_HOME=/usr/local/jdk

【这里的JAVA_HOME的值是jdk的安装路径。如果你那里不一样,请修改为自己的地址】

文件core-site.xml

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://cluster1</value>

</property>

【这里的值指的是默认的HDFS路径。当有多个HDFS集群同时工作时,用户如果不写集群名称,那么默认使用哪个哪?在这里指定!该值来自于hdfs-site.xml中的配置】

<property>

<name>hadoop.tmp.dir</name>

<value>/usr/local/hadoop/tmp</value>

</property>

【这里的路径默认是NameNode、DataNode、JournalNode等存放数据的公共目录。用户也可以自己单独指定这三类节点的目录。】

<property>

<name>ha.zookeeper.quorum</name>

<value>hadoop101:2181,hadoop102:2181,hadoop103:2181</value>

</property>

【这里是ZooKeeper集群的地址和端口。注意,数量一定是奇数,且不少于三个节点】

</configuration>

集群c1的文件hdfs-site.xml

该文件只配置在hadoop101和hadoop102上。

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

【指定DataNode存储block的副本数量。默认值是3个,我们现在有4个DataNode,该值不大于4即可。】

<property>

<name>dfs.nameservices</name>

<value>cluster1,cluster2</value>

</property>

【使用federation时,使用了2个HDFS集群。这里抽象出两个NameService实际上就是给这2个HDFS集群起了个别名。名字可以随便起,相互不重复即可】

<property>

<name>dfs.ha.namenodes.cluster1</name>

<value>hadoop101,hadoop102</value>

</property>

【指定NameService是cluster1时的namenode有哪些,这里的值也是逻辑名称,名字随便起,相互不重复即可】

<property>

<name>dfs.namenode.rpc-address.cluster1.hadoop101</name>

<value>hadoop101:9000</value>

</property>

【指定hadoop101的RPC地址】

<property>

<name>dfs.namenode.http-address.cluster1.hadoop101</name>

<value>hadoop101:50070</value>

</property>

【指定hadoop101的http地址】

<property>

<name>dfs.namenode.rpc-address.cluster1.hadoop102</name>

<value>hadoop102:9000</value>

</property>

【指定hadoop102的RPC地址】

<property>

<name>dfs.namenode.http-address.cluster1.hadoop102</name>

<value>hadoop102:50070</value>

</property>

【指定hadoop102的http地址】

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop101:8485;hadoop102:8485;hadoop103:8485/cluster1</value>

</property>

【指定cluster1的两个NameNode共享edits文件目录时,使用的JournalNode集群信息】

<property>

<name>dfs.ha.automatic-failover.enabled.cluster1</name>

<value>true</value>

</property>

【指定cluster1是否启动自动故障恢复,即当NameNode出故障时,是否自动切换到另一台NameNode】

<property>

<name>dfs.client.failover.proxy.provider.cluster1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

【指定cluster1出故障时,哪个实现类负责执行故障切换】

<property>

<name>dfs.ha.namenodes.cluster2</name>

<value>hadoop103,hadoop104</value>

</property>

【指定NameService是cluster2时,两个NameNode是谁,这里是逻辑名称,不重复即可。以下配置与cluster1几乎全部相似,不再添加注释】

<property>

<name>dfs.namenode.rpc-address.cluster2.hadoop103</name>

<value>hadoop103:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster2.hadoop103</name>

<value>hadoop103:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster2.hadoop104</name>

<value>hadoop104:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster2.hadoop104</name>

<value>hadoop104:50070</value>

</property>

<!--

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop101:8485;hadoop102:8485;hadoop103:8485/cluster2</value>

</property>

【这段代码是注释掉的,不要打开】

-->

<property>

<name>dfs.ha.automatic-failover.enabled.cluster2</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.cluster2</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/usr/local/hadoop/tmp/journal</value>

</property>

【指定JournalNode集群在对NameNode的目录进行共享时,自己存储数据的磁盘路径】

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

【一旦需要NameNode切换,使用ssh方式进行操作】

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

【如果使用ssh进行故障切换,使用ssh通信时用的密钥存储的位置】

</configuration>

集群c2的文件hdfs-site.xml

该文件只配置在hadoop103和hadoop104上。

该文件与c1中的hdfs-site.xml配置内容完全相同,只有注释位置不一样,一定要注意,不要随便改

<configuration>

<property>

<name>dfs.replication</name>

<value>2</value>

</property>

<property>

<name>dfs.nameservices</name>

<value>cluster1,cluster2</value>

</property>

<property>

<name>dfs.ha.namenodes.cluster1</name>

<value>hadoop101,hadoop102</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster1.hadoop101</name>

<value>hadoop101:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster1.hadoop101</name>

<value>hadoop101:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster1.hadoop102</name>

<value>hadoop102:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster1.hadoop102</name>

<value>hadoop102:50070</value>

</property>

<!--

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop101:8485;hadoop102:8485;hadoop103:8485/cluster1</value>

</property>

【这段代码是注释掉的,不要打开】

-->

<property>

<name>dfs.ha.automatic-failover.enabled.cluster1</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.cluster1</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.ha.namenodes.cluster2</name>

<value>hadoop103,hadoop104</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster2.hadoop103</name>

<value>hadoop103:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster2.hadoop103</name>

<value>hadoop103:50070</value>

</property>

<property>

<name>dfs.namenode.rpc-address.cluster2.hadoop104</name>

<value>hadoop104:9000</value>

</property>

<property>

<name>dfs.namenode.http-address.cluster2.hadoop104</name>

<value>hadoop104:50070</value>

</property>

<property>

<name>dfs.namenode.shared.edits.dir</name>

<value>qjournal://hadoop101:8485;hadoop102:8485;hadoop103:8485/cluster2</value>

</property>

<property>

<name>dfs.ha.automatic-failover.enabled.cluster2</name>

<value>true</value>

</property>

<property>

<name>dfs.client.failover.proxy.provider.cluster2</name>

<value>org.apache.hadoop.hdfs.server.namenode.ha.ConfiguredFailoverProxyProvider</value>

</property>

<property>

<name>dfs.journalnode.edits.dir</name>

<value>/usr/local/hadoop/tmp/journal</value>

</property>

<property>

<name>dfs.ha.fencing.methods</name>

<value>sshfence</value>

</property>

<property>

<name>dfs.ha.fencing.ssh.private-key-files</name>

<value>/root/.ssh/id_rsa</value>

</property>

</configuration>

文件mapred-site.xml

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

【指定运行mapreduce的环境是yarn,与hadoop1截然不同的地方】

</configuration>

文件yarn-site.xml

<configuration>

<property>

<name>yarn.resourcemanager.hostname</name>

<value>hadoop101</value>

</property>

【自定ResourceManager的地址,还是单点,这是隐患】

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>

文件slaves

hadoop101

hadoop102

hadoop103

hadoop104

【指定所有的DataNode节点列表,每行一个节点名称】

注意:以上配置中c1中的hdfs-site.xml文件配置在hadoop101和hadoop102中,c2中的hdfs-site.xml文件配置在hadoop103和hadoop104中。其余文件在各个节点都相同。

启动过程

启动时,要非常小心,请严格按照我这里描述的步骤做,每一步要检查自己的操作是否正确。

1.首先检查各个节点的配置文件是否正确

特别要注意hdfs-site.xml文件,在c1和c2中是不同的。

2.启动ZooKeeper集群

关于ZooKeeper的集群配置和启动描述见吴超沉思录,这里不再详述。

在hadoop101、hadoop102、hadoop103上分别执行命令:zkServer.sh start

命令输出(以hadoop101为例):

[root@hadoop101 hadoop]# zkServer.sh status

JMX enabled by default

Using config: /usr/local/zookeeper/bin/../conf/zoo.cfg

Mode: follower

三个节点都执行完启动命令后,在hadoop101执行以下验证。

验证:

[root@hadoop101 hadoop]# zkCli.sh

Connecting to localhost:2181

2014-02-12 07:20:35,509 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT

2014-02-12 07:20:35,523 [myid:] - INFO [main:Environment@100] - Client environment:host.name=hadoop101

2014-02-12 07:20:35,524 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.7.0_45

2014-02-12 07:20:35,525 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2014-02-12 07:20:35,525 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/local/jdk/jre

2014-02-12 07:20:35,526 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/local/zookeeper/bin/../build/classes:/usr/local/zookeeper/bin/../build/lib/*.jar:/usr/local/zookeeper/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper/bin/../lib/netty-3.2.2.Final.jar:/usr/local/zookeeper/bin/../lib/log4j-1.2.15.jar:/usr/local/zookeeper/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper/bin/../zookeeper-3.4.5.jar:/usr/local/zookeeper/bin/../src/java/lib/*.jar:/usr/local/zookeeper/bin/../conf:/usr/local/hadoop

2014-02-12 07:20:35,526 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2014-02-12 07:20:35,527 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2014-02-12 07:20:35,528 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2014-02-12 07:20:35,528 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2014-02-12 07:20:35,529 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2014-02-12 07:20:35,529 [myid:] - INFO [main:Environment@100] - Client environment:os.version=2.6.32-431.el6.x86_64

2014-02-12 07:20:35,530 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root

2014-02-12 07:20:35,530 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root

2014-02-12 07:20:35,531 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/usr/local/hadoop

2014-02-12 07:20:35,533 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@10636a7e

Welcome to ZooKeeper!

2014-02-12 07:20:35,569 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@966] - Opening socket connection to server 127.0.0.1/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2014-02-12 07:20:35,587 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@849] - Socket connection established to 127.0.0.1/127.0.0.1:2181, initiating session

JLine support is enabled

2014-02-12 07:20:35,687 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@1207] - Session establishment complete on server 127.0.0.1/127.0.0.1:2181, sessionid = 0x654423404e530000, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /

[zookeeper]

[zk: localhost:2181(CONNECTED) 1]

【可以看到ZK集群 中只有一个节点zookeeper】

3.格式化ZooKeeper集群,目的是在ZooKeeper集群上建立HA的相应节点。

在hadoop101上执行命令:/usr/local/hadoop/bin/hdfs zkfc –formatZK

命令输出:

[root@hadoop101 hadoop]# /usr/local/hadoop/bin/hdfs zkfc -formatZK

14/02/12 07:28:56 INFO tools.DFSZKFailoverController: Failover controller configured for NameNode NameNode at hadoop101/192.168.80.101:9000

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:host.name=hadoop101

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:java.version=1.7.0_45

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:java.home=/usr/local/jdk/jre

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jets3t-0.6.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.5.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/stax-api-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/junit-4.8.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/yarn/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-site-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.2.0.jar:/usr/local/hadoop/contrib/capacity-scheduler/*.jar

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/local/hadoop/lib/native

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-431.el6.x86_64

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:user.name=root

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:user.home=/root

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Client environment:user.dir=/usr/local/hadoop

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=hadoop101:2181,hadoop102:2181,hadoop103:2181 sessionTimeout=5000 watcher=org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef@3e9c00ea

14/02/12 07:28:57 INFO zookeeper.ClientCnxn: Opening socket connection to server hadoop102/192.168.80.102:2181. Will not attempt to authenticate using SASL (unknown error)

14/02/12 07:28:57 INFO zookeeper.ClientCnxn: Socket connection established to hadoop102/192.168.80.102:2181, initiating session

14/02/12 07:28:57 INFO zookeeper.ClientCnxn: Session establishment complete on server hadoop102/192.168.80.102:2181, sessionid = 0x6644234039710000, negotiated timeout = 5000

14/02/12 07:28:57 INFO ha.ActiveStandbyElector: Session connected.

14/02/12 07:28:57 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/cluster1 in ZK.

14/02/12 07:28:57 INFO zookeeper.ZooKeeper: Session: 0x6644234039710000 closed

14/02/12 07:28:57 INFO zookeeper.ClientCnxn: EventThread shut down

[root@hadoop101 hadoop]#

验证:

[root@hadoop101 hadoop]# zkCli.sh

Connecting to localhost:2181

2014-02-12 07:30:24,355 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT

2014-02-12 07:30:24,373 [myid:] - INFO [main:Environment@100] - Client environment:host.name=hadoop101

2014-02-12 07:30:24,374 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.7.0_45

2014-02-12 07:30:24,375 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2014-02-12 07:30:24,376 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/local/jdk/jre

2014-02-12 07:30:24,376 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/local/zookeeper/bin/../build/classes:/usr/local/zookeeper/bin/../build/lib/*.jar:/usr/local/zookeeper/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper/bin/../lib/netty-3.2.2.Final.jar:/usr/local/zookeeper/bin/../lib/log4j-1.2.15.jar:/usr/local/zookeeper/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper/bin/../zookeeper-3.4.5.jar:/usr/local/zookeeper/bin/../src/java/lib/*.jar:/usr/local/zookeeper/bin/../conf:/usr/local/hadoop

2014-02-12 07:30:24,378 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2014-02-12 07:30:24,379 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2014-02-12 07:30:24,380 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2014-02-12 07:30:24,381 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2014-02-12 07:30:24,382 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2014-02-12 07:30:24,382 [myid:] - INFO [main:Environment@100] - Client environment:os.version=2.6.32-431.el6.x86_64

2014-02-12 07:30:24,383 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root

2014-02-12 07:30:24,383 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root

2014-02-12 07:30:24,384 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/usr/local/hadoop

2014-02-12 07:30:24,387 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@10636a7e

Welcome to ZooKeeper!

2014-02-12 07:30:24,422 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@966] - Opening socket connection to server 127.0.0.1/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

2014-02-12 07:30:24,462 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@849] - Socket connection established to 127.0.0.1/127.0.0.1:2181, initiating session

JLine support is enabled

2014-02-12 07:30:24,494 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@1207] - Session establishment complete on server 127.0.0.1/127.0.0.1:2181, sessionid = 0x654423404e530001, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /

[hadoop-ha, zookeeper]

[zk: localhost:2181(CONNECTED) 1] ls /hadoop-ha

[cluster1]

[zk: localhost:2181(CONNECTED) 2]

【格式化操作的目的是在ZK集群中建立一个节点,用于保存集群c1中NameNode的状态数据】

在hadoop103上执行命令:/usr/local/hadoop/bin/hdfs zkfc –formatZK

命令输出:

[root@hadoop103 hadoop]# /usr/local/hadoop/bin/hdfs zkfc -formatZK

14/02/12 07:32:14 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

14/02/12 07:32:14 INFO tools.DFSZKFailoverController: Failover controller configured for NameNode NameNode at hadoop103/192.168.80.103:9000

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:host.name=hadoop103

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:java.version=1.7.0_45

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:java.vendor=Oracle Corporation

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:java.home=/usr/local/jdk/jre

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:java.class.path=/usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jets3t-0.6.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.5.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/stax-api-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/junit-4.8.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/yarn/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-site-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.2.0.jar:/usr/local/hadoop/contrib/capacity-scheduler/*.jar

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:java.io.tmpdir=/tmp

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:java.compiler=<NA>

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:os.name=Linux

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:os.arch=amd64

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:os.version=2.6.32-431.el6.x86_64

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:user.name=root

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:user.home=/root

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Client environment:user.dir=/usr/local/hadoop

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Initiating client connection, connectString=hadoop101:2181,hadoop102:2181,hadoop103:2181 sessionTimeout=5000 watcher=org.apache.hadoop.ha.ActiveStandbyElector$WatcherWithClientRef@91d3b18

14/02/12 07:32:14 INFO zookeeper.ClientCnxn: Opening socket connection to server hadoop102/192.168.80.102:2181. Will not attempt to authenticate using SASL (unknown error)

14/02/12 07:32:14 INFO zookeeper.ClientCnxn: Socket connection established to hadoop102/192.168.80.102:2181, initiating session

14/02/12 07:32:14 INFO zookeeper.ClientCnxn: Session establishment complete on server hadoop102/192.168.80.102:2181, sessionid = 0x6644234039710001, negotiated timeout = 5000

14/02/12 07:32:14 INFO ha.ActiveStandbyElector: Session connected.

14/02/12 07:32:14 INFO ha.ActiveStandbyElector: Successfully created /hadoop-ha/cluster2 in ZK.

14/02/12 07:32:14 INFO zookeeper.ZooKeeper: Session: 0x6644234039710001 closed

14/02/12 07:32:14 INFO zookeeper.ClientCnxn: EventThread shut down

验证:

[root@hadoop103 hadoop]# zkCli.sh

Connecting to localhost:2181

2014-02-12 07:32:21,770 [myid:] - INFO [main:Environment@100] - Client environment:zookeeper.version=3.4.5-1392090, built on 09/30/2012 17:52 GMT

2014-02-12 07:32:21,786 [myid:] - INFO [main:Environment@100] - Client environment:host.name=hadoop103

2014-02-12 07:32:21,788 [myid:] - INFO [main:Environment@100] - Client environment:java.version=1.7.0_45

2014-02-12 07:32:21,789 [myid:] - INFO [main:Environment@100] - Client environment:java.vendor=Oracle Corporation

2014-02-12 07:32:21,789 [myid:] - INFO [main:Environment@100] - Client environment:java.home=/usr/local/jdk/jre

2014-02-12 07:32:21,790 [myid:] - INFO [main:Environment@100] - Client environment:java.class.path=/usr/local/zookeeper/bin/../build/classes:/usr/local/zookeeper/bin/../build/lib/*.jar:/usr/local/zookeeper/bin/../lib/slf4j-log4j12-1.6.1.jar:/usr/local/zookeeper/bin/../lib/slf4j-api-1.6.1.jar:/usr/local/zookeeper/bin/../lib/netty-3.2.2.Final.jar:/usr/local/zookeeper/bin/../lib/log4j-1.2.15.jar:/usr/local/zookeeper/bin/../lib/jline-0.9.94.jar:/usr/local/zookeeper/bin/../zookeeper-3.4.5.jar:/usr/local/zookeeper/bin/../src/java/lib/*.jar:/usr/local/zookeeper/bin/../conf:/usr/local/hadoop

2014-02-12 07:32:21,791 [myid:] - INFO [main:Environment@100] - Client environment:java.library.path=/usr/java/packages/lib/amd64:/usr/lib64:/lib64:/lib:/usr/lib

2014-02-12 07:32:21,792 [myid:] - INFO [main:Environment@100] - Client environment:java.io.tmpdir=/tmp

2014-02-12 07:32:21,793 [myid:] - INFO [main:Environment@100] - Client environment:java.compiler=<NA>

2014-02-12 07:32:21,793 [myid:] - INFO [main:Environment@100] - Client environment:os.name=Linux

2014-02-12 07:32:21,794 [myid:] - INFO [main:Environment@100] - Client environment:os.arch=amd64

2014-02-12 07:32:21,794 [myid:] - INFO [main:Environment@100] - Client environment:os.version=2.6.32-431.el6.x86_64

2014-02-12 07:32:21,795 [myid:] - INFO [main:Environment@100] - Client environment:user.name=root

2014-02-12 07:32:21,796 [myid:] - INFO [main:Environment@100] - Client environment:user.home=/root

2014-02-12 07:32:21,796 [myid:] - INFO [main:Environment@100] - Client environment:user.dir=/usr/local/hadoop

2014-02-12 07:32:21,801 [myid:] - INFO [main:ZooKeeper@438] - Initiating client connection, connectString=localhost:2181 sessionTimeout=30000 watcher=org.apache.zookeeper.ZooKeeperMain$MyWatcher@10636a7e

Welcome to ZooKeeper!

2014-02-12 07:32:21,850 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@966] - Opening socket connection to server 127.0.0.1/127.0.0.1:2181. Will not attempt to authenticate using SASL (unknown error)

JLine support is enabled

2014-02-12 07:32:21,868 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@849] - Socket connection established to 127.0.0.1/127.0.0.1:2181, initiating session

2014-02-12 07:32:21,906 [myid:] - INFO [main-SendThread(127.0.0.1:2181):ClientCnxn$SendThread@1207] - Session establishment complete on server 127.0.0.1/127.0.0.1:2181, sessionid = 0x6744234039810002, negotiated timeout = 30000

WATCHER::

WatchedEvent state:SyncConnected type:None path:null

[zk: localhost:2181(CONNECTED) 0] ls /

[hadoop-ha, zookeeper]

[zk: localhost:2181(CONNECTED) 1] ls /hadoop-ha

[cluster2, cluster1]

[zk: localhost:2181(CONNECTED) 2]

【集群c2也格式化,产生一个新的ZK节点cluster2】

4.启动JournalNode集群

在hadoop101、hadoop102、hadoop103上分别执行命令:/usr/local/hadoop/sbin/hadoop-daemon.sh start journalnode

命令输出(以hadoop101为例):

[root@hadoop101 hadoop]# /usr/local/hadoop/sbin/hadoop-daemon.sh start journalnode

starting journalnode, logging to /usr/local/hadoop/logs/hadoop-root-journalnode-hadoop101.out

[root@hadoop101 hadoop]#

在每个节点执行完启动命令后,每个节点都执行以下验证。

验证(以hadoop101为例):

[root@hadoop101 hadoop]# jps

23396 JournalNode

23598 Jps

22491 QuorumPeerMain

[root@hadoop101 hadoop]#

【产生一个java进程JournalNode】

查看一下目录结构

[root@hadoop101 hadoop]# jps

23396 JournalNode

22491 QuorumPeerMain

23445 Jps

[root@hadoop101 hadoop]# pwd

/usr/local/hadoop

[root@hadoop101 hadoop]# ls tmp/

journal

[root@hadoop101 hadoop]#

【启动JournalNode后,会在本地磁盘产生一个目录,用户保存NameNode的edits文件的数据】

5.格式化集群c1的一个NameNode

从hadoop101和hadoop102中任选一个即可,这里选择的是hadoop101

在hadoop101执行以下命令:/usr/local/hadoop/bin/hdfs namenode -format -clusterId c1

命令输出:

[root@hadoop101 hadoop]# /usr/local/hadoop/bin/hdfs namenode -format -clusterId c1

14/02/12 08:07:59 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hadoop101/192.168.80.101

STARTUP_MSG: args = [-format, -clusterId, c1]

STARTUP_MSG: version = 2.2.0

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jets3t-0.6.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.5.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/stax-api-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/junit-4.8.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/yarn/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-site-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.2.0.jar:/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = Unknown -r Unknown; compiled by 'root' on 2013-12-26T08:50Z

STARTUP_MSG: java = 1.7.0_45

************************************************************/

14/02/12 08:07:59 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

Formatting using clusterid: c1

14/02/12 08:08:01 INFO namenode.HostFileManager: read includes:

HostSet(

)

14/02/12 08:08:01 INFO namenode.HostFileManager: read excludes:

HostSet(

)

14/02/12 08:08:01 INFO blockmanagement.DatanodeManager: dfs.block.invalidate.limit=1000

14/02/12 08:08:01 INFO util.GSet: Computing capacity for map BlocksMap

14/02/12 08:08:01 INFO util.GSet: VM type = 64-bit

14/02/12 08:08:01 INFO util.GSet: 2.0% max memory = 966.7 MB

14/02/12 08:08:01 INFO util.GSet: capacity = 2^21 = 2097152 entries

14/02/12 08:08:01 INFO blockmanagement.BlockManager: dfs.block.access.token.enable=false

14/02/12 08:08:01 INFO blockmanagement.BlockManager: defaultReplication = 2

14/02/12 08:08:01 INFO blockmanagement.BlockManager: maxReplication = 512

14/02/12 08:08:01 INFO blockmanagement.BlockManager: minReplication = 1

14/02/12 08:08:01 INFO blockmanagement.BlockManager: maxReplicationStreams = 2

14/02/12 08:08:01 INFO blockmanagement.BlockManager: shouldCheckForEnoughRacks = false

14/02/12 08:08:01 INFO blockmanagement.BlockManager: replicationRecheckInterval = 3000

14/02/12 08:08:01 INFO blockmanagement.BlockManager: encryptDataTransfer = false

14/02/12 08:08:01 INFO namenode.FSNamesystem: fsOwner = root (auth:SIMPLE)

14/02/12 08:08:01 INFO namenode.FSNamesystem: supergroup = supergroup

14/02/12 08:08:01 INFO namenode.FSNamesystem: isPermissionEnabled = true

14/02/12 08:08:01 INFO namenode.FSNamesystem: Determined nameservice ID: cluster1

14/02/12 08:08:01 INFO namenode.FSNamesystem: HA Enabled: true

14/02/12 08:08:01 INFO namenode.FSNamesystem: Append Enabled: true

14/02/12 08:08:01 INFO util.GSet: Computing capacity for map INodeMap

14/02/12 08:08:01 INFO util.GSet: VM type = 64-bit

14/02/12 08:08:01 INFO util.GSet: 1.0% max memory = 966.7 MB

14/02/12 08:08:01 INFO util.GSet: capacity = 2^20 = 1048576 entries

14/02/12 08:08:01 INFO namenode.NameNode: Caching file names occuring more than 10 times

14/02/12 08:08:01 INFO namenode.FSNamesystem: dfs.namenode.safemode.threshold-pct = 0.9990000128746033

14/02/12 08:08:01 INFO namenode.FSNamesystem: dfs.namenode.safemode.min.datanodes = 0

14/02/12 08:08:01 INFO namenode.FSNamesystem: dfs.namenode.safemode.extension = 30000

14/02/12 08:08:01 INFO namenode.FSNamesystem: Retry cache on namenode is enabled

14/02/12 08:08:01 INFO namenode.FSNamesystem: Retry cache will use 0.03 of total heap and retry cache entry expiry time is 600000 millis

14/02/12 08:08:01 INFO util.GSet: Computing capacity for map Namenode Retry Cache

14/02/12 08:08:01 INFO util.GSet: VM type = 64-bit

14/02/12 08:08:01 INFO util.GSet: 0.029999999329447746% max memory = 966.7 MB

14/02/12 08:08:01 INFO util.GSet: capacity = 2^15 = 32768 entries

14/02/12 08:08:03 INFO common.Storage: Storage directory /usr/local/hadoop/tmp/dfs/name has been successfully formatted.

14/02/12 08:08:04 INFO namenode.FSImage: Saving image file /usr/local/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 using no compression

14/02/12 08:08:04 INFO namenode.FSImage: Image file /usr/local/hadoop/tmp/dfs/name/current/fsimage.ckpt_0000000000000000000 of size 196 bytes saved in 0 seconds.

14/02/12 08:08:04 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

14/02/12 08:08:04 INFO util.ExitUtil: Exiting with status 0

14/02/12 08:08:04 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop101/192.168.80.101

************************************************************/

[root@hadoop101 hadoop]#

验证:

[root@hadoop101 hadoop]# ls tmp/

dfs journal

[root@hadoop101 hadoop]# ls tmp/dfs/

name

【格式化NameNode会在磁盘产生一个目录,用于保存NameNode的fsimage、edits等文件】

6.启动c1中刚才格式化的NameNode

在hadoop101上执行命令:/usr/local/hadoop/sbin/hadoop-daemon.sh start namenode

命令输出:

[root@hadoop101 hadoop]# /usr/local/hadoop/sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /usr/local/hadoop/logs/hadoop-root-namenode-hadoop101.out

验证:

[root@hadoop101 hadoop]# jps

23396 JournalNode

23598 Jps

23558 NameNode

22491 QuorumPeerMain

[root@hadoop101 hadoop]#

【启动后,产生一个新的java进程NameNode】

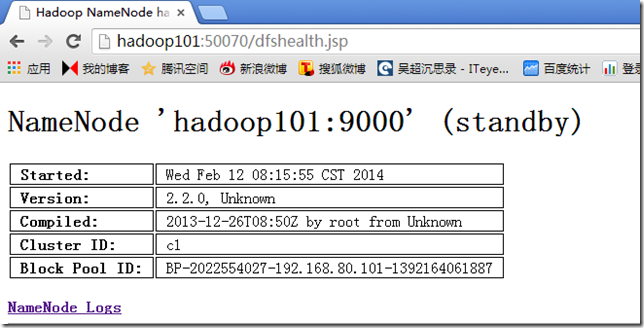

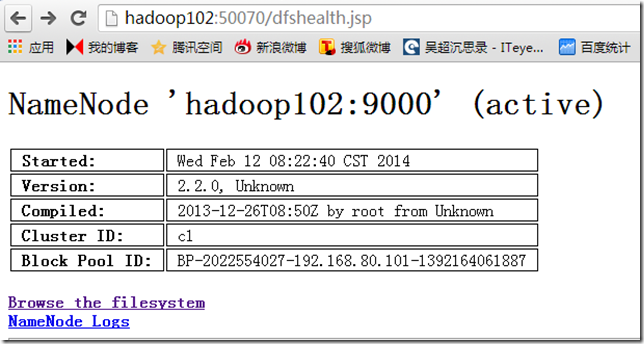

通过浏览器访问,也可以看到下图所示

7.把NameNode的数据从hadoop101同步到hadoop102中

在hadoop102上执行命令:/usr/local/hadoop/bin/hdfs namenode –bootstrapStandby

命令输出:

[root@hadoop102 hadoop]# /usr/local/hadoop/bin/hdfs namenode -bootstrapStandby

14/02/12 08:17:44 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hadoop102/192.168.80.102

STARTUP_MSG: args = [-bootstrapStandby]

STARTUP_MSG: version = 2.2.0

STARTUP_MSG: classpath = /usr/local/hadoop/etc/hadoop:/usr/local/hadoop/share/hadoop/common/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/common/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jsch-0.1.42.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-log4j12-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-digester-1.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jets3t-0.6.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/slf4j-api-1.7.5.jar:/usr/local/hadoop/share/hadoop/common/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-xc-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/common/lib/activation-1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-httpclient-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-1.7.0.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-beanutils-core-1.8.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-configuration-1.6.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-impl-2.2.3-1.jar:/usr/local/hadoop/share/hadoop/common/lib/zookeeper-3.4.5.jar:/usr/local/hadoop/share/hadoop/common/lib/hadoop-auth-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/common/lib/stax-api-1.0.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-collections-3.2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/common/lib/junit-4.8.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/common/lib/mockito-all-1.8.5.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-math-2.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jaxb-api-2.2.2.jar:/usr/local/hadoop/share/hadoop/common/lib/jersey-json-1.9.jar:/usr/local/hadoop/share/hadoop/common/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/common/lib/commons-net-3.1.jar:/usr/local/hadoop/share/hadoop/common/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/common/lib/jasper-compiler-5.5.23.jar:/usr/local/hadoop/share/hadoop/common/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/common/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/common/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/common/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/common/lib/jackson-jaxrs-1.8.8.jar:/usr/local/hadoop/share/hadoop/common/lib/jettison-1.1.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/common/hadoop-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/common/hadoop-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs:/usr/local/hadoop/share/hadoop/hdfs/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-cli-1.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-el-1.0.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-daemon-1.0.13.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-lang-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jasper-runtime-5.5.23.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-codec-1.4.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsp-api-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/servlet-api-2.5.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-util-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jsr305-1.3.9.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/commons-logging-1.1.1.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/jetty-6.1.26.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/guava-11.0.2.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/xmlenc-0.52.jar:/usr/local/hadoop/share/hadoop/hdfs/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-nfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/hdfs/hadoop-hdfs-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/yarn/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/yarn/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/yarn/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/yarn/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/yarn/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/yarn/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/yarn/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/yarn/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-api-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-tests-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-site-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-client-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-nodemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-distributedshell-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-resourcemanager-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-web-proxy-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-applications-unmanaged-am-launcher-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-server-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/yarn/hadoop-yarn-common-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/protobuf-java-2.5.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/snappy-java-1.0.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-server-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-core-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-core-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/xz-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hamcrest-core-1.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/avro-1.7.4.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/aopalliance-1.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/hadoop-annotations-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-compress-1.4.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/javax.inject-1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/guice-servlet-3.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/commons-io-2.1.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/netty-3.6.2.Final.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jackson-mapper-asl-1.8.8.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/asm-3.2.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/junit-4.10.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/jersey-guice-1.9.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/log4j-1.2.17.jar:/usr/local/hadoop/share/hadoop/mapreduce/lib/paranamer-2.3.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-plugins-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0-tests.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-jobclient-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-app-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-core-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-shuffle-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-hs-2.2.0.jar:/usr/local/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-client-common-2.2.0.jar:/usr/local/hadoop/contrib/capacity-scheduler/*.jar

STARTUP_MSG: build = Unknown -r Unknown; compiled by 'root' on 2013-12-26T08:50Z

STARTUP_MSG: java = 1.7.0_45

************************************************************/

14/02/12 08:17:44 INFO namenode.NameNode: registered UNIX signal handlers for [TERM, HUP, INT]

=====================================================

About to bootstrap Standby ID hadoop102 from:

Nameservice ID: cluster1

Other Namenode ID: hadoop101

Other NN's HTTP address: hadoop101:50070

Other NN's IPC address: hadoop101/192.168.80.101:9000

Namespace ID: 1496717450

Block pool ID: BP-2022554027-192.168.80.101-1392164061887

Cluster ID: c1

Layout version: -47

=====================================================

14/02/12 08:17:48 INFO common.Storage: Storage directory /usr/local/hadoop/tmp/dfs/name has been successfully formatted.

14/02/12 08:17:48 INFO namenode.TransferFsImage: Opening connection to http://hadoop101:50070/getimage?getimage=1&txid=0&storageInfo=-47:1496717450:0:c1

14/02/12 08:17:48 INFO namenode.TransferFsImage: Transfer took 0.18s at 0.00 KB/s

14/02/12 08:17:48 INFO namenode.TransferFsImage: Downloaded file fsimage.ckpt_0000000000000000000 size 196 bytes.

14/02/12 08:17:48 INFO util.ExitUtil: Exiting with status 0

14/02/12 08:17:48 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at hadoop102/192.168.80.102

************************************************************/

[root@hadoop102 hadoop]#

验证:

[root@hadoop102 hadoop]# ls tmp/

dfs journal

[root@hadoop102 hadoop]# ls tmp/dfs/

name

[root@hadoop102 hadoop]#

【在tmp目录下产生一个目录name】

8.启动c1中另一个Namenode

在hadoop102上执行命令:/usr/local/hadoop/sbin/hadoop-daemon.sh start namenode

命令输出:

[root@hadoop102 hadoop]# /usr/local/hadoop/sbin/hadoop-daemon.sh start namenode

starting namenode, logging to /usr/local/hadoop/logs/hadoop-root-namenode-hadoop102.out

验证:

[root@hadoop102 hadoop]# jps

12355 JournalNode

12611 Jps

12573 NameNode

12081 QuorumPeerMain

[root@hadoop102 hadoop]#

【产生java进程NameNode】

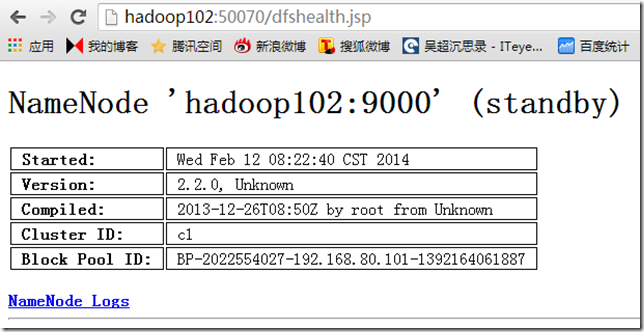

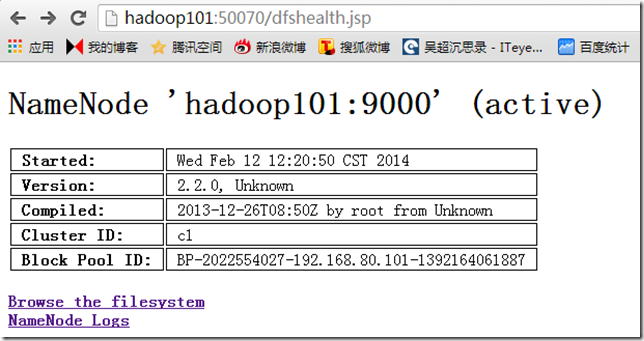

通过浏览器访问,也可以看到下图所示

9.格式化集群c2的一个NameNode

从hadoop103和hadoop104中任选一个即可,这里选择的是hadoop103

在hadoop103执行以下命令:/usr/local/hadoop/bin/hdfs namenode -format -clusterId c2

命令输出:

[root@hadoop103 hadoop]# /usr/local/hadoop/bin/hdfs namenode -format -clusterId c2

14/02/12 08:23:28 INFO namenode.NameNode: STARTUP_MSG:

/************************************************************

STARTUP_MSG: Starting NameNode

STARTUP_MSG: host = hadoop103/192.168.80.103

STARTUP_MSG: args = [-format, -clusterId, c2]

STARTUP_MSG: version = 2.2.0