Original article: https://www.cnblogs.com/memento/p/9148732.html

Standalone Installation on Windows

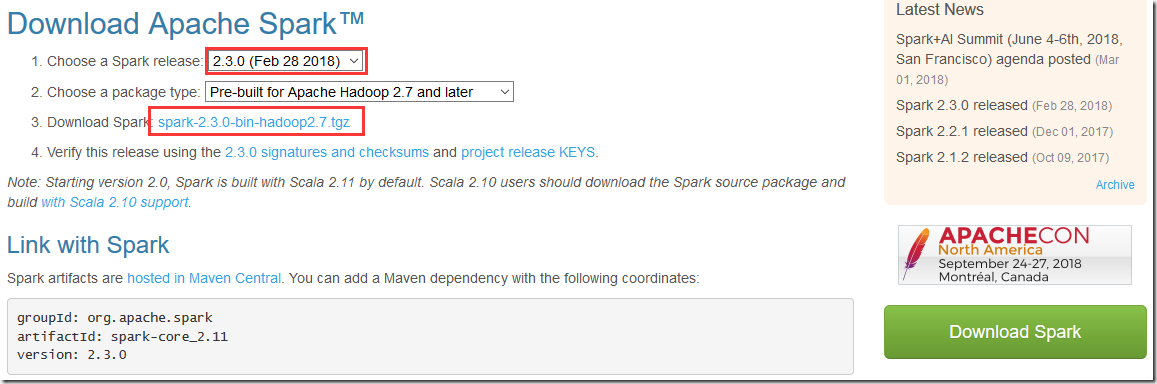

Download page: http://spark.apache.org/downloads.html

This article uses Spark 2.3.0 as the example.

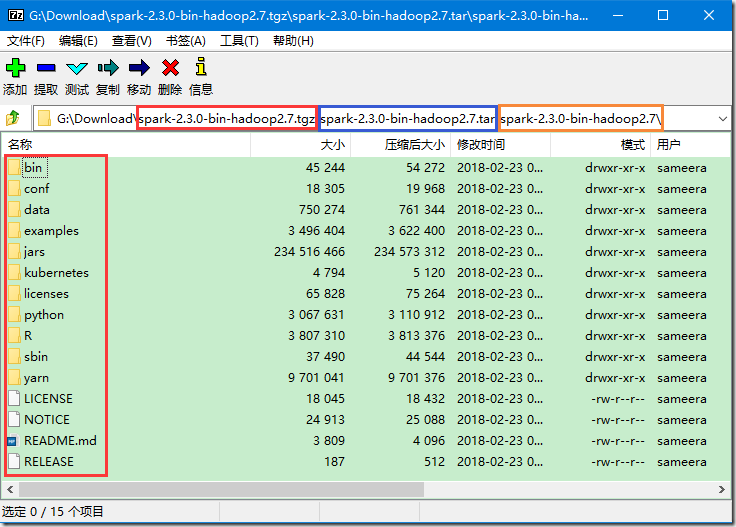

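>>> The downloaded file is a tgz archive. Open it with an archive tool and you will find a tar archive inside; open that one with the archive tool as well, and you end up with the contents shown below: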

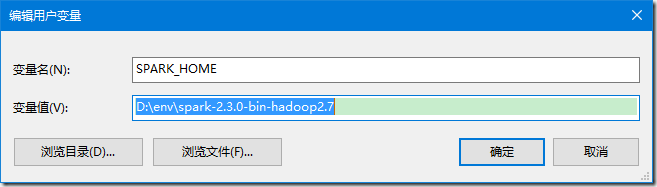

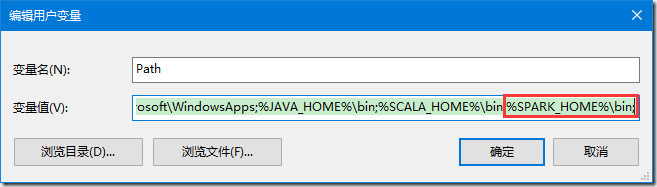
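The two-stage unpacking above (gzip layer, then tar layer) can also be done in one step from a script. The following is a minimal sketch using Python's standard `tarfile` module; the function name `extract_tgz` and the paths `C:\Downloads` and `C:\spark` are assumptions for illustration, not something the original setup requires.

```python
import tarfile
from pathlib import Path
from typing import List


def extract_tgz(archive: Path, dest: Path) -> List[str]:
    """Extract a .tgz (gzip-compressed tar) archive into dest in one step.

    Returns the sorted names of the top-level entries in the archive.
    """
    dest.mkdir(parents=True, exist_ok=True)
    with tarfile.open(archive, mode="r:gz") as tar:
        # Collect the first path component of every member.
        top_level = sorted({m.name.split("/")[0] for m in tar.getmembers()})
        # Only extract archives you trust: member paths are used as given.
        tar.extractall(dest)
    return top_level


if __name__ == "__main__":
    # Hypothetical locations; adjust to where the download was saved.
    archive = Path(r"C:\Downloads\spark-2.3.0-bin-hadoop2.7.tgz")
    if archive.exists():
        print(extract_tgz(archive, Path(r"C:\spark")))
```

For the Spark 2.3.0 download, the single top-level entry should be the spark-2.3.0-bin-hadoop2.7 folder used in the next step.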

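>>> Extract the spark-2.3.0-bin-hadoop2.7 folder, add a SPARK_HOME environment variable set to the extraction path, and append its bin directory (%SPARK_HOME%\bin) to the PATH environment variable so that spark-shell can be found.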
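Before opening a new cmd window, it can help to confirm that the two variables are set the way this step describes. Below is a small sketch of such a check; `check_spark_env` is a hypothetical helper, and it uses `ntpath` so that paths are compared with Windows rules (case-insensitive, `;`-separated PATH).

```python
import ntpath
import os
from typing import List, Mapping


def check_spark_env(env: Mapping[str, str]) -> List[str]:
    """Return a list of problems found in the given environment mapping."""
    problems: List[str] = []
    spark_home = env.get("SPARK_HOME", "")
    if not spark_home:
        problems.append("SPARK_HOME is not set")
        return problems

    # spark-shell lives in the bin subdirectory of the Spark folder.
    spark_bin = ntpath.join(spark_home, "bin")
    expected = ntpath.normcase(ntpath.normpath(spark_bin))

    # Normalise every PATH entry the same way before comparing.
    entries = [
        ntpath.normcase(ntpath.normpath(p))
        for p in env.get("PATH", "").split(";")
        if p
    ]
    if expected not in entries:
        problems.append(f"{spark_bin} is not on PATH")
    return problems


if __name__ == "__main__":
    # Check the environment of the current process.
    for problem in check_spark_env(os.environ):
        print(problem)
```

An empty result means both settings look consistent; it does not prove that Spark itself starts correctly.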

>>> Running the "spark-shell" command in a cmd window at this point produces the following output:

C:\Users\Memento>spark-shell

2018-06-06 23:39:36 ERROR Shell:397 - Failed to locate the winutils binary in the hadoop binary path

java.io.IOException: Could not locate executable null\bin\winutils.exe in the Hadoop binaries.

at org.apache.hadoop.util.Shell.getQualifiedBinPath(Shell.java:379)

at org.apache.hadoop.util.Shell.getWinUtilsPath(Shell.java:394)

at org.apache.hadoop.util.Shell.<clinit>(Shell.java:387)

at org.apache.hadoop.util.StringUtils.<clinit>(StringUtils.java:80)

at org.apache.hadoop.security.SecurityUtil.getAuthenticationMethod(SecurityUtil.java:611)

at org.apache.hadoop.security.UserGroupInformation.initialize(UserGroupInformation.java:273)

at org.apache.hadoop.security.UserGroupInformation.ensureInitialized(UserGroupInformation.java:261)

at org.apache.hadoop.security.UserGroupInformation.loginUserFromSubject(UserGroupInformation.java:791)

at org.apache.hadoop.security.UserGroupInformation.getLoginUser(UserGroupInformation.java:761)

at org.apache.hadoop.security.UserGroupInformation.getCurrentUser(UserGroupInformation.java:634)

at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$1.apply(Utils.scala:2464)

at org.apache.spark.util.Utils$$anonfun$getCurrentUserName$1.apply(Utils.scala:2464)

at scala.Option.getOrElse(Option.scala:121)

at org.apache.spark.util.Utils$.getCurrentUserName(Utils.scala:2464)

at org.apache.spark.SecurityManager.<init>(SecurityManager.scala:222)

at org.apache.spark.deploy.SparkSubmit$.secMgr$lzycompute$1(SparkSubmit.scala:393)

at org.apache.spark.deploy.SparkSubmit$.org$apache$spark$deploy$SparkSubmit$$secMgr$1(SparkSubmit.scala:393)

at org.apache.spark.deploy.SparkSubmit$$anonfun$prepareSubmitEnvironment$7.apply(SparkSubmit.scala:401)

at org.apache.spark.deploy.SparkSubmit$$anonfun$prepareSubmitEnvironment$7.apply(SparkSubmit.scala:401)

at scala.Option.map(Option.scala:146)

at org.apache.spark.deploy.SparkSubmit$.prepareSubmitEnvironment(SparkSubmit.scala:400)

at org.apache.spark.deploy.SparkSubmit$.submit(SparkSubmit.scala:170)

at org.apache.spark.deploy.SparkSubmit$.main(SparkSubmit.scala:136)

at org.apache.spark.deploy.SparkSubmit.main(SparkSubmit.scala)

2018-06-06 23:39:36 WARN NativeCodeLoader:62 - Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Setting default log level to "WARN".

To adjust logging level use sc.setLogLevel(newLevel). For SparkR, use setLogLevel(newLevel).

Spark context Web UI available at http://Memento-PC:4040

Spark context available as 'sc' (master = local[*], app id = local-1528299586814).

Spark session available as 'spark'.

Welcome to

          ____              __
         / __/__  ___ _____/ /__
        _\ \/ _ \/ _ `/ __/  '_/
       /___/ .__/\_,_/_/ /_/\_\   version 2.3.0
          /_/

Using Scala version 2.11.8 (Java HotSpot(TM) 64-Bit Server VM, Java 1.8.0_151)

Type in expressions to have them evaluated.

Type :help for more information.

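The error says that winutils cannot be located under the Hadoop path. The "null" at the start of that path means no Hadoop home directory is configured, so the next step is to set up Hadoop.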
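To make the "null\bin\winutils.exe" message more concrete, here is a rough sketch of the lookup it reflects: the expected location is the Hadoop home directory plus bin\winutils.exe, and with no HADOOP_HOME there is nothing to prepend. This is an illustration in Python, not Hadoop's actual code; `winutils_path` is a hypothetical helper, `C:\hadoop-2.7.5` is an assumed install location, and Hadoop can also take the directory from the hadoop.home.dir Java system property, which this sketch ignores.

```python
import ntpath
import os
from typing import Mapping, Optional


def winutils_path(env: Mapping[str, str]) -> Optional[str]:
    """Return where winutils.exe is expected, or None if HADOOP_HOME is unset."""
    hadoop_home = env.get("HADOOP_HOME")
    if not hadoop_home:
        # This is the situation behind the "null\bin\winutils.exe" error.
        return None
    # ntpath applies Windows path rules regardless of the host platform.
    return ntpath.join(hadoop_home, "bin", "winutils.exe")


if __name__ == "__main__":
    expected = winutils_path(os.environ)
    if expected is None:
        print("HADOOP_HOME is not set")
    elif not os.path.isfile(expected):
        print("winutils.exe not found at " + expected)
    else:
        print("winutils.exe found at " + expected)
```

With HADOOP_HOME set to the assumed `C:\hadoop-2.7.5`, the helper returns `C:\hadoop-2.7.5\bin\winutils.exe`, which is the file the Hadoop setup needs to provide.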

>>> For details, see: Setting Up a Hadoop 2.7.5 Environment on Windows

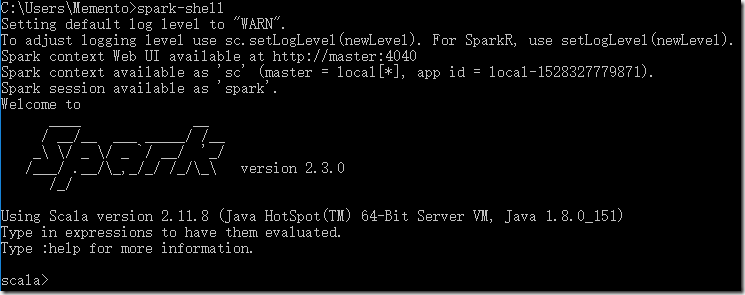

>>> After that, run the "spark-shell" command again and it should start without the winutils error.

By. Memento