1. Using WordCount as an example

    package org.apache.spark.examples

    import org.apache.spark.{SparkConf, SparkContext}

    // Print the current thread's stack trace (StackTrace) from any method
    object word1 {
      def printStackTrace(cls: Class[_]): Unit = {
        // Frame 0 is printStackTrace itself; the caller starts at frame 1
        val elements = (new Throwable).getStackTrace
        System.out.println("Stack for " + cls.getName + ":")
        // `until` (not `to`) stops at the last valid index, avoiding ArrayIndexOutOfBoundsException
        for (i <- 0 until elements.length) {
          System.out.println(elements(i).getClassName + "." + elements(i).getMethodName +
            "(" + elements(i).getFileName + ":" + elements(i).getLineNumber + ")")
        }
      }

      def main(args: Array[String]): Unit = {
        val sparkConf = new SparkConf().setAppName("WORD1").setMaster("local")
        val sc = new SparkContext(sparkConf)
        // Backslashes in a Windows path must be escaped (or replaced with forward slashes)
        val lines = sc.textFile("E:\\spark_file\\wordcount.txt")
        val word = lines.flatMap { x => x.split(" ") }
        val pairs = word.map(s => (s, 1))
        val wordcnt = pairs.reduceByKey { _ + _ }
        wordcnt.foreach(wordcount => println(wordcount._1 + " appeared " + wordcount._2 + " time(s)"))
        // Pass this object's runtime class; no Test class is defined in this file
        printStackTrace(this.getClass)
        sc.stop()
      }
    }
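To check what the pipeline computes without a Spark installation, the same steps can be sketched with plain Scala collections. This is a minimal illustration under stated assumptions, not the author's code: `WordCountSketch`, `countWords`, and `stackFrames` are hypothetical names, and `groupBy` followed by a per-key sum stands in for Spark's `reduceByKey`. `stackFrames` drops frame 0 so the helper does not report itself.

```scala
// Hypothetical Spark-free sketch of the word-count steps above, using plain Scala collections.
object WordCountSketch {
  // lines -> words (like flatMap) -> (word, 1) pairs (like map)
  // -> per-word sums (groupBy + sum, standing in for reduceByKey)
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))
      .map(w => (w, 1))
      .groupBy(_._1)
      .map { case (w, pairs) => (w, pairs.map(_._2).sum) }

  // Formats the caller's stack as "Class.method(File:line)" strings,
  // skipping frame 0, which is this helper itself.
  def stackFrames(): Seq[String] =
    (new Throwable).getStackTrace.toSeq.drop(1).map { e =>
      s"${e.getClassName}.${e.getMethodName}(${e.getFileName}:${e.getLineNumber})"
    }

  def main(args: Array[String]): Unit = {
    countWords(Seq("hello spark", "hello scala")).foreach { case (w, n) =>
      println(s"$w appeared $n time(s)")
    }
    stackFrames().foreach(println)
  }
}
```

Unlike the Spark version, everything here runs in a single JVM thread, so the printed stack shows only the caller chain of `main`, not Spark's executor threads.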

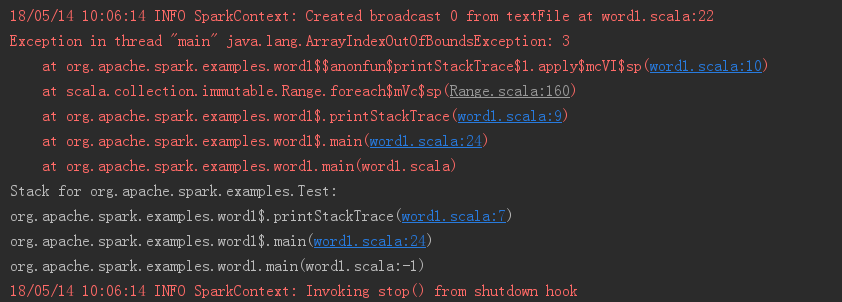

2. Output: