1. Using WordCount as an example

```scala
package org.apache.spark.examples

import org.apache.spark.{SparkConf, SparkContext}

object word {
  // SLF4J logger for this object
  private val logger = org.slf4j.LoggerFactory.getLogger(this.getClass)

  def main(args: Array[String]): Unit = {
    // Run locally with a single worker thread
    val conf = new SparkConf().setAppName("WordCount").setMaster("local")
    val sc = new SparkContext(conf)

    // One message per log level, plus a plain println, to compare in the console
    logger.info("this is textFile1")
    println("this is textFile2print")
    logger.error("this is textFile3err")
    logger.warn("this is textFile4warn")
    logger.debug("this is textFile5debug")

    // Read the input file with minPartitions = 1 (backslashes escaped for the Windows path)
    val lines = sc.textFile("E:\\spark_file\\wordcount.txt", 1)
    // Split each line into words
    val words = lines.flatMap { line => line.split(" ") }
    // Pair each word with an initial count of 1
    val pairs = words.map { word => (word, 1) }
    // Sum the counts for each distinct word
    val wordCounts = pairs.reduceByKey { _ + _ }
    wordCounts.foreach(wordCount => println(wordCount._1 + " appeared " + wordCount._2 + " times."))

    sc.stop()
  }
}
```
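This minimal sketch runs the same flatMap → map → reduce pipeline on plain Scala collections, so you can see what the three transformations compute without starting Spark. The object `LocalWordCount`, the method `countWords`, and the sample input are hypothetical. `groupMapReduce` requires Scala 2.13 or later and stands in for `reduceByKey`, which exists only on Spark pair RDDs.

```scala
// Hypothetical Spark-free illustration of the WordCount pipeline (Scala 2.13+).
object LocalWordCount {
  // Mirrors lines.flatMap(split) -> map((word, 1)) -> reduceByKey(_ + _)
  def countWords(lines: Seq[String]): Map[String, Int] =
    lines
      .flatMap(_.split(" "))              // one line -> many words
      .map(word => (word, 1))             // word -> (word, 1)
      .groupMapReduce(_._1)(_._2)(_ + _)  // sum the 1s per distinct word

  def main(args: Array[String]): Unit = {
    // Hypothetical sample input standing in for wordcount.txt
    val sample = Seq("hello spark", "hello world")
    countWords(sample).foreach { case (w, n) => println(s"$w appeared $n times.") }
  }
}
```

The iteration order of the resulting `Map` is not guaranteed. The same is true of the `foreach` output order in the Spark version.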

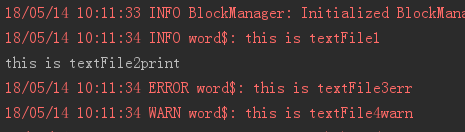

2. Console output: