饿了么爬虫

转自

抓取思路

结果: 最终实现通过经纬度、商家、关键字等抓取数据

1. 使用charles进行抓包

2. Chrom调试

3. 找出商品请求api

4. 破解sign 和 其它请求参数

5. 使用代码请求单个api是否成功

6. 使用queue进行商店, 分类, 商品系统化抓取

7. 使用协程并发抓取

8. 数据清理, 存储到mongo

项目目录

. ├── conf │ ├── AuthConfig.py │ ├── __pycache__ │ │ └── AuthConfig.cpython-36.pyc │ ├── city.py │ ├── log.conf │ ├── project.conf │ └── untitled.py ├── log │ └── eleme_test.log ├── spider │ ├── REMEAD.md │ ├── __pycache__ │ │ └── auto_crawl.cpython-36.pyc │ ├── auto_crawl.py │ ├── eleme.py │ ├── foods.json │ ├── jlf.xlsx │ ├── new_eleme.py │ └── shops.json ├── tools │ ├── __pycache__ │ │ ├── cookie_manager.cpython-36.pyc │ │ ├── logger.cpython-36.pyc │ │ ├── proxy_manager.cpython-36.pyc │ │ ├── tools.cpython-36.pyc │ │ └── url_format.cpython-36.pyc │ ├── cookie_manager.py │ ├── logger.py │ ├── proxy_manager.py │ ├── sign.js │ ├── tools.py │ └── url_format.py └── utils ├── __init__.py ├── __pycache__ │ ├── __init__.cpython-36.pyc │ ├── download.cpython-36.pyc │ ├── mongo.cpython-36.pyc │ ├── redis_sql.cpython-36.pyc │ └── tools.cpython-36.pyc ├── download.py ├── foods.json ├── mongo.py ├── redis_sql.py ├── shop.json └── tools.py 9 directories, 38 files

商店详情部分代码

def crawl_shop_info_handler(self, new_shop): """ Crawl shop info, contain phone and categorys :param new_shop: shop info :type new_shop: dict :return: None :rtype: NoneType """ city_info = new_shop["cityInfo"] # self.mongo_db.upsert("shops", new_shop) self.mark_job_status("shops", new_shop, 1) if "shopInfo" not in new_shop: shop_info = { "storeId": new_shop["storeId"], "wid": new_shop["wid"], "eleId": new_shop["_id"], "cityId": city_info["_id"] } res = self._get_request_data("shop", city_info, **shop_info) shop_data = res.get("data", {}).get("data", {}) new_shop["shopInfo"] = shop_data.get("shopInfo", {}) if "shopCategorys" not in new_shop: cate_data = { "storeId": new_shop["storeId"] } res = self._get_request_data("cates", city_info, **cate_data) cate_ids = {} cate_lists = res.get("data", {}).get("data", []) for cate in cate_lists: one_data = self.cate_id_join(cate) for two_level in cate.get("detail", []): status = {"status": 0} two_data = self.cate_id_join(two_level) cate_info = self.cate_joint_symbol.join([one_data, two_data]) cate_ids[cate_info] = status new_shop["shopCategorys"] = cate_ids new_shop["update_time_stamp"] = int(time.time() * 1000) new_shop["status"] = 2 self.mongo_db.upsert("shops", new_shop) self.logger.debug("Get shop:%s info success, cate total: %s" % (new_shop["_id"], len(cate_ids)))

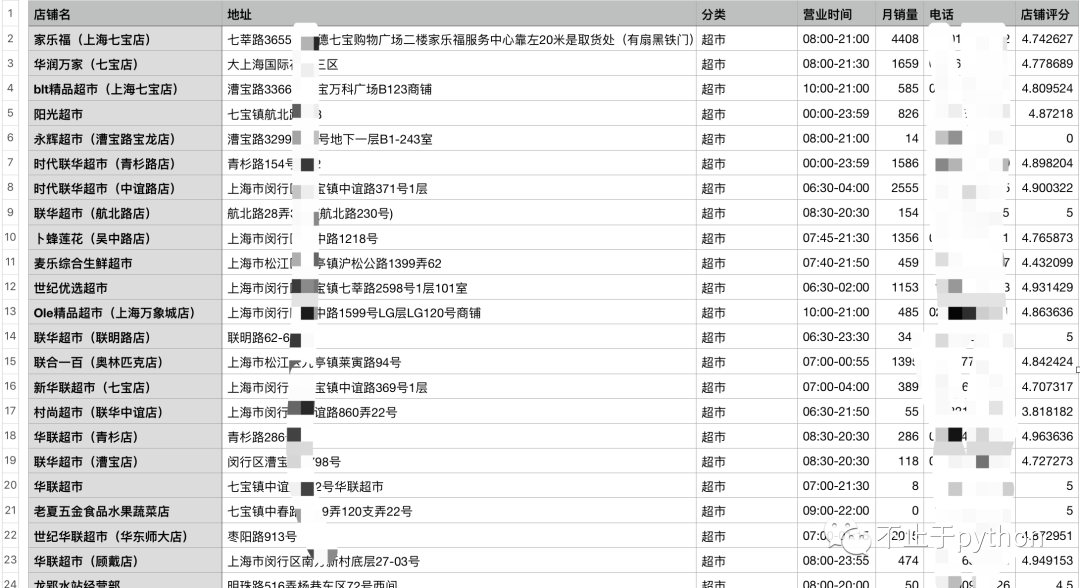

数据展示

商品信息

商店信息