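OpenMP is a parallel programming scheme for multiprocessor, shared-memory programs. It offers a high-level abstraction for parallel programming: by adding simple directives to a program, you can write efficient parallel code without worrying about the details of the parallel implementation, which lowers the difficulty and complexity of parallel programming. Because of this simplicity, however, OpenMP is not well suited to cases that need complex synchronization and mutual exclusion between threads.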
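As a minimal sketch of what "adding a simple directive" looks like, the function below sums a vector with a single `#pragma omp parallel for` line. The name `parallelSum` is made up for illustration and is not part of the stitching program. When OpenMP support is switched off in the compiler, the pragma is ignored and the loop runs serially with the same result.

```cpp
#include <cassert>
#include <climits>
#include <cstddef>
#include <vector>

// Hypothetical helper: returns the sum of all elements of `values`.
long long parallelSum(const std::vector<int> &values)
{
    // The loop index is a signed int, which older OpenMP versions require.
    assert(values.size() <= static_cast<std::size_t>(INT_MAX));
    const int n = static_cast<int>(values.size());

    long long total = 0;
    // Each thread accumulates a private partial sum;
    // reduction(+) combines the partial sums when the loop ends.
#pragma omp parallel for reduction(+ : total)
    for (int i = 0; i < n; i++)
    {
        total += values[i];
    }
    return total;
}
```

Calling `parallelSum` on a vector of one thousand 2s should give 2000 whether or not OpenMP is enabled; only the running time differs.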

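Image stitching in OpenCV based on SIFT or SURF features has to run feature detection and feature description on each of the two or more input images, and then match the feature points, transform the images, and so on. Detecting and describing the features of the different images is the most time-consuming part of the whole pipeline, and the work for each image is independent of the others, so it can be accelerated with OpenMP.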
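The independence described above is what makes the work easy to split: the result for each image depends only on that image. The sketch below shows the pattern with plain vectors standing in for images. `describeImage` and `describeAll` are hypothetical names, and the pixel sum is only a placeholder for the real detection and description step.

```cpp
#include <cassert>
#include <climits>
#include <cstddef>
#include <vector>

// Placeholder for expensive per-image work (here: a plain pixel sum).
long long describeImage(const std::vector<int> &pixels)
{
    long long sum = 0;
    for (std::size_t k = 0; k < pixels.size(); k++)
    {
        sum += pixels[k];
    }
    return sum;
}

// Processes every image; iteration i reads only images[i] and writes only results[i],
// so the iterations can run on different threads without any locking.
std::vector<long long> describeAll(const std::vector<std::vector<int> > &images)
{
    assert(images.size() <= static_cast<std::size_t>(INT_MAX));
    const int n = static_cast<int>(images.size());
    std::vector<long long> results(images.size());

#pragma omp parallel for
    for (int i = 0; i < n; i++)
    {
        results[i] = describeImage(images[i]);
    }
    return results;
}
```

The same shape applies to any number of images, as long as no iteration touches data owned by another.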

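Below is the original SIFT image stitching program without OpenMP acceleration (omp.h is included only for the omp_get_wtime timer):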

#include <iostream>

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/nonfree.hpp"

#include "opencv2/legacy/legacy.hpp"

#include "omp.h"

using namespace cv;

// Compute where a point from the original image lands in the target image after the matrix transform

Point2f getTransformPoint(const Point2f originalPoint, const Mat &transformMaxtri);

int main(int argc, char *argv[])

{

double startTime = omp_get_wtime(); // omp_get_wtime() returns double; float would lose precision

Mat image01 = imread("Test01.jpg");

Mat image02 = imread("Test02.jpg");

imshow("Input image 1", image01);

imshow("Input image 2", image02);

// Convert to grayscale (imread loads color images in BGR order)

Mat image1, image2;

cvtColor(image01, image1, CV_BGR2GRAY);

cvtColor(image02, image2, CV_BGR2GRAY);

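// Detect feature points

SiftFeatureDetector siftDetector(800); // nfeatures: keep at most the 800 best features (SIFT has no Hessian threshold; that is a SURF parameter)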

vector<KeyPoint> keyPoint1, keyPoint2;

siftDetector.detect(image1, keyPoint1);

siftDetector.detect(image2, keyPoint2);

// Compute descriptors for the feature points, in preparation for the matching below

SiftDescriptorExtractor siftDescriptor;

Mat imageDesc1, imageDesc2;

siftDescriptor.compute(image1, keyPoint1, imageDesc1);

siftDescriptor.compute(image2, keyPoint2, imageDesc2);

double endTime = omp_get_wtime();

std::cout << "Elapsed time without OpenMP: " << endTime - startTime << " s" << std::endl;

// Match the feature points and pick the best pairs

FlannBasedMatcher matcher;

vector<DMatch> matchePoints;

matcher.match(imageDesc1, imageDesc2, matchePoints, Mat());

sort(matchePoints.begin(), matchePoints.end()); // sort matches by ascending distance (best first)

// Take the N best matches (assumes at least 10 matches were found)

vector<Point2f> imagePoints1, imagePoints2;

for (int i = 0; i < 10; i++)

{

imagePoints1.push_back(keyPoint1[matchePoints[i].queryIdx].pt);

imagePoints2.push_back(keyPoint2[matchePoints[i].trainIdx].pt);

}

// Estimate the 3x3 projective mapping (homography) from image 1 to image 2

Mat homo = findHomography(imagePoints1, imagePoints2, CV_RANSAC);

Mat adjustMat = (Mat_<double>(3, 3) << 1.0, 0, image01.cols, 0, 1.0, 0, 0, 0, 1.0);

Mat adjustHomo = adjustMat*homo;

// Locate the strongest match in the original image and in the transformed image; it positions the stitching seam

Point2f originalLinkPoint, targetLinkPoint, basedImagePoint;

originalLinkPoint = keyPoint1[matchePoints[0].queryIdx].pt;

targetLinkPoint = getTransformPoint(originalLinkPoint, adjustHomo);

basedImagePoint = keyPoint2[matchePoints[0].trainIdx].pt;

// Image registration

Mat imageTransform1;

warpPerspective(image01, imageTransform1, adjustMat*homo, Size(image02.cols + image01.cols + 110, image02.rows));

// Blend the overlap region to the left of the strongest match with a weighted sum, so the transition is smooth and has no abrupt seam

Mat image1Overlap, image2Overlap; // overlapping parts of image 1 and image 2

image1Overlap = imageTransform1(Rect(Point(targetLinkPoint.x - basedImagePoint.x, 0), Point(targetLinkPoint.x, image02.rows)));

image2Overlap = image02(Rect(0, 0, image1Overlap.cols, image1Overlap.rows));

Mat image1ROICopy = image1Overlap.clone(); // keep a copy of image 1's overlap region

for (int i = 0; i < image1Overlap.rows; i++)

{

for (int j = 0; j < image1Overlap.cols; j++)

{

double weight;

weight = (double)j / image1Overlap.cols; // blending weight that grows with the horizontal position

image1Overlap.at<Vec3b>(i, j)[0] = (1 - weight)*image1ROICopy.at<Vec3b>(i, j)[0] + weight*image2Overlap.at<Vec3b>(i, j)[0];

image1Overlap.at<Vec3b>(i, j)[1] = (1 - weight)*image1ROICopy.at<Vec3b>(i, j)[1] + weight*image2Overlap.at<Vec3b>(i, j)[1];

image1Overlap.at<Vec3b>(i, j)[2] = (1 - weight)*image1ROICopy.at<Vec3b>(i, j)[2] + weight*image2Overlap.at<Vec3b>(i, j)[2];

}

}

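Mat ROIMat = image02(Rect(Point(image1Overlap.cols, 0), Point(image02.cols, image02.rows))); // the part of image 2 outside the overlap

ROIMat.copyTo(Mat(imageTransform1, Rect(targetLinkPoint.x, 0, ROIMat.cols, image02.rows))); // append the non-overlapping part directly

namedWindow("Stitched result", 0);

imshow("Stitched result", imageTransform1);

imwrite("D:/stitched_result.jpg", imageTransform1); // forward slash: a lone backslash in a string literal starts an escape sequence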

waitKey();

return 0;

}

// Compute where a point from the original image lands in the target image after the matrix transform

Point2f getTransformPoint(const Point2f originalPoint, const Mat &transformMaxtri)

{

Mat originelP, targetP;

originelP = (Mat_<double>(3, 1) << originalPoint.x, originalPoint.y, 1.0);

targetP = transformMaxtri*originelP;

float x = targetP.at<double>(0, 0) / targetP.at<double>(2, 0);

float y = targetP.at<double>(1, 0) / targetP.at<double>(2, 0);

return Point2f(x, y);

}

Image 1:

Image 2:

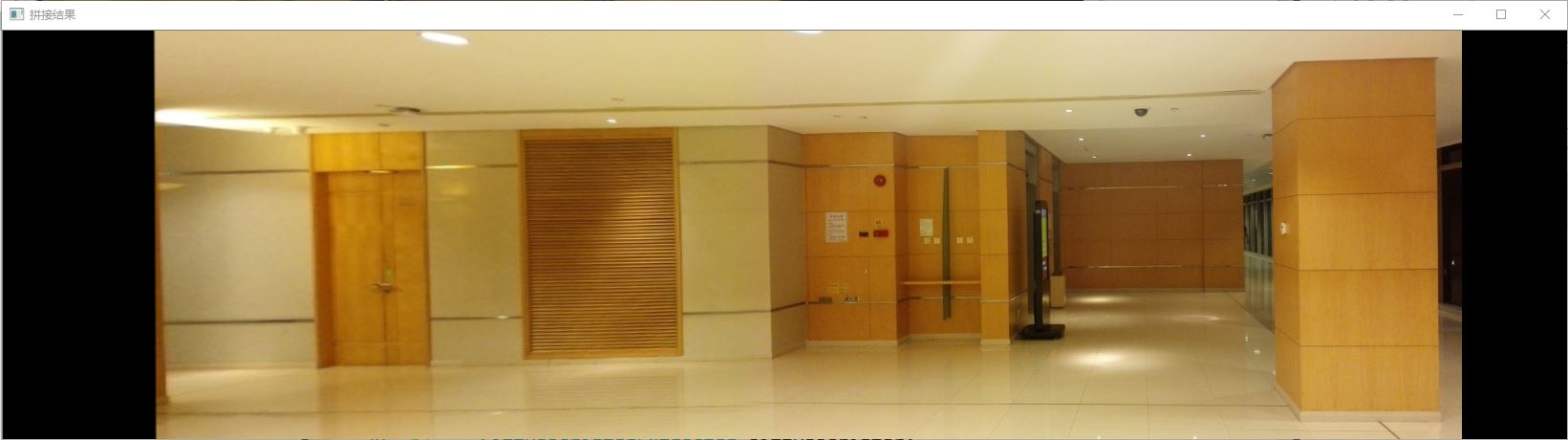

Stitched result:

On my machine, the version without OpenMP takes 4.7 s on average.

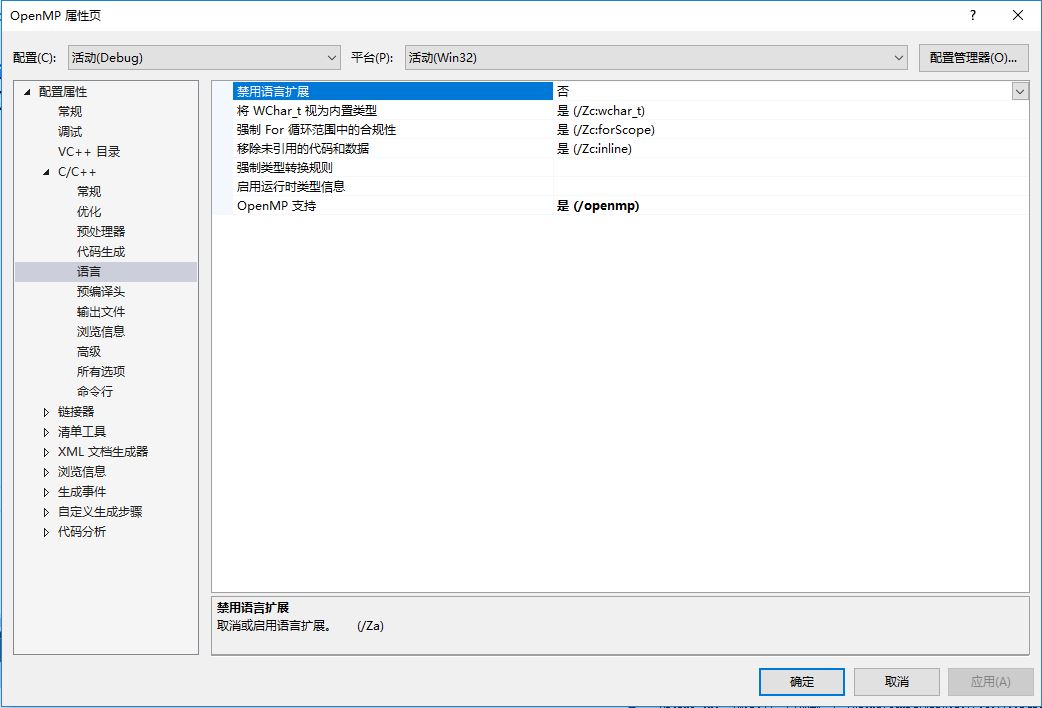

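Enabling OpenMP is also simple, because Visual Studio has built-in support for it. Right-click the project and go to Properties -> Configuration Properties -> C/C++ -> Language, then set OpenMP Support to Yes (this corresponds to the /openmp compiler option).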
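One quick way to confirm that the switch took effect is to test the `_OPENMP` macro, which a compiler defines only when its OpenMP support is turned on. The helper names `openMPEnabled` and `maxOpenMPThreads` below are hypothetical; this is a sketch, not part of the stitching program.

```cpp
#include <cassert>
#ifdef _OPENMP
#include <omp.h>
#endif

// True when this translation unit was compiled with OpenMP support enabled.
bool openMPEnabled()
{
#ifdef _OPENMP
    return true;
#else
    return false;
#endif
}

// Upper bound on the number of threads a parallel region may use;
// falls back to 1 when OpenMP is disabled.
int maxOpenMPThreads()
{
#ifdef _OPENMP
    const int threads = omp_get_max_threads();
#else
    const int threads = 1;
#endif
    assert(threads >= 1);
    return threads;
}
```

If `openMPEnabled()` returns false, the `#pragma omp` lines in the listing are ignored and the program runs on a single thread.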

After that, include the OpenMP header "omp.h" in the program:

#include <iostream>

#include "opencv2/highgui/highgui.hpp"

#include "opencv2/nonfree/nonfree.hpp"

#include "opencv2/legacy/legacy.hpp"

#include "omp.h"

using namespace cv;

// Compute where a point from the original image lands in the target image after the matrix transform

Point2f getTransformPoint(const Point2f originalPoint, const Mat &transformMaxtri);

int main(int argc, char *argv[])

{

double startTime = omp_get_wtime(); // omp_get_wtime() returns double; float would lose precision

Mat image01, image02;

Mat image1, image2;

vector<KeyPoint> keyPoint1, keyPoint2;

Mat imageDesc1, imageDesc2;

SiftFeatureDetector siftDetector(800); // nfeatures: keep at most the 800 best features (SIFT has no Hessian threshold; that is a SURF parameter)

SiftDescriptorExtractor siftDescriptor;

// Use the OpenMP sections directive so that the two blocks below can run on different threads

#pragma omp parallel sections

{

#pragma omp section

{

image01 = imread("Test01.jpg");

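imshow("Input image 1", image01); // note: GUI calls from worker threads may be unreliable on some platforms; if so, move imshow after the parallel region

// Convert to grayscale (imread loads color images in BGR order)

cvtColor(image01, image1, CV_BGR2GRAY);

// Detect feature points

siftDetector.detect(image1, keyPoint1);

// Compute descriptors for the feature points, in preparation for the matching below

siftDescriptor.compute(image1, keyPoint1, imageDesc1);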

}

#pragma omp section

{

image02 = imread("Test02.jpg");

imshow("Input image 2", image02);

cvtColor(image02, image2, CV_BGR2GRAY);

siftDetector.detect(image2, keyPoint2);

siftDescriptor.compute(image2, keyPoint2, imageDesc2);

}

}

double endTime = omp_get_wtime();

std::cout << "Elapsed time with OpenMP: " << endTime - startTime << " s" << std::endl;

// Match the feature points and pick the best pairs

FlannBasedMatcher matcher;

vector<DMatch> matchePoints;

matcher.match(imageDesc1, imageDesc2, matchePoints, Mat());

sort(matchePoints.begin(), matchePoints.end()); // sort matches by ascending distance (best first)

// Take the N best matches (assumes at least 10 matches were found)

vector<Point2f> imagePoints1, imagePoints2;

for (int i = 0; i < 10; i++)

{

imagePoints1.push_back(keyPoint1[matchePoints[i].queryIdx].pt);

imagePoints2.push_back(keyPoint2[matchePoints[i].trainIdx].pt);

}

// Estimate the 3x3 projective mapping (homography) from image 1 to image 2

Mat homo = findHomography(imagePoints1, imagePoints2, CV_RANSAC);

Mat adjustMat = (Mat_<double>(3, 3) << 1.0, 0, image01.cols, 0, 1.0, 0, 0, 0, 1.0);

Mat adjustHomo = adjustMat*homo;

// Locate the strongest match in the original image and in the transformed image; it positions the stitching seam

Point2f originalLinkPoint, targetLinkPoint, basedImagePoint;

originalLinkPoint = keyPoint1[matchePoints[0].queryIdx].pt;

targetLinkPoint = getTransformPoint(originalLinkPoint, adjustHomo);

basedImagePoint = keyPoint2[matchePoints[0].trainIdx].pt;

// Image registration

Mat imageTransform1;

warpPerspective(image01, imageTransform1, adjustMat*homo, Size(image02.cols + image01.cols + 110, image02.rows));

// Blend the overlap region to the left of the strongest match with a weighted sum, so the transition is smooth and has no abrupt seam

Mat image1Overlap, image2Overlap; // overlapping parts of image 1 and image 2

image1Overlap = imageTransform1(Rect(Point(targetLinkPoint.x - basedImagePoint.x, 0), Point(targetLinkPoint.x, image02.rows)));

image2Overlap = image02(Rect(0, 0, image1Overlap.cols, image1Overlap.rows));

Mat image1ROICopy = image1Overlap.clone(); // keep a copy of image 1's overlap region

for (int i = 0; i < image1Overlap.rows; i++)

{

for (int j = 0; j < image1Overlap.cols; j++)

{

double weight;

weight = (double)j / image1Overlap.cols; // blending weight that grows with the horizontal position

image1Overlap.at<Vec3b>(i, j)[0] = (1 - weight)*image1ROICopy.at<Vec3b>(i, j)[0] + weight*image2Overlap.at<Vec3b>(i, j)[0];

image1Overlap.at<Vec3b>(i, j)[1] = (1 - weight)*image1ROICopy.at<Vec3b>(i, j)[1] + weight*image2Overlap.at<Vec3b>(i, j)[1];

image1Overlap.at<Vec3b>(i, j)[2] = (1 - weight)*image1ROICopy.at<Vec3b>(i, j)[2] + weight*image2Overlap.at<Vec3b>(i, j)[2];

}

}

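Mat ROIMat = image02(Rect(Point(image1Overlap.cols, 0), Point(image02.cols, image02.rows))); // the part of image 2 outside the overlap

ROIMat.copyTo(Mat(imageTransform1, Rect(targetLinkPoint.x, 0, ROIMat.cols, image02.rows))); // append the non-overlapping part directly

namedWindow("Stitched result", 0);

imshow("Stitched result", imageTransform1);

imwrite("D:/stitched_result.jpg", imageTransform1); // forward slash: a lone backslash in a string literal starts an escape sequence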

waitKey();

return 0;

}

// Compute where a point from the original image lands in the target image after the matrix transform

Point2f getTransformPoint(const Point2f originalPoint, const Mat &transformMaxtri)

{

Mat originelP, targetP;

originelP = (Mat_<double>(3, 1) << originalPoint.x, originalPoint.y, 1.0);

targetP = transformMaxtri*originelP;

float x = targetP.at<double>(0, 0) / targetP.at<double>(2, 0);

float y = targetP.at<double>(1, 0) / targetP.at<double>(2, 0);

return Point2f(x, y);

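}

In OpenMP, the for directive distributes the iterations of a loop among threads, while the sections directive distributes non-iterative work: each block introduced by #pragma omp section is executed exactly once by one thread of the team. In the program above, this amounts to two threads each running feature detection and description for one image, provided at least two threads are available. With OpenMP the average time drops to 2.5 s, down from 4.7 s, so the timed stage runs almost twice as fast.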
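To make the distinction concrete, here is a small sketch that uses both directives on plain arrays instead of OpenCV types. `sumTwoArrays` and `blendRows` are hypothetical helpers. The second one mirrors the linear weighting of the overlap loop in the listing, which would itself be a candidate for the for directive because every row is computed independently.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// sections: two unrelated tasks; each section runs exactly once on some thread.
void sumTwoArrays(const std::vector<int> &a, const std::vector<int> &b,
                  long long &sumA, long long &sumB)
{
#pragma omp parallel sections
    {
#pragma omp section
        {
            long long local = 0;
            for (std::size_t i = 0; i < a.size(); i++) local += a[i];
            sumA = local; // only this section writes sumA
        }
#pragma omp section
        {
            long long local = 0;
            for (std::size_t i = 0; i < b.size(); i++) local += b[i];
            sumB = local; // only this section writes sumB
        }
    }
}

// for: the rows of one loop are divided among the threads.
// left[i][j] becomes a weighted mix of left and right, as in the overlap loop.
void blendRows(std::vector<std::vector<double> > &left,
               const std::vector<std::vector<double> > &right)
{
    assert(left.size() == right.size());
    const int rows = static_cast<int>(left.size());

#pragma omp parallel for
    for (int i = 0; i < rows; i++)
    {
        assert(left[i].size() == right[i].size());
        const int cols = static_cast<int>(left[i].size());
        for (int j = 0; j < cols; j++)
        {
            const double weight = static_cast<double>(j) / cols;
            left[i][j] = (1.0 - weight) * left[i][j] + weight * right[i][j];
        }
    }
}
```

Both functions give the same results with OpenMP disabled, since the pragmas are then ignored and the blocks simply run one after another.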