package org.apache.hadoop.examples; import java.io.IOException; import java.util.StringTokenizer; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.Reducer; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; public class WordCount2{ public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException { Job job = Job.getInstance(); job.setJobName("WordCount2"); job.setJarByClass(WordCount2.class); job.setMapperClass(doMapper.class); job.setReducerClass(doReducer.class); job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class); Path in = new Path("hdfs://localhost:9000/user/hadoop/output2/part-r-00000"); Path out = new Path("hdfs://localhost:9000/user/hadoop/output3"); FileInputFormat.addInputPath(job, in); FileOutputFormat.setOutputPath(job, out); System.exit(job.waitForCompletion(true) ? 0 : 1); } public static class doMapper extends Mapper<Object, Text, Text, NullWritable>{ public static Text word = new Text(); @Override protected void map(Object key, Text value, Context context) throws IOException, InterruptedException { int i=0; String line = value.toString(); String arr[] = line.split(" "); String arrtime[]=arr[1].split("/"); String arrtimedate[]=arrtime[2].split(":"); String arr2[]=arrtimedate[3].split(" "); if(arrtime[1].equals("Nov")){ i=11; } String time=arrtimedate[0]+"-"+i+"-"+arrtime[0]+" "+arrtimedate[1]+":"+arrtimedate[2]+":"+arr2[0]; String[] type=arr[3].split("/"); word.set(arr[0]+" "+time+" "+arrtime[0]+" "+arr[2]+" "+type[0]+" "+type[1]); context.write(word, NullWritable.get()); } } public static class doReducer extends Reducer<Text, NullWritable, Text, NullWritable>{ @Override protected void reduce(Text key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException { context.write(key, NullWritable.get()); } } }

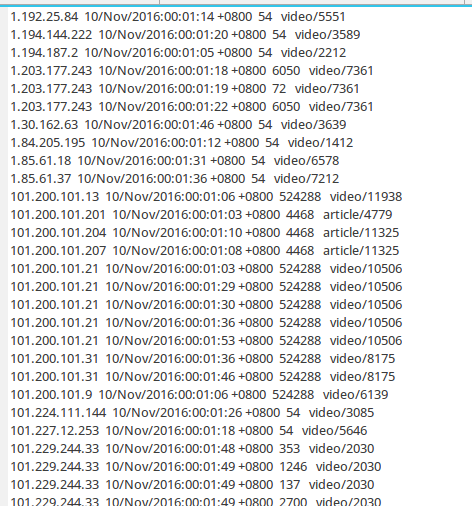

运行结果:

package org.apache.hadoop.examples; import java.io.IOException; import java.util.StringTokenizer; import org.apache.hadoop.fs.Path; import org.apache.hadoop.io.IntWritable; import org.apache.hadoop.io.NullWritable; import org.apache.hadoop.io.Text; import org.apache.hadoop.mapreduce.Job; import org.apache.hadoop.mapreduce.Mapper; import org.apache.hadoop.mapreduce.Reducer; import org.apache.hadoop.mapreduce.lib.input.FileInputFormat; import org.apache.hadoop.mapreduce.lib.output.FileOutputFormat; public class WordCount3{ public static void main(String[] args) throws IOException, ClassNotFoundException, InterruptedException { Job job = Job.getInstance(); job.setJobName("WordCount3"); job.setJarByClass(WordCount3.class); job.setMapperClass(doMapper.class); job.setReducerClass(doReducer.class); job.setOutputKeyClass(Text.class); job.setOutputValueClass(NullWritable.class); Path in = new Path("hdfs://localhost:9000/user/hadoop/output3/part-r-00000"); Path out = new Path("hdfs://localhost:9000/user/hadoop/output3"); FileInputFormat.addInputPath(job, in); FileOutputFormat.setOutputPath(job, out); System.exit(job.waitForCompletion(true) ? 0 : 1); } public static class doMapper extends Mapper<Object, Text, Text, NullWritable>{ public static Text word = new Text(); @Override protected void map(Object key, Text value, Context context) throws IOException, InterruptedException { int i=0; String line = value.toString(); String arr[] = line.split(" "); String arrtime[]=arr[1].split("/"); String arrtimedate[]=arrtime[2].split(":"); String arr2[]=arrtimedate[3].split(" "); if(arrtime[1].equals("Nov")){ i=11; } String time=arrtimedate[0]+"-"+i+"-"+arrtime[0]+" "+arrtimedate[1]+":"+arrtimedate[2]+":"+arr2[0]; String[] type=arr[3].split("/"); word.set(arr[0]+" "+time+" "+arrtime[0]+" "+arr[2]+" "+type[0]+" "+type[1]); context.write(word, NullWritable.get()); } } public static class doReducer extends Reducer<Text, NullWritable, Text, NullWritable>{ @Override protected void reduce(Text key, Iterable<NullWritable> values, Context context) throws IOException, InterruptedException { context.write(key, NullWritable.get()); } } }

运行结果: