1. 第一步初始化imu外参(可以从参数文档中读取,也可以计算出),VINS中处理如下:

# Extrinsic parameter between IMU and Camera. estimate_extrinsic: 0 # 0 Have an accurate extrinsic parameters. We will trust the following imu^R_cam, imu^T_cam, don't change it. # 1 Have an initial guess about extrinsic parameters. We will optimize around your initial guess. # 2 Don't know anything about extrinsic parameters. You don't need to give R,T. We will try to calibrate it. Do some rotation movement at beginning. #If you choose 0 or 1, you should write down the following matrix. #Rotation from camera frame to imu frame, imu^R_cam extrinsicRotation: !!opencv-matrix rows: 3 cols: 3 dt: d data: [0.0148655429818, -0.999880929698, 0.00414029679422, 0.999557249008, 0.0149672133247, 0.025715529948, -0.0257744366974, 0.00375618835797, 0.999660727178] #Translation from camera frame to imu frame, imu^T_cam extrinsicTranslation: !!opencv-matrix rows: 3 cols: 1 dt: d data: [-0.0216401454975,-0.064676986768, 0.00981073058949]

2. 在优化中,每来一帧则对外参更新一次

Matrix3d ric[NUM_OF_CAM];

Vector3d tic[NUM_OF_CAM];

//添加参数块 ceres::LocalParameterization *local_parameterization = new PoseLocalParameterization(); problem.AddParameterBlock(para_Ex_Pose[i], SIZE_POSE, local_parameterization); if (!ESTIMATE_EXTRINSIC) { ROS_DEBUG("fix extinsic param"); problem.SetParameterBlockConstant(para_Ex_Pose[i]); } else ROS_DEBUG("estimate extinsic param");

//添加残差块 if (ESTIMATE_TD) { ProjectionTdFactor *f_td = new ProjectionTdFactor(pts_i, pts_j, it_per_id.feature_per_frame[0].velocity, it_per_frame it_per_id.feature_per_frame[0].cur_td, it_per_frame.cur_td, it_per_id.feature_per_frame[0].uv.y(), it_per_frame.uv.y()); problem.AddResidualBlock(f_td, loss_function, para_Pose[imu_i], para_Pose[imu_j], para_Ex_Pose[0], para_Feature[feature_index], para_Td[0]); } else { ProjectionFactor *f = new ProjectionFactor(pts_i, pts_j); problem.AddResidualBlock(f, loss_function, para_Pose[imu_i], para_Pose[imu_j], para_Ex_Pose[0], para_Feature[feature_inde }

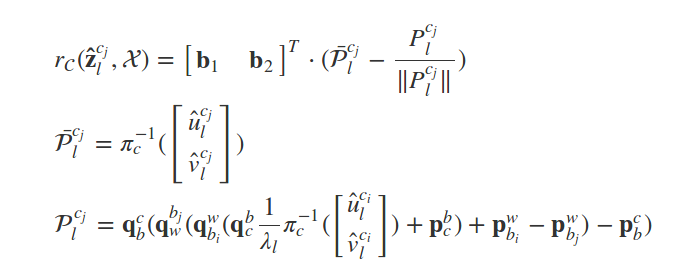

该残差块为视觉模型计算重投影误差 Vision Model

空间上的一个3D特征点会被camera多次观测到,jth 图像帧的camera的残差值被定义为,考虑lth 特征点第一次在ith 图像帧中被观察到恢复的深度信息,投影到第j 帧图像帧的重投影误差。

3. 相机imu外参的重要性

- 外参是相机和imu之间的桥梁,后端优化是以imu的坐标系为优化基准,所以在进行重投影误差时需要使用外参将空间点转换到相机坐标系。

- 初始化的三角化时也会用到外参来求出两个相机之间的旋转平移。

f_manager.triangulate(Ps, &(TIC_TMP[0]), &(RIC[0]));

Eigen::Vector3d pts_camera_i = pts_i / inv_dep_i; Eigen::Vector3d pts_imu_i = qic * pts_camera_i + tic; Eigen::Vector3d pts_w = Qi * pts_imu_i + Pi; Eigen::Vector3d pts_imu_j = Qj.inverse() * (pts_w - Pj); Eigen::Vector3d pts_camera_j = qic.inverse() * (pts_imu_j - tic); Eigen::Map<Eigen::Vector2d> residual(residuals);

qcb(qbjqcb(qbjqcb(qbjqcb(qbj��