最近在工作中,使用CASE WHEN语句的时候,总是出现异常,查看日志是由于数组超界。不知所以然,然后进行了一步步分析,发现这是hive本身的bug,分享出来,一是为了记录,二是想让大家共同看看,欢迎指正。

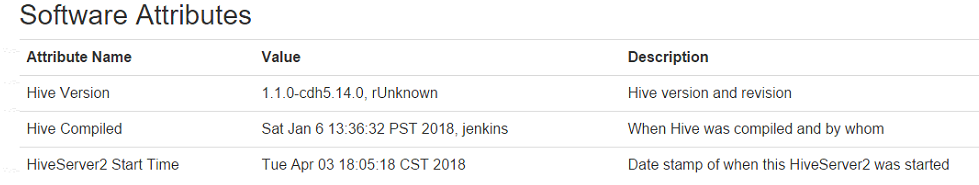

- 使用的是CDH5.14.0版本。

- CDH5.14.0使用的HIVE版本

- 自建日志表log:

+----------------------------------------------------+--+

| createtab_stmt |

+----------------------------------------------------+--+

| CREATE TABLE `log`( |

| `mandt` char(3), |

| `zdate` char(10), |

| `ztime` char(8), |

| `zguid` char(20), |

| `ztype` char(1), |

| `zfuncname` varchar(30), |

| `zseq` varchar(2), |

| `xmldata` string, |

| `xmldata2` string, |

| `field1` varchar(20), |

| `field2` varchar(20), |

| `field3` varchar(20), |

| `field4` varchar(20)) |

| CLUSTERED BY ( |

| zdate) |

| INTO 12 BUCKETS |

| ROW FORMAT SERDE |

| 'org.apache.hadoop.hive.ql.io.orc.OrcSerde' |

| WITH SERDEPROPERTIES ( |

| 'field.delim'=' ', |

| 'serialization.format'=' ') |

| STORED AS INPUTFORMAT |

| 'org.apache.hadoop.hive.ql.io.orc.OrcInputFormat' |

| OUTPUTFORMAT |

| 'org.apache.hadoop.hive.ql.io.orc.OrcOutputFormat' |

| LOCATION |

| 'hdfs://hserver1n:8020/user/hive/warehouse/sap.db/log' |

| TBLPROPERTIES ( |

| 'COLUMN_STATS_ACCURATE'='true', |

| 'compactor.mapreduce.map.memory.mb'='1024', |

| 'compactorthreshold.hive.compactor.delta.num.threshold'='4', |

| 'compactorthreshold.hive.compactor.delta.pct.threshold'='0.5', |

| 'last_modified_by'='hdfs', |

| 'last_modified_time'='1522318887', |

| 'numFiles'='14', |

| 'numRows'='1000000', |

| 'rawDataSize'='0', |

| 'totalSize'='135774020', |

| 'transactional'='true', |

| 'transient_lastDdlTime'='1522393096') |

+----------------------------------------------------+--+

41 rows selected (0.105 seconds)

此表共有13个字段,存储格式是 ORC列式存储,共有12个桶,并启用了事务。

- 查询语句:

select zdate,case zdate when '2013.07.23' then 'a' else 'b' end as zfunc from log limit 1;

- 错误描述(日志太长,部分省略):

Log Type: syslog

Log Upload Time: Tue Apr 03 17:09:14 +0800 2018

Log Length: 80385

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: Initializing Self MAP[4]

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: Initializing Self TS[0]

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: Operator 0 TS initialized

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: Initializing children of 0 TS

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Initializing child 1 SEL

2018-04-03 17:08:36,788 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Initializing Self SEL[1]

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Operator 1 SEL initialized

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Initializing children of 1 SEL

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Initializing child 2 LIM

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Initializing Self LIM[2]

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Operator 2 LIM initialized

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Initializing children of 2 LIM

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: Initializing child 3 FS

2018-04-03 17:08:36,792 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: Initializing Self FS[3]

2018-04-03 17:08:36,793 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: mapred.task.id is deprecated. Instead, use mapreduce.task.attempt.id

2018-04-03 17:08:36,908 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: Using serializer : class org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe[[[B@5d13d4e]:[_col0, _col1]:[char(10), string]] and formatter : org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat@7e1b4e9d

2018-04-03 17:08:36,908 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: mapred.healthChecker.script.timeout is deprecated. Instead, use mapreduce.tasktracker.healthchecker.script.timeout

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: Operator 3 FS initialized

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: Initialization Done 3 FS

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.conf.Configuration.deprecation: mapred.task.is.map is deprecated. Instead, use mapreduce.task.ismap

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: Initialization Done 2 LIM

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: Initialization Done 1 SEL

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: Initialization Done 0 TS

2018-04-03 17:08:36,909 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: Initialization Done 4 MAP

2018-04-03 17:08:38,231 FATAL [main] org.apache.hadoop.hive.ql.exec.mr.ExecMapper: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:52)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:170)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:459)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Error evaluating CASE (zfuncname) WHEN ('ZFI_VENDOR_TO_CRM') THEN ('a') ELSE ('b') END

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:126)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:815)

at org.apache.hadoop.hive.ql.exec.TableScanOperator.processOp(TableScanOperator.java:98)

at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.forward(MapOperator.java:157)

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:45)

... 9 more

Caused by: java.lang.ArrayIndexOutOfBoundsException: 13

at org.apache.hadoop.hive.ql.exec.vector.udf.VectorUDFAdaptor.evaluate(VectorUDFAdaptor.java:117)

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:124)

... 13 more

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: 4 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: RECORDS_IN:0

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: DESERIALIZE_ERRORS:0

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: 0 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: 1 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: 2 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: 3 finished. closing...

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: FS[3]: records written - 0

2018-04-03 17:08:38,231 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: Final Path: FS hdfs://hserver1n:8020/tmp/hive/hdfs/e62bf883-074a-44b2-8b04-2b69d4475a66/hive_2018-04-03_17-08-17_236_6563852616584890762-25/-mr-10000/.hive-staging_hive_2018-04-03_17-08-17_236_6563852616584890762-25/_tmp.-ext-10001/000000_0

2018-04-03 17:08:38,232 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: Writing to temp file: FS hdfs://hserver1n:8020/tmp/hive/hdfs/e62bf883-074a-44b2-8b04-2b69d4475a66/hive_2018-04-03_17-08-17_236_6563852616584890762-25/-mr-10000/.hive-staging_hive_2018-04-03_17-08-17_236_6563852616584890762-25/_task_tmp.-ext-10001/_tmp.000000_0

2018-04-03 17:08:38,232 INFO [main] org.apache.hadoop.hive.ql.exec.FileSinkOperator: New Final Path: FS hdfs://hserver1n:8020/tmp/hive/hdfs/e62bf883-074a-44b2-8b04-2b69d4475a66/hive_2018-04-03_17-08-17_236_6563852616584890762-25/-mr-10000/.hive-staging_hive_2018-04-03_17-08-17_236_6563852616584890762-25/_tmp.-ext-10001/000000_0

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: RECORDS_OUT_0:0

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorFileSinkOperator: 3 Close done

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorLimitOperator: 2 Close done

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator: 1 Close done

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.TableScanOperator: 0 Close done

2018-04-03 17:08:38,623 INFO [main] org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator: 4 Close done

2018-04-03 17:08:38,624 WARN [main] org.apache.hadoop.mapred.YarnChild: Exception running child : java.lang.RuntimeException: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:179)

at org.apache.hadoop.mapred.MapRunner.run(MapRunner.java:54)

at org.apache.hadoop.mapred.MapTask.runOldMapper(MapTask.java:459)

at org.apache.hadoop.mapred.MapTask.run(MapTask.java:343)

at org.apache.hadoop.mapred.YarnChild$2.run(YarnChild.java:164)

at java.security.AccessController.doPrivileged(Native Method)

at javax.security.auth.Subject.doAs(Subject.java:415)

at org.apache.hadoop.security.UserGroupInformation.doAs(UserGroupInformation.java:1917)

at org.apache.hadoop.mapred.YarnChild.main(YarnChild.java:158)

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Hive Runtime Error while processing row

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:52)

at org.apache.hadoop.hive.ql.exec.mr.ExecMapper.map(ExecMapper.java:170)

... 8 more

Caused by: org.apache.hadoop.hive.ql.metadata.HiveException: Error evaluating CASE (zfuncname) WHEN ('ZFI_VENDOR_TO_CRM') THEN ('a') ELSE ('b') END

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:126)

at org.apache.hadoop.hive.ql.exec.Operator.forward(Operator.java:815)

at org.apache.hadoop.hive.ql.exec.TableScanOperator.processOp(TableScanOperator.java:98)

at org.apache.hadoop.hive.ql.exec.MapOperator$MapOpCtx.forward(MapOperator.java:157)

at org.apache.hadoop.hive.ql.exec.vector.VectorMapOperator.process(VectorMapOperator.java:45)

... 9 more

Caused by: java.lang.ArrayIndexOutOfBoundsException: 13

at org.apache.hadoop.hive.ql.exec.vector.udf.VectorUDFAdaptor.evaluate(VectorUDFAdaptor.java:117)

at org.apache.hadoop.hive.ql.exec.vector.VectorSelectOperator.processOp(VectorSelectOperator.java:124)

... 13 more

2018-04-03 17:08:39,369 INFO [main] org.apache.hadoop.mapred.Task: Runnning cleanup for the task

2018-04-03 17:08:39,496 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: Stopping MapTask metrics system...

2018-04-03 17:08:39,496 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MapTask metrics system stopped.

2018-04-03 17:08:39,496 INFO [main] org.apache.hadoop.metrics2.impl.MetricsSystemImpl: MapTask metrics system shutdown complete.

错误分析:

日志给出的错误原因是13超过了数组的边界,猜想是系统认为总共有12桶,超过了桶的个数,故报错,后面进行验证。

故一步步排除,首先创建了一个简单的表:

0: jdbc:hive2://localhost:10000> create table log2(

. . . . . . . . . . . . . . . .> mandt char(3),

. . . . . . . . . . . . . . . .> zdate char(10),

. . . . . . . . . . . . . . . .> ztime char(8),

. . . . . . . . . . . . . . . .> zguid char(20),

. . . . . . . . . . . . . . . .> ztype char(1),

. . . . . . . . . . . . . . . .> zfuncname varchar(30),

. . . . . . . . . . . . . . . .> zseq varchar(2),

. . . . . . . . . . . . . . . .> xmldata string,

. . . . . . . . . . . . . . . .> xmldata2 string,

. . . . . . . . . . . . . . . .> field1 varchar(20),

. . . . . . . . . . . . . . . .> field2 varchar(20),

. . . . . . . . . . . . . . . .> field3 varchar(20),

. . . . . . . . . . . . . . . .> field4 varchar(20))

. . . . . . . . . . . . . . . .> row format delimited fields terminated by ' ';

然后将log数据库中部分数据导入到此表中,再使用上面查询语句查询(将数据库表名改成新建的表log2),可以得到正确结果。

然后再在上面基础之上使用ORC方式存储,也可以得到正确结果;

然后在ORC基础之上,再使用分桶,也可以得到正确结果,可见并不是分桶的原因;

然后再分桶基础之上,再创建事务表,则会出现错误,可见此错误是由于使用了事务的原因。

为了验证后面的Hive版本是否已解决,下载了apache hive2.3.0版本测试了一下,已没有问题。