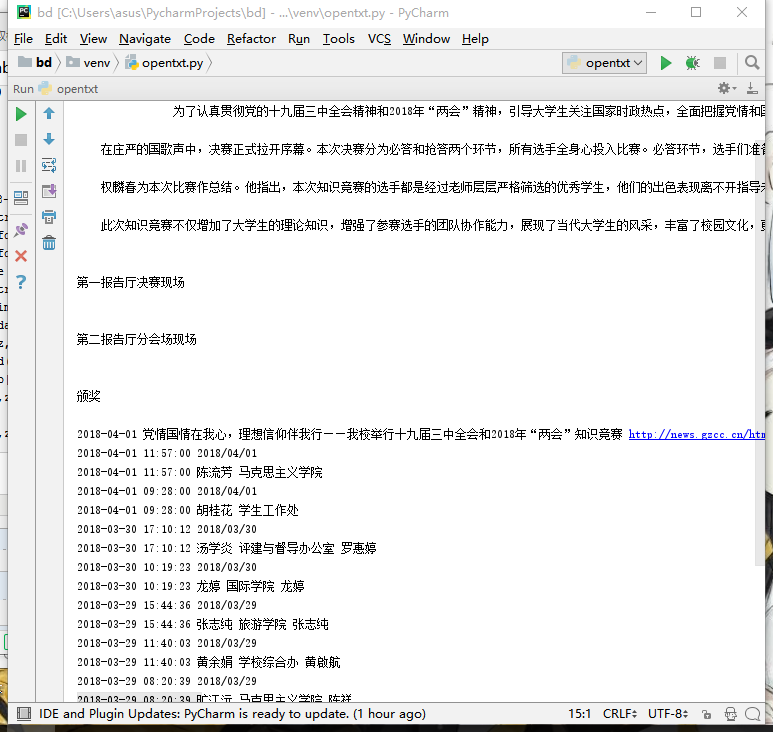

1. Use the requests and BeautifulSoup libraries to scrape the title, link, and body text of the news items on the campus news front page.

import requests
from bs4 import BeautifulSoup

# Fetch the campus news list page and parse it
res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')
res.encoding = 'utf-8'
soup = BeautifulSoup(res.text, 'html.parser')

for news in soup.select('li'):
    # Only <li> elements that contain a title are news items
    if len(news.select('.news-list-title')) > 0:
        t = news.select('.news-list-title')[0].text  # title
        a = news.select('a')[0].attrs['href']  # link
        resd = requests.get(a)
        resd.encoding = 'utf-8'
        soupd = BeautifulSoup(resd.text, 'html.parser')  # open the news detail page
        c = soupd.select('#content')[0].text  # body text
        print(t + '\n' + a + '\n' + c)
        break  # stop after the first news item

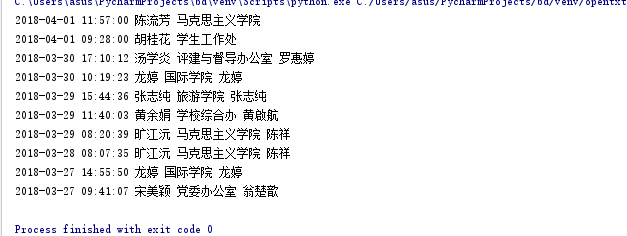
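The loop above passes each `href` straight to `requests.get`, which works only while the list page uses absolute links. A minimal sketch of a more defensive variant, assuming the page might also contain relative links, resolves each `href` against the list URL with `urllib.parse.urljoin` first. The helper name `absolute_link` and the sample paths are hypothetical and not taken from the site.

```python
from urllib.parse import urljoin

# URL of the list page, as used in the scraper
LIST_URL = 'http://news.gzcc.cn/html/xiaoyuanxinwen/'


def absolute_link(href, base=LIST_URL):
    """Resolve href against the list page URL (hypothetical helper)."""
    return urljoin(base, href)


# Hypothetical sample paths, for illustration only
print(absolute_link('http://news.gzcc.cn/html/example/1.html'))  # absolute: unchanged
print(absolute_link('/html/example/1.html'))  # root-relative: host is prepended
print(absolute_link('2.html'))  # relative: resolved inside the list directory
```

In the scraper, this would mean calling `requests.get(absolute_link(a))` instead of `requests.get(a)`.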

2. Parse the info string to get each article's publication time, author, source, photographer, and similar details.

import requests
from bs4 import BeautifulSoup

res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')
res.encoding = 'utf-8'
soup = BeautifulSoup(res.text, 'html.parser')

for news in soup.select('li'):
    if len(news.select('.news-list-title')) > 0:
        t = news.select('.news-list-title')[0].text  # title
        d = news.select('.news-list-description')[0].text  # description
        a = news.a.attrs['href']  # link
        resd = requests.get(a)
        resd.encoding = 'utf-8'
        soupd = BeautifulSoup(resd.text, 'html.parser')
        c = soupd.select('#content')[0].text  # body text
        info = soupd.select('.show-info')[0].text
        # partition() removes the exact label; lstrip('作者:') would strip any
        # leading run of those characters, including ones that start a name
        dt = info.partition('发布时间:')[2][:19]  # publication time
        zz = info[info.find('作者'):].split()[0].partition(':')[2]  # author
        ly = info[info.find('来源'):].split()[0].partition(':')[2]  # source
        if '摄影:' in info:
            sy = info[info.find('摄影:'):].split()[0].partition(':')[2]  # photographer
            print(dt, zz, ly, sy)
        else:
            print(dt, zz, ly)

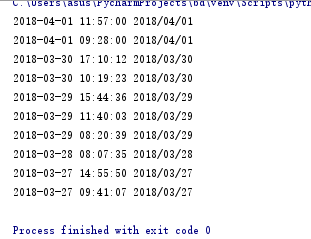
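The slicing above depends on the exact position and order of the fields. As an alternative sketch (a swapped-in technique, not the approach used in this assignment), a regular expression can pull each labelled field out of the info string. The function name `parse_info`, the label list, and the tolerance for both ASCII and full-width colons are assumptions; the sample string is the one recorded as a comment in the complete code in step 4.

```python
import re

# Labels expected in the info line; a missing label (such as 摄影) is skipped
LABELS = ('作者', '审核', '来源', '摄影')


def parse_info(info):
    """Return a dict of the fields found in a .show-info string."""
    fields = {}
    # The timestamp contains a space, so it gets its own pattern
    m = re.search(r'发布时间[::]\s*(\d{4}-\d{2}-\d{2} \d{2}:\d{2}:\d{2})', info)
    if m:
        fields['发布时间'] = m.group(1)
    for label in LABELS:
        # Capture the run of non-space characters right after "label:"
        m = re.search(label + r'[::](\S+)', info)
        if m:
            fields[label] = m.group(1)
    return fields


sample = '发布时间:2018-04-01 11:57:00 作者:陈流芳 审核:权麟春 来源:马克思主义学院 点击:次'
print(parse_info(sample))
```

Because absent labels are simply left out of the dict, the photographer case needs no separate branch: `parse_info(info).get('摄影')` is `None` when the article has no photographer.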

3. Convert the publication time from str to datetime.

from datetime import datetime

import requests
from bs4 import BeautifulSoup

res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')
res.encoding = 'utf-8'
soup = BeautifulSoup(res.text, 'html.parser')

for news in soup.select('li'):
    if len(news.select('.news-list-title')) > 0:
        a = news.a.attrs['href']  # link
        resd = requests.get(a)
        resd.encoding = 'utf-8'
        soupd = BeautifulSoup(resd.text, 'html.parser')
        info = soupd.select('.show-info')[0].text
        dt = info.partition('发布时间:')[2][:19]  # e.g. '2018-04-01 11:57:00'
        dati = datetime.strptime(dt, '%Y-%m-%d %H:%M:%S')  # str -> datetime
        print(dati, dati.strftime('%Y/%m/%d'))

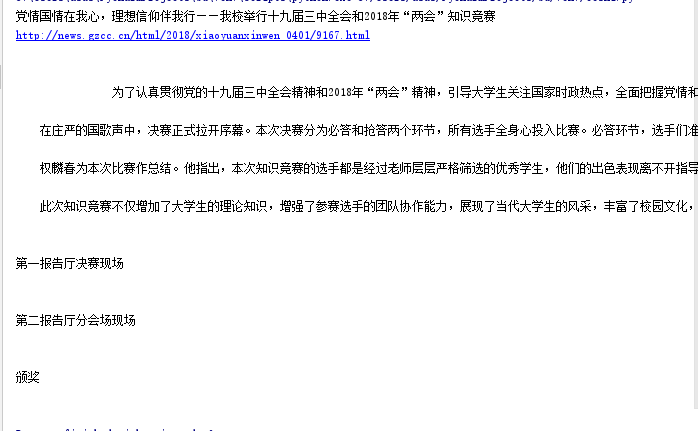
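`datetime.strptime` raises `ValueError` when the text does not match the format, which would stop the loop on a page with a missing or malformed timestamp. A minimal sketch of a tolerant conversion follows; the helper name `to_datetime` and the choice to return `None` on failure are assumptions, not part of the original assignment.

```python
from datetime import datetime

TIME_FORMAT = '%Y-%m-%d %H:%M:%S'


def to_datetime(text, fmt=TIME_FORMAT):
    """Convert a timestamp string to datetime, or return None if it does not match."""
    try:
        return datetime.strptime(text.strip(), fmt)
    except ValueError:
        return None


dati = to_datetime('2018-04-01 11:57:00')
print(dati)                       # the parsed datetime object
print(dati.strftime('%Y/%m/%d'))  # back to a string in another layout
print(to_datetime('not a date'))  # None instead of an exception
```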

4. Post the complete code and screenshots of the results in the assignment.

from datetime import datetime

import requests
from bs4 import BeautifulSoup

res = requests.get('http://news.gzcc.cn/html/xiaoyuanxinwen/')
res.encoding = 'utf-8'
soup = BeautifulSoup(res.text, 'html.parser')

# Part 1: print the body text, date, title, and link of the first news item
for news in soup.select('li'):
    if len(news.select('.news-list-title')) > 0:
        d = news.select('.news-list-info')[0].contents[0].text  # date
        t = news.select('.news-list-title')[0].text  # title
        a = news.select('a')[0].attrs['href']  # link
        resd = requests.get(a)
        resd.encoding = 'utf-8'
        soupd = BeautifulSoup(resd.text, 'html.parser')  # open the news detail page
        print(soupd.select('#content')[0].text)
        print(d, t, a)
        break  # stop after the first news item

# Part 2: print the publication details of every news item
for news in soup.select('li'):
    if len(news.select('.news-list-title')) > 0:
        t = news.select('.news-list-title')[0].text  # title
        d = news.select('.news-list-description')[0].text  # description
        a = news.a.attrs['href']  # link
        resd = requests.get(a)
        resd.encoding = 'utf-8'
        soupd = BeautifulSoup(resd.text, 'html.parser')
        info = soupd.select('.show-info')[0].text
        # Sample info string:
        # '发布时间:2018-04-01 11:57:00 作者:陈流芳 审核:权麟春 来源:马克思主义学院 点击:次'
        # partition() removes the exact label; lstrip('作者:') would strip any
        # leading run of those characters, including ones that start a name
        dt = info.partition('发布时间:')[2][:19]  # publication time
        zz = info[info.find('作者'):].split()[0].partition(':')[2]  # author
        ly = info[info.find('来源'):].split()[0].partition(':')[2]  # source
        dati = datetime.strptime(dt, '%Y-%m-%d %H:%M:%S')  # str -> datetime
        print(dati, dati.strftime('%Y/%m/%d'))
        if '摄影:' in info:
            sy = info[info.find('摄影:'):].split()[0].partition(':')[2]  # photographer
            print(dt, zz, ly, sy)
        else:
            print(dt, zz, ly)