import tensorflow as tf

from tensorflow import keras

from tensorflow.keras import datasets

import os

os.environ['TF_CPP_MIN_LOG_LEVEL'] = '2'

""" 加载数据集

x: (60000, 28, 28)

y: (60000,)

"""

(x, y), _ = datasets.mnist.load_data()

#

x = tf.convert_to_tensor(x, dtype=tf.float32) / 255. # 数据在0~1更利于tf优化

y = tf.convert_to_tensor(y, dtype=tf.int32)

# x、y的范围:

# tf.reduce_min(x), tf.reduce_max(x)

# Out[7]:

# (<tf.Tensor: id=33, shape=(), dtype=float32, numpy=0.0>,

# <tf.Tensor: id=35, shape=(), dtype=float32, numpy=1.0>)

# tf.reduce_min(y), tf.reduce_max(y)

# Out[8]:

# (<tf.Tensor: id=20, shape=(), dtype=uint8, numpy=0>,

# <tf.Tensor: id=27, shape=(), dtype=uint8, numpy=9>)

""" 创建数据集 """

# 这样就可以一次取一个batch

train_db = tf.data.Dataset.from_tensor_slices((x,y)).batch(128)

train_iter = iter(train_db) # 迭代器

sample = next(train_iter)

# sample[0].shape, sample[1].shape

# Out[13]: (TensorShape([128, 28, 28]), TensorShape([128]))

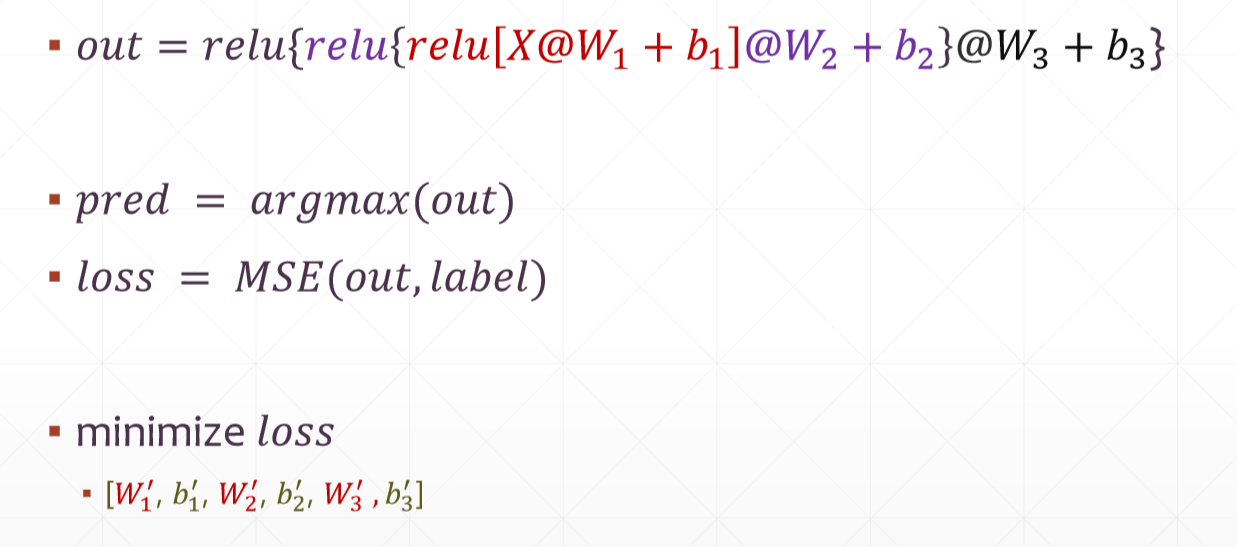

# [b, 784] => [b, 256] => [b, 128] => [b, 10]

# w: [dim_in, dim_out], b: [dim_out]

''' 若不用tf.Variable包装,则为tf.tensor类型,tf.GradientTape不会跟踪

在这个问题里不设置stddev会出现loss的值为nan的情况,即梯度爆炸'''

w1 = tf.Variable(tf.random.truncated_normal([784, 256], stddev=0.1))

b1 = tf.Variable(tf.zeros([256]))

w2 = tf.Variable(tf.random.truncated_normal([256, 128], stddev=0.1))

b2 = tf.Variable(tf.zeros([128]))

w3 = tf.Variable(tf.random.truncated_normal([128, 10], stddev=0.1))

b3 = tf.Variable(tf.zeros([10]))

lr = 1e-3

for epoch in range(10):

for step, (x, y) in enumerate(train_db): # for every batch

# x:[128, 28, 28]

# y: [128]

# [b, 28, 28] => [b, 28*28]

x = tf.reshape(x, [-1, 28*28])

'''

tf.GradientTape默认只会跟踪tf.Variable类型的梯度信息,用tf.tensor

计算出来的grad是None'''

with tf.GradientTape() as tape:

# x: [b, 28*28]

# h1 = x@w1 + b1

# [b, 784]@[784, 256] + [256] => [b, 256] + [256] => [b, 256] + [b, 256]

# TensorFlow会自动完成张量扩张tf.broadcast_to()

h1 = x@w1 + tf.broadcast_to(b1, [x.shape[0], 256])

h1 = tf.nn.relu(h1)

# [b, 256] => [b, 128]

h2 = h1@w2 + b2

h2 = tf.nn.relu(h2)

# [b, 128] => [b, 10]

out = h2@w3 + b3

# compute loss

# out: [b, 10]

# y: [b] => [b, 10]

y_onehot = tf.one_hot(y, depth=10)

# mse = mean(sum(y-out)^2)

# [b, 10]

loss = tf.square(y_onehot - out)

# mean: scalar

loss = tf.reduce_mean(loss)

# compute gradients

grads = tape.gradient(loss, [w1, b1, w2, b2, w3, b3])

# print(grads)

# optimizer的作用:

# w1 = w1 - lr * w1_grad

'''

grad为tf.Variable类型,使用 w1 = w1 - lr * grads[0]会让w1从tf.Variable

变成tf.tensor类型,在再次运算的时候会报错,要使用assign_sub()原地更新,

w1的数据类型才不会改变'''

w1.assign_sub(lr * grads[0])

b1.assign_sub(lr * grads[1])

w2.assign_sub(lr * grads[2])

b2.assign_sub(lr * grads[3])

w3.assign_sub(lr * grads[4])

b3.assign_sub(lr * grads[5])

# print(isinstance(b3, tf.Variable))

# print(isinstance(b3, tf.Tensor))

if step % 100 == 0:

print(epoch, step, 'loss:', float(loss))

结果:

0 0 loss: 0.6338542699813843

0 100 loss: 0.19527742266654968

0 200 loss: 0.19111016392707825

0 300 loss: 0.16401150822639465

0 400 loss: 0.17101189494132996

1 0 loss: 0.1488364338874817

1 100 loss: 0.13843022286891937

1 200 loss: 0.1527971774339676

1 300 loss: 0.13737797737121582

1 400 loss: 0.14406141638755798

2 0 loss: 0.1268216073513031

2 100 loss: 0.12029824405908585

2 200 loss: 0.13270306587219238

2 300 loss: 0.12179452180862427

2 400 loss: 0.12749424576759338

3 0 loss: 0.11288430541753769

3 100 loss: 0.10880843549966812

3 200 loss: 0.11929886043071747

3 300 loss: 0.11120941489934921

3 400 loss: 0.11638245731592178

4 0 loss: 0.10308767855167389

4 100 loss: 0.10074740648269653

4 200 loss: 0.10969498008489609

4 300 loss: 0.10343489795923233

4 400 loss: 0.10824362933635712

5 0 loss: 0.09583660215139389

5 100 loss: 0.09478463232517242

5 200 loss: 0.10255211591720581

5 300 loss: 0.09745050221681595

5 400 loss: 0.1019618883728981

...

若不设置sttdev,则容易梯度爆炸:

0 0 loss: 387908.8125

0 100 loss: nan

0 200 loss: nan

0 300 loss: nan

0 400 loss: nan

1 0 loss: nan

1 100 loss: nan

1 200 loss: nan

1 300 loss: nan

1 400 loss: nan

2 0 loss: nan

2 100 loss: nan

2 200 loss: nan

2 300 loss: nan

2 400 loss: nan

3 0 loss: nan

3 100 loss: nan

3 200 loss: nan

3 300 loss: nan

3 400 loss: nan

...