Detailed format description: https://grail.cs.washington.edu/projects/bal/

Bundle Adjustment in the Large

Recent work in Structure from Motion has demonstrated the possibility of reconstructing geometry from large-scale community photo collections. Bundle adjustment, the joint non-linear refinement of camera and point parameters, is a key component of most SfM systems, and one which can consume a significant amount of time for large problems. As the number of photos in such collections continues to grow into the hundreds of thousands or even millions, the scalability of bundle adjustment algorithms has become a critical issue.

In this project, we consider the design and implementation of a new Inexact Newton type bundle adjustment algorithm, which uses substantially less time and memory than standard Schur complement based methods, without compromising on the quality of the solution. We explore the use of the Conjugate Gradients algorithm for calculating the Newton step and its performance as a function of some simple and computationally efficient preconditioners. We also show that the use of the Schur complement is not limited to factorization-based methods, how it can be used as part of the Conjugate Gradients (CG) method without incurring the computational cost of actually calculating and storing it in memory, and how this use is equivalent to the choice of a particular preconditioner.

This research is part of the Community Photo Collections project at the University of Washington GRAIL Lab, which explores the use of large-scale Internet image collections for furthering research in computer vision and graphics.

Team

Sameer Agarwal, University of Washington

Noah Snavely, Cornell University

Steve Seitz, University of Washington

Richard Szeliski, Microsoft Research

Paper

Bundle Adjustment in the Large

Sameer Agarwal, Noah Snavely, Steven M. Seitz and Richard Szeliski

European Conference on Computer Vision, 2010, Crete, Greece.

Supplementary Material

Poster

Software & Data

As part of this project we will be releasing all the test problems, software and performance data reported in the paper. Currently we have the test problems available for download. The code shall be available shortly.

We experimented with two sources of data:

Images captured at a regular rate using a Ladybug camera mounted on a moving vehicle. Image matching was done by exploiting the temporal order of the images and the GPS information captured at the time of image capture.

Images downloaded from Flickr.com and matched using the system described in Building Rome in a Day. We used images from Trafalgar Square and the cities of Dubrovnik, Venice, and Rome.

For Flickr photographs, the matched images were decomposed into a skeletal set (i.e., a sparse core of images) and a set of leaf images. The skeletal set was reconstructed first, then the leaf images were added to it via resectioning followed by triangulation of the remaining 3D points. The skeletal sets and the Ladybug datasets were reconstructed incrementally using a modified version of Bundler, which was instrumented to dump intermediate unoptimized reconstructions to disk. This gave rise to the Ladybug, Trafalgar Square, Dubrovnik and Venice datasets. We refer to the bundle adjustment problems obtained after adding the leaf images to the skeletal set and triangulating the remaining points as the Final problems.

Available Datasets

Ladybug

Trafalgar Square

Dubrovnik

Venice

Final

Camera Model

We use a pinhole camera model; the parameters we estimate for each camera are a rotation R, a translation t, a focal length f and two radial distortion parameters k1 and k2. The formula for projecting a 3D point X into a camera R,t,f,k1,k2 is:

P = R * X + t (conversion from world to camera coordinates)

p = -P / P.z (perspective division)

p' = f * r(p) * p (conversion to pixel coordinates)

where P.z is the third (z) coordinate of P. In the last equation, r(p) is a function that computes a scaling factor to undo the radial distortion:

r(p) = 1.0 + k1 * ||p||^2 + k2 * ||p||^4.

This gives a projection in pixels, where the origin of the image is the center of the image, the positive x-axis points right, and the positive y-axis points up (in addition, in the camera coordinate system, the positive z-axis points backwards, so the camera is looking down the negative z-axis, as in OpenGL).
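The three projection steps above can be sketched in Python as follows. This is a minimal illustration, not the project's own code: the function names `cross`, `rotate` and `project` are hypothetical, and the camera is assumed to be a flat list of 9 numbers in the order R (as a Rodrigues axis-angle vector), t, f, k1, k2, matching the data format described on this page.

```python
import math

def cross(a, b):
    """Cross product of two 3-vectors."""
    return [a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0]]

def rotate(w, X):
    """Rotate X by the Rodrigues (axis-angle) vector w."""
    theta = math.sqrt(sum(c * c for c in w))
    if theta < 1e-12:
        # Near-zero rotation: first-order approximation R*X ~ X + w x X.
        wxX = cross(w, X)
        return [X[i] + wxX[i] for i in range(3)]
    k = [c / theta for c in w]                      # unit rotation axis
    c, s = math.cos(theta), math.sin(theta)
    kxX = cross(k, X)
    kdX = sum(k[i] * X[i] for i in range(3))
    # Rodrigues' rotation formula.
    return [X[i] * c + kxX[i] * s + k[i] * kdX * (1.0 - c) for i in range(3)]

def project(camera, X):
    """Project 3D point X with a 9-parameter camera [R(3), t(3), f, k1, k2]."""
    w, t = camera[0:3], camera[3:6]
    f, k1, k2 = camera[6:9]
    RX = rotate(w, X)
    P = [RX[i] + t[i] for i in range(3)]            # world -> camera coordinates
    px, py = -P[0] / P[2], -P[1] / P[2]             # perspective division
    n2 = px * px + py * py                          # ||p||^2
    r = 1.0 + k1 * n2 + k2 * n2 * n2                # radial distortion factor r(p)
    return (f * r * px, f * r * py)                 # pixel coordinates
```

Subtracting an observed `(x, y)` from the value returned by `project` gives the reprojection residual that bundle adjustment minimizes over all observations.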

Data Format

Each problem is provided as a bzip2 compressed text file in the following format.

<num_cameras> <num_points> <num_observations>

<camera_index_1> <point_index_1> <x_1> <y_1>

...

<camera_index_num_observations> <point_index_num_observations> <x_num_observations> <y_num_observations>

<camera_1>

...

<camera_num_cameras>

<point_1>

...

<point_num_points>

Here, the camera and point indices start from 0. Each camera is a set of 9 parameters: R, t, f, k1 and k2. The rotation R is specified as a Rodrigues' vector.
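A reader for this layout might look like the sketch below. It assumes the file is a plain sequence of whitespace-separated numbers, so it does not depend on how the values are split across lines, and that each point is 3 coordinates. The names `parse_bal` and `load_bal` are hypothetical and not part of any released software.

```python
import bz2

def parse_bal(text):
    """Parse problem text into (cameras, points, observations)."""
    tokens = text.split()
    num_cameras, num_points, num_obs = (int(t) for t in tokens[:3])

    # Header + 4 values per observation + 9 per camera + 3 per point.
    expected = 3 + 4 * num_obs + 9 * num_cameras + 3 * num_points
    if len(tokens) != expected:
        raise ValueError(f"expected {expected} values, found {len(tokens)}")

    pos = 3
    observations = []
    for _ in range(num_obs):
        cam, pt = int(tokens[pos]), int(tokens[pos + 1])
        x, y = float(tokens[pos + 2]), float(tokens[pos + 3])
        observations.append((cam, pt, x, y))
        pos += 4

    cameras = []
    for _ in range(num_cameras):
        # 9 parameters: R (Rodrigues vector, 3), t (3), f, k1, k2.
        cameras.append([float(t) for t in tokens[pos:pos + 9]])
        pos += 9

    points = []
    for _ in range(num_points):
        points.append([float(t) for t in tokens[pos:pos + 3]])
        pos += 3

    return cameras, points, observations

def load_bal(path):
    """Read a bzip2-compressed problem file and parse it."""
    with bz2.open(path, "rt") as f:
        return parse_bal(f.read())
```

Checking the total token count up front means a truncated download fails with a clear error instead of silently yielding short camera or point records.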

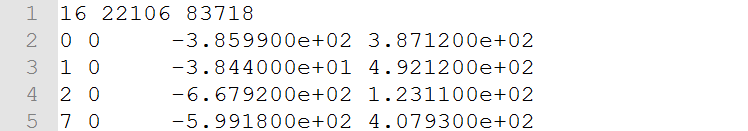

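Consider one dataset as an example:

16 is the number of cameras, 22106 is the number of landmarks (3D points), and 83718 is the number of observations.

First observation line:

The first 0 is camera index 0, the second 0 is landmark index 0, and the following two values are the observation, given in pixel coordinates.

After the 83718 observation lines come the parameters. The camera parameters come first, 9 per camera: R (a 3-dimensional Rodrigues vector), t (3 dimensions), f (the focal length), and k1 and k2 (the distortion parameters). They are listed in order for cameras 0 through num_cameras - 1.

After that come the 3D coordinates of the landmark points.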