1 Random sampling with replacement: the key parameter subsample

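    # Assumes np, plt, XGBR, CVS, MSE, the train/test splits and cv are defined earlier in the notebook.
    # Note: XGBoost documents the valid range of subsample as (0, 1]; the first grid point (0) lies outside it.
    axisx = np.linspace(0,1,20)
    rs = []
    for i in axisx:
        reg = XGBR(n_estimators=180,subsample=i,random_state=420)
        rs.append(CVS(reg,Xtrain,Ytrain,cv=cv).mean())
    print(axisx[rs.index(max(rs))],max(rs))
    plt.figure(figsize=(20,5))
    plt.plot(axisx,rs,c="green",label="XGB")
    plt.legend()
    plt.show()

    # Refine the learning curve
    axisx = np.linspace(0.05,1,20)
    rs = []   # mean cross-validated score
    var = []  # variance of the cross-validated scores
    ge = []   # controllable part of the generalization error
    for i in axisx:
        reg = XGBR(n_estimators=180,subsample=i,random_state=420)
        cvresult = CVS(reg,Xtrain,Ytrain,cv=cv)
        rs.append(cvresult.mean())
        var.append(cvresult.var())
        ge.append((1 - cvresult.mean())**2+cvresult.var())
    # Best mean score, lowest variance, and lowest error, each with its subsample value
    print(axisx[rs.index(max(rs))],max(rs),var[rs.index(max(rs))])
    print(axisx[var.index(min(var))],rs[var.index(min(var))],min(var))
    print(axisx[ge.index(min(ge))],rs[ge.index(min(ge))],var[ge.index(min(ge))],min(ge))
    rs = np.array(rs)
    var = np.array(var)
    plt.figure(figsize=(20,5))
    plt.plot(axisx,rs,c="black",label="XGB")
    # Band of one variance above and below the mean score
    plt.plot(axisx,rs+var,c="red",linestyle='-.')
    plt.plot(axisx,rs-var,c="red",linestyle='-.')
    plt.legend()
    plt.show()

    # Refine the learning curve further
    axisx = np.linspace(0.75,1,25)

    # Don't blindly chase the minimum of the controllable part of the generalization error; look at the results

    # Check how the generalization error looks on the test set
    reg = XGBR(n_estimators=180
               ,subsample=0.7708333333333334
               ,random_state=420).fit(Xtrain,Ytrain)
    reg.score(Xtest,Ytest)
    MSE(Ytest,reg.predict(Xtest))

    # What does this result tell us?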

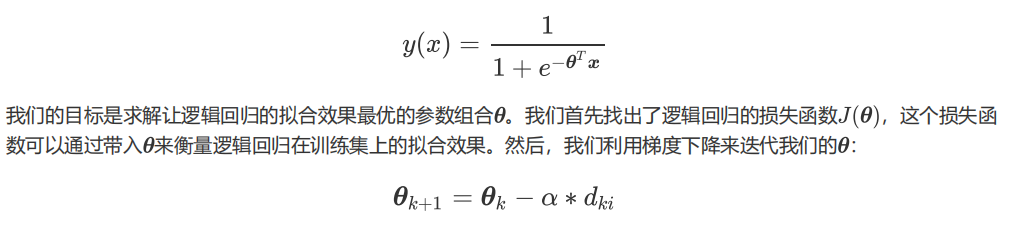
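The selection rule used in the learning curve above, `(1 - mean)**2 + var`, can be tried in isolation. The sketch below is only an illustration: the helper name `summarize_cv` and the fold scores are hypothetical (made-up numbers, not results from this dataset), and it assumes the scores are R² values, so that 1 is a perfect score.

```python
import numpy as np

def summarize_cv(scores):
    """Return (mean, variance, error proxy) for cross-validated R^2 scores."""
    scores = np.asarray(scores, dtype=float)
    mean = scores.mean()
    var = scores.var()            # population variance, as ndarray.var() computes it
    ge = (1 - mean) ** 2 + var    # squared shortfall from a perfect R^2 of 1, plus variance
    return mean, var, ge

# Hypothetical fold scores for two subsample settings (illustrative only)
candidates = {
    0.5: [0.80, 0.90, 0.70, 0.80, 0.80],
    1.0: [0.82, 0.83, 0.81, 0.82, 0.82],
}

summary = {s: summarize_cv(v) for s, v in candidates.items()}
# Pick the setting with the smallest error proxy
best = min(summary, key=lambda s: summary[s][2])

for s, (mean, var, ge) in summary.items():
    print(s, round(mean, 4), round(var, 6), round(ge, 6))
print("best subsample:", best)
```

With these made-up numbers the second setting wins on both terms. When the two terms disagree, the proxy trades a slightly lower mean score against more stable folds, which is why the text warns against reading only its minimum.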

2 Iterating decision trees: the key parameter eta

    # First, define a scoring function that prints the cross-validation results on Xtrain directly
    def regassess(reg,Xtrain,Ytrain,cv,scoring = ["r2"],show=True):
        score = []
        for i in range(len(scoring)):
            # Run cross-validation once per metric and reuse the result
            mean_score = CVS(reg,Xtrain,Ytrain,cv=cv,scoring=scoring[i]).mean()
            if show:
                print("{}:{:.2f}".format(scoring[i],mean_score))
            score.append(mean_score)
        return score

    # Run the function to see what it does
    regassess(reg,Xtrain,Ytrain,cv,scoring = ["r2","neg_mean_squared_error"])

    # Try it with printing turned off
    regassess(reg,Xtrain,Ytrain,cv,scoring = ["r2","neg_mean_squared_error"],show=False)

    # Observe how eta affects the model
    from time import time
    import datetime

    for i in [0,0.2,0.5,1]:
        time0=time()
        reg = XGBR(n_estimators=180,random_state=420,learning_rate=i)
        print("learning_rate = {}".format(i))
        regassess(reg,Xtrain,Ytrain,cv,scoring = ["r2","neg_mean_squared_error"])
        # Elapsed time, printed as minutes:seconds:microseconds
        print(datetime.datetime.fromtimestamp(time()-time0).strftime("%M:%S:%f"))
        print(" ")

    axisx = np.arange(0.05,1,0.05)
    rs = []  # cross-validated R2 on the training set
    te = []  # R2 on the test set
    for i in axisx:
        reg = XGBR(n_estimators=180,random_state=420,learning_rate=i)
        score = regassess(reg,Xtrain,Ytrain,cv,scoring = ["r2","neg_mean_squared_error"],show=False)
        test = reg.fit(Xtrain,Ytrain).score(Xtest,Ytest)
        rs.append(score[0])
        te.append(test)
    print(axisx[rs.index(max(rs))],max(rs))
    plt.figure(figsize=(20,5))
    # Distinct labels so the two curves can be told apart in the legend
    plt.plot(axisx,te,c="gray",label="XGB test")
    plt.plot(axisx,rs,c="green",label="XGB CV")
    plt.legend()
    plt.show()
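To see why eta changes the curve, it helps to look at the update it controls: each boosting round adds only a fraction eta of the newly fitted tree to the running prediction. The toy sketch below is an assumption-laden illustration, not XGBoost's implementation: the function `boost_constant` is hypothetical, every "tree" is replaced by a constant equal to the mean residual, and the predictions start from 0.

```python
import numpy as np

def boost_constant(y, eta, n_rounds):
    """Toy boosting where each 'tree' is a constant equal to the mean residual."""
    y = np.asarray(y, dtype=float)
    pred = np.zeros_like(y)              # start from a prediction of 0
    history = []
    for _ in range(n_rounds):
        residual = y - pred
        step = residual.mean()           # stand-in for the output of a fitted tree
        pred = pred + eta * step         # shrinkage: only a fraction eta of the step is added
        history.append(np.mean((y - pred) ** 2))
    return pred, history

y = [1.0, 2.0, 3.0, 4.0]
for eta in [0, 0.2, 0.5, 1]:
    pred, history = boost_constant(y, eta, n_rounds=5)
    print(eta, [round(h, 4) for h in history])
```

In this toy setting, eta = 0 never moves the prediction, eta = 1 reaches the best constant in a single round, and values in between approach it geometrically. Real trees are not constants, so the actual curves look different, but the role of eta as a step size is the same.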