文档地址:https://docs.spring.io/spring-cloud-sleuth/docs/2.2.4.BUILD-SNAPSHOT/reference/html/

git地址:https://github.com/spring-cloud/spring-cloud-sleuth/

1.sleuth简介

1. sleuth简单介绍

在微服务框架中,一个由客户端发起的请求在后端系统中会经过多个不同的服务节点调用来协同产生最后的请求结果,每一个前段请求都会形成一条复杂的分布式服务调用链,链路中的任何一环出现高延时或错误都会引起整个请求的失败。

sleuth提供了一套完整的服务跟踪的解决方案。包括链路追踪(可以看到每个请求的依赖服务)、性能分析(可以看到在每个调用消耗的时间)以及通过链路分析程序错误等。

在分布式系统中提供追踪解决方案并且兼容支持了zipkin。其实就是sleuth负责监控,zipkin负责展现。

官网:https://github.com/spring-cloud/spring-cloud-sleuth

Springcloud从F版不需要自己构建ZipkinServer,只需要下载jar包运行即可。下载地址:http://dl.bintray.com/openzipkin/maven/io/zipkin/java/zipkin-server/

2.术语介绍

Trace:一系列spans组成的一个树状结构,表示一条调用链路,一条链路通过Trace Id唯一标识。

Span:表示调用链路来源,通俗的理解span就是一次请求信息,各span通过parentid关联起来。

Annotation:用来及时记录一个事件的存在,一些核心annotations用来定义一个请求的开始和结束

cs - Client Sent -客户端发起一个请求,这个annotion描述了这个span的开始

sr - Server Received -服务端获得请求并准备开始处理它,如果将其sr减去cs时间戳便可得到网络延迟

ss - Server Sent -注解表明请求处理的完成(当请求返回客户端),如果ss减去sr时间戳便可得到服务端需要的处理请求时间

cr - Client Received -表明span的结束,客户端成功接收到服务端的回复,如果cr减去cs时间戳便可得到客户端从服务端获取回复的所有所需时间

2.使用sleuth开始监控

1.下载zipkin并启动

1.下载

zipkin-server-2.12.9-exec.jar

2.启动zipkin

java -jar ./zipkin-server-2.12.9-exec.jar

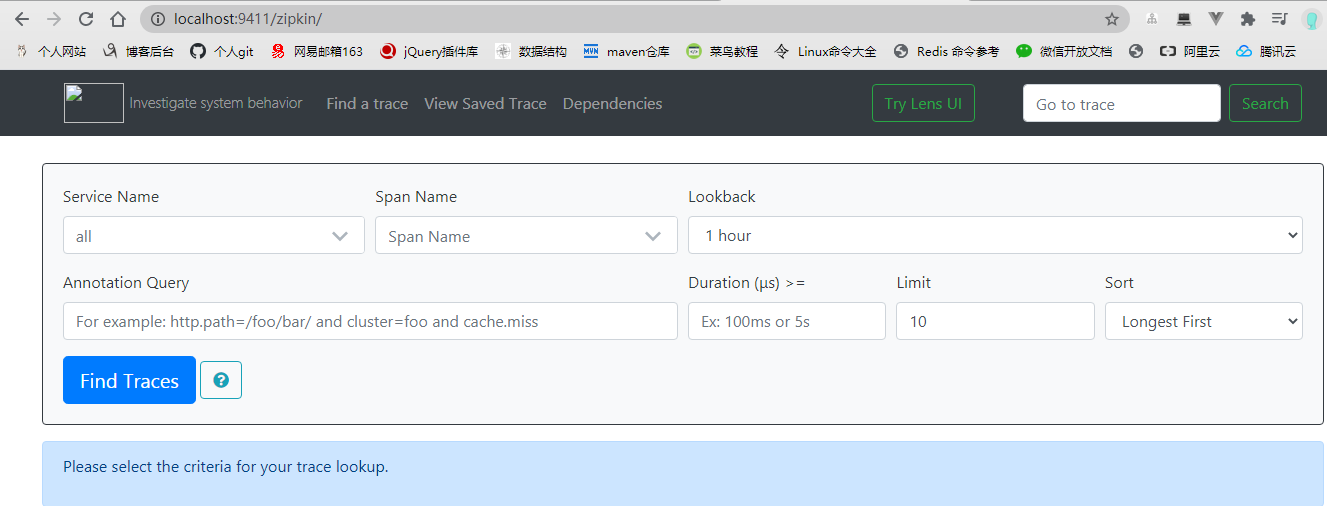

3.访问测试,zipkin默认端口是9411

2.修改原来的支付项目,支持链路追踪

1.修改pom,增加如下依赖:

<!-- 包含了sleuth zipkin 数据链路追踪--> <dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-starter-zipkin</artifactId> </dependency>

2.修改yml,增加zipkin和sleuth相关信息

spring:

application:

name: cloud-payment-service

# sleuth链路追踪

zipkin:

base-url: http://localhost:9411

sleuth:

sampler:

#采样取值介于 0到1之间,1则表示全部收集

probability: 1

3.修改原来的订单项目,支持链路追踪

1.修改pom,增加如下依赖

<!-- 包含了sleuth zipkin 数据链路追踪--> <dependency> <groupId>org.springframework.cloud</groupId> <artifactId>spring-cloud-starter-zipkin</artifactId> </dependency>

2.修改yml

spring:

application:

name: cloud-order-service

# sleuth链路追踪

zipkin:

base-url: http://localhost:9411

sleuth:

sampler:

#采样取值介于 0到1之间,1则表示全部收集

probability: 1

4.启动后测试

依次启动eureka-》支付服务-》订单服务,然后访问如下:

(1)访问订单服务,订单服务内部调用支付服务

http://localhost/consumer/pay/getServerPort

(2)查看zipkin

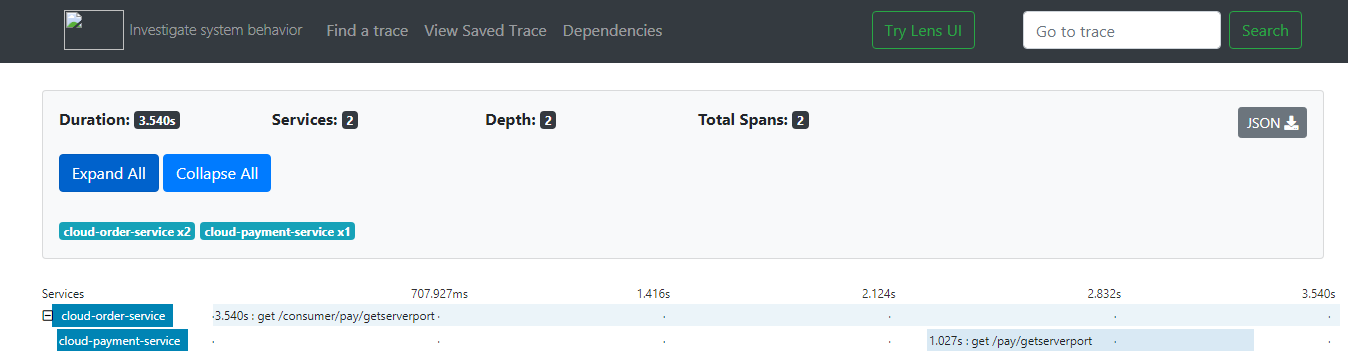

(3)点击查看请求详情

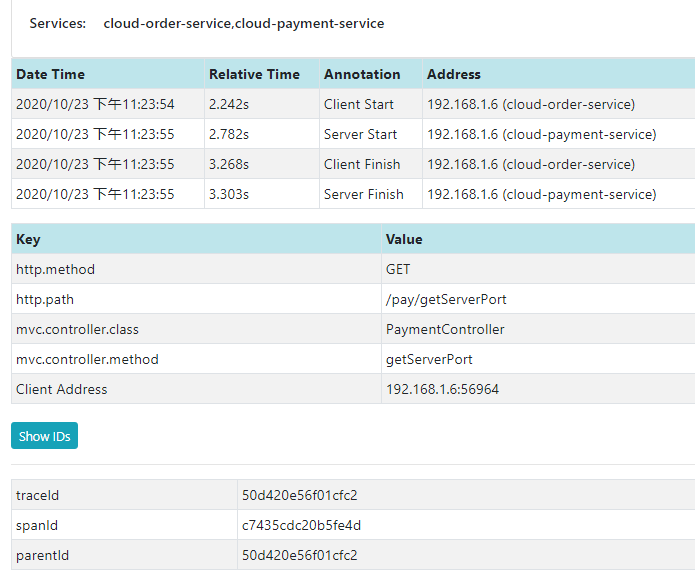

(4)点击时间:如下

图一:parentid为空

图二:(也验证了通过spanid标记服务请求,parentid标记上个请求)

其实,sleuth的数据也可以进行持久化到数据库中。这个需要的时候再研究吧。

补充:cloud集成zipkin之后日志会自动打印traceId和spanId,格式如下:

2021-01-01 20:01:20.791 INFO [cloud-payment-service,72a132d8a6579863,d71d6d4f5e7ace1b,true] 1276 --- [nio-8081-exec-3] c.qz.cloud.controller.PaymentController : serverPort: 8081

解释:

application name — 应用的名称,也就是application.properties中的spring.application.name参数配置的属性。

traceId — 为一个请求分配的ID号,用来标识一条请求链路。

spanId — 表示一个基本的工作单元,一个请求可以包含多个步骤,每个步骤都拥有自己的spanId。一个请求包含一个TraceId,多个SpanId

export — 布尔类型。表示是否要将该信息输出到类似Zipkin这样的聚合器进行收集和展示

补充:sleuth原理简单理解

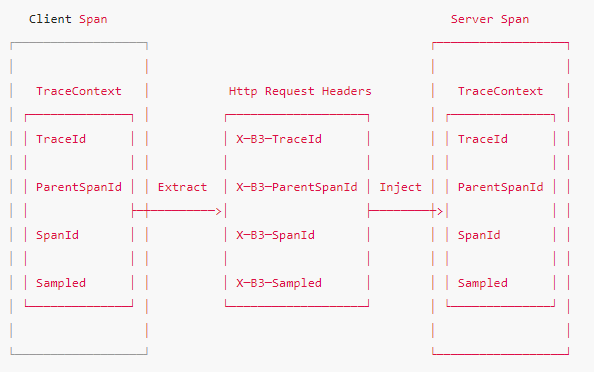

1》traceId和spanId在服务间传递在调用的时候通过header传递的 ,官方给出的图如下:

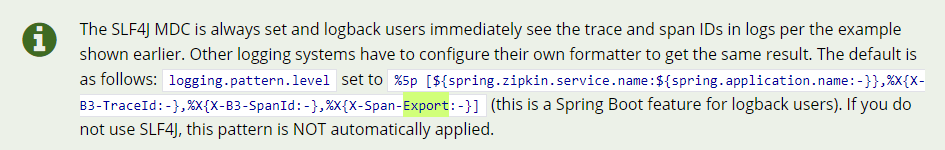

2》在服务内部是通过MDC传递的,可以理解为通过ThreadLocal传递,官方解释如下:

源码如下:

package org.springframework.cloud.sleuth.log; import java.util.AbstractMap; import java.util.ArrayList; import java.util.Collections; import java.util.List; import brave.internal.HexCodec; import brave.internal.Nullable; import brave.propagation.CurrentTraceContext; import brave.propagation.ExtraFieldPropagation; import brave.propagation.TraceContext; import org.slf4j.Logger; import org.slf4j.LoggerFactory; import org.slf4j.MDC; import org.springframework.cloud.sleuth.autoconfig.SleuthProperties; import org.springframework.util.StringUtils; final class Slf4jScopeDecorator implements CurrentTraceContext.ScopeDecorator { // Backward compatibility for all logging patterns private static final String LEGACY_EXPORTABLE_NAME = "X-Span-Export"; private static final String LEGACY_PARENT_ID_NAME = "X-B3-ParentSpanId"; private static final String LEGACY_TRACE_ID_NAME = "X-B3-TraceId"; private static final String LEGACY_SPAN_ID_NAME = "X-B3-SpanId"; private static final Logger log = LoggerFactory.getLogger(Slf4jScopeDecorator.class); private final SleuthProperties sleuthProperties; private final SleuthSlf4jProperties sleuthSlf4jProperties; Slf4jScopeDecorator(SleuthProperties sleuthProperties, SleuthSlf4jProperties sleuthSlf4jProperties) { this.sleuthProperties = sleuthProperties; this.sleuthSlf4jProperties = sleuthSlf4jProperties; } static void replace(String key, @Nullable String value) { if (value != null) { MDC.put(key, value); } else { MDC.remove(key); } } @Override public CurrentTraceContext.Scope decorateScope(TraceContext currentSpan, CurrentTraceContext.Scope scope) { final String previousTraceId = MDC.get("traceId"); final String previousParentId = MDC.get("parentId"); final String previousSpanId = MDC.get("spanId"); final String spanExportable = MDC.get("spanExportable"); final String legacyPreviousTraceId = MDC.get(LEGACY_TRACE_ID_NAME); final String legacyPreviousParentId = MDC.get(LEGACY_PARENT_ID_NAME); final String legacyPreviousSpanId = MDC.get(LEGACY_SPAN_ID_NAME); final String legacySpanExportable = MDC.get(LEGACY_EXPORTABLE_NAME); final List<AbstractMap.SimpleEntry<String, String>> previousMdc = previousMdc(); if (currentSpan != null) { String traceIdString = currentSpan.traceIdString(); MDC.put("traceId", traceIdString); MDC.put(LEGACY_TRACE_ID_NAME, traceIdString); String parentId = currentSpan.parentId() != null ? HexCodec.toLowerHex(currentSpan.parentId()) : null; replace("parentId", parentId); replace(LEGACY_PARENT_ID_NAME, parentId); String spanId = HexCodec.toLowerHex(currentSpan.spanId()); MDC.put("spanId", spanId); MDC.put(LEGACY_SPAN_ID_NAME, spanId); String sampled = String.valueOf(currentSpan.sampled()); MDC.put("spanExportable", sampled); MDC.put(LEGACY_EXPORTABLE_NAME, sampled); log("Starting scope for span: {}", currentSpan); if (currentSpan.parentId() != null) { if (log.isTraceEnabled()) { log.trace("With parent: {}", currentSpan.parentId()); } } for (String key : whitelistedBaggageKeysWithValue(currentSpan)) { MDC.put(key, ExtraFieldPropagation.get(currentSpan, key)); } for (String key : whitelistedPropagationKeysWithValue(currentSpan)) { MDC.put(key, ExtraFieldPropagation.get(currentSpan, key)); } for (String key : whitelistedLocalKeysWithValue(currentSpan)) { MDC.put(key, ExtraFieldPropagation.get(currentSpan, key)); } } else { MDC.remove("traceId"); MDC.remove("parentId"); MDC.remove("spanId"); MDC.remove("spanExportable"); MDC.remove(LEGACY_TRACE_ID_NAME); MDC.remove(LEGACY_PARENT_ID_NAME); MDC.remove(LEGACY_SPAN_ID_NAME); MDC.remove(LEGACY_EXPORTABLE_NAME); for (String s : whitelistedBaggageKeys()) { MDC.remove(s); } for (String s : whitelistedPropagationKeys()) { MDC.remove(s); } for (String s : whitelistedLocalKeys()) { MDC.remove(s); } previousMdc.clear(); } /** * Thread context scope. * * @author Adrian Cole */ class ThreadContextCurrentTraceContextScope implements CurrentTraceContext.Scope { @Override public void close() { log("Closing scope for span: {}", currentSpan); scope.close(); replace("traceId", previousTraceId); replace("parentId", previousParentId); replace("spanId", previousSpanId); replace("spanExportable", spanExportable); replace(LEGACY_TRACE_ID_NAME, legacyPreviousTraceId); replace(LEGACY_PARENT_ID_NAME, legacyPreviousParentId); replace(LEGACY_SPAN_ID_NAME, legacyPreviousSpanId); replace(LEGACY_EXPORTABLE_NAME, legacySpanExportable); for (AbstractMap.SimpleEntry<String, String> entry : previousMdc) { replace(entry.getKey(), entry.getValue()); } } } return new ThreadContextCurrentTraceContextScope(); } private List<AbstractMap.SimpleEntry<String, String>> previousMdc() { List<AbstractMap.SimpleEntry<String, String>> previousMdc = new ArrayList<>(); List<String> keys = new ArrayList<>(whitelistedBaggageKeys()); keys.addAll(whitelistedPropagationKeys()); keys.addAll(whitelistedLocalKeys()); for (String key : keys) { previousMdc.add(new AbstractMap.SimpleEntry<>(key, MDC.get(key))); } return previousMdc; } private List<String> whitelistedKeys(List<String> keysToFilter) { List<String> keys = new ArrayList<>(); for (String baggageKey : keysToFilter) { if (this.sleuthSlf4jProperties.getWhitelistedMdcKeys().contains(baggageKey)) { keys.add(baggageKey); } } return keys; } private List<String> whitelistedBaggageKeys() { return whitelistedKeys(this.sleuthProperties.getBaggageKeys()); } private List<String> whitelistedKeysWithValue(TraceContext context, List<String> keys) { if (context == null) { return Collections.EMPTY_LIST; } List<String> nonEmpty = new ArrayList<>(); for (String key : keys) { if (StringUtils.hasText(ExtraFieldPropagation.get(context, key))) { nonEmpty.add(key); } } return nonEmpty; } private List<String> whitelistedBaggageKeysWithValue(TraceContext context) { return whitelistedKeysWithValue(context, whitelistedBaggageKeys()); } private List<String> whitelistedPropagationKeys() { return whitelistedKeys(this.sleuthProperties.getPropagationKeys()); } private List<String> whitelistedLocalKeys() { return whitelistedKeys(this.sleuthProperties.getLocalKeys()); } private List<String> whitelistedPropagationKeysWithValue(TraceContext context) { return whitelistedKeysWithValue(context, whitelistedPropagationKeys()); } private List<String> whitelistedLocalKeysWithValue(TraceContext context) { return whitelistedKeysWithValue(context, whitelistedLocalKeys()); } private void log(String text, TraceContext span) { if (span == null) { return; } if (log.isTraceEnabled()) { log.trace(text, span); } } }

(3) logback默认打出的日志会加入appname、traceId、spaceId、Export信息,官方解释如下:

测试:

(1)order服务日志如下:

2021-02-08 19:10:26.593 DEBUG [cloud-order-service,7521499505d655df,7521499505d655df,true] 89224 --- [nio-8088-exec-4] cn.qz.cloud.service.PaymentFeignService : [PaymentFeignService#getServerPort] ---> GET http://CLOUD-PAYMENT-SERVICE/pay/getServerPort HTTP/1.1 2021-02-08 19:10:26.593 DEBUG [cloud-order-service,7521499505d655df,7521499505d655df,true] 89224 --- [nio-8088-exec-4] cn.qz.cloud.service.PaymentFeignService : [PaymentFeignService#getServerPort] ---> END HTTP (0-byte body)

(2) payment日志如下:

2021-02-08 19:10:26.596 INFO [cloud-payment-service,7521499505d655df,69a517ce04d901b9,true] 47764 --- [nio-8081-exec-9] cn.qz.cloud.filter.HeaderFilter : key: x-b3-traceid, value: 7521499505d655df 2021-02-08 19:10:26.597 INFO [cloud-payment-service,7521499505d655df,69a517ce04d901b9,true] 47764 --- [nio-8081-exec-9] cn.qz.cloud.filter.HeaderFilter : key: x-b3-spanid, value: 69a517ce04d901b9 2021-02-08 19:10:26.597 INFO [cloud-payment-service,7521499505d655df,69a517ce04d901b9,true] 47764 --- [nio-8081-exec-9] cn.qz.cloud.filter.HeaderFilter : key: x-b3-parentspanid, value: 7521499505d655df 2021-02-08 19:10:26.597 INFO [cloud-payment-service,7521499505d655df,69a517ce04d901b9,true] 47764 --- [nio-8081-exec-9] cn.qz.cloud.filter.HeaderFilter : key: x-b3-sampled, value: 1 2021-02-08 19:10:26.597 INFO [cloud-payment-service,7521499505d655df,69a517ce04d901b9,true] 47764 --- [nio-8081-exec-9] cn.qz.cloud.filter.HeaderFilter : key: accept, value: */* 2021-02-08 19:10:26.597 INFO [cloud-payment-service,7521499505d655df,69a517ce04d901b9,true] 47764 --- [nio-8081-exec-9] cn.qz.cloud.filter.HeaderFilter : key: user-agent, value: Java/1.8.0_171 2021-02-08 19:10:26.597 INFO [cloud-payment-service,7521499505d655df,69a517ce04d901b9,true] 47764 --- [nio-8081-exec-9] cn.qz.cloud.filter.HeaderFilter : key: host, value: 12.0.215.93:8081 2021-02-08 19:10:26.597 INFO [cloud-payment-service,7521499505d655df,69a517ce04d901b9,true] 47764 --- [nio-8081-exec-9] cn.qz.cloud.filter.HeaderFilter : key: connection, value: keep-alive

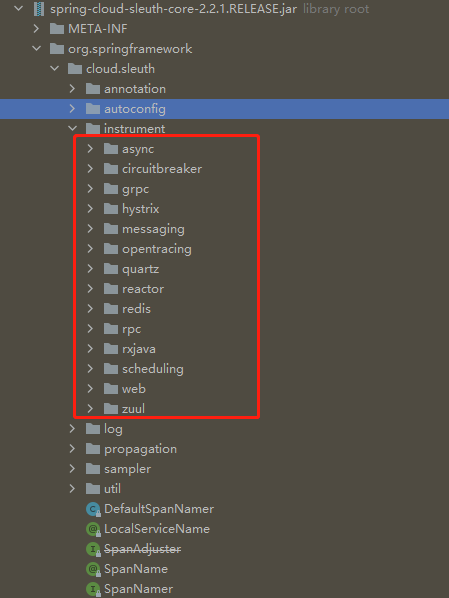

补充:Sleuth对其他相关的组件进行了集成

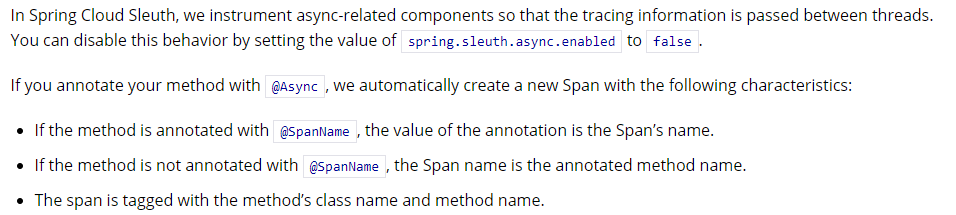

(1) 对@Async 异步线程池做了集成,官方解释如下:也就是说trace信息为同主线程一样,每个@Async标注的异步方法会生成一个新的Span信息。简单的说就是traceId同主线程一样,spanId重新生成: