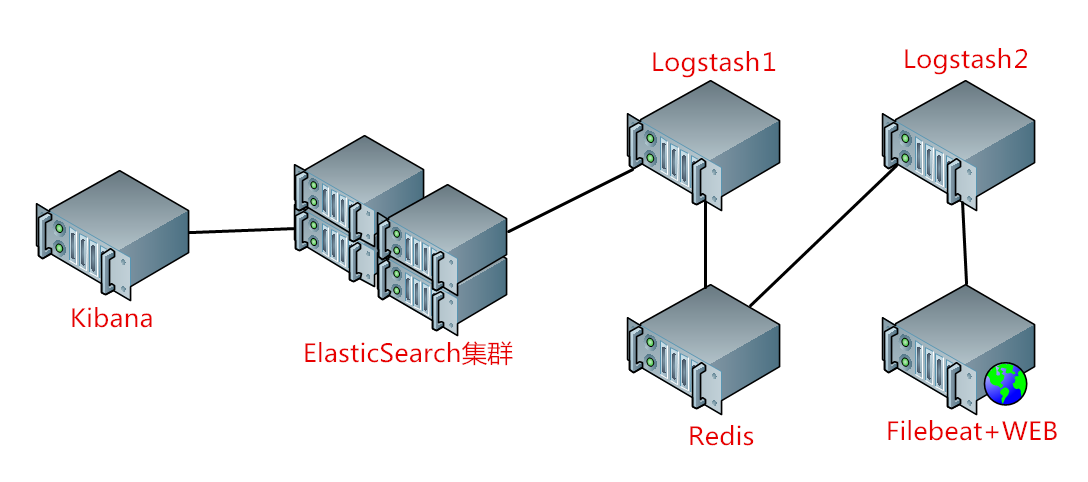

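I. Basic System Architecture

An earlier post covered building an ELK log analysis system on physical machines. This post shows how to build the same system more quickly and conveniently with Docker. The architecture is as follows:

Notes: the WEB server is the host whose logs are collected. Filebeat collects the logs and ships them to Logstash2, which pushes them into a Redis message queue. Logstash1 then reads the events from Redis and forwards them to ElasticSearch, which indexes them, and Kibana visualizes the result. Two Logstash layers are used because the WEB server can be any kind of server, and several different logs may need to be analyzed. This architecture suits larger clusters: in production, each single-point component can be scaled out, for example by clustering Redis and Logstash.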

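II. Building the ES Cluster with Docker

Assuming Docker is already installed, create the host directories that will be mounted into the ES container and edit the configuration file:

    ~]# mkdir -pv /root/elk/{logs,data,conf}

    vim /root/elk/conf/elasticsearch.yml
    cluster.name: es5.6-cluster       # cluster name; must be identical on every node of the cluster
    node.name: node1                  # node identifier
    network.host: 192.168.29.115      # node IP
    http.port: 9200                   # listening port
    discovery.zen.ping.unicast.hosts: ["192.168.29.115", "192.168.29.116"]   # cluster nodes
    http.cors.enabled: true
    http.cors.allow-origin: "*"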

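Start the container. With --network host the container shares the host's network stack, so ES listens directly on the host's ports 9200 and 9300 and no -p port mappings are needed:

    ~]# docker container run --name es5.6 --network host \
          -v /root/elk/conf/elasticsearch.yml:/usr/share/elasticsearch/config/elasticsearch.yml \
          -v /root/elk/data/:/usr/share/elasticsearch/data/ \
          -v /root/elk/logs/:/usr/share/elasticsearch/logs/ \
          -d --rm docker.io/elasticsearch:5.6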

Install ElasticSearch-head to check that the ES cluster came up correctly:

    ~]# docker container run --name es-head -p 9100:9100 -d --rm mobz/elasticsearch-head:5
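The cluster state can also be checked from the command line. The sketch below queries the cluster health API with curl; the helper names `es_health_url` and `es_health` are made up for illustration, and the host and port are assumed to match the node configured in elasticsearch.yml.

```shell
#!/bin/sh
# Sketch: query the ES cluster health API from the shell.
# ES_HOST and ES_PORT default to the node from elasticsearch.yml (assumption).
ES_HOST="${ES_HOST:-192.168.29.115}"
ES_PORT="${ES_PORT:-9200}"

# Build the URL of the cluster health endpoint (hypothetical helper).
es_health_url() {
    printf 'http://%s:%s/_cluster/health?pretty\n' "$ES_HOST" "$ES_PORT"
}

# Fetch the cluster health document.
# -s hides the progress meter, -f makes curl return non-zero on HTTP errors.
es_health() {
    curl -sf "$(es_health_url)"
}

# Usage:
#   es_health
```

If both nodes have joined, the response should report a `number_of_nodes` of 2 and a `status` of green or yellow.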

III. Installing Filebeat with Docker

Edit the Filebeat configuration file to define which log files are shipped to Logstash and where they are sent, then mount the configuration file and the logs into the container:

    vim /root/filebeat/conf/filebeat.yml
    filebeat.prospectors:
    - input_type: log
      paths:
        - /var/log/messages
      exclude_lines: ["^DBG"]
      document_type: system-log-0019
    - input_type: log
      paths:
        - /var/log/nginx/access.log
      document_type: nginx-log-0019    # becomes the type field in Logstash, usable in if conditions
    output.logstash:
      hosts: ["192.168.29.119:5044","192.168.29.119:5045"]
      enabled: true
      worker: 1
      compression_level: 3
      loadbalance: true

    ~]# docker container run --name filebeat --network host \
          -v /root/filebeat/conf/filebeat.yml:/usr/share/filebeat/filebeat.yml \
          -v /var/log/messages:/var/log/messages \
          -v /var/log/nginx/access.log:/var/log/nginx/access.log \
          --rm -d docker.elastic.co/beats/filebeat:5.6.15 \
          filebeat -c /usr/share/filebeat/filebeat.yml
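One thing to watch with single-file mounts: if a host path given to -v does not exist, Docker creates it as a directory, and Filebeat then finds a directory where it expects a file. A minimal pre-flight check might look like this; `check_mount_files` is a hypothetical helper, not part of Docker or Filebeat.

```shell
#!/bin/sh
# Sketch: verify that every host path to be bind-mounted is an existing regular file.
# Prints each offending path to stderr and returns 1 if any is missing.
check_mount_files() {
    missing=0
    for f in "$@"; do
        if [ ! -f "$f" ]; then
            echo "missing or not a regular file: $f" >&2
            missing=1
        fi
    done
    return "$missing"
}

# Usage before starting the Filebeat container:
#   check_mount_files /root/filebeat/conf/filebeat.yml \
#       /var/log/messages /var/log/nginx/access.log && echo "ok to start"
```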

IV. Installing Logstash with Docker

The following shows Logstash collecting several logs and writing them to ES (a standalone demonstration, not part of this architecture):

    vim /root/logstash/pipeline/stdout.conf
    input {
      file {
        type => "nginxaccesslog"
        path => "/var/log/nginx/access.log"
        start_position => "beginning"
      }
      file {
        type => "syslog"
        path => "/var/log/messages"
        start_position => "beginning"
      }
    }
    output {
      if [type] == "nginxaccesslog" {
        elasticsearch {
          hosts => ["192.168.29.115:9200"]
          index => "nginx-log-0018-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "syslog" {
        elasticsearch {
          hosts => ["192.168.29.115:9200"]
          index => "syslog-0018-%{+YYYY.MM.dd}"
        }
      }
    }

Start Logstash with this pipeline mounted:

    ~]# docker container run --name logstash \
          -v /var/log/nginx/access.log:/var/log/nginx/access.log \
          -v /var/log/messages:/var/log/messages \
          -v /root/logstash/pipeline/stdout.conf:/etc/logstash/conf.d/stdout.conf \
          -d --network host -it --rm docker.io/logstash:5.6 \
          -f /etc/logstash/conf.d/stdout.conf

Configure the input and output on Logstash2:

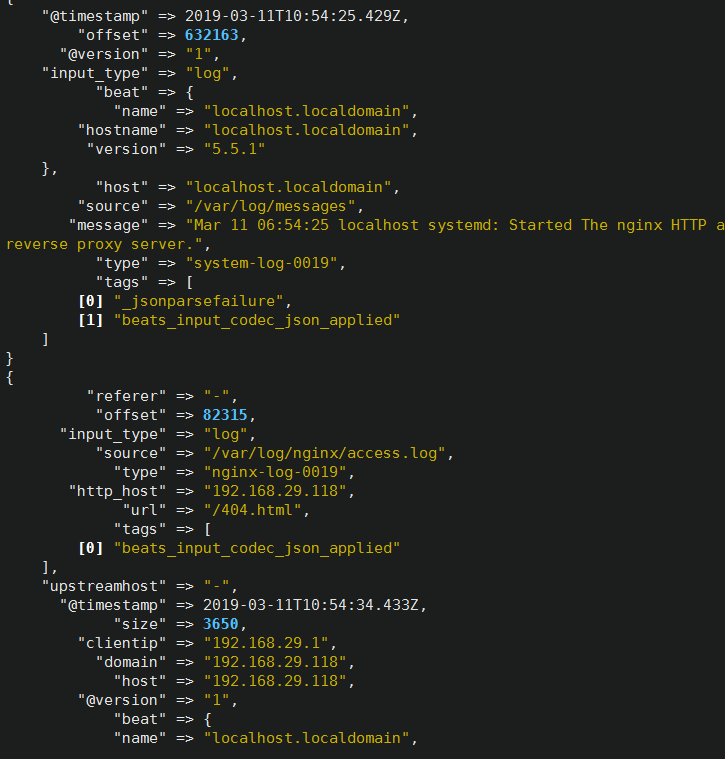

Start with a test: Filebeat collects /var/log/messages and /var/log/nginx/access.log on the WEB server, and Logstash2 prints the received events to standard output. The configuration is:

    vim /root/logstash/conf/stdout.conf
    input {
      beats {
        port => 5044
        codec => "json"
      }
      beats {
        port => 5045
        codec => "json"
      }
    }
    output {
      stdout {
        codec => "rubydebug"
      }
    }

Start Logstash2 and check that it receives the logs and prints them:

    ~]# docker container run --name logstash \
          -v /root/logstash/conf/stdout.conf:/etc/logstash/conf.d/stdout.conf \
          -it --network host --rm docker.io/logstash:5.6 \
          -f /etc/logstash/conf.d/stdout.conf

Output like that shown in the screenshot means everything is working:

Both /var/log/messages and /var/log/nginx/access.log are collected and printed correctly.
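To make a test event easy to spot in the rubydebug output, a uniquely tagged line can be written to syslog on the WEB server. This is only a sketch: the message format and the helper names are invented here, and it assumes syslog on that host writes to /var/log/messages.

```shell
#!/bin/sh
# Sketch: emit a uniquely tagged syslog line that is easy to find in Logstash output.

# Build the test message from a caller-supplied tag (hypothetical format).
make_test_message() {
    printf 'elk-pipeline-test %s\n' "$1"
}

# Write the message to syslog using the current Unix timestamp as the tag.
# Assumes the local syslog daemon appends to /var/log/messages.
send_test_message() {
    logger "$(make_test_message "$(date +%s)")"
}

# Usage on the WEB server:
#   send_test_message
# then look for "elk-pipeline-test" in the Logstash2 terminal output.
```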

V. Installing Redis with Docker

Edit the configuration. Comments are placed on their own lines because redis.conf does not accept trailing comments after a directive:

    vim /root/redis/conf/redis.conf
    bind 0.0.0.0
    port 6379
    # set a password
    requirepass 123456
    save ""
    pidfile /var/run/redis/redis.pid
    # mind the permissions on this file
    logfile /var/log/redis/redis.log

Run it:

    ~]# docker container run --name redis \
          -v /root/redis/conf/redis.conf:/usr/local/etc/redis/redis.conf \
          -v /root/redis/log/redis.log:/var/log/redis/redis.log \
          -v /root/redis/run/redis.pid:/var/run/redis/redis.pid \
          -v /root/redis/data/:/data/ \
          --network host -d docker.io/redis:4 \
          redis-server /usr/local/etc/redis/redis.conf
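The log and pid files are mounted as individual files, so they need to exist on the host and be writable by the user Redis runs as inside the container before the container starts. A minimal preparation sketch, assuming the /root/redis layout used above; `prepare_redis_mounts` is a hypothetical helper, and the permissive mode 666 is a shortcut for a test setup rather than a recommendation.

```shell
#!/bin/sh
# Sketch: create the host directories and files that the Redis container mounts.
# $1 is the base directory (defaults to /root/redis, as used in this post).
prepare_redis_mounts() {
    base="${1:-/root/redis}"
    mkdir -p "$base/conf" "$base/log" "$base/run" "$base/data"
    # Pre-create the single-file mounts; otherwise Docker would create
    # directories with these names.
    touch "$base/log/redis.log" "$base/run/redis.pid"
    # Let the non-root redis user in the container write to them
    # (assumption: coarse permissions are acceptable for a test setup).
    chmod 666 "$base/log/redis.log" "$base/run/redis.pid"
}

# Usage:
#   prepare_redis_mounts /root/redis
```

redis.conf itself still has to be placed in the conf directory by hand.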

VI. Starting and Configuring Logstash2 with Docker to Collect Multiple Logs and Send Them to Redis

    vim /root/logstash/conf/stdout.conf
    input {
      beats {
        port => 5044
        codec => "json"
      }
      beats {
        port => 5045
        codec => "json"
      }
    }
    output {
      if [type] == "system-log-0019" {
        redis {
          data_type => "list"
          host => "192.168.29.117"
          port => "6379"
          key => "system-log-0019"
          db => "4"
          password => "123456"
        }
      }
      if [type] == "nginx-log-0019" {
        redis {
          data_type => "list"
          host => "192.168.29.117"
          port => "6379"
          key => "nginx-log-0019"
          db => "4"
          password => "123456"
        }
      }
    }

Start Logstash2 again:

    ~]# docker container run --name logstash \
          -v /root/logstash/conf/stdout.conf:/etc/logstash/conf.d/stdout.conf \
          -it --network host --rm docker.io/logstash:5.6 \
          -f /etc/logstash/conf.d/stdout.conf

Connect to Redis and check that the logs have been collected:

    ~]# redis-cli -h 192.168.29.117
    192.168.29.117:6379> AUTH 123456
    OK
    192.168.29.117:6379> SELECT 4          # choose the database number
    OK
    192.168.29.117:6379[4]> KEYS *
    1) "nginx-log-0019"                    # the logs were written; these are the keys defined in the Logstash config
    2) "system-log-0019"
    192.168.29.117:6379[4]> LLEN system-log-0019
    (integer) 6400
    192.168.29.117:6379[4]> LLEN nginx-log-0019
    (integer) 313
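The same check can be scripted without an interactive session. The sketch below wraps redis-cli with the host, password, and database number used in this post; `queue_len` and the `REDIS_*` variables are names chosen for illustration.

```shell
#!/bin/sh
# Sketch: read the length of a Redis list non-interactively.
# Connection settings default to the values used in this post (assumptions).
REDIS_CLI="${REDIS_CLI:-redis-cli}"
REDIS_HOST="${REDIS_HOST:-192.168.29.117}"
REDIS_PORT="${REDIS_PORT:-6379}"
REDIS_PASS="${REDIS_PASS:-123456}"
REDIS_DB="${REDIS_DB:-4}"

# Print the number of events waiting in the list named by $1.
# -a passes the password and -n selects the database number.
queue_len() {
    "$REDIS_CLI" -h "$REDIS_HOST" -p "$REDIS_PORT" -a "$REDIS_PASS" -n "$REDIS_DB" LLEN "$1"
}

# Usage:
#   for key in system-log-0019 nginx-log-0019; do
#       echo "$key: $(queue_len "$key")"
#   done
```

Passing the password with -a exposes it in the process list, so this is best kept to test environments.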

You can also check the connections on the Redis host:

    ~]# lsof -n -i:6379
    COMMAND     PID    USER  FD  TYPE DEVICE SIZE/OFF NODE NAME
    redis-ser 14230 polkitd  6u  IPv4 106595      0t0  TCP *:6379 (LISTEN)
    redis-ser 14230 polkitd  7u  IPv4 118204      0t0  TCP 192.168.29.117:6379->192.168.29.104:40320 (ESTABLISHED)
    redis-ser 14230 polkitd 10u  IPv4 109238      0t0  TCP 127.0.0.1:6379->127.0.0.1:52460 (ESTABLISHED)
    redis-cli 17066    root  3u  IPv4 117806      0t0  TCP 127.0.0.1:52460->127.0.0.1:6379 (ESTABLISHED)

The steps so far cover the log path WEB→Filebeat→Logstash2→Redis. Next comes Redis→Logstash1→ES→Kibana.

VII. Configuring Logstash1 with Docker

    vim /root/logstash/conf/stdout.conf
    input {
      redis {
        data_type => "list"
        host => "192.168.29.117"
        port => "6379"
        key => "system-log-0019"
        db => "4"
        password => "123456"
      }
      redis {
        data_type => "list"
        host => "192.168.29.117"
        port => "6379"
        key => "nginx-log-0019"
        db => "4"
        password => "123456"
        codec => "json"
      }
    }
    output {
      if [type] == "system-log-0019" {          # use if conditions to choose the ES node
        elasticsearch {
          hosts => ["192.168.29.115:9200"]      # the node can be customized
          index => "system-log-0019-%{+YYYY.MM.dd}"
        }
      }
      if [type] == "nginx-log-0019" {
        elasticsearch {
          hosts => ["192.168.29.115:9200"]
          index => "nginx-log-0019-%{+YYYY.MM.dd}"
        }
      }
    }

Start Logstash1 with the configuration mounted:

    ~]# docker container run --name logstash \
          -v /root/logstash/conf/stdout.conf:/etc/logstash/conf.d/stdout.conf \
          -it --network host --rm docker.io/logstash:5.6 \
          -f /etc/logstash/conf.d/stdout.conf

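Check whether the data in Redis has been consumed:

    192.168.29.117:6379[4]> LLEN nginx-log-0019
    (integer) 0
    192.168.29.117:6379[4]> LLEN system-log-0019
    (integer) 0

Both lists are now empty, which shows that Logstash1 has read the queued events from Redis and forwarded them to ES.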
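An empty queue only shows that the events left Redis. To confirm they arrived in ES, the daily indices can be listed with the `_cat/indices` API. A minimal sketch, assuming the ES node and index names used above; `es_indices_url` and `es_list_indices` are hypothetical helpers.

```shell
#!/bin/sh
# Sketch: list the ES indices created by Logstash1.
# ES_HOST and ES_PORT default to the node used in this post (assumption).
ES_HOST="${ES_HOST:-192.168.29.115}"
ES_PORT="${ES_PORT:-9200}"

# Build the _cat/indices URL for an index pattern given in $1.
# ?v asks ES to include a header row in the table.
es_indices_url() {
    printf 'http://%s:%s/_cat/indices/%s?v\n' "$ES_HOST" "$ES_PORT" "$1"
}

# Print the matching indices with their document counts and sizes.
es_list_indices() {
    curl -sf "$(es_indices_url "$1")"
}

# Usage:
#   es_list_indices 'system-log-0019-*'
#   es_list_indices 'nginx-log-0019-*'
```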

VIII. Starting and Configuring Kibana with Docker

Configure Kibana:

    vim /etc/kibana/kibana.yml
    server.host: "127.0.0.1"
    elasticsearch.url: "http://192.168.29.115:9200"

Start Kibana. With --network host it listens directly on the host's port 5601:

    ~]# docker container run --name kibana \
          -v /etc/kibana/kibana.yml:/etc/kibana/kibana.yml \
          --network host \
          -d --rm kibana:5.6

For security, it is recommended to access Kibana through an Nginx reverse proxy with encryption enabled.
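As a rough sketch of such a proxy, the function below prints an Nginx server block that terminates TLS and forwards requests to Kibana on 127.0.0.1:5601. The server name, the certificate and htpasswd paths, and the function name `kibana_nginx_conf` are all placeholders, and the basic-auth lines are an optional addition rather than something the original setup specifies.

```shell
#!/bin/sh
# Sketch: print an Nginx server block that proxies HTTPS traffic to Kibana.
# $1 is the server name (placeholder default: kibana.example.com).
kibana_nginx_conf() {
    server_name="${1:-kibana.example.com}"
    cat <<EOF
server {
    listen 443 ssl;
    server_name ${server_name};

    # Placeholder certificate paths; replace with real files.
    ssl_certificate     /etc/nginx/ssl/kibana.crt;
    ssl_certificate_key /etc/nginx/ssl/kibana.key;

    # Optional basic authentication in front of Kibana.
    auth_basic           "Kibana";
    auth_basic_user_file /etc/nginx/.htpasswd;

    location / {
        proxy_pass http://127.0.0.1:5601;
        proxy_set_header Host \$host;
        proxy_set_header X-Real-IP \$remote_addr;
    }
}
EOF
}

# Usage:
#   kibana_nginx_conf kibana.example.com > /etc/nginx/conf.d/kibana.conf
#   nginx -t && nginx -s reload
```

Because kibana.yml binds Kibana to 127.0.0.1, the proxy has to run on the same host as Kibana.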

Finally, add the index patterns in Kibana, and the collected logs can be browsed and searched.