https://github.com/PacktPublishing/Unity-2018-Artificial-Intelligence-Cookbook-Second-Edition

1 Behaviors - Intelligent Movement

Behaviors – Intelligent Movement explores some of the most interesting movement algorithms based on the steering behavior principles developed by Craig Reynolds, along with work by Ian Millington.

They act as a foundation for most of the AI used in advanced games and other algorithms that rely on movement, such as the family of path-finding algorithms.

2 Navigation

Navigation covers path-finding algorithms for navigating complex scenarios.

It includes several ways of representing the world using different kinds of graph structures, and several path-finding algorithms, each suited to different situations.

3 Decision Making (already read)

Decision Making explains different decision-making techniques that are flexible enough to adapt to different types of games, and robust enough to let us build modular decision-making systems.

4 The New NavMesh API

The New NavMesh API shows the inner workings of the NavMesh API introduced in Unity 5.6, and explains how it lets us harness the power of the NavMesh engine and tune it in real time.

5 Coordination and Tactics

Coordination and Tactics deals with a number of recipes for coordinating different agents as a whole organism, such as formations, and techniques that allow us to make tactical decisions based on graphs, such as waypoints and influence maps.

6 Agent Awareness

Agent Awareness explores different ways to simulate sense stimuli on an agent.

We will learn how to use tools we already know to create these simulations: colliders and graphs.

7 Board Games and Applied Search AI

Board Games and Applied Search AI covers a family of algorithms for developing board games, as well as techniques for creating AI for turn-based games.

8 Learning Techniques

Learning Techniques explores the field of machine learning. It gives us a head start in learning machine learning techniques and applying them to our games.

9 Procedural Content Generation

Procedural Content Generation explores different techniques for adding replayability to our games by creating content procedurally.

It will give us some pointers in the right direction for different types of content.

10 Miscellaneous

Miscellaneous introduces new techniques and uses algorithms learned in previous chapters to create new behaviors that don't quite fit into a definite category.

1 Behaviors - Intelligent Movement

In this chapter, we will develop AI algorithms for movement by covering the following recipes:

- Creating the behaviors template

- Pursuing and evading

- Adjusting the agent for physics

- Arriving and leaving

- Facing objects

- Wandering around

- Following a path

- Avoiding agents

- Avoiding walls

- Blending behaviors by weight

- Blending behaviors by priority

- Shooting a projectile

- Predicting a projectile's landing spot

- Targeting a projectile

- Creating a jump system

Introduction

Creating the behaviors template

Steering serves as a custom data type for storing the agent's movement and rotation:

    using UnityEngine;

    public class MySteering
    {
        // angular acceleration
        public float angular;
        // linear acceleration
        public Vector3 linear;

        public MySteering()
        {
            angular = 0.0f;
            linear = new Vector3();
        }
    }

AgentBehaviour is the template class for most of the behaviors covered in this chapter:

    using UnityEngine;

    public class MyAgentBehaviour : MonoBehaviour
    {
        public GameObject target;
        protected MyAgent agent;

        public virtual void Awake()
        {
            agent = gameObject.GetComponent<MyAgent>();
        }

        // every frame, hand the computed steering over to the agent
        public virtual void Update()
        {
            agent.SetSteering(GetSteering());
        }

        // overridden by each concrete behavior
        public virtual MySteering GetSteering()
        {
            return new MySteering();
        }
    }

Agent is the main component, and it makes use of behaviors in order to create intelligent movement.

    using UnityEngine;

    public class MyAgent : MonoBehaviour
    {
        public float maxSpeed;
        public float maxAccel;
        public float orientation;
        public float rotation;
        public Vector3 velocity;
        protected MySteering steering;

        void Start()
        {
            velocity = Vector3.zero;
            steering = new MySteering();
        }

        public void SetSteering(MySteering steering)
        {
            this.steering = steering;
        }

        public virtual void Update()
        {
            Vector3 displacement = velocity * Time.deltaTime;
            orientation += rotation * Time.deltaTime;
            // we need to limit the orientation values
            // to be in the range (0 - 360)
            if (orientation < 0.0f)
            {
                orientation += 360.0f;
            }
            else if (orientation > 360.0f)
            {
                orientation -= 360.0f;
            }
            transform.Translate(displacement, Space.World);
            // reset to the identity rotation, then rotate around the Y axis
            // (new Quaternion() is all zeros, not the identity)
            transform.rotation = Quaternion.identity;
            transform.Rotate(Vector3.up, orientation);
        }

        public virtual void LateUpdate()
        {
            // accumulate the acceleration given by steering into the velocity each frame
            velocity += steering.linear * Time.deltaTime;
            rotation += steering.angular * Time.deltaTime;
            // clamp the speed every frame so it never exceeds maxSpeed
            if (velocity.magnitude > maxSpeed)
            {
                velocity.Normalize();
                velocity = velocity * maxSpeed;
            }
            if (steering.angular == 0.0f)
            {
                rotation = 0.0f;
            }
            if (steering.linear.sqrMagnitude == 0.0f)
            {
                velocity = Vector3.zero;
            }
            // reset steering to zero every frame
            steering = new MySteering();
        }
    }

https://docs.unity3d.com/Manual/ExecutionOrder.html

https://docs.unity3d.com/Manual/class-MonoManager.html
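Behaviors write their steering in Update and MyAgent consumes it in LateUpdate, so one frame of movement is a simple Euler integration step. The sketch below mirrors only the linear part of that step so it can run outside Unity. It uses System.Numerics.Vector3 instead of UnityEngine.Vector3, and KinematicAgent and Integrate are hypothetical names, not classes from the book.

```csharp
using System.Numerics;

// Hypothetical stand-in for MyAgent's linear movement, runnable without Unity.
public class KinematicAgent
{
    public Vector3 Position;
    public Vector3 Velocity;
    public float MaxSpeed;

    public KinematicAgent(float maxSpeed) { MaxSpeed = maxSpeed; }

    // One frame: move with the current velocity (MyAgent.Update), then
    // apply the steering acceleration and clamp the speed (MyAgent.LateUpdate).
    public void Integrate(Vector3 linearAccel, float dt)
    {
        // displacement uses the velocity computed in the previous frame
        Position += Velocity * dt;

        // accumulate acceleration into velocity
        Velocity += linearAccel * dt;

        // clamp the speed without changing the direction
        if (Velocity.Length() > MaxSpeed)
            Velocity = Vector3.Normalize(Velocity) * MaxSpeed;

        // like MyAgent, stop dead when no linear steering was received
        if (linearAccel.LengthSquared() == 0f)
            Velocity = Vector3.Zero;
    }
}
```

The one-frame lag between steering and displacement comes from the Update/LateUpdate split. This is why the execution-order pages linked above matter when several components touch the same agent.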

Pursuing and evading

We start with a couple of basic behaviors called Seek and Flee.

    using UnityEngine;

    public class MySeek : MyAgentBehaviour
    {
        public override MySteering GetSteering()
        {
            MySteering steering = new MySteering();
            // head straight for the target
            steering.linear = target.transform.position - transform.position;
            steering.linear.Normalize();
            // velocity changes through acceleration, so scale by maxAccel
            steering.linear = steering.linear * agent.maxAccel;
            return steering;
        }
    }

    using UnityEngine;

    public class MyFlee : MyAgentBehaviour
    {
        public override MySteering GetSteering()
        {
            MySteering steering = new MySteering();
            // move directly away from the target
            steering.linear = transform.position - target.transform.position;
            steering.linear.Normalize();
            // velocity changes through acceleration, so scale by maxAccel
            steering.linear = steering.linear * agent.maxAccel;
            return steering;
        }
    }

Pursue and Evade build on Seek and Flee. They take the target's velocity into account to predict where it will be next, and aim at that predicted position using an internal extra object.
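The prediction step that Pursue adds on top of Seek can be sketched in plain C#. This is a sketch under assumptions: it uses System.Numerics instead of UnityEngine, and SteeringMath, PredictTarget and the maxPrediction cap are hypothetical names. The cap follows a common formulation of the technique: look ahead by the time needed to cover the distance at the current speed, but never further than maxPrediction seconds.

```csharp
using System.Numerics;

// Hypothetical helpers illustrating Seek/Flee and the Pursue prediction.
public static class SteeringMath
{
    // Seek: full acceleration straight towards the target.
    public static Vector3 Seek(Vector3 self, Vector3 target, float maxAccel)
    {
        Vector3 dir = target - self;
        if (dir.LengthSquared() == 0f) return Vector3.Zero;
        return Vector3.Normalize(dir) * maxAccel;
    }

    // Flee: the opposite of Seek.
    public static Vector3 Flee(Vector3 self, Vector3 target, float maxAccel) =>
        -Seek(self, target, maxAccel);

    // Pursue/Evade: estimate where the target will be; assumes maxPrediction > 0.
    public static Vector3 PredictTarget(Vector3 self, float selfSpeed,
        Vector3 targetPos, Vector3 targetVel, float maxPrediction)
    {
        float distance = Vector3.Distance(targetPos, self);
        float prediction = maxPrediction;
        // if we are fast enough to get there sooner, look ahead less
        if (selfSpeed > distance / maxPrediction)
            prediction = distance / selfSpeed;
        return targetPos + targetVel * prediction;
    }
}
```

Pursue then seeks the predicted point, and Evade flees from it. In the book's Unity version, the "internal extra object" plays the role of this predicted position.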

Adjusting the agent for physics

Arriving and leaving

Facing objects

Wandering around

Following a path

Avoiding agents

Avoiding walls

Blending behaviors by weight

Blending behaviors by priority

Shooting a projectile

Predicting a projectile's landing spot

Targeting a projectile

Creating a jump system

2 Navigation

In this chapter, we will cover the following recipes:

- Representing the world with grids

- Representing the world with points of visibility

- Representing the world with a self-made navigation mesh

- Finding your way out of a maze with DFS

- Finding the shortest path in a grid with BFS

- Finding the shortest path with Dijkstra

- Finding the best-promising path with A*

- Improving A* for memory: IDA*

- Planning navigation in several frames: time-sliced search

- Smoothing a path

Introduction

Representing the world with grids

A grid is the most widely used structure for representing worlds in games, because it is easy to implement and visualize.
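Part of what makes grids easy to implement is that converting between world positions and cells, and finding a cell's neighbours, is just arithmetic. The sketch below assumes a few things that are not from the book: a bool[,] walkability map indexed [row, col], square cells of cellSize world units laid on the XZ plane starting at the origin, and 4-connectivity. GridMap and its methods are hypothetical names.

```csharp
using System;
using System.Collections.Generic;
using System.Numerics;

// Hypothetical grid representation: true = walkable cell.
public class GridMap
{
    private readonly bool[,] walkable; // [row, col]
    private readonly float cellSize;

    public GridMap(bool[,] walkable, float cellSize)
    {
        this.walkable = walkable;
        this.cellSize = cellSize;
    }

    public int Rows => walkable.GetLength(0);
    public int Cols => walkable.GetLength(1);

    // World (x, z) -> (row, col); z maps to rows, x to columns.
    public (int row, int col) WorldToCell(Vector3 pos) =>
        ((int)Math.Floor(pos.Z / cellSize), (int)Math.Floor(pos.X / cellSize));

    // Centre of a cell in world space, on the ground (y = 0).
    public Vector3 CellToWorld(int row, int col) =>
        new Vector3((col + 0.5f) * cellSize, 0f, (row + 0.5f) * cellSize);

    // Walkable 4-connected neighbours inside the grid bounds.
    public List<(int row, int col)> Neighbours(int row, int col)
    {
        var result = new List<(int row, int col)>();
        int[] dr = { -1, 1, 0, 0 };
        int[] dc = { 0, 0, -1, 1 };
        for (int i = 0; i < 4; i++)
        {
            int r = row + dr[i], c = col + dc[i];
            if (r >= 0 && r < Rows && c >= 0 && c < Cols && walkable[r, c])
                result.Add((r, c));
        }
        return result;
    }
}
```

Neighbours() is the only query most graph searches need, so the path-finding algorithms later in this chapter can treat the grid as an implicit graph.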

Representing the world with points of visibility

Representing the world with a self-made navigation mesh

Finding your way out of a maze with DFS

Finding the shortest path in a grid with BFS

Finding the shortest path with Dijkstra

Finding the best-promising path with A*

Improving A* for memory – IDA*

Planning navigation in several frames – time-sliced search

Smoothing a path

3 Decision Making

In this chapter, we will cover the following recipes:

- Choosing through a decision tree

- Implementing a finite-state machine

- Improving FSMs: hierarchical finite-state machines

- Implementing behavior trees

- Working with fuzzy logic

- Making decisions with goal-oriented behaviors

- Implementing a blackboard architecture

- Experimenting with Unity's animation state machine

Introduction

Making decisions or changing the game flow according to the game's state could get really messy if we rely only on simple control structures. That's why we will learn different decision-making techniques that are flexible enough to adapt to different types of games, and robust enough to let us build modular decision-making systems.

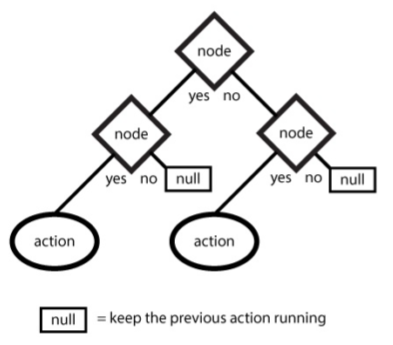

Choosing through a decision tree

Decision trees are one of the simplest mechanisms for tackling decision-making problems, because they are fast and easy to grasp and implement. As a consequence, they are among the most widely used techniques today, and they are also used extensively in other character-control areas such as animation.

    using UnityEngine;

    public class DecisionTreeNode : MonoBehaviour
    {
        // base node: decisions and actions override this
        public virtual DecisionTreeNode MakeDecision()
        {
            return null;
        }
    }

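    using UnityEngine;

    // a decision is a tree node, not a whole tree
    public class Decision : DecisionTreeNode
    {
        // branches may hold further decisions or actions
        public DecisionTreeNode nodeTrue;
        public DecisionTreeNode nodeFalse;

        // overridden by concrete decisions to pick a branch
        public virtual DecisionTreeNode GetBranch()
        {
            return null;
        }

        // choose a branch, then keep deciding recursively down that branch
        public override DecisionTreeNode MakeDecision()
        {
            DecisionTreeNode branch = GetBranch();
            return branch.MakeDecision();
        }
    }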

    using UnityEngine;

    public class Action : DecisionTreeNode
    {
        public bool activated = false;

        // a leaf is the result of the decision
        public override DecisionTreeNode MakeDecision()
        {
            return this;
        }

        public virtual void LateUpdate()
        {
            if (!activated)
            {
                return;
            }
            // Implement your behaviors here
        }
    }

    using UnityEngine;

    public class DecisionTree : DecisionTreeNode
    {
        public DecisionTreeNode root;
        private Action actionNew;
        private Action actionOld;

        public override DecisionTreeNode MakeDecision()
        {
            return root.MakeDecision();
        }

        void Update()
        {
            // deactivate the action chosen in the previous frame (none on the first frame)
            if (actionNew != null)
            {
                actionNew.activated = false;
            }
            actionOld = actionNew;
            actionNew = root.MakeDecision() as Action;
            // keep the previous action if the tree did not reach an Action leaf
            if (actionNew == null)
            {
                actionNew = actionOld;
            }
            // activate whichever action is current
            if (actionNew != null)
            {
                actionNew.activated = true;
            }
        }
    }

    using UnityEngine;

    public class DecisionBool : Decision
    {
        public bool valueDecision;
        public bool valueTest;

        // take the true branch when the tested value matches the expected one
        public override DecisionTreeNode GetBranch()
        {
            if (valueTest == valueDecision)
            {
                return nodeTrue;
            }
            return nodeFalse;
        }
    }

Decision nodes choose which path to take and call MakeDecision recursively on the chosen branch. It is worth mentioning that branches must be decisions, and leaves must be actions. Also, we should be careful not to create cycles within the tree; otherwise, the recursion never ends.
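The recursion described above is easy to check outside Unity. The sketch below is a plain C# version with no MonoBehaviour. DTNode, DTDecision and DTAction are hypothetical names, and each decision's test is a Func<bool> rather than a subclass such as DecisionBool.

```csharp
using System;

// Hypothetical plain-C# decision tree.
public abstract class DTNode
{
    public abstract DTAction MakeDecision();
}

// Leaf: the outcome of the decision.
public class DTAction : DTNode
{
    public readonly string Name;
    public DTAction(string name) { Name = name; }
    public override DTAction MakeDecision() => this;
}

// Branch: evaluate a test, then recurse into the chosen child.
public class DTDecision : DTNode
{
    private readonly Func<bool> test;
    private readonly DTNode nodeTrue, nodeFalse;

    public DTDecision(Func<bool> test, DTNode nodeTrue, DTNode nodeFalse)
    {
        this.test = test;
        this.nodeTrue = nodeTrue;
        this.nodeFalse = nodeFalse;
    }

    public override DTAction MakeDecision() =>
        (test() ? nodeTrue : nodeFalse).MakeDecision();
}
```

For example, a guard that patrols until it sees an enemy, and then attacks or flees depending on its health, is a two-level tree. Each call walks from the root to one leaf, so the cost is proportional to the tree's depth.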

Implementing a finite-state machine

Finite-state machines (FSMs) require a different way of thinking from the previous recipe. FSMs are great when our reasoning is more event-oriented, and we think in terms of holding a behavior until a condition is met, then changing to another.

    public class Condition
    {
        // overridden by concrete conditions
        public virtual bool Test()
        {
            return false;
        }
    }

    public class Transition
    {
        public Condition condition;
        public State target;
    }

    using UnityEngine;
    using System.Collections.Generic;

    public class State : MonoBehaviour
    {
        public List<Transition> transitions;

        public virtual void Awake()
        {
            transitions = new List<Transition>();
            // TO-DO
            // set up your transitions here
        }

        public virtual void OnEnable()
        {
            // TO-DO
            // develop the state's initialization here
        }

        public virtual void OnDisable()
        {
            // TO-DO
            // develop the state's finalization here
        }

        // after the frame's behavior has run, fire the first transition whose condition holds
        public void LateUpdate()
        {
            foreach (Transition t in transitions)
            {
                if (t.condition.Test())
                {
                    t.target.enabled = true;
                    this.enabled = false;
                    return;
                }
            }
        }
    }

    public class ConditionFloat : Condition
    {
        public float valueMin;
        public float valueMax;
        public float valueTest;

        // true when valueTest lies within [valueMin, valueMax]
        public override bool Test()
        {
            return valueMax >= valueTest && valueTest >= valueMin;
        }
    }

    public class ConditionAnd : Condition
    {
        public Condition conditionA;
        public Condition conditionB;

        // true only when both sub-conditions hold
        public override bool Test()
        {
            return conditionA.Test() && conditionB.Test();
        }
    }
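Stripped of Unity's enable/disable mechanics, the FSM update loop is small. The following plain C# sketch keeps the same logic as State.LateUpdate: the active state checks its transitions in order and hands control to the first target whose condition holds. FsmState and Fsm are hypothetical names, and conditions are Func<bool> delegates rather than Condition subclasses.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical plain-C# FSM.
public class FsmState
{
    public readonly string Name;
    public readonly List<(Func<bool> condition, FsmState target)> Transitions =
        new List<(Func<bool> condition, FsmState target)>();
    public FsmState(string name) { Name = name; }
}

public class Fsm
{
    public FsmState Current { get; private set; }
    public Fsm(FsmState initial) { Current = initial; }

    // At most one transition per tick, checked in insertion order.
    public void Tick()
    {
        foreach (var (condition, target) in Current.Transitions)
        {
            if (condition())
            {
                Current = target;
                return;
            }
        }
    }
}
```

For instance, a Patrol state can switch to Chase when a distance falls inside a range (the same test ConditionFloat performs) and switch back when it leaves it. Because the state holds until a condition fires, this matches the event-oriented style described above.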

Improving FSMs: hierarchical finite-state machines

Finite-state machines can be improved by adding layers, or hierarchies. The principles are the same, but states can have their own internal finite-state machine, which makes them more flexible and scalable.

The high-level state class allows us to activate the internal FSMs when it is enabled, and recursively disables its internal states when disabled.

    using UnityEngine;
    using System.Collections.Generic;

    public class StateHighLevel : State
    {
        public List<State> states;
        public State stateInitial;
        protected State stateCurrent;

        // entering the high-level state starts (or resumes) its internal FSM
        public override void OnEnable()
        {
            if (stateCurrent == null)
            {
                stateCurrent = stateInitial;
            }
            stateCurrent.enabled = true;
        }

        // leaving it switches off every internal state
        public override void OnDisable()
        {
            base.OnDisable();
            stateCurrent.enabled = false;
            foreach (State s in states)
            {
                s.enabled = false;
            }
        }
    }
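The layering idea can be sketched in plain C# as an FSM whose states may own an inner FSM. The outer transitions are checked first. If none fires, the active composite state ticks its inner machine. When a composite state is left and later re-entered, its inner machine resumes where it stopped, similar in spirit to StateHighLevel reusing stateCurrent. HState and HMachine are hypothetical names, and this is a sketch rather than the book's implementation.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical hierarchical FSM state: Inner is null for leaf states.
public class HState
{
    public readonly string Name;
    public readonly List<(Func<bool> cond, HState target)> Transitions =
        new List<(Func<bool> cond, HState target)>();
    public HMachine Inner;
    public HState(string name, HMachine inner = null) { Name = name; Inner = inner; }
}

public class HMachine
{
    public HState Current { get; private set; }
    public HMachine(HState initial) { Current = initial; }

    public void Tick()
    {
        // outer transitions take priority over anything happening inside
        foreach (var (cond, target) in Current.Transitions)
        {
            if (cond()) { Current = target; return; }
        }
        // no outer transition: let the active composite state run its inner FSM
        Current.Inner?.Tick();
    }

    // Fully qualified active state, e.g. "Combat/Reload".
    public string ActivePath() =>
        Current.Inner == null ? Current.Name : Current.Name + "/" + Current.Inner.ActivePath();
}
```

For example, a Combat state can contain Shoot and Reload sub-states, while the top level only needs to know when to switch between Patrol and Combat.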

Implementing behavior trees

Behavior trees can be seen as a synthesis of a number of other artificial intelligence techniques, such as finite-state machines, planning, and decision trees. In fact, they bear some resemblance to FSMs, but instead of states, we think in terms of actions spanned across a tree structure.

Behavior trees work in a similar fashion to decision trees; however, the leaf nodes are called tasks, and some branch nodes are not conditions but run a set of tasks in one of two ways: Selector and Sequence. Selectors run a set of tasks and return true as soon as one of their sub-tasks returns true. They can be seen as OR nodes. Sequences run a set of tasks and return true only when all of their sub-tasks return true. They can be seen as AND nodes.

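    using UnityEngine;
    using System.Collections;
    using System.Collections.Generic;

    public class Task : MonoBehaviour
    {
        public List<Task> children;
        protected bool result = false;
        protected bool isFinished = false;

        // lets parent tasks read a child's outcome
        public bool Result { get { return result; } }

        // store the outcome and mark the task as finished
        public virtual void SetResult(bool r)
        {
            result = r;
            isFinished = true;
        }

        public virtual IEnumerator Run()
        {
            SetResult(true);
            yield break;
        }

        public virtual IEnumerator RunTask()
        {
            yield return StartCoroutine(Run());
        }
    }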

    using UnityEngine;
    using System.Collections;

    public class ConditionBT : Task
    {
        public override IEnumerator Run()
        {
            isFinished = false;
            bool r = false;
            // implement your behaviour here
            // define result (r) whether true or false
            // ------
            SetResult(r);
            yield break;
        }
    }

    using UnityEngine;
    using System.Collections;

    public class ActionBT : Task
    {
        public override IEnumerator Run()
        {
            isFinished = false;
            // implement your behaviour here
            // ------
            // the base implementation reports success
            return base.Run();
        }
    }

    using UnityEngine;
    using System.Collections;

    public class Selector : Task
    {
        // OR node: run children in order and succeed on the first success
        public override IEnumerator RunTask()
        {
            isFinished = false;
            foreach (Task t in children)
            {
                yield return StartCoroutine(t.RunTask());
                if (t.Result)
                {
                    SetResult(true);
                    yield break;
                }
            }
            SetResult(false);
        }
    }

    using UnityEngine;
    using System.Collections;

    public class Sequence : Task
    {
        // AND node: run children in order and fail on the first failure
        public override IEnumerator RunTask()
        {
            isFinished = false;
            foreach (Task t in children)
            {
                yield return StartCoroutine(t.RunTask());
                if (!t.Result)
                {
                    SetResult(false);
                    yield break;
                }
            }
            SetResult(true);
        }
    }
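The OR/AND semantics of Selector and Sequence are easier to verify without coroutines. The following synchronous plain C# sketch uses the same short-circuit rules. BtNode, BtLeaf, BtSelector and BtSequence are hypothetical names, and leaves are Func<bool> delegates standing in for ConditionBT and ActionBT.

```csharp
using System;

// Hypothetical synchronous behavior-tree nodes.
public abstract class BtNode
{
    public abstract bool Run();
}

// Leaf task: a condition or an action that reports success or failure.
public class BtLeaf : BtNode
{
    private readonly Func<bool> body;
    public BtLeaf(Func<bool> body) { this.body = body; }
    public override bool Run() => body();
}

// OR: succeeds on the first child that succeeds.
public class BtSelector : BtNode
{
    private readonly BtNode[] children;
    public BtSelector(params BtNode[] children) { this.children = children; }
    public override bool Run()
    {
        foreach (var child in children)
            if (child.Run()) return true;
        return false;
    }
}

// AND: fails on the first child that fails.
public class BtSequence : BtNode
{
    private readonly BtNode[] children;
    public BtSequence(params BtNode[] children) { this.children = children; }
    public override bool Run()
    {
        foreach (var child in children)
            if (!child.Run()) return false;
        return true;
    }
}
```

A typical pattern is a Selector whose first child is a Sequence such as "door is open, then walk through", with a fallback action such as "open the door". Children after the first success or failure never run, which is exactly the short-circuiting the coroutine-based Selector and Sequence need.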

Working with fuzzy logic

There are times when we have to deal with gray areas, rather than binary values, to make decisions. Fuzzy logic is a set of mathematical techniques that help us with this task.

Imagine that we're developing an automated driver. A couple of available actions are steering and speed control, both of which have a range of degrees. Deciding how to take a turn, and at what speed, is what will make our driver different and possibly smarter. That's the type of gray area that fuzzy logic helps represent and handle.

This recipe requires a set of states indexed by consecutive integers. As this representation varies from game to game, we handle the raw input from such states along with their fuzzification, in order to have a good general-purpose fuzzy decision maker. Finally, the decision maker returns a set of fuzzy values representing the degree of membership of each state.

    using UnityEngine;

    public class MembershipFunction : MonoBehaviour
    {
        public int stateId;

        // degree of membership (0 to 1) of the raw input in this state
        public virtual float GetDOM(object input)
        {
            return 0f;
        }
    }

    using System.Collections.Generic;

    public class FuzzyRule
    {
        // premises, combined with AND
        public List<int> stateIds;
        public int conclusionStateId;
    }

    using UnityEngine;
    using System.Collections.Generic;

    public class FuzzyDecisionMaker : MonoBehaviour
    {
        public Dictionary<int, float> MakeDecision(object[] inputs,
            MembershipFunction[][] mfList, FuzzyRule[] rules)
        {
            Dictionary<int, float> inputDOM = new Dictionary<int, float>();
            Dictionary<int, float> outputDOM = new Dictionary<int, float>();

            // fuzzification; assumes row r of mfList holds the
            // membership functions that apply to inputs[r]
            for (int r = 0; r < mfList.Length; r++)
            {
                object input = inputs[r];
                foreach (MembershipFunction memberFunc in mfList[r])
                {
                    int mfId = memberFunc.stateId;
                    inputDOM[mfId] = memberFunc.GetDOM(input);
                    if (!outputDOM.ContainsKey(mfId))
                    {
                        outputDOM.Add(mfId, 0f);
                    }
                }
            }

            // rule evaluation: AND = minimum over the premises,
            // OR = maximum over rules sharing the same conclusion
            foreach (FuzzyRule rule in rules)
            {
                int outputId = rule.conclusionStateId;
                float min = 1f;
                foreach (int state in rule.stateIds)
                {
                    min = Mathf.Min(min, inputDOM[state]);
                }
                float best;
                outputDOM.TryGetValue(outputId, out best);
                outputDOM[outputId] = Mathf.Max(best, min);
            }
            return outputDOM;
        }
    }

    public class MFEnraged : MembershipFunction
    {
        // crisp membership: 1 when the input is 30 or below, 0 otherwise
        // (expects the input to be a boxed int)
        public override float GetDOM(object input)
        {
            if ((int)input <= 30)
            {
                return 1f;
            }
            return 0f;
        }
    }
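MFEnraged is a crisp (all-or-nothing) membership function. Graded memberships are what let fuzzy logic handle gray areas. The plain C# sketch below pairs a linear ramp membership with the standard min/max rule evaluation. The ramp shape, FuzzySketch and its method names, and the state ids used in the usage note are assumptions for illustration, not the book's code.

```csharp
using System;
using System.Collections.Generic;
using System.Linq;

// Hypothetical fuzzification and rule evaluation over integer state ids.
public static class FuzzySketch
{
    // DOM moves linearly from 0 at 'start' to 1 at 'full'; assumes start != full.
    public static float Ramp(float x, float start, float full)
    {
        float t = (x - start) / (full - start);
        return Math.Max(0f, Math.Min(1f, t));
    }

    // AND: a rule fires with the minimum DOM of its premises.
    // OR: each conclusion keeps the strongest rule that reaches it.
    public static Dictionary<int, float> Evaluate(
        Dictionary<int, float> inputDom,
        IEnumerable<(int[] premises, int conclusion)> rules)
    {
        var output = new Dictionary<int, float>();
        foreach (var (premises, conclusion) in rules)
        {
            float strength = premises.Min(id => inputDom[id]);
            output.TryGetValue(conclusion, out float current); // 0 if absent
            output[conclusion] = Math.Max(current, strength);
        }
        return output;
    }
}
```

With hypothetical states 0 = "low health", 1 = "enemy near", 2 = "flee" and 3 = "fight", a health of 25 on a ramp from 50 down to 0 gives state 0 a DOM of 0.5. A distance of 4 on a ramp from 10 down to 0 gives state 1 a DOM of 0.6. The rule "low health AND enemy near then flee" therefore yields 0.5 for flee.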

Making decisions with goal-oriented behaviors

Goal-oriented behaviors are a set of techniques aimed at giving agents not only a sense of intelligence, but also a sense of free will, once a goal is defined and a set of rules to choose from is given.

Imagine we're developing a trooper agent that not only needs to capture the flag (the main goal), but also has to take care of its own health and ammo (inner goals that serve the main one). One way of implementing this is to use a general-purpose algorithm for handling goals, so the agent develops something similar to free will.

    public class GoalGOB
    {
        public string name;
        public float value;
        public float change;

        // squaring makes larger goal values weigh disproportionately more
        public virtual float GetDiscontentment(float newValue)
        {
            return newValue * newValue;
        }

        // rate at which the goal's value changes over time
        public virtual float GetChange()
        {
            return 0f;
        }
    }

    using UnityEngine;

    public class ActionGOB : MonoBehaviour
    {
        // how much this action changes the given goal's value
        public virtual float GetGoalChange(GoalGOB goal)
        {
            return 0f;
        }

        // how long the action takes to execute
        public virtual float GetDuration()
        {
            return 0f;
        }
    }

    using UnityEngine;

    public class ActionChooser : MonoBehaviour
    {
        // pick the action that leaves the goals with the lowest total discontentment
        public ActionGOB Choose(ActionGOB[] actions, GoalGOB[] goals)
        {
            ActionGOB bestAction = actions[0];
            float bestValue = CalculateDiscontentment(actions[0], goals);
            float value;
            foreach (ActionGOB action in actions)
            {
                value = CalculateDiscontentment(action, goals);
                if (value < bestValue)
                {
                    bestValue = value;
                    bestAction = action;
                }
            }
            return bestAction;
        }

        public float CalculateDiscontentment(ActionGOB action, GoalGOB[] goals)
        {
            float discontentment = 0;
            foreach (GoalGOB goal in goals)
            {
                // immediate effect of the action plus the drift during its duration
                float newValue = goal.value + action.GetGoalChange(goal);
                newValue += action.GetDuration() * goal.GetChange();
                discontentment += goal.GetDiscontentment(newValue);
            }
            return discontentment;
        }
    }

The discontentment functions help avoid unintended actions by measuring how much each goal's value changes because of an action and because of the time the action takes to execute. The chooser then picks the most promising action, that is, the one with the minimum impact (discontentment).
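To see why duration matters, here is a plain C# sketch of the same computation with concrete numbers. For each goal, the new value is the current value, plus the action's immediate change, plus the duration multiplied by the goal's rate of change. The squared values are summed, and the action with the lowest total wins. GobGoal, GobAction, GobChooser and the goal/action names are hypothetical. The dictionary of per-goal changes replaces the virtual GetGoalChange.

```csharp
using System.Collections.Generic;

// Hypothetical plain-C# goal-oriented behavior.
public class GobGoal
{
    public string Name;
    public float Value;        // current insistence; higher = more urgent
    public float ChangePerSec; // how fast the goal grows while time passes
}

public class GobAction
{
    public string Name;
    public float Duration;
    public Dictionary<string, float> GoalChange = new Dictionary<string, float>();
}

public static class GobChooser
{
    public static float Discontentment(GobAction action, IList<GobGoal> goals)
    {
        float total = 0f;
        foreach (var goal in goals)
        {
            action.GoalChange.TryGetValue(goal.Name, out float delta); // 0 if unaffected
            float newValue = goal.Value + delta + action.Duration * goal.ChangePerSec;
            total += newValue * newValue; // same squared measure as GoalGOB
        }
        return total;
    }

    public static GobAction Choose(IList<GobAction> actions, IList<GobGoal> goals)
    {
        GobAction best = actions[0];
        float bestValue = Discontentment(best, goals);
        foreach (var action in actions)
        {
            float value = Discontentment(action, goals);
            if (value < bestValue) { bestValue = value; best = action; }
        }
        return best;
    }
}
```

Consider an "Ammo" goal at 4 (static) and a "Heal" goal at 3 that grows by 0.5 per second. A 2-second Reload action that cuts Ammo by 4 scores 0² + 4² = 16. A 1-second Medkit action that cuts Heal by 3 scores 4² + 0.5² = 16.25. Reload is therefore chosen, even though Heal keeps growing while it runs.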

Implementing a blackboard architecture

Experimenting with Unity's animation state machine

4 The New NavMesh API

In this chapter, we will learn how to make use of the new NavMesh API through the following recipes:

- Setting up the NavMesh building components

- Creating and managing NavMesh for multiple types of agents

- Creating and updating NavMesh data at runtime

- Controlling the lifetime of the NavMesh instance

- Connecting multiple instances of NavMesh

- Creating dynamic NavMeshes with obstacles

- Implementing some behaviors using the NavMesh API

Introduction

Setting up the NavMesh building components

Creating and managing NavMesh for multiple types of agents

Creating and updating NavMesh data at runtime

Controlling the lifetime of the NavMesh instance

Connecting multiple instances of NavMesh

Creating dynamic NavMeshes with obstacles

Implementing some behaviors using the NavMesh API

5 Coordination and Tactics

In this chapter, we will learn techniques for coordination and devising tactics:

- Handling formations

- Extending A* for coordination: A*mbush

- Introducing waypoints by making a manual selector

- Analyzing waypoints by height

- Analyzing waypoints by cover and visibility

- Creating waypoints automatically

- Exemplifying waypoints for decision making

- Implementing influence maps

- Improving influence with map flooding

- Improving influence with convolution filters

- Building a fighting circle

Introduction

Handling formations

Extending A* for coordination – A*mbush

Introducing waypoints by making a manual selector

Analyzing waypoints by height

Analyzing waypoints by cover and visibility

Creating waypoints automatically

Exemplifying waypoints for decision making

Implementing influence maps

Improving influence with map flooding

Improving influence with convolution filters

Building a fighting circle

6 Agent Awareness

In this chapter, we will learn some algorithm recipes for simulating senses and agent awareness:

- The seeing function using a collider-based system

- The hearing function using a collider-based system

- The smelling function using a collider-based system

- The seeing function using a graph-based system

- The hearing function using a graph-based system

- The smelling function using a graph-based system

- Creating awareness in a stealth game

Introduction

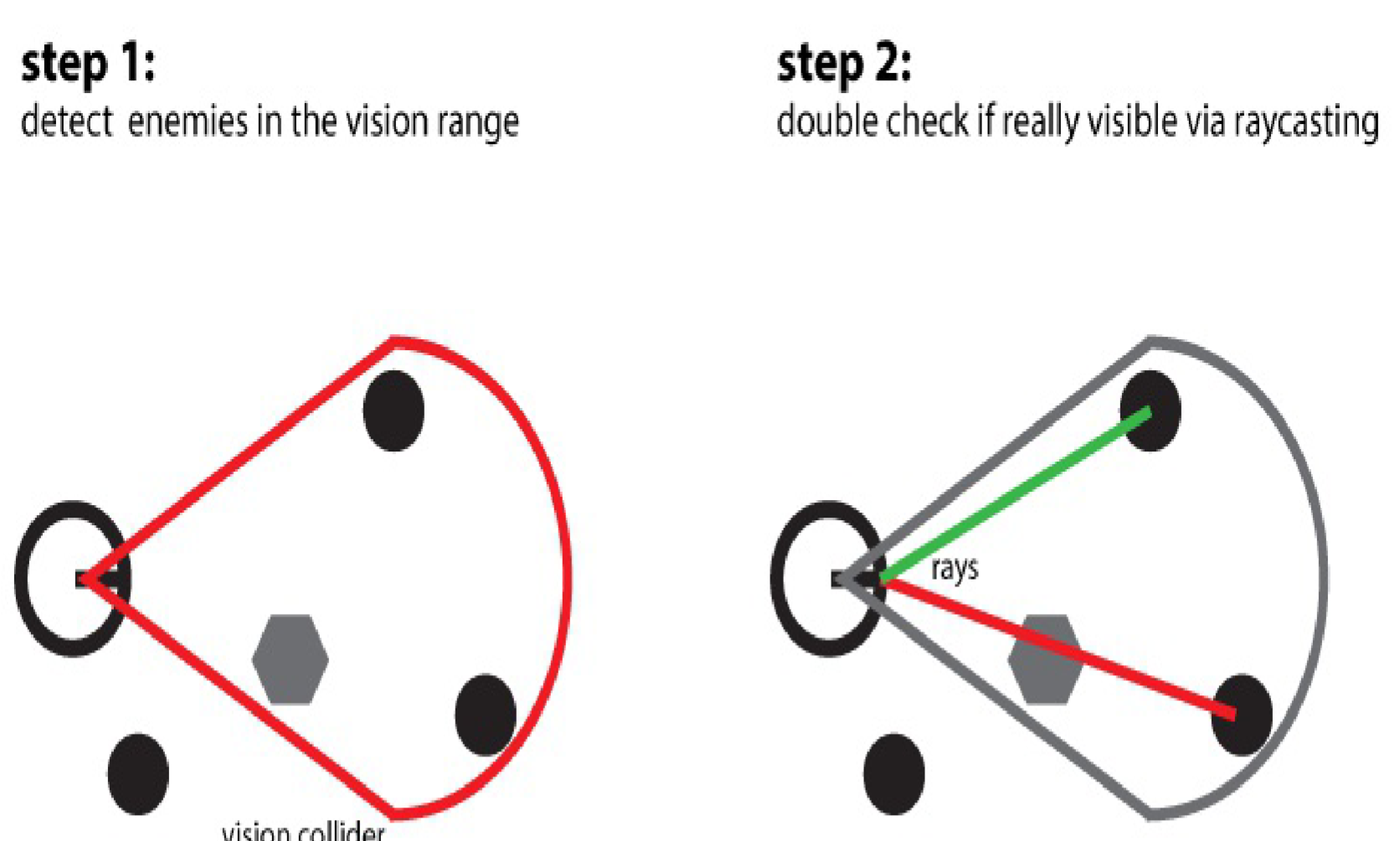

The seeing function using a collider-based system

    using UnityEngine;

    public class Visor : MonoBehaviour
    {
        public string tagWall = "Wall";
        public string tagTarget = "Enemy";
        public GameObject agent;

        // Use this for initialization
        void Start()
        {
            if (agent == null)
                agent = gameObject;
        }

        public void OnTriggerStay(Collider coll)
        {
            string tag = coll.gameObject.tag;
            if (!tag.Equals(tagTarget))
                return;
            GameObject target = coll.gameObject;
            Vector3 agentPos = agent.transform.position;
            Vector3 targetPos = target.transform.position;
            Vector3 direction = targetPos - agentPos;
            float length = direction.magnitude;
            direction.Normalize();
            // cast a ray from the agent to the target and look for walls in between
            Ray ray = new Ray(agentPos, direction);
            RaycastHit[] hits = Physics.RaycastAll(ray, length);
            foreach (RaycastHit hit in hits)
            {
                tag = hit.collider.gameObject.tag;
                if (tag.Equals(tagWall))
                    return;
            }
            // TODO
            // target is visible
            // code your behaviour below
        }
    }

The physics engine checks the trigger collider on every physics update and calls OnTriggerStay for each collider that stays inside it. This lets us leverage the optimizations in Unity's scene graph and engine, and focus only on how to handle the valid collisions.

Once an object with the target tag is inside the vision range represented by the collider, we cast a ray to check whether the target is really visible, or whether there is a wall between the target and the agent.
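The two-stage test above can be checked outside Unity. In this sketch, a plain distance check stands in for the trigger collider. A segment-intersection test against walls, modeled as 2D line segments on the ground plane, stands in for the raycast. The wall representation and the names VisionSketch and CanSee are assumptions of this example. It uses System.Numerics.Vector2 rather than Unity types.

```csharp
using System.Collections.Generic;
using System.Numerics;

// Hypothetical 2D line-of-sight check on the ground plane.
public static class VisionSketch
{
    // 2D cross product of (a - o) and (b - o): sign tells which side b is on.
    private static float Cross(Vector2 o, Vector2 a, Vector2 b) =>
        (a.X - o.X) * (b.Y - o.Y) - (a.Y - o.Y) * (b.X - o.X);

    // True if segments p1-p2 and q1-q2 strictly cross (touching does not count).
    private static bool SegmentsCross(Vector2 p1, Vector2 p2, Vector2 q1, Vector2 q2)
    {
        float d1 = Cross(q1, q2, p1), d2 = Cross(q1, q2, p2);
        float d3 = Cross(p1, p2, q1), d4 = Cross(p1, p2, q2);
        return ((d1 > 0 && d2 < 0) || (d1 < 0 && d2 > 0))
            && ((d3 > 0 && d4 < 0) || (d3 < 0 && d4 > 0));
    }

    public static bool CanSee(Vector2 agent, Vector2 target, float range,
                              IEnumerable<(Vector2 a, Vector2 b)> walls)
    {
        // stage 1: outside the vision volume (the collider's job)
        if (Vector2.Distance(agent, target) > range) return false;
        // stage 2: any wall crossing the line of sight blocks it (the raycast's job)
        foreach (var (a, b) in walls)
            if (SegmentsCross(agent, target, a, b)) return false;
        return true;
    }
}
```

The cheap range test runs first so the more expensive occlusion test only runs for candidates. The Visor component has the same structure, with Unity's physics engine doing both jobs.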

The hearing function using a collider-based system

The smelling function using a collider-based system

The seeing function using a graph-based system

The hearing function using a graph-based system

The smelling function using a graph-based system

Creating awareness in a stealth game

7 Board Games and Applied Search AI

In this chapter, you will learn a family of algorithms for developing board game AI:

- Working with the game-tree class

- Implementing Minimax

- Implementing Negamax

- Implementing AB Negamax

- Implementing NegaScout

- Implementing a Tic-Tac-Toe rival

- Implementing a Checkers rival

- Implementing Rock-Paper-Scissors AI with UCB1

- Implementing Regret Matching

Introduction

Working with the game-tree class

Implementing Minimax

Implementing Negamax

Implementing AB Negamax

Implementing NegaScout

Implementing a Tic-Tac-Toe rival

Implementing a Checkers rival

Implementing Rock-Paper-Scissors AI with UCB1

Implementing regret matching

8 Learning Techniques

In this chapter, we will explore the world of machine learning through the following topics:

- Predicting actions with an N-Gram predictor

- Improving the predictor – Hierarchical N-Gram

- Learning to use a Naïve Bayes classifier

- Implementing reinforcement learning

- Implementing artificial neural networks

Introduction

Predicting actions with an N-Gram predictor

Improving the predictor – Hierarchical N-Gram

Learning to use a Naïve Bayes classifier

Implementing reinforcement learning

Implementing artificial neural networks

9 Procedural Content Generation

In this chapter, we will learn different techniques for procedural content generation with the following recipes:

- Creating mazes with Depth-First Search

- Implementing the constructive algorithm for dungeons and islands

- Generating landscapes

- Using N-Grams for content generation

- Generating enemies with the evolutionary algorithm

Introduction

Creating mazes with Depth-First Search

Implementing the constructive algorithm for dungeons and islands

Generating landscapes

Using N-Grams for content generation

Generating enemies with the evolutionary algorithm

10 Miscellaneous

In this chapter, you will learn different techniques for:

- Creating and managing Scriptable Objects

- Handling random numbers better

- Building an air-hockey rival

- Implementing an architecture for racing games

- Managing race difficulty using a rubber-band system

Introduction

Creating and managing Scriptable Objects

Handling random numbers better

Building an air-hockey rival

Implementing an architecture for racing games

Managing race difficulty using a rubber-band system