Official documentation: https://www.elastic.co/guide/en/cloud-on-k8s/current/k8s-quickstart.html

YAML manifest: https://download.elastic.co/downloads/eck/1.6.0/all-in-one.yaml

Prerequisites

1. Kubernetes 1.16-1.20

2. Elastic Stack component versions:

- Elasticsearch, Kibana, APM Server: 6.8+, 7.1+

- Enterprise Search: 7.7+

- Beats: 7.0+

- Elastic Agent: 7.10+

- Elastic Maps Server: 7.11+
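To check a planned setup against these requirements before installing, the logic can be sketched in Python. This is a hypothetical helper, not part of ECK: the names `MINIMUMS`, `k8s_supported` and `component_supported` are illustrative, the table is copied from the list above, and "X.Y+" is assumed to mean "X.Y or later within the same major version".

```python
# Hypothetical pre-install check against the ECK 1.6 requirements listed above.

# Minimum (major, minor) per component; a component may list one entry
# per supported major line, e.g. Elasticsearch accepts 6.8+ or 7.1+.
MINIMUMS = {
    "elasticsearch": [(6, 8), (7, 1)],
    "kibana": [(6, 8), (7, 1)],
    "apm-server": [(6, 8), (7, 1)],
    "enterprise-search": [(7, 7)],
    "beats": [(7, 0)],
    "elastic-agent": [(7, 10)],
    "elastic-maps-server": [(7, 11)],
}


def major_minor(version: str) -> tuple:
    """'7.13.1' -> (7, 13); a leading 'v' (as in 'v1.20.4') is ignored."""
    parts = version.lstrip("v").split(".")
    return int(parts[0]), int(parts[1])


def k8s_supported(version: str) -> bool:
    """True if the Kubernetes version falls within 1.16-1.20."""
    major, minor = major_minor(version)
    return major == 1 and 16 <= minor <= 20


def component_supported(component: str, version: str) -> bool:
    """Assumes 'X.Y+' means X.Y or later within the same major version."""
    major, minor = major_minor(version)
    return any(major == m and minor >= n for m, n in MINIMUMS[component])


if __name__ == "__main__":
    print(k8s_supported("v1.20.4"))                        # True
    print(component_supported("elasticsearch", "7.13.1"))  # True
    print(component_supported("elasticsearch", "7.0.1"))   # False
```

The same-major assumption is deliberately conservative: a version from a later major line is reported as unsupported rather than silently accepted.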

Installation steps

1. Install ECK in Kubernetes
2. Deploy an Elasticsearch cluster with ECK
3. Deploy Kibana
4. Upgrade the deployment
5. Storage
6. Checks

Installing ECK in Kubernetes

1. Install the custom resource definitions and the operator with its RBAC rules:

kubectl create -f https://download.elastic.co/downloads/eck/1.6.0/all-in-one.yaml

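The output contains two deprecation warnings: the manifest still uses the v1beta1 CustomResourceDefinition and ValidatingWebhookConfiguration APIs, which are deprecated since Kubernetes 1.16 and unavailable from 1.22. On the supported versions 1.16-1.20 the resources are created anyway: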

namespace/elastic-system created
serviceaccount/elastic-operator created
secret/elastic-webhook-server-cert created
configmap/elastic-operator created
Warning: apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
customresourcedefinition.apiextensions.k8s.io/agents.agent.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/apmservers.apm.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/beats.beat.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/elasticmapsservers.maps.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/elasticsearches.elasticsearch.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/enterprisesearches.enterprisesearch.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/kibanas.kibana.k8s.elastic.co created
clusterrole.rbac.authorization.k8s.io/elastic-operator created
clusterrole.rbac.authorization.k8s.io/elastic-operator-view created
clusterrole.rbac.authorization.k8s.io/elastic-operator-edit created
clusterrolebinding.rbac.authorization.k8s.io/elastic-operator created
service/elastic-webhook-server created
statefulset.apps/elastic-operator created
Warning: admissionregistration.k8s.io/v1beta1 ValidatingWebhookConfiguration is deprecated in v1.16+, unavailable in v1.22+; use admissionregistration.k8s.io/v1 ValidatingWebhookConfiguration
validatingwebhookconfiguration.admissionregistration.k8s.io/elastic-webhook.k8s.elastic.co created
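Each line of the `kubectl create` output follows the pattern `kind.group/name created`, with `Warning:` lines interleaved. A minimal sketch, assuming the output has been captured into a string, that counts created objects per kind and collects the warnings (the function name `summarize_create_output` and the `SAMPLE` excerpt are illustrative only):

```python
from collections import Counter


def summarize_create_output(output: str):
    """Count 'created' objects per kind and collect 'Warning:' lines.

    Hypothetical helper; expects lines such as
    'customresourcedefinition.apiextensions.k8s.io/kibanas.kibana.k8s.elastic.co created'.
    """
    created = Counter()
    warnings = []
    for raw in output.splitlines():
        line = raw.strip()
        if not line:
            continue  # skip blank lines
        if line.startswith("Warning:"):
            warnings.append(line[len("Warning:"):].strip())
            continue
        ref, _, action = line.rpartition(" ")
        if action != "created":
            continue  # ignore anything that is not a creation message
        resource = ref.split("/", 1)[0]          # 'customresourcedefinition.apiextensions.k8s.io'
        created[resource.split(".", 1)[0]] += 1  # 'customresourcedefinition'
    return created, warnings


# Excerpt of the output above.
SAMPLE = """\
namespace/elastic-system created
Warning: apiextensions.k8s.io/v1beta1 CustomResourceDefinition is deprecated in v1.16+, unavailable in v1.22+; use apiextensions.k8s.io/v1 CustomResourceDefinition
customresourcedefinition.apiextensions.k8s.io/agents.agent.k8s.elastic.co created
customresourcedefinition.apiextensions.k8s.io/kibanas.kibana.k8s.elastic.co created
statefulset.apps/elastic-operator created
"""

if __name__ == "__main__":
    counts, warns = summarize_create_output(SAMPLE)
    print(dict(counts))
    print(len(warns))
```

Run against the full output shown above, it would report 7 custom resource definitions and collect the 2 deprecation warnings.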

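2. Monitor the operator logs:

kubectl -n elastic-system logs -f statefulset.apps/elastic-operator

The same deprecation warnings also appear in the log.

Deploying an Elasticsearch cluster with ECK

Note: if no node in your Kubernetes cluster has at least 2GiB of free memory, the Pod will stay in the Pending state.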

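The following example creates an Elasticsearch cluster with a single Pod.

By default the image is pulled from docker.elastic.co/elasticsearch/elasticsearch:7.13.1 (the tag follows spec.version). If that registry cannot be reached, pull the image from Docker Hub first and retag it on every node that may run the Pod, using the same version as spec.version:

docker pull elasticsearch:7.13.1

docker tag elasticsearch:7.13.1 docker.elastic.co/elasticsearch/elasticsearch:7.13.1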

# cat es.yaml

apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: quickstart
spec:
  version: 7.13.1
  nodeSets:
  - name: default
    count: 1
    config:
      node.store.allow_mmap: false

# kubectl create -f es.yaml

elasticsearch.elasticsearch.k8s.elastic.co/quickstart created
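The es.yaml manifest can also be generated programmatically. The sketch below uses a hypothetical helper, `elasticsearch_manifest`, that builds the same object as a Python dict and prints it as JSON; kubectl accepts JSON manifests as well as YAML, so the output could be saved to a file and passed to `kubectl create -f`. The setting `node.store.allow_mmap: false` disables memory mapping so that the `vm.max_map_count` kernel setting does not have to be raised; the ECK documentation notes that it has performance implications for production workloads.

```python
import json


def elasticsearch_manifest(name: str, version: str, count: int) -> dict:
    """Build the same Elasticsearch object as es.yaml (hypothetical helper)."""
    return {
        "apiVersion": "elasticsearch.k8s.elastic.co/v1",
        "kind": "Elasticsearch",
        "metadata": {"name": name},
        "spec": {
            "version": version,
            "nodeSets": [
                {
                    "name": "default",
                    "count": count,
                    # Disables mmap so vm.max_map_count need not be raised;
                    # check the ECK docs before using this in production.
                    "config": {"node.store.allow_mmap": False},
                }
            ],
        },
    }


if __name__ == "__main__":
    # e.g. python es_manifest.py > es.json && kubectl create -f es.json
    print(json.dumps(elasticsearch_manifest("quickstart", "7.13.1", 1), indent=2))
```

The script name es_manifest.py in the comment is only an example.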

Monitor cluster health and creation progress:

kubectl get elasticsearch

NAME         HEALTH   NODES   VERSION   PHASE   AGE
quickstart   green    1       7.12.1    Ready   5m33s

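When the cluster is first created, there is no HEALTH status and PHASE is empty. After a while, PHASE turns into Ready and HEALTH becomes green.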
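Because HEALTH and PHASE are empty while the cluster is being created, splitting the table on whitespace would shift the remaining values into the wrong columns. A sketch (hypothetical helpers `parse_kubectl_table` and `cluster_ready`) that slices each row at the offsets of the header titles instead, assuming kubectl's default left-aligned table layout:

```python
import re


def parse_kubectl_table(output: str) -> list:
    """Parse kubectl's default table output into one dict per row.

    Columns are sliced at the offsets of the header titles, so empty cells
    (e.g. HEALTH and PHASE while the cluster is still being created) stay
    empty instead of shifting the following values left.
    """
    lines = [line for line in output.splitlines() if line.strip()]
    if not lines:
        return []
    header = lines[0]
    starts = [m.start() for m in re.finditer(r"\S+", header)]
    names = header.split()
    rows = []
    for line in lines[1:]:
        row = {}
        for i, name in enumerate(names):
            end = starts[i + 1] if i + 1 < len(starts) else None
            row[name] = line[starts[i]:end].strip()
        rows.append(row)
    return rows


def cluster_ready(output: str, name: str) -> bool:
    """True once the named cluster reports HEALTH green and PHASE Ready."""
    for row in parse_kubectl_table(output):
        if row.get("NAME") == name:
            return row.get("HEALTH") == "green" and row.get("PHASE") == "Ready"
    return False
```

The same parser works for the `kubectl get pods` and `kubectl get service` tables in this walkthrough. Requesting kubectl's `-o json` output avoids text parsing altogether; the column parser is only a fallback.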

You can see that one Pod is in the process of being started:

kubectl get pods --selector='elasticsearch.k8s.elastic.co/cluster-name=quickstart'

NAME                      READY   STATUS    RESTARTS   AGE
quickstart-es-default-0   1/1     Running   0          5m54s

View the Pod logs:

kubectl logs -f quickstart-es-default-0

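Accessing Elasticsearch

Disabling certificate verification with the -k flag, as the curl commands below do, is not recommended and should only be used for testing.

ECK creates a ClusterIP Service for the cluster automatically: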

kubectl get service quickstart-es-http

NAME                 TYPE        CLUSTER-IP     EXTERNAL-IP   PORT(S)    AGE
quickstart-es-http   ClusterIP   10.3.255.226   <none>        9200/TCP   2m52s

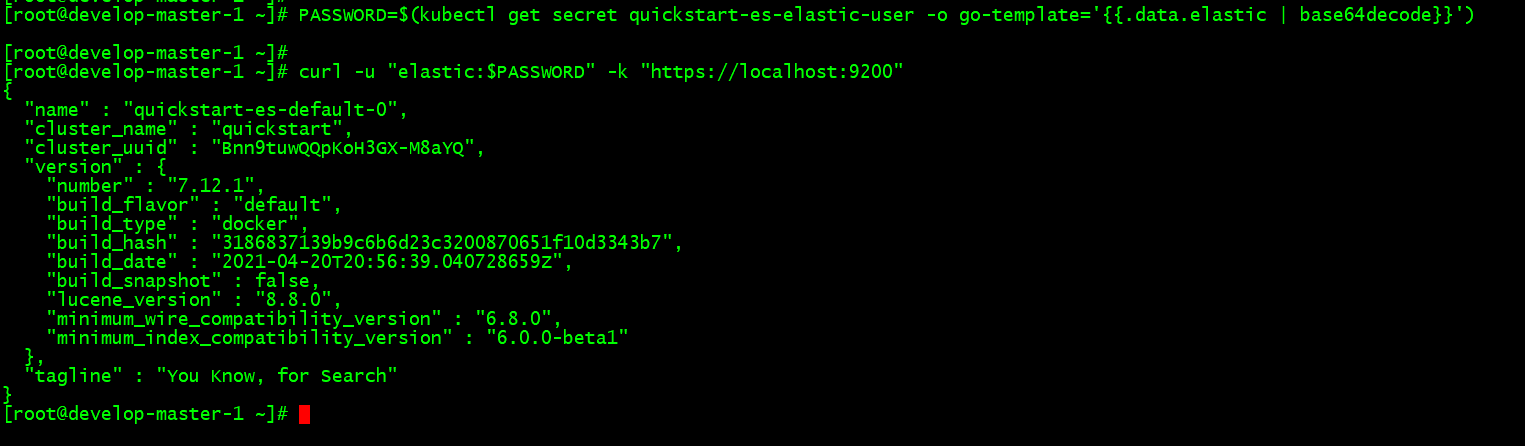

# Get the password; the default user name is elastic

PASSWORD=$(kubectl get secret quickstart-es-elastic-user -o go-template='{{.data.elastic | base64decode}}')

# Access from inside the Kubernetes cluster

curl -u "elastic:$PASSWORD" -k "https://quickstart-es-http:9200"

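# Access from the host: first forward the port in a separate terminal, then query localhost

kubectl port-forward service/quickstart-es-http 9200

curl -u "elastic:$PASSWORD" -k "https://localhost:9200"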
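`curl -u "elastic:$PASSWORD"` sends HTTP Basic authentication, i.e. an `Authorization: Basic` header carrying base64("user:password"), and the password read with `base64decode` above is stored base64-encoded in the Secret's `data` field. A sketch of both steps with Python's standard `base64` module; the function names are hypothetical and `changeme` is a placeholder password, not a value ECK generates.

```python
import base64


def decode_secret_value(encoded: str) -> str:
    """Decode one value from a Secret's data field (what base64decode does)."""
    return base64.b64decode(encoded).decode("utf-8")


def basic_auth_header(user: str, password: str) -> dict:
    """Build the header curl sends for -u user:password."""
    token = base64.b64encode(f"{user}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


if __name__ == "__main__":
    # "Y2hhbmdlbWU=" is base64 for the placeholder password "changeme".
    password = decode_secret_value("Y2hhbmdlbWU=")
    print(basic_auth_header("elastic", password))
```

Base64 is an encoding, not encryption, so anyone who can read the Secret can recover the password.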

Output from a cluster of three Pods (the number of Pods can be changed freely, see the upgrade section):

# curl -u "elastic:$PASSWORD" -k "https://localhost:9200/_cat/nodes"

10.0.0.137 15 63 59 6.24 7.62 6.57 cdfhilmrstw - quickstart-es-default-2
10.0.1.219 30 64 46 4.80 3.21 2.53 cdfhilmrstw * quickstart-es-default-0
10.0.2.193 55 63 44 1.97 2.50 2.30 cdfhilmrstw - quickstart-es-default-1
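In the `_cat/nodes` output, the second-to-last column marks the elected master with `*` (other nodes show `-`) and the last column is the node name. A sketch that picks the elected master from that text, assuming the default columns without the `?v` header line and node names without spaces (the helper names are hypothetical):

```python
def parse_cat_nodes(cat_nodes: str) -> list:
    """Split _cat/nodes output (default columns, no ?v header) into rows.

    The last two columns are the master marker ('*' = elected master,
    '-' = not master) and the node name.
    """
    rows = []
    for line in cat_nodes.splitlines():
        fields = line.split()
        if len(fields) < 3:
            continue  # skip blank or truncated lines
        rows.append({"ip": fields[0], "master": fields[-2] == "*", "name": fields[-1]})
    return rows


def elected_master(cat_nodes: str):
    """Name of the node marked '*', or None if no such line is found."""
    for row in parse_cat_nodes(cat_nodes):
        if row["master"]:
            return row["name"]
    return None
```

For the three-node output above this returns quickstart-es-default-0. Adding `?format=json` to the `_cat/nodes` request returns the same data as JSON and avoids text parsing.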

Deploying Kibana

# cat kibana.yaml

apiVersion: kibana.k8s.elastic.co/v1
kind: Kibana
metadata:
  name: quickstart
spec:
  version: 7.13.1
  count: 1
  elasticsearchRef:
    name: quickstart

# kubectl create -f kibana.yaml

kibana.kibana.k8s.elastic.co/quickstart created

Monitor Kibana health and creation progress:

# kubectl get pod --selector='kibana.k8s.elastic.co/name=quickstart'

NAME                             READY   STATUS    RESTARTS   AGE
quickstart-kb-5f844868fb-lrn2f   1/1     Running   1          4m26s

Accessing Kibana

# kubectl get service quickstart-kb-http

NAME                 TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)    AGE
quickstart-kb-http   ClusterIP   10.3.255.47   <none>        5601/TCP   5m11s

# Access from the local machine: forward the port, then open https://localhost:5601 in a browser

# kubectl port-forward service/quickstart-kb-http 5601

Forwarding from 127.0.0.1:5601 -> 5601
Forwarding from [::1]:5601 -> 5601

# Get the password of the default user elastic

# kubectl get secret quickstart-es-elastic-user -o=jsonpath='{.data.elastic}' | base64 --decode; echo

# Another way to access Kibana

By default, ECK creates a Service named quickstart-kb-http of type ClusterIP. You can change its type to NodePort and then reach Kibana at https://<node IP>:<NodePort>.
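Because the operator manages the quickstart-kb-http Service, a manual edit to it may be overwritten when ECK reconciles the Kibana resource. The Kibana resource also accepts the Service type under `spec.http.service.spec.type`, which keeps the change in the desired state. A sketch that builds the kibana.yaml object from above with that override added; the helper name `kibana_manifest` is hypothetical and NodePort is simply the value this section uses.

```python
import json


def kibana_manifest(name: str, version: str, es_name: str, service_type=None) -> dict:
    """Build the kibana.yaml object; optionally override the HTTP Service type.

    Hypothetical helper. elasticsearchRef points Kibana at the Elasticsearch
    resource named es_name in the same namespace.
    """
    spec = {
        "version": version,
        "count": 1,
        "elasticsearchRef": {"name": es_name},
    }
    if service_type is not None:
        # Declared here, the type becomes part of the state ECK reconciles.
        spec["http"] = {"service": {"spec": {"type": service_type}}}
    return {
        "apiVersion": "kibana.k8s.elastic.co/v1",
        "kind": "Kibana",
        "metadata": {"name": name},
        "spec": spec,
    }


if __name__ == "__main__":
    print(json.dumps(kibana_manifest("quickstart", "7.13.1", "quickstart", "NodePort"), indent=2))
```

Without the `service_type` argument the result matches kibana.yaml exactly.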

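Upgrading the deployment

First make sure the Kubernetes cluster has enough resources to accommodate the changes (extra storage space, sufficient memory and CPU to temporarily start new Pods, and so on).

The following example increases the number of nodes in the Elasticsearch cluster from 1 to 3. Edit count in es.yaml and apply the change, for example with kubectl apply -f es.yaml: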

# cat es.yaml

apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: quickstart
spec:
  version: 7.13.1
  nodeSets:
  - name: default
    count: 3
    config:
      node.store.allow_mmap: false
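The same change can be sent with `kubectl patch elasticsearch quickstart --type merge -p '<patch>'` instead of editing the file (custom resources do not support kubectl's default strategic merge, hence `--type merge`). A JSON merge patch (RFC 7386) replaces arrays wholesale, so the patch must carry the complete `nodeSets` list, not just the new count. A sketch of building such a patch; the helper name `scale_patch` is hypothetical.

```python
import json


def scale_patch(node_sets: list, name: str, count: int) -> str:
    """Return a JSON merge patch that sets the count of one nodeSet.

    Arrays are replaced wholesale by a JSON merge patch, so the patch
    carries every nodeSet with all of its fields, not just the changed one.
    """
    patched = []
    found = False
    for ns in node_sets:
        ns = dict(ns)  # shallow copy so the caller's list is left untouched
        if ns["name"] == name:
            ns["count"] = count
            found = True
        patched.append(ns)
    if not found:
        raise KeyError(f"no nodeSet named {name!r}")
    return json.dumps({"spec": {"nodeSets": patched}})


if __name__ == "__main__":
    current = [{"name": "default", "count": 1, "config": {"node.store.allow_mmap": False}}]
    print(scale_patch(current, "default", 3))
```

Leaving out `config` from the patch would drop it from the nodeSet, which is why the helper copies each existing entry instead of sending only `count`.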

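Storage

By default, the operator creates a PersistentVolumeClaim with a capacity of 1Gi for each Pod in the Elasticsearch cluster, to prevent data loss if a Pod is accidentally deleted. For production workloads, define your own volume claim template with the desired storage capacity and, optionally, the Kubernetes storage class to associate with the persistent volume. The name of the volume claim must always be elasticsearch-data.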

spec:
  nodeSets:
  - name: default
    count: 3
    volumeClaimTemplates:
    - metadata:
        name: elasticsearch-data
      spec:
        accessModes:
        - ReadWriteOnce
        resources:
          requests:
            storage: 5Gi
        storageClassName: standard
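A sketch of the same nodeSet built in Python, plus a check for the required claim name; `node_set_with_storage` and `has_data_claim` are hypothetical helpers, and the storage size and class are whatever you pass in (5Gi and standard in the example above).

```python
def node_set_with_storage(name: str, count: int, storage: str, storage_class=None) -> dict:
    """Build a nodeSet with a custom volume claim template (hypothetical helper)."""
    claim_spec = {
        "accessModes": ["ReadWriteOnce"],
        "resources": {"requests": {"storage": storage}},
    }
    if storage_class is not None:
        claim_spec["storageClassName"] = storage_class  # optional, as noted above
    return {
        "name": name,
        "count": count,
        "volumeClaimTemplates": [
            # The data volume claim must be named elasticsearch-data.
            {"metadata": {"name": "elasticsearch-data"}, "spec": claim_spec}
        ],
    }


def has_data_claim(node_set: dict) -> bool:
    """True if the nodeSet declares a claim template named elasticsearch-data."""
    return any(
        t.get("metadata", {}).get("name") == "elasticsearch-data"
        for t in node_set.get("volumeClaimTemplates", [])
    )
```

`has_data_claim` is useful on hand-written manifests, where a misspelled claim name is easy to miss.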

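If Elasticsearch nodes are scaled down, ECK automatically deletes the PersistentVolumeClaim resources they owned. Depending on the reclaim policy of the configured storage class, the corresponding PersistentVolumes may be retained.

In addition, the volumeClaimDeletePolicy attribute lets you control what ECK does with the PersistentVolumeClaims when the Elasticsearch cluster is deleted entirely: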

apiVersion: elasticsearch.k8s.elastic.co/v1
kind: Elasticsearch
metadata:
  name: es
spec:
  version: 7.13.1
  volumeClaimDeletePolicy: DeleteOnScaledownOnly
  nodeSets:
  - name: default
    count: 3

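Possible values are DeleteOnScaledownAndClusterDeletion and DeleteOnScaledownOnly. By default, DeleteOnScaledownAndClusterDeletion is in effect, which means all PersistentVolumeClaims are deleted together with the Elasticsearch cluster. DeleteOnScaledownOnly, by contrast, keeps the PersistentVolumeClaims when the Elasticsearch cluster is deleted. If a deleted cluster is recreated with the same name and node sets as before, the new cluster adopts the existing PersistentVolumeClaims.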
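The two policies differ only in what happens on cluster deletion. A small model of the behaviour described above, as a sketch: it does not talk to the cluster, and the names `POLICIES`, `pvcs_deleted` and the event strings are hypothetical.

```python
# (deleted on scale-down, deleted on cluster deletion) per policy, as described above.
POLICIES = {
    "DeleteOnScaledownAndClusterDeletion": (True, True),
    "DeleteOnScaledownOnly": (True, False),
}
DEFAULT_POLICY = "DeleteOnScaledownAndClusterDeletion"


def pvcs_deleted(event: str, policy: str = DEFAULT_POLICY) -> bool:
    """Whether ECK removes the PVCs for an event: 'scaledown' or 'cluster-delete'."""
    on_scaledown, on_cluster_delete = POLICIES[policy]
    if event == "scaledown":
        return on_scaledown
    if event == "cluster-delete":
        return on_cluster_delete
    raise ValueError(f"unknown event {event!r}")
```

Under either policy the PersistentVolumes behind deleted claims may still survive, depending on the storage class reclaim policy.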