k8s PV dynamic disk provisioning (StorageClass)

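1. Dynamic PV provisioning in k8s

The obvious drawback of static PV provisioning is its maintenance cost: an administrator has to create every PV by hand before a PVC can bind to it.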

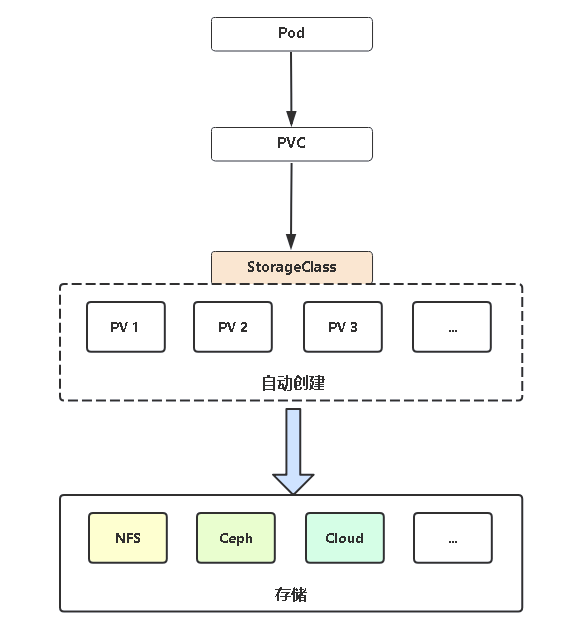

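For this reason, Kubernetes added dynamic PV provisioning, implemented with the StorageClass object: a PVC names a StorageClass, and the provisioner behind that class creates a matching PV on demand.

Dynamic PV provisioning architecture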

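2. Deploying automatic provisioning

Deploy the NFS plugin that creates PVs automatically:

git clone https://github.com/kubernetes-incubator/external-storage
cd external-storage/nfs-client/deploy
kubectl apply -f rbac.yaml         # grant access to the apiserver
kubectl apply -f deployment.yaml   # deploy the plugin; edit the NFS server address and shared directory in it first
kubectl apply -f class.yaml        # create the StorageClass
kubectl get sc                     # list StorageClasses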
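The same steps can be wrapped in a small script. This is a sketch, not part of the upstream repo: the function name `deploy_nfs_provisioner` and the `DRY_RUN` switch are hypothetical, and it assumes the three manifests sit in the directory passed as the first argument. Applying rbac.yaml first puts the roles and bindings in place before the provisioner Pod starts; `kubectl rollout status` then waits until the Deployment is available.

```shell
#!/bin/sh
# Hypothetical wrapper around the deployment steps above.
# With DRY_RUN set to any non-empty value, commands are printed instead of run.
run() {
  if [ -n "${DRY_RUN:-}" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

deploy_nfs_provisioner() {
  dir=${1:-.}   # directory holding rbac.yaml, deployment.yaml and class.yaml
  for manifest in rbac.yaml deployment.yaml class.yaml; do
    run kubectl apply -f "$dir/$manifest" || return 1
  done
  # Wait (up to 2 minutes) for the provisioner Pod to become available
  run kubectl rollout status deployment/nfs-client-provisioner --timeout=120s || return 1
  run kubectl get sc
}
```

For example, `DRY_RUN=1 deploy_nfs_provisioner external-storage/nfs-client/deploy` only prints the five commands; leave `DRY_RUN` unset to execute them.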

2.1 Download the plugin

Clone the code:

[root@k8s-master ~]# git clone https://github.com/kubernetes-incubator/external-storage

I downloaded the package in advance and uploaded it over SFTP instead:

[root@k8s-master ~]# ll
total 212
-rw-------. 1 root root   1857 Dec 15 09:32 anaconda-ks.cfg
-rw-r--r--  1 root root 187757 Dec 15 12:54 calico.yaml
-rw-r--r--  1 root root   7591 Dec 15 13:13 dashboard.yaml
-rw-r--r--  1 root root   6615 Dec 16 20:17 ingress_controller.yaml
drwxr-xr-x  2 root root     64 Dec 30 16:33 nfs-client
-rw-r--r--. 1 root root   7867 Dec 15 10:22 open.sh
drw-r--r-- 12 root root    144 Dec 30 09:20 yaml

2.2 Edit the configuration files

Enter the plugin directory:

[root@k8s-master ~]# cd nfs-client/
[root@k8s-master nfs-client]# ll
total 12
-rw-r--r-- 1 root root  225 Dec 30 16:33 class.yaml        # creates the StorageClass
-rw-r--r-- 1 root root  994 Dec 30 16:33 deployment.yaml   # deploys the plugin; edit the NFS server address and shared directory
-rw-r--r-- 1 root root 1526 Dec 30 16:33 rbac.yaml         # grants access to the apiserver

Edit deployment.yaml. Note that the NFS server address and export path each appear twice: in the NFS_SERVER/NFS_PATH environment variables and in the volumes.nfs block. Change both.

[root@k8s-master nfs-client]# vim deployment.yaml
[root@k8s-master nfs-client]# cat deployment.yaml
apiVersion: v1
kind: ServiceAccount
metadata:
  name: nfs-client-provisioner
---
kind: Deployment
apiVersion: apps/v1
metadata:
  name: nfs-client-provisioner
spec:
  replicas: 1
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: nfs-client-provisioner
  template:
    metadata:
      labels:
        app: nfs-client-provisioner
    spec:
      serviceAccountName: nfs-client-provisioner
      containers:
        - name: nfs-client-provisioner
          # switch from the overseas registry to a domestic mirror
          #image: quay.io/external_storage/nfs-client-provisioner:latest
          image: shichao01/nfs-client-provisioner:latest
          volumeMounts:
            - name: nfs-client-root
              mountPath: /persistentvolumes
          env:
            - name: PROVISIONER_NAME
              value: fuseim.pri/ifs
            - name: NFS_SERVER
              # change to your NFS server address
              #value: 192.168.1.61
              value: 10.100.24.85
            - name: NFS_PATH
              value: /ifs/kubernetes   # shared path; adjust to your setup
      volumes:
        - name: nfs-client-root
          nfs:
            server: 10.100.24.85
            path: /ifs/kubernetes
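Because the address and path must change in two places, a small helper can keep them in sync. This is a hypothetical sketch (`set_nfs_target` is not part of the plugin); it assumes a deployment.yaml laid out like the one above, and since awk's `sub()` treats `&` specially, the address and path must not contain `&`.

```shell
#!/bin/sh
# set_nfs_target FILE SERVER EXPORT_PATH   (hypothetical helper)
# Rewrites the NFS_SERVER / NFS_PATH env values and the volumes.nfs
# server/path fields. Commented-out lines such as "#value: ..." are untouched.
set_nfs_target() {
  target_file=$1 nfs_server=$2 nfs_path=$3
  tmp=$(mktemp) || return 1
  awk -v server="$nfs_server" -v path="$nfs_path" '
    # Remember which env var we are in; its value line follows
    /- name: NFS_SERVER/ { want = "server" }
    /- name: NFS_PATH/   { want = "path" }
    want != "" && /^[ \t]*value:/ {
      sub(/value:.*/, "value: " (want == "server" ? server : path))
      want = ""
    }
    # volumes.nfs block
    /^[ \t]*server:/ { sub(/server:.*/, "server: " server) }
    /^[ \t]*path:/   { sub(/path:.*/, "path: " path) }
    { print }
  ' "$target_file" > "$tmp" || { rm -f "$tmp"; return 1; }
  # Copy back with cat so the original file keeps its permissions
  cat "$tmp" > "$target_file" && rm -f "$tmp"
}
```

For example, `set_nfs_target deployment.yaml 10.100.24.85 /ifs/kubernetes` would produce the values shown above.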

Edit class.yaml:

[root@k8s-master nfs-client]# vim class.yaml
[root@k8s-master nfs-client]# cat class.yaml
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: managed-nfs-storage
provisioner: fuseim.pri/ifs # or choose another name, must match deployment's env PROVISIONER_NAME
parameters:
  archiveOnDelete: "true" # with "true", the data directory is archived instead of removed when the PV is deleted (i.e. when the PVC is deleted), not when a Pod is deleted
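Since `provisioner` in class.yaml must equal the `PROVISIONER_NAME` value in deployment.yaml (otherwise no provisioner picks up claims for this class and they stay Pending), a quick check before applying can save some debugging. A sketch; the function names are hypothetical and the parsing assumes the simple one-value-per-line layout of these two files.

```shell
#!/bin/sh
# Print the PROVISIONER_NAME value from a deployment.yaml (hypothetical helper)
provisioner_in_deployment() {
  awk '
    /- name: PROVISIONER_NAME/ { want = 1; next }
    want && /^[ \t]*value:/ {
      sub(/^[ \t]*value:[ \t]*/, "")   # drop the key
      sub(/[ \t]*#.*$/, "")            # drop a trailing comment
      print
      exit
    }
  ' "$1"
}

# Print the top-level provisioner field from a StorageClass manifest
provisioner_in_class() {
  awk '/^provisioner:/ { print $2; exit }' "$1"
}

# Succeed only if both files name the same, non-empty provisioner
check_provisioner_match() {
  from_deploy=$(provisioner_in_deployment "$1")
  from_class=$(provisioner_in_class "$2")
  if [ -n "$from_deploy" ] && [ "$from_deploy" = "$from_class" ]; then
    echo "OK: provisioner is $from_class"
  else
    echo "MISMATCH: deployment=$from_deploy class=$from_class" >&2
    return 1
  fi
}
```

With the two files above, `check_provisioner_match deployment.yaml class.yaml` prints `OK: provisioner is fuseim.pri/ifs`.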

Configure rbac.yaml:

[root@k8s-master nfs-client]# vim rbac.yaml
[root@k8s-master nfs-client]# cat rbac.yaml
kind: ServiceAccount
apiVersion: v1
metadata:
  name: nfs-client-provisioner
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: nfs-client-provisioner-runner
rules:
  - apiGroups: [""]
    resources: ["persistentvolumes"]
    verbs: ["get", "list", "watch", "create", "delete"]
  - apiGroups: [""]
    resources: ["persistentvolumeclaims"]
    verbs: ["get", "list", "watch", "update"]
  - apiGroups: ["storage.k8s.io"]
    resources: ["storageclasses"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    resources: ["events"]
    verbs: ["create", "update", "patch"]
---
kind: ClusterRoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: run-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    namespace: default
roleRef:
  kind: ClusterRole
  name: nfs-client-provisioner-runner
  apiGroup: rbac.authorization.k8s.io
---
kind: Role
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
rules:
  - apiGroups: [""]
    resources: ["endpoints"]
    verbs: ["get", "list", "watch", "create", "update", "patch"]
---
kind: RoleBinding
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: leader-locking-nfs-client-provisioner
subjects:
  - kind: ServiceAccount
    name: nfs-client-provisioner
    # replace with namespace where provisioner is deployed
    namespace: default
roleRef:
  kind: Role
  name: leader-locking-nfs-client-provisioner
  apiGroup: rbac.authorization.k8s.io
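Once rbac.yaml has been applied, one way to confirm the bindings took effect is `kubectl auth can-i` with `--as` impersonation. A sketch: the function names are hypothetical, and impersonating a ServiceAccount requires impersonate rights (cluster-admin has them). Pass the namespace if you changed `namespace: default` in the bindings.

```shell
#!/bin/sh
# Username Kubernetes assigns to a ServiceAccount
sa_user() {
  printf 'system:serviceaccount:%s:%s\n' "$1" "$2"
}

# Ask the API server whether the provisioner's ServiceAccount holds the
# permissions granted in rbac.yaml. Needs kubectl and cluster access.
# Usage: check_provisioner_rbac [namespace]   (defaults to "default")
check_provisioner_rbac() {
  ns=${1:-default}
  user=$(sa_user "$ns" nfs-client-provisioner)
  status=0
  for check in \
    "create persistentvolumes" \
    "delete persistentvolumes" \
    "update persistentvolumeclaims" \
    "watch storageclasses" \
    "create events"; do
    printf '%-32s ' "$check"
    # $check is left unquoted on purpose so it splits into VERB RESOURCE
    kubectl auth can-i $check --as="$user" -n "$ns" || status=1
  done
  # The leader-election Role only grants endpoints access in its own namespace
  printf '%-32s ' "update endpoints"
  kubectl auth can-i update endpoints --as="$user" -n "$ns" || status=1
  return "$status"
}
```

Each line should end in `yes`; `kubectl auth can-i` exits non-zero on `no`, so the function returns 1 if any permission is missing.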

2.3 Start all services

[root@k8s-master nfs-client]# kubectl apply -f .
storageclass.storage.k8s.io/managed-nfs-storage created
serviceaccount/nfs-client-provisioner created
deployment.apps/nfs-client-provisioner created
serviceaccount/nfs-client-provisioner unchanged
clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created
clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created
role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created
rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

The ServiceAccount shows up as "unchanged" the second time because both deployment.yaml and rbac.yaml define the same nfs-client-provisioner ServiceAccount.

2.4 Check that the provisioner is running

[root@k8s-master nfs-client]# kubectl get pods
NAME                                   READY   STATUS    RESTARTS   AGE
configmap-demo-pod                     1/1     Running   0          4d1h
my-hostpath                            1/1     Running   4          2d1h
my-pod                                 1/1     Running   0          7h35m
nfs-client-provisioner-95c9579-m2f69   1/1     Running   0          52s
secret-demo-pod                        1/1     Running   0          3d18h
web-nfs-84f8d7bf8d-6mj75               1/1     Running   0          44h
web-nfs-84f8d7bf8d-n4tpk               1/1     Running   0          44h
web-nfs-84f8d7bf8d-qvd2z               1/1     Running   0          44h

2.5 Verify the StorageClass

[root@k8s-master nfs-client]# kubectl get sc
NAME                  PROVISIONER      RECLAIMPOLICY   VOLUMEBINDINGMODE   ALLOWVOLUMEEXPANSION   AGE
managed-nfs-storage   fuseim.pri/ifs   Delete          Immediate           false                  3m46s

3. Dynamic provisioning with a PVC

3.1 Example code

PVC example:

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim                          # PVC name
spec:
  storageClassName: "managed-nfs-storage"   # the StorageClass whose provisioner creates the PV
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi
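When several claims are needed, the PVC above can be generated from a template and piped straight into `kubectl apply -f -` (which reads the manifest from stdin). A sketch: `render_pvc` is a hypothetical helper that assumes the `managed-nfs-storage` class and the ReadWriteMany access mode used in this article.

```shell
#!/bin/sh
# render_pvc NAME SIZE [STORAGE_CLASS]   (hypothetical helper)
# Prints a PersistentVolumeClaim manifest shaped like test-claim above.
render_pvc() {
  name=$1
  size=$2
  class=${3:-managed-nfs-storage}
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: $name
spec:
  storageClassName: "$class"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: $size
EOF
}
```

For example, `render_pvc test-claim 1Gi | kubectl apply -f -` creates the same claim as the manifest above.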

Application example:

apiVersion: v1
kind: Pod
metadata:
  name: my-pvc-sc
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80
      volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumes:
    - name: www
      persistentVolumeClaim:
        claimName: test-claim   # name of the PVC

3.2 Deployment example

3.2.1 Deploy the PVC

Create a directory for the manifests:

[root@k8s-master yaml]# mkdir -p pvc-dynamic
[root@k8s-master yaml]# cd pvc-dynamic/

Write the PVC file:

[root@k8s-master pvc-dynamic]# vim pvc.yaml
[root@k8s-master pvc-dynamic]# cat pvc.yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: test-claim
spec:
  storageClassName: "managed-nfs-storage"
  accessModes:
    - ReadWriteMany
  resources:
    requests:
      storage: 1Gi

Create the PVC:

[root@k8s-master pvc-dynamic]# kubectl apply -f pvc.yaml
persistentvolumeclaim/test-claim created

Check the PVC:

[root@k8s-master pvc-dynamic]# kubectl get pvc
NAME         STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS          AGE
my-pvc       Bound    my-pv                                      5Gi        RWX                                  26h
test-claim   Bound    pvc-998a4c34-f686-4e46-80bf-6d32c005111d   1Gi        RWX            managed-nfs-storage   5s

test-claim is already Bound to the PV pvc-998a4c34-f686-4e46-80bf-6d32c005111d, which the provisioner created automatically; no PV was written by hand.

3.2.2 Deploy an application that uses the PVC

Write the manifest:

[root@k8s-master pvc-dynamic]# vim pvc-pod.yaml
[root@k8s-master pvc-dynamic]# cat pvc-pod.yaml
apiVersion: v1
kind: Pod
metadata:
  name: my-pvc-sc
spec:
  containers:
    - name: nginx
      image: nginx:latest
      ports:
        - containerPort: 80
      volumeMounts:
        - name: www
          mountPath: /usr/share/nginx/html
  volumes:
    - name: www
      persistentVolumeClaim:
        claimName: test-claim   # name of the PVC

Start it:

[root@k8s-master pvc-dynamic]# kubectl apply -f pvc-pod.yaml
pod/my-pvc-sc created

Check that it is running:

[root@k8s-master pvc-dynamic]# kubectl get pods
NAME                                   READY   STATUS    RESTARTS   AGE
configmap-demo-pod                     1/1     Running   0          4d20h
my-hostpath                            1/1     Running   6          2d20h
my-pod                                 1/1     Running   0          26h
my-pvc-sc                              1/1     Running   0          12s
nfs-client-provisioner-95c9579-m2f69   1/1     Running   0          19h
secret-demo-pod                        1/1     Running   0          4d13h
web-nfs-84f8d7bf8d-6mj75               1/1     Running   0          2d15h
web-nfs-84f8d7bf8d-n4tpk               1/1     Running   0          2d15h
web-nfs-84f8d7bf8d-qvd2z               1/1     Running   0          2d15h

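3.3 Verify the data

Log in to the container and look for data:

[root@k8s-master pvc-dynamic]# kubectl exec -it pod/my-pvc-sc -- /bin/bash
root@my-pvc-sc:/# ls -a /usr/share/nginx/html
.  ..

Note: the directory is empty. So where does the data live, and how do we check it?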

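To answer that, log in to the NFS server (k8s-node3 here) and look in the shared directory:

[root@k8s-node3 ~]# cd /ifs/kubernetes/
[root@k8s-node3 kubernetes]# ll
total 4
drwxrwxrwx 2 root root 24 Dec 31 12:37 default-test-claim-pvc-998a4c34-f686-4e46-80bf-6d32c005111d
-rw-r--r-- 1 root root 22 Dec 28 20:17 index.html

A new directory has appeared next to the older index.html: this is the directory the provisioner created for test-claim, and it is what the Pod mounts at /usr/share/nginx/html.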
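The directory name follows the provisioner's scheme `<namespace>-<pvc name>-<pv name>`, which is why it reads `default-test-claim-pvc-998a4c34-...`. With `archiveOnDelete: "true"`, deleting the PVC later renames the directory to `archived-<same name>` rather than removing it. The sketch below derives both names; the function names are hypothetical, and `nfs_dir_for_pvc` assumes kubectl access and a PVC that is already Bound.

```shell
#!/bin/sh
# Directory the NFS client provisioner creates for a claim:
#   <namespace>-<pvc name>-<pv name>
pvc_dir_name() {
  printf '%s-%s-%s\n' "$1" "$2" "$3"
}

# Name the directory gets when its PV is deleted and archiveOnDelete is "true"
archived_dir_name() {
  printf 'archived-%s\n' "$1"
}

# Look up the PV bound to a claim and print its directory name.
# Needs kubectl access. Usage: nfs_dir_for_pvc NAMESPACE PVC
nfs_dir_for_pvc() {
  pv=$(kubectl get pvc "$2" -n "$1" -o jsonpath='{.spec.volumeName}') || return 1
  [ -n "$pv" ] || { echo "PVC $1/$2 is not bound yet" >&2; return 1; }
  pvc_dir_name "$1" "$2" "$pv"
}
```

On this cluster, `nfs_dir_for_pvc default test-claim` should print the same name as the `ll` listing above.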

Copy index.html into this directory and the data becomes visible in the container:

[root@k8s-node3 kubernetes]# cp -a index.html default-test-claim-pvc-998a4c34-f686-4e46-80bf-6d32c005111d/
[root@k8s-node3 kubernetes]# cat default-test-claim-pvc-998a4c34-f686-4e46-80bf-6d32c005111d/index.html
<h1>hello world!</h1>
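On the NFS server, the copy step can also be scripted by globbing for the claim's directory instead of pasting the generated name. A sketch meant to run on the NFS server itself: `seed_pvc_dir` is hypothetical, it assumes the `<namespace>-<pvc>-pvc-<uid>` directory naming seen above, and it refuses to guess when zero or several directories match.

```shell
#!/bin/sh
# seed_pvc_dir EXPORT_ROOT NAMESPACE PVC FILE...   (hypothetical helper)
# Copies files into the directory the provisioner created for NAMESPACE/PVC.
seed_pvc_dir() {
  root=$1 ns=$2 pvc=$3
  shift 3
  match=""
  count=0
  # Archived directories start with "archived-", so they never match this glob
  for d in "$root/$ns-$pvc-pvc-"*; do
    [ -d "$d" ] || continue
    match=$d
    count=$((count + 1))
  done
  if [ "$count" -ne 1 ]; then
    echo "expected one directory for $ns/$pvc under $root, found $count" >&2
    return 1
  fi
  # -a keeps mode and timestamps, as in the manual cp -a above
  cp -a "$@" "$match/"
}
```

For the example above: `seed_pvc_dir /ifs/kubernetes default test-claim /ifs/kubernetes/index.html`.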

Enter the container again and check whether the data is there now:

[root@k8s-master pvc-dynamic]# kubectl exec -it pod/my-pvc-sc -- /bin/bash
root@my-pvc-sc:/# ls -a /usr/share/nginx/html
.  ..  index.html
root@my-pvc-sc:/# cat /usr/share/nginx/html/index.html
<h1>hello world!</h1>

Tip: the file written on the NFS server is now visible inside the container.