今天闲,测试了下concurrent.futures 模块中的ThreadPoolExecutor,ProcessPoolExecutor。

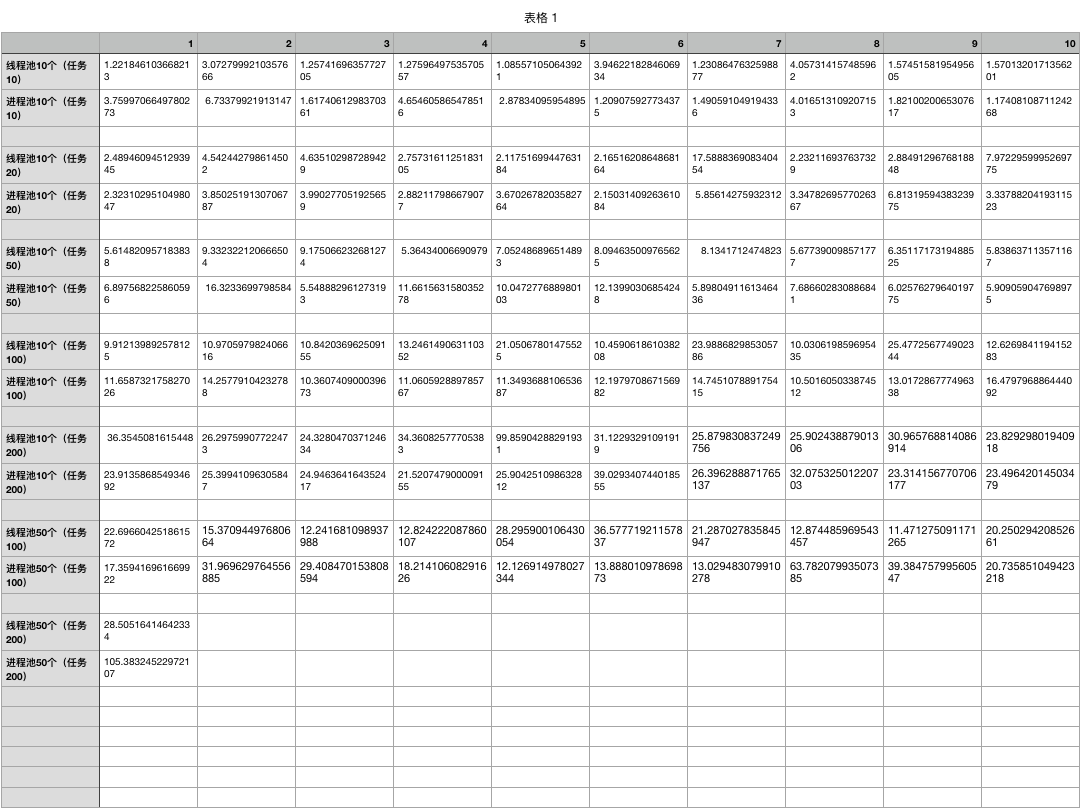

对开不同的数量的进程池和任务量时,所耗时间。

from concurrent.futures import ThreadPoolExecutor,ProcessPoolExecutor import requests import time,os def get_page(url): #print('<%s> is getting [%s]'%(os.getpid(),url)) response = requests.get(url) #time.sleep(5) if response.status_code==200: #200代表状态:下载成功了 return {'url':url,'text':response.text} def parse_page(res): res = res.result() #print('<%s> is getting [%s]'%(os.getpid(),res['url'])) with open('db.txt','a') as f: parse_res = 'url:%s size:%s '%(res['url'],len(res['text'])) f.write(parse_res) if __name__ == '__main__': start = time.time() p = ThreadPoolExecutor(max_workers=15) #p = ProcessPoolExecutor() l = [ ] for x in range(200): l.append('https://www.sina.com.cn/') for url in l: res=p.submit(get_page,url) #res = p.submit(get_page,url).add_done_callback(parse_page) #这里的回调函数拿到的是一个对象。得 # 先把返回的res得到一个结果。即在前面加上一个res.result() #谁好了谁去掉回调函数 # 回调函数也是一种编程思想。不仅开线程池用,开线程池也用 p.shutdown() #相当于进程池里的close和join print('主',os.getpid()) print(time.time() - start) start = time.time() # p = ThreadPoolExecutor() p = ProcessPoolExecutor(max_workers=15) for url in l: res = p.submit(get_page, url) # res = p.submit(get_page,url).add_done_callback(parse_page) #这里的回调函数拿到的是一个对象。得 # 先把返回的res得到一个结果。即在前面加上一个res.result() #谁好了谁去掉回调函数 # 回调函数也是一种编程思想。不仅开线程池用,开线程池也用 p.shutdown() # 相当于进程池里的close和join print('主', os.getpid()) print(time.time() - start)

aaMacBook-Pro:~ aa$ system_profiler SPHardwareDataType Hardware: Hardware Overview: Model Name: MacBook Pro Model Identifier: MacBookPro14,1 Processor Name: Intel Core i5 Processor Speed: 2.3 GHz Number of Processors: 1 Total Number of Cores: 2 L2 Cache (per Core): 256 KB L3 Cache: 4 MB Hyper-Threading Technology: Enabled Memory: 16 GB Boot ROM Version: 198.0.0.0.0 SMC Version (system): 2.43f6 Serial Number (system): FVFYL11EHV2H Hardware UUID: 39CD8397-D284-5356-BAF4-3E6CE64250C6