Creating a Spark cluster on the Zybo cluster (compute layer):

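1. Every node boots from the same filesystem image, so their MAC addresses conflict. To fix this, set a distinct MAC address and IP on each node: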

vi ./etc/profile

export PATH=/usr/local/sbin:/usr/local/bin:/usr/sbin:/usr/bin:/sbin:/bin:$PATH

export JAVA_HOME=/usr/lib/jdk1.7.0_55

export CLASSPATH=.:$JAVA_HOME/lib/tools.jar

export PATH=$JAVA_HOME/bin:$PATH

export HADOOP_HOME=/root/hadoop-2.4.0

ifconfig eth1 hw ether 00:0a:35:00:01:03

ifconfig eth1 192.168.1.3/24 up
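Since only the last part of the MAC address and IP changes from node to node, the two ifconfig lines can be generated rather than typed by hand. The following is a minimal sketch, not part of the original setup: the helper name node_net_commands is hypothetical, and it assumes that the last MAC octet is the node number written in hex and that the IP follows the 192.168.1.N pattern shown above.

```python
# Hypothetical helper: derive the per-node network commands from a node number.
# Assumption: MAC prefix 00:0a:35:00:01 and subnet 192.168.1.0/24, as in the
# example above; the last MAC octet is the node number in hex.

def node_net_commands(node, iface="eth1"):
    """Return the two ifconfig commands for node number `node` (1-254)."""
    if not 1 <= node <= 254:
        raise ValueError("node must be between 1 and 254")
    mac = "00:0a:35:00:01:%02x" % node
    ip = "192.168.1.%d/24" % node
    return [
        "ifconfig %s hw ether %s" % (iface, mac),
        "ifconfig %s %s up" % (iface, ip),
    ]

# Print the commands for node 3, matching the example above.
for line in node_net_commands(3):
    print(line)
```

The printed lines can then be pasted into the profile of the matching node.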

2. Generate the private key id_rsa and the public key id_rsa.pub

ssh-keygen -t rsa

id_rsa is the private key file; id_rsa.pub is the public key file.

3. /etc/hosts configuration on the Worker nodes:

Steps:

ssh root@192.168.1.x

vi /etc/hosts

127.0.0.1 localhost zynq

192.168.1.1 spark1

192.168.1.2 spark2

192.168.1.3 spark3

192.168.1.4 spark4

192.168.1.5 spark5

192.168.1.100 sparkMaster

#::1 localhost ip6-localhost ip6-loopback
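Because the same entries go onto every worker, they can be generated once and copied around. A minimal sketch, assuming the naming scheme above (spark1 to spark5 on 192.168.1.1 to 192.168.1.5, plus sparkMaster on 192.168.1.100); the function name hosts_entries is hypothetical.

```python
# Hypothetical helper: build the /etc/hosts lines listed above.
# Assumption: workers are named sparkN and live at 192.168.1.N.

def hosts_entries(workers=5, master_ip="192.168.1.100"):
    """Return the /etc/hosts lines for `workers` worker nodes and the master."""
    lines = ["127.0.0.1 localhost zynq"]
    for n in range(1, workers + 1):
        lines.append("192.168.1.%d spark%d" % (n, n))
    lines.append("%s sparkMaster" % master_ip)
    return lines

print("\n".join(hosts_entries()))
```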

/etc/hosts configuration on the Master node:

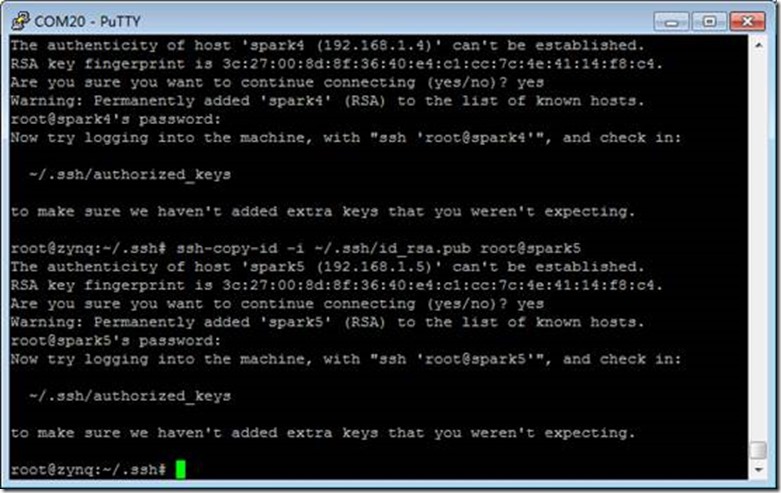

4. Distribute the public key

ssh-copy-id -i ~/.ssh/id_rsa.pub root@spark1

ssh-copy-id -i ~/.ssh/id_rsa.pub root@spark2

ssh-copy-id -i ~/.ssh/id_rsa.pub root@spark3

ssh-copy-id -i ~/.ssh/id_rsa.pub root@spark4

…..
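The repeated ssh-copy-id calls differ only in the host name, so they can be built in a loop. This is a rough sketch rather than the procedure used here: copy_id_commands is a hypothetical helper, and the host list contains only the four names shown above. The sketch only builds the commands; running them is left as a commented-out step because each call prompts for the remote password.

```python
import os

# Hypothetical helper: build one ssh-copy-id command per worker host.
def copy_id_commands(hosts, user="root", key="~/.ssh/id_rsa.pub"):
    """Return a list of argument lists suitable for subprocess.check_call."""
    key_path = os.path.expanduser(key)  # subprocess does not expand "~" itself
    return [["ssh-copy-id", "-i", key_path, "%s@%s" % (user, host)]
            for host in hosts]

commands = copy_id_commands(["spark1", "spark2", "spark3", "spark4"])
for cmd in commands:
    print(" ".join(cmd))
    # To actually run it (interactive, asks for the password):
    # import subprocess; subprocess.check_call(cmd)
```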

5. Configure the Master node

cd ~/spark-0.9.1-bin-hadoop2/conf

vi slaves
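The contents of the slaves file are not shown in these notes. In Spark standalone mode, conf/slaves lists one worker host name per line, so the file could be written as in the sketch below. The helper name write_slaves is hypothetical, and the host names are an assumption taken from the startup log further down, where executors appear on spark2 to spark5. The demo writes to a temporary directory, not to the real conf directory.

```python
import os
import tempfile

# Hypothetical helper: write a Spark standalone "slaves" file,
# one worker host name per line.
def write_slaves(path, hosts):
    with open(path, "w") as f:
        for host in hosts:
            f.write(host + "\n")

# Assumption: these four workers, as seen in the startup log.
demo_path = os.path.join(tempfile.mkdtemp(), "slaves")
write_slaves(demo_path, ["spark2", "spark3", "spark4", "spark5"])
print(open(demo_path).read())
```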

6. Configure Java

Otherwise, running the Pi calculation fails with an error saying that count cannot be found (because pyspark cannot find the Java runtime).

cd /usr/bin/

ln -s /usr/lib/jdk1.7.0_55/bin/java java

ln -s /usr/lib/jdk1.7.0_55/bin/javac javac

ln -s /usr/lib/jdk1.7.0_55/bin/jar jar
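After creating the links, it may help to confirm that java really resolves on the PATH before starting pyspark. A small sketch of such a check; find_on_path is a hypothetical helper that searches the PATH by hand so that it also works on the Python 2.7 interpreter used on the boards.

```python
import os

# Hypothetical helper: look for an executable on a PATH-style string.
def find_on_path(name, path=None):
    """Return the full path of `name` if it is executable on PATH, else None."""
    if path is None:
        path = os.environ.get("PATH", "")
    for directory in path.split(os.pathsep):
        candidate = os.path.join(directory, name)
        if os.path.isfile(candidate) and os.access(candidate, os.X_OK):
            return candidate
    return None

java = find_on_path("java")
print("java found at %s" % java if java else "java is not on PATH")
```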

7. Start all nodes as a test

SPARK_MASTER_IP=192.168.1.1 ./sbin/start-all.sh

SPARK_MASTER_IP=192.168.1.100 ./sbin/start-all.sh

All nodes started successfully:

8. Check the running status:

jps

netstat -ntlp
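The same check can be made from another machine by testing whether the master port accepts TCP connections. A minimal sketch, assuming the master listens on port 7077 as in the spark:// URLs used in these notes; port_open is a hypothetical helper.

```python
import socket

# Hypothetical helper: report whether a TCP port accepts connections.
def port_open(host, port, timeout=2.0):
    try:
        conn = socket.create_connection((host, port), timeout)
    except socket.error:
        # Covers refused connections, timeouts, and name lookup failures.
        return False
    conn.close()
    return True

# Example (adjust the address to the master actually in use):
# print(port_open("192.168.1.1", 7077))
```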

9. Start the interactive shell (pyspark)

MASTER=spark://192.168.1.1:7077 ./bin/pyspark

MASTER=spark://192.168.1.100:7077 ./bin/pyspark

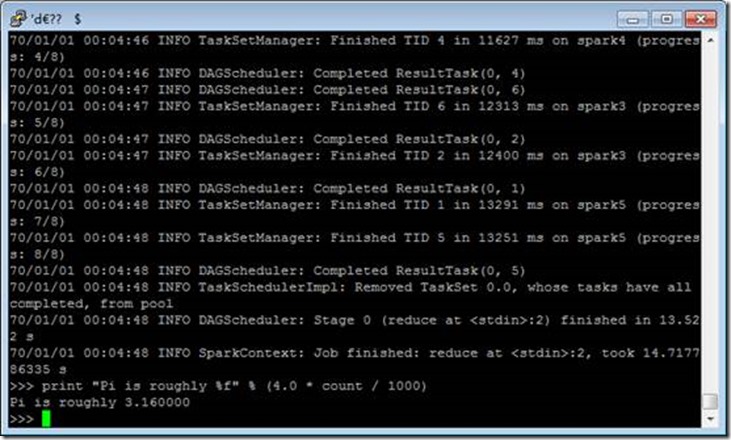

10. Test

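from random import random

# Return 1 if a random point in the unit square falls inside the quarter circle.
def sample(p):
    x, y = random(), random()
    return 1 if x*x + y*y < 1 else 0

# Count the hits over 1,000,000 samples distributed across the cluster.
count = sc.parallelize(xrange(0, 1000000)).map(sample) \
    .reduce(lambda a, b: a + b)

print "Pi is roughly %f" % (4.0 * count / 1000000)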
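To separate problems in the estimate itself from problems in the cluster, the same Monte Carlo estimate can be run locally without Spark. This is only a sketch for comparison: estimate_pi is a hypothetical name, and it uses a plain loop in place of sc.parallelize, map, and reduce.

```python
import random

# Local, Spark-free version of the same estimate, for sanity checking.
def estimate_pi(samples, seed=None):
    rng = random.Random(seed)
    inside = 0
    for _ in range(samples):
        x, y = rng.random(), rng.random()
        if x * x + y * y < 1:  # point falls inside the quarter circle
            inside += 1
    # Quarter-circle area / unit-square area = pi / 4.
    return 4.0 * inside / samples

print("Pi is roughly %f" % estimate_pi(100000, seed=0))
```

If this prints a value close to 3.14 while the cluster run fails, the fault is more likely in the cluster setup than in the sampling code.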

The computation completed successfully:

Normal startup output:

root@zynq:~/spark-0.9.1-bin-hadoop2# MASTER=spark://192.168.1.1:7077 ./bin/pyspark

Python 2.7.4 (default, Apr 19 2013, 19:49:55)

[GCC 4.7.3] on linux2

Type "help", "copyright", "credits" or "license" for more information.

log4j:WARN No appenders could be found for logger (akka.event.slf4j.Slf4jLogger).

log4j:WARN Please initialize the log4j system properly.

log4j:WARN See http://logging.apache.org/log4j/1.2/faq.html#noconfig for more info.

70/01/01 00:07:48 INFO SparkEnv: Using Spark's default log4j profile: org/apache/spark/log4j-defaults.properties

70/01/01 00:07:48 INFO SparkEnv: Registering BlockManagerMaster

70/01/01 00:07:49 INFO DiskBlockManager: Created local directory at /tmp/spark-local-19700101000749-e1fb

70/01/01 00:07:49 INFO MemoryStore: MemoryStore started with capacity 297.0 MB.

70/01/01 00:07:49 INFO ConnectionManager: Bound socket to port 36414 with id = ConnectionManagerId(spark1,36414)

70/01/01 00:07:49 INFO BlockManagerMaster: Trying to register BlockManager

70/01/01 00:07:49 INFO BlockManagerMasterActor$BlockManagerInfo: Registering block manager spark1:36414 with 297.0 MB RAM

70/01/01 00:07:49 INFO BlockManagerMaster: Registered BlockManager

70/01/01 00:07:49 INFO HttpServer: Starting HTTP Server

70/01/01 00:07:50 INFO HttpBroadcast: Broadcast server started at http://192.168.1.1:42068

70/01/01 00:07:50 INFO SparkEnv: Registering MapOutputTracker

70/01/01 00:07:50 INFO HttpFileServer: HTTP File server directory is /tmp/spark-77996902-7ea4-4161-bc23-9f3538967c17

70/01/01 00:07:50 INFO HttpServer: Starting HTTP Server

70/01/01 00:07:51 INFO SparkUI: Started Spark Web UI at http://spark1:4040

70/01/01 00:07:52 INFO AppClient$ClientActor: Connecting to master spark://192.168.1.1:7077...

70/01/01 00:07:55 INFO SparkDeploySchedulerBackend: Connected to Spark cluster with app ID app-19700101000755-0001

70/01/01 00:07:55 INFO AppClient$ClientActor: Executor added: app-19700101000755-0001/0 on worker-19700101000249-spark2-53901 (spark2:53901) with 2 cores

70/01/01 00:07:55 INFO SparkDeploySchedulerBackend: Granted executor ID app-19700101000755-0001/0 on hostPort spark2:53901 with 2 cores, 512.0 MB RAM

70/01/01 00:07:55 INFO AppClient$ClientActor: Executor added: app-19700101000755-0001/1 on worker-19700101000306-spark5-38532 (spark5:38532) with 2 cores

70/01/01 00:07:55 INFO SparkDeploySchedulerBackend: Granted executor ID app-19700101000755-0001/1 on hostPort spark5:38532 with 2 cores, 512.0 MB RAM

70/01/01 00:07:55 INFO AppClient$ClientActor: Executor added: app-19700101000755-0001/2 on worker-19700101000255-spark3-41536 (spark3:41536) with 2 cores

70/01/01 00:07:55 INFO SparkDeploySchedulerBackend: Granted executor ID app-19700101000755-0001/2 on hostPort spark3:41536 with 2 cores, 512.0 MB RAM

70/01/01 00:07:55 INFO AppClient$ClientActor: Executor added: app-19700101000755-0001/3 on worker-19700101000254-spark4-38766 (spark4:38766) with 2 cores

70/01/01 00:07:55 INFO SparkDeploySchedulerBackend: Granted executor ID app-19700101000755-0001/3 on hostPort spark4:38766 with 2 cores, 512.0 MB RAM

70/01/01 00:07:55 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/0 is now RUNNING

70/01/01 00:07:55 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/3 is now RUNNING

70/01/01 00:07:55 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/1 is now RUNNING

70/01/01 00:07:55 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/2 is now RUNNING

70/01/01 00:07:56 WARN NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Welcome to
      ____              __
     / __/__  ___ _____/ /__
    _\ \/ _ \/ _ `/ __/  '_/
   /__ / .__/\_,_/_/ /_/\_\   version 0.9.1
      /_/

Using Python version 2.7.4 (default, Apr 19 2013 19:49:55)

Spark context available as sc.

>>> 70/01/01 00:08:06 INFO SparkDeploySchedulerBackend: Registered executor: Actor[akka.tcp://sparkExecutor@spark3:35842/user/Executor#1876589543] with ID 2

70/01/01 00:08:11 INFO BlockManagerMasterActor$BlockManagerInfo: Registering block manager spark3:42847 with 297.0 MB RAM

70/01/01 00:08:12 INFO SparkDeploySchedulerBackend: Registered executor: Actor[akka.tcp://sparkExecutor@spark5:43445/user/Executor#-1199017431] with ID 1

70/01/01 00:08:13 INFO BlockManagerMasterActor$BlockManagerInfo: Registering block manager spark5:42630 with 297.0 MB RAM

70/01/01 00:08:15 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/0 is now FAILED (Command exited with code 1)

70/01/01 00:08:15 INFO SparkDeploySchedulerBackend: Executor app-19700101000755-0001/0 removed: Command exited with code 1

70/01/01 00:08:15 INFO AppClient$ClientActor: Executor added: app-19700101000755-0001/4 on worker-19700101000249-spark2-53901 (spark2:53901) with 2 cores

70/01/01 00:08:15 INFO SparkDeploySchedulerBackend: Granted executor ID app-19700101000755-0001/4 on hostPort spark2:53901 with 2 cores, 512.0 MB RAM

70/01/01 00:08:15 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/4 is now RUNNING

70/01/01 00:08:21 INFO SparkDeploySchedulerBackend: Registered executor: Actor[akka.tcp://sparkExecutor@spark4:41692/user/Executor#-1994427913] with ID 3

70/01/01 00:08:26 INFO BlockManagerMasterActor$BlockManagerInfo: Registering block manager spark4:49788 with 297.0 MB RAM

70/01/01 00:08:27 INFO SparkDeploySchedulerBackend: Registered executor: Actor[akka.tcp://sparkExecutor@spark2:44449/user/Executor#-1155287434] with ID 4

70/01/01 00:08:28 INFO BlockManagerMasterActor$BlockManagerInfo: Registering block manager spark2:38675 with 297.0 MB RAM
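In the log above, executor 0 on spark2 exits with code 1 and is replaced by executor 4 on the same worker, which is easy to miss among the other lines. A short sketch of how such state changes could be pulled out of a saved log; executor_states is a hypothetical helper, and the regular expression assumes the "Executor updated" line format seen above.

```python
import re

# Matches e.g. "Executor updated: app-19700101000755-0001/0 is now RUNNING".
EXECUTOR_UPDATE = re.compile(r"Executor updated: (\S+)/(\d+) is now (\w+)")

def executor_states(log_lines):
    """Map each executor ID to the last state reported for it."""
    states = {}
    for line in log_lines:
        match = EXECUTOR_UPDATE.search(line)
        if match:
            states[int(match.group(2))] = match.group(3)
    return states

# Three lines copied from the log above.
sample_log = [
    "70/01/01 00:07:55 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/0 is now RUNNING",
    "70/01/01 00:08:15 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/0 is now FAILED (Command exited with code 1)",
    "70/01/01 00:08:15 INFO AppClient$ClientActor: Executor updated: app-19700101000755-0001/4 is now RUNNING",
]
print(executor_states(sample_log))
```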