LNMP environment

gdb /usr/local/php5.2/bin/php-cgi /tmp/coredump-php-cgi.20503

source /home/tmp/lnmp1.4-full/src/php-5.2.17/.gdbinit

zbacktrace

[root@iZ28cm0353dZ ~]# gdb /usr/local/php5.2/bin/php-cgi /tmp/coredump-php-cgi.20503

GNU gdb (GDB) Red Hat Enterprise Linux 7.6.1-115.el7

Copyright (C) 2013 Free Software Foundation, Inc.

License GPLv3+: GNU GPL version 3 or later <http://gnu.org/licenses/gpl.html>

This is free software: you are free to change and redistribute it.

There is NO WARRANTY, to the extent permitted by law. Type "show copying"

and "show warranty" for details.

This GDB was configured as "x86_64-redhat-linux-gnu".

For bug reporting instructions, please see:

<http://www.gnu.org/software/gdb/bugs/>...

Reading symbols from /usr/local/php5.2/bin/php-cgi...done.

[New LWP 20503]

Missing separate debuginfo for /lib/libpng15.so.15

Try: yum --enablerepo='*debug*' install /usr/lib/debug/.build-id/18/0154adee1cc1f0393b1953442cf70efc22697c.debug

Missing separate debuginfo for /lib/libjpeg.so.62

Try: yum --enablerepo='*debug*' install /usr/lib/debug/.build-id/cc/b2a3b40c4242b63c3169c582fef734d4cf87a3.debug

[Thread debugging using libthread_db enabled]

Using host libthread_db library "/usr/lib64/libthread_db.so.1".

Core was generated by `/usr/local/php5.2/bin/php-cgi --fpm --fpm-config /usr/local/php5.2/etc/php-fpm.'.

Program terminated with signal 11, Segmentation fault.

#0 0x0000000000000028 in ?? ()

Missing separate debuginfos, use: debuginfo-install bzip2-libs-1.0.6-13.el7.x86_64 c-ares-1.10.0-3.el7.x86_64 keyutils-libs-1.5.8-3.el7.x86_64 krb5-libs-1.15.1-19.el7.x86_64 libcom_err-1.42.9-11.el7.x86_64 libgcc-4.8.5-28.el7.x86_64 libstdc++-4.8.5-28.el7.x86_64 libxml2-2.9.1-6.el7_2.3.x86_64 pcre-8.32-17.el7.x86_64 xz-libs-5.2.2-1.el7.x86_64 zlib-1.2.7-17.el7.x86_64

(gdb) source /home/tmp/lnmp1.4-full/src/php-5.2.17/.gdbinit

(gdb) zbacktrace

[0x9e19a3f0] tpl_function_filter() /home/wwwroot/aaaa/core/admin/smartyplugin/function.filter.php:20

[0x9e1a04f0] tpl_function_filter() /home/wwwroot/aaaa/core/include_v5/pageFactory.php(417) : eval()'d code:1

[0x9e1a0820] ??? /home/wwwroot/aaaa/core/include_v5/pageFactory.php:417

[0x9e1a0a90] _fetch_compile() /home/wwwroot/aaaa/core/include_v5/pageFactory.php:449

[0x9e1a0f10] fetch() /home/wwwroot/aaaa/core/model_v5/content/mdl.widgets.php:366

[0x9e1a1aa0] editor() /home/wwwroot/aaaa/core/admin/controller/system/ctl.template.php:429

[0x9e1a1ba0] editWidgets()

[0x9e1a2aa0] call_user_func_array() /home/wwwroot/aaaa/core/kernel.php:428

[0x9e1a31a0] callAction() /home/wwwroot/aaaa/core/include_v5/adminCore.php:104

[0x9e1a3650] adminCore() /home/wwwroot/aaaa/shopadmin/index.php:12

(gdb) print ((zval *)0x9e19a3f0)

$3 = (zval *) 0x9e19a3f0

(gdb) printzv $3

[0x9e19a3f0] Cannot access memory at address 0x9e19a404

(gdb) print ((zval *)0x9e1a04f0)

$4 = (zval *) 0x9e1a04f0

(gdb) printzv $4

[0x9e1a04f0] Cannot access memory at address 0x9e1a0504

(gdb) print ((zval *)0x9e1a3650)

$5 = (zval *) 0x9e1a3650

(gdb) printzv $5

[0x9e1a3650] Cannot access memory at address 0x9e1a3664

(gdb)

(gdb)

How to find the program that caused a SEGV memory error

====

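Installing debuginfo on CentOS 7

Alibaba Cloud's CentOS machines and images do not provide a debuginfo repository, and the CentOS instances provisioned by default ship without a yum repo configuration file for it. As a result, many performance debugging tools, such as systemtap, are essentially unusable.

- Create the configuration file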

[root@iZbp1b0n9ivu1hyf17tgsfZ ~]# cat /etc/yum.repos.d/CentOS-Debug.repo

#Debug Info

[debug]

name=CentOS-$releasever - DebugInfo

baseurl=http://debuginfo.centos.org/$releasever/$basearch/

gpgcheck=0

enabled=1

protect=1

priority=1

- Install kernel-debuginfo

yum --enablerepo=debug install -y kernel-debuginfo-$(uname -r)

- Install the glibc debuginfo

debuginfo-install glibc

==================

Using gdb in detail

Generate PHP core dumps on segfaults in PHP-FPM

Mattias Geniar, Wednesday, December 10, 2014 - last modified: Wednesday, December 30, 2015

The PHP documentation is pretty clear on how to get a backtrace in PHP, but some of the explanations are confusing and seem focused on mod_php instead of PHP-FPM. So here are the steps you can take to enable core dumps in PHP-FPM pools.

Enable core dumps on Linux

Chances are, your current Linux config doesn't support core dumps yet. You can enable them and set the location where the kernel will dump the core files.

$ echo '/tmp/coredump-%e.%p' > /proc/sys/kernel/core_pattern

You can use many different core dump variables in the filename, such as:

%% a single % character

%c core file size soft resource limit of crashing process (since Linux 2.6.24)

%d dump mode, same as value returned by prctl(2) PR_GET_DUMPABLE (since Linux 3.7)

%e executable filename (without path prefix)

%E pathname of executable, with slashes ('/') replaced by exclamation marks ('!') (since Linux 3.0)

%g (numeric) real GID of dumped process

%h hostname (same as nodename returned by uname(2))

%p PID of dumped process, as seen in the PID namespace in which the process resides

%P PID of dumped process, as seen in the initial PID namespace (since Linux 3.12)

%s number of signal causing dump

%t time of dump, expressed as seconds since the Epoch, 1970-01-01 00:00:00 +0000 (UTC)

%u (numeric) real UID of dumped process

The example above uses the executable name (%e) and the PID (%p) in the filename. It dumps into /tmp, which is writable by any user.
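To sanity-check a pattern before writing it to /proc/sys/kernel/core_pattern, the expansion can be previewed in plain shell. The sketch below is a hypothetical helper (expand_core_pattern is not a system tool). It handles only %%, %e and %p and copies everything else through unchanged, so it is an approximation of what the kernel does, not a reimplementation.

```shell
# Hypothetical helper: preview the file name produced by a core_pattern template.
# Only %%, %e and %p are expanded; any other character is copied as-is.
# The body runs in a subshell so its variables do not leak into the caller.
expand_core_pattern() (
    pattern=$1 exe=$2 pid=$3 out=
    while [ -n "$pattern" ]; do
        case $pattern in
            %%*) out="$out%";    pattern=${pattern#??} ;;
            %e*) out="$out$exe"; pattern=${pattern#??} ;;
            %p*) out="$out$pid"; pattern=${pattern#??} ;;
            *)
                # Copy the first character and continue with the remainder.
                rest=${pattern#?}
                first=${pattern%"$rest"}
                out=$out$first
                pattern=$rest
                ;;
        esac
    done
    printf '%s\n' "$out"
)

# Usage:
#   expand_core_pattern '/tmp/coredump-%e.%p' php-fpm 2393
#   prints /tmp/coredump-php-fpm.2393
```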

Now that your kernel knows where to save the core dumps, it's time to change PHP-FPM.

Enable PHP-FPM core dumps per pool

To enable a core dump on a SIGSEGV, you can enable the rlimit_core option per PHP-FPM pool. Open your pool configuration and add the following.

rlimit_core = unlimited

Restart your PHP-FPM daemon (service php-fpm restart) to activate the config. Next time a SIGSEGV happens, your PHP-FPM logs will show you some more information.

WARNING: [pool poolname] child 20076 exited on signal 11 (SIGSEGV - core dumped) after 8.775895 seconds from start

You can find the core-dump in /tmp/coredump*.

$ ls /tmp/coredump*

-rw------- 1 user group 220M /tmp/coredump-php-fpm.2393

The filename shows the program (php-fpm) and the PID (2393).
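When several children crash, it can help to pull the PIDs out of the PHP-FPM log and match them against the core file names. This is a minimal sketch that assumes log lines shaped exactly like the WARNING shown earlier; segv_children is a hypothetical helper name, and the log path in the usage comment is an assumption that depends on your error_log setting.

```shell
# Hypothetical helper: read a PHP-FPM log on stdin and print the PID of
# every child that exited on signal 11 (SIGSEGV), one PID per line.
segv_children() {
    sed -n 's/.*child \([0-9][0-9]*\) exited on signal 11 (SIGSEGV.*/\1/p'
}

# Usage (log path is an assumption, adjust to your setup):
#   segv_children < /var/log/php-fpm/error.log
# Each PID should correspond to a /tmp/coredump-<program>.<PID> file
# when the core_pattern from this article is in use.
```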

Reading the core dumps

This is one part that the PHP docs are pretty clear about, so just a copy paste with modified/updated paths.

First, you need gdb installed (yum install gdb) to get the backtraces. You then start the gdb binary like gdb $program-path $coredump-path. Since our program is php-fpm, which resides in /usr/sbin/php-fpm, we call the gdb binary like this.

$ gdb /usr/sbin/php-fpm /tmp/coredump-php-fpm.2393

(gdb loading all symbols ... )

...

Reading symbols from /usr/lib64/php/modules/memcache.so...(no debugging symbols found)...done.

Loaded symbols for /usr/lib64/php/modules/memcache.so

...

(gdb) bt

#0 0x00007f8a8b6d7c37 in mmc_value_handler_multi () from /usr/lib64/php/modules/memcache.so

#1 0x00007f8a8b6db9ad in mmc_unpack_value () from /usr/lib64/php/modules/memcache.so

#2 0x00007f8a8b6e0637 in ?? () from /usr/lib64/php/modules/memcache.so

#3 0x00007f8a8b6dd55b in mmc_pool_select () from /usr/lib64/php/modules/memcache.so

#4 0x00007f8a8b6ddcc8 in mmc_pool_run () from /usr/lib64/php/modules/memcache.so

#5 0x00007f8a8b6d7e92 in ?? () from /usr/lib64/php/modules/memcache.so

#6 0x00007f8a8ac335cf in nr_php_curl_setopt () at /home/hudson/slave-workspace/workspace/PHP_Release_Agent/label/centos5-64-nrcamp/agent/php_curl.c:202

#7 0x0000000002b14fe0 in ?? ()

#8 0x0000000000000000 in ?? ()

The bt command shows the C-level backtrace of the PHP process at the moment of the core dump. To exit gdb, just type quit.
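For unattended use, the same backtrace can be captured without an interactive session by using gdb's -batch and -ex options. This is only a sketch: core_backtrace is a hypothetical wrapper, and the GDB variable exists solely so the debugger binary can be swapped out or the command dry-run.

```shell
# Hypothetical wrapper: print the backtrace of a core file and exit.
#   $1 = path to the binary that crashed
#   $2 = path to the core file
# GDB may be set to another debugger binary (or to "echo" for a dry run).
core_backtrace() {
    "${GDB:-gdb}" -batch -ex "bt" "$1" "$2"
}

# Usage:
#   core_backtrace /usr/sbin/php-fpm /tmp/coredump-php-fpm.2393 > backtrace.txt
```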

There are other good-to-know gdb commands too, for instance -- from your gdb shell (which you started above), type the following:

$ gdb /usr/sbin/php-fpm /tmp/coredump-php-fpm.2393

(gdb) print (char *)(executor_globals.function_state_ptr->function)->common.function_name

There is no member named function_state_ptr.

(gdb) print (char *)executor_globals.active_op_array->function_name

$1 = 0x7f97a47a6670 "_drupal_error_handler_real"

(gdb) print (char *)executor_globals.active_op_array->filename

$2 = 0x7f97a47a4458 "/var/www/vhosts/site.tld/htdocs/includes/errors.inc"

In summary:

- first print: can tell you which function call in PHP's user-land caused the error

- second print: can tell you which method got called that caused the error

- third print: can tell you which PHP file called that method/function that caused the segfault

Not all data is always available, but it can help narrow down your search.

You can dive even further into each line of the stacktrace. The number in front of each line is a frame number. You can access them like this. In this example, I'll explore frame 11.

(gdb) frame 11

#11 0x00007f97c3ee4f43 in zend_call_function (fci=fci@entry=0x7fff44686280, fci_cache=<optimized out>, fci_cache@entry=0x0) at /usr/src/debug/php-5.6.16/Zend/zend_execute_API.c:829

829 zend_execute(EG(active_op_array) TSRMLS_CC);

(gdb) info frame

Stack level 11, frame at 0x7fff44686280:

rip = 0x7f97c3ee4f43 in zend_call_function (/usr/src/debug/php-5.6.16/Zend/zend_execute_API.c:829); saved rip 0x7f97c3ee513f

called by frame at 0x7fff446862e0, caller of frame at 0x7fff44686140

...

(gdb) info locals

i =

execute_data = {opline = 0x0, function_state = {function = 0x7f97c5709f40, arguments = 0x7f97c3c3a1c0}, op_array = 0x0, object = 0x0, symbol_table = 0x7f97a2b14880, ...

args = {{gp_offset = 16, fp_offset = 48, overflow_arg_area = 0x7fff44686520, reg_save_area = 0x7fff44686430}}

usr_copy = {{gp_offset = 40, fp_offset = 48, overflow_arg_area = 0x7fff44686520, reg_save_area = 0x7fff44686430}}

params = 0x7f97c6494518

error_filename = 0x7f97a6152318 "/var/www/vhosts/drupal.hotelgiftbox.com/htdocs/sites/all/modules/contrib/mailmime/mailmime.inc"

(gdb) info args

fci = 0x7fff44686280

fci_cache = <optimized out>

The last line, info args, tells you which arguments were passed to this call. The info locals tells you which local variables were in use. You can inspect both, so for the info args we can look at the 'fci' or 'fci_cache' variables, for info locals we can look at 'execute_data', 'i' etc.

First, check which data type they are.

(gdb) ptype i

type = unsigned int

(gdb) ptype execute_data

type = struct _zend_execute_data {

struct _zend_op *opline;

zend_function_state function_state;

zend_op_array *op_array;

zval *object;

HashTable *symbol_table;

struct _zend_execute_data *prev_execute_data;

zval *old_error_reporting;

zend_bool nested;

zval **original_return_value;

zend_class_entry *current_scope;

zend_class_entry *current_called_scope;

zval *current_this;

struct _zend_op *fast_ret;

zval *delayed_exception;

call_slot *call_slots;

call_slot *call;

}

This tells us that 'i' is an unsigned integer and that 'execute_data' is a struct. To see the value of each variable, print it.

(gdb) print i

$1 = 1

These commands get you a long way: you can investigate each frame, show the arguments and local values, and inspect the value of each variable.

It's time-consuming, tedious work, but it can get you to the fix.


====

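How to Debug a PHP Core: Getting Basic Information

- Original article: http://www.laruence.com/2011/06/23/2057.html

- Please credit the source when reposting

I have long wanted to write this series, but whenever I think about how broad the topic is, I feel unable to organize it.

Today I won't cover everything about debugging a PHP core file, nor explain what a coredump is. I'll pick a relatively simple angle instead: how to extract from a PHP core file the information that helps us reproduce the crash.

Along the way we'll touch on PHP function calls, PHP argument passing, and some of PHP's global variables. My earlier articles cover these topics; see "Understanding PHP Internals: Functions", "Understanding PHP Internals: Variable Scope", and so on.

First, let's generate a core file to use as an example: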

<?php

function recurse($num) {

    recurse(++$num);

}

recurse(0);

Run this PHP file:

$ php test.php

Segmentation fault (core dumped)

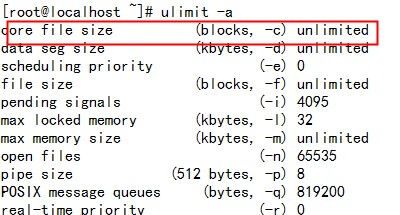

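This PHP script recurses infinitely, which overflows the stack and triggers a segmentation fault, so a coredump file is written to PHP's current working directory (if your system does not produce a coredump file, check your ulimit settings).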
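The ulimit setting in question is the core file size limit, which bash reports with `ulimit -c`; a value of 0 means no core file is written. The sketch below wraps that check in a hypothetical helper (core_dumps_enabled is an illustrative name). The optional argument exists only so the logic can be exercised without touching the real limit.

```shell
# Hypothetical helper: succeed if the core file size limit allows core dumps.
#   $1 (optional) = a limit value to check instead of the current shell's
# "ulimit -c" prints 0 when core dumps are disabled, and a block count or
# "unlimited" otherwise.
core_dumps_enabled() {
    limit=${1:-$(ulimit -c)}
    [ "$limit" != "0" ]
}

# Usage:
#   core_dumps_enabled || ulimit -c unlimited   # raise the limit for this shell
#   php test.php
```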

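Good. Now let's delete test.php and forget the code above. All we have is the core file, and the task is to find out why the core was produced and what state the process was in when it happened.

First, open the core file with gdb:

$ gdb php -c core.31656

You'll see a lot of output. Look at this part first:

Core was generated by `php test.php'.

Program terminated with signal 11, Segmentation fault.

It tells us why the core occurred: "Segmentation fault".

Generally speaking, this is the most common kind of core. Dereferencing a null pointer, a double free, a stack overflow, and so on all raise SIGSEGV, which by default produces a coredump.

Now let's look at the stack at the moment of the core: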

#0 execute (op_array=0xdc9a70) at /home/laruence/package/php-5.2.14/Zend/zend_vm_execute.h:53

53 memset(EX(CVs), 0, sizeof(zval**) * op_array->last_var);

(gdb) bt

#0 execute (op_array=0xdc9a70) at /home/laruence/package/php-5.2.14/Zend/zend_vm_execute.h:53

#1 0x00000000006ea263 in zend_do_fcall_common_helper_SPEC (execute_data=0x7fbf400210) at /home/laruence/package/php-5.2.14/Zend/zend_vm_execute.h:234

#2 0x00000000006e9f61 in execute (op_array=0xdc9a70) at /home/laruence/package/php-5.2.14/Zend/zend_vm_execute.h:92

#3 0x00000000006ea263 in zend_do_fcall_common_helper_SPEC (execute_data=0x7fbf400440) at /home/laruence/package/php-5.2.14/Zend/zend_vm_execute.h:234

#4 0x00000000006e9f61 in execute (op_array=0xdc9a70) at /home/laruence/package/php-5.2.14/Zend/zend_vm_execute.h:92

#5 0x00000000006ea263 in zend_do_fcall_common_helper_SPEC (execute_data=0x7fbf400670) at /home/laruence/package/php-5.2.14/Zend/zend_vm_execute.h:234

.....

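Keep pressing Enter and you'll see that the stack is very deep, an endless repetition of zend_do_fcall_common_helper_SPEC and execute. From this we can essentially conclude that the core was caused by extremely deep recursion overflowing the stack (it need not be strictly infinite recursion; see, for example, my earlier article on the maximum backtrack/recursion limits of regular expressions (pcre)).

OK, now let's see which PHP function the core occurred in. In PHP, for the handlers of the FCALL_* opcodes, execute_data represents the state of the current function call, and this state contains the following information: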

(gdb) f 1

#1 0x00000000006ea263 in zend_do_fcall_common_helper_SPEC (execute_data=0x7fbf400210) at /home/laruence/package/php-5.2.14/Zend/zend_vm_execute.h:234

234 zend_execute(EG(active_op_array) TSRMLS_CC);

(gdb) p execute_data->function_state.function->common->function_name

$3 = 0x2a95b65a78 "recurse"

(gdb) p execute_data->function_state.function->op_array->filename

$4 = 0x2a95b632a0 "/home/laruence/test.php"

(gdb) p execute_data->function_state.function->op_array->line_start

$5 = 2

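We now know that the PHP function being called is recurse, and that it is defined on line 2 of /home/laruence/test.php.

Checking a few more frames the same way shows that this PHP function is being called over and over.

Note that I used gdb's native print only to illustrate how the execution information is laid out. We can also use the .gdbinit file shipped with the PHP source (a gdb command script) to get the same information more easily: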

(gdb) source /home/laruence/package/php-5.2.14/.gdbinit

(gdb) zbacktrace

[0xbf400210] recurse() /home/laruence/test.php:3

[0xbf400440] recurse() /home/laruence/test.php:3

[0xbf400670] recurse() /home/laruence/test.php:3

[0xbf4008a0] recurse() /home/laruence/test.php:3

[0xbf400ad0] recurse() /home/laruence/test.php:3

[0xbf400d00] recurse() /home/laruence/test.php:3

[0xbf400f30] recurse() /home/laruence/test.php:3

[0xbf401160] recurse() /home/laruence/test.php:3

.....
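With a stack this deep, it can be easier to collapse the zbacktrace output into a count per frame, since one frame dominating the list points at recursion. The sketch below assumes the zbacktrace output has been saved to a text file (the file name in the usage comment is made up) and that each frame line starts with a bracketed address as shown above; count_frames is a hypothetical helper.

```shell
# Hypothetical helper: read zbacktrace output on stdin, drop the leading
# "[0x...]" address from each frame line, and print "<count> <frame>"
# with the most frequent frame first.
count_frames() {
    awk '/^\[0x/ { $1 = ""; sub(/^ /, ""); n[$0]++ }
         END { for (f in n) print n[f], f }' | sort -rn
}

# Usage (zbacktrace.txt is an assumed file holding the saved output):
#   count_frames < zbacktrace.txt
```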

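The .gdbinit file is a small script that defines some convenience commands for debugging PHP cores. You can open it in a text editor to see the shortcuts it defines. The ones I use most often are:

- zbacktrace

- the print_ht** family

- zmemcheck

OK, back to the topic. We now know that the problem lies in the recursive calls to the recurse function in /home/laruence/test.php.

Now, let's see what arguments the function was called with.

PHP passes arguments through a global stack, EG(argument_stack). In non-thread-safe builds EG is simply executor_globals, which holds a great deal of execution state. argument_stack is the argument-passing stack; it holds the call arguments for each level of the PHP function call stack.

Note that the PHP function call stack (its depth) does not map one-to-one onto the backtrace gdb shows, so the arguments cannot be matched directly against gdb's backtrace either and must be analyzed separately: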

// First, check how many arguments the last function call had

(gdb) p (int )*(executor_globals->argument_stack->top_element - 2)

$13 = 1

// Next, see what the argument of the last function call was

(gdb) p **(zval **)(executor_globals->argument_stack->top_element - 3)

$2 = {value = {lval = 22445, dval = 1.1089303420906779e-319, str = {val = 0x57ad <address 0x57ad out of bounds>, len = 7}, ht = 0x57ad, obj = {handle = 22445, handlers = 0x7}},

refcount = 2, type = 1 '\001', is_ref = 0 '\0'}

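So the argument of the last call was an integer with the value 22445.

At this point we have the PHP-level information about the moment the core occurred. It can now be handed to the responsible PHP developer to work out why this argument could lead to such deep recursion, and to fix the problem.

Postscript: debugging a PHP core takes a lot of experience. The example I presented today may be too simple, but if you keep challenging yourself and dig to the bottom of whatever you don't understand, I believe everyone can eventually become a PHP core killer.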

Comments

Guy Paddock Sunday, December 14, 2014 at 20:32 -

So… I think you and I are troubleshooting a similar issue with PHP-FPM, Memcache, and New Relic, judging from the date of your post and the fact that your post was exactly what I needed to get a coredump that looks eerily like mine.

Mattias Geniar Sunday, December 14, 2014 at 20:34 -

Haha, that’s very possible – this was indeed the result of a New Relic backtrace combined with Memcached. :-)

Guy Paddock Sunday, December 14, 2014 at 20:51 -

Were you able to find a workaround, or are you waiting on NR support like I am?

Mattias Geniar Sunday, December 14, 2014 at 20:54 -

It hasn’t occurred since, actually. Since finding the core-dumps, I installed a NR update (somewhere around end of October 2014) for the PHP module. I’m waiting for new core dumps since then.

If it happens again, I’ll open a support case, but for now I’m unclear what the actual reason was. The release notes of the PHP module don’t show any fix for a SEGFAULT, but I’m just hoping this got quietly fixed: https://docs.newrelic.com/docs/release-notes/agent-release-notes/php-release-notes

If you do hear from them, please let me know? I’d be interested!

Guy Paddock Sunday, December 14, 2014 at 20:59 -

Hmm, well, we’re using 4.15.0.74 (“zanabazar”) and only seeing it on one out of several sites using Couchbase / Memcache on the same server.

There’s definitely something that triggers the behavior, but we haven’t figured out what it is.

Guy Paddock Monday, December 15, 2014 at 02:23 -

While waiting for New Relic support, I traced this issue to an issue filed against libmemcached 1.0.16 relating to a “double-free” of memory that appears to cause this type of problem:

https://bugs.launchpad.net/bugs/1126601

The fix appears to be in 1.0.17, but the Atomic Repo doesn’t have anything past 1.0.16.

In the mean time, I’ve rolled this package:

https://github.com/GuyPaddock/express-tech/tree/master/centos/libmemcached-1.0.18

Pat Monday, July 27, 2015 at 09:20 -

Thanks!!!! You saved me. I had a low-level issue that had my head scratching and your tutorial solved it.