Integrated Systems Practice: Assignment 7

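(1) Installing the OpenCV library on the Raspberry Pi

Installing OpenCV 4.1.2 on a Raspberry Pi 4B

Building OpenCV from source is time-consuming and requires many dependencies and prerequisites.

① Expand the file system

On a fresh Raspbian Stretch installation, first expand the file system so that it covers all available space on the micro-SD card: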

sudo raspi-config

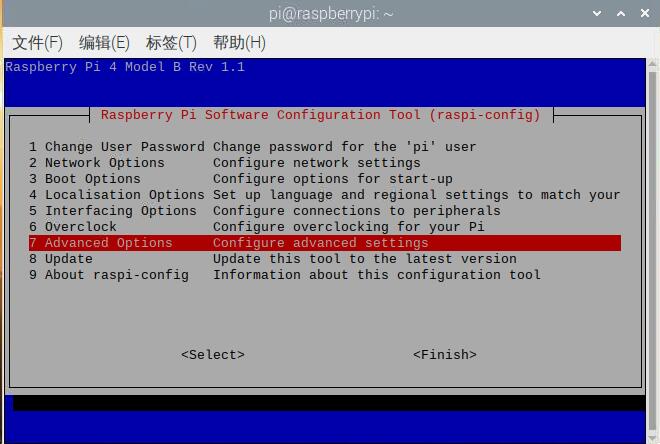

然后选择“高级选项”菜单项:

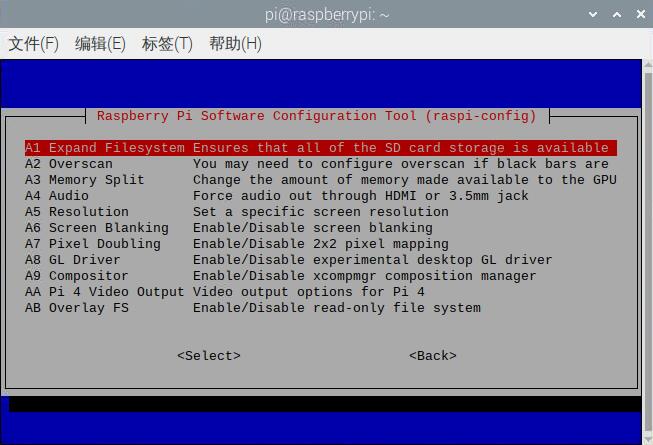

接下来选择“扩展文件系统”:

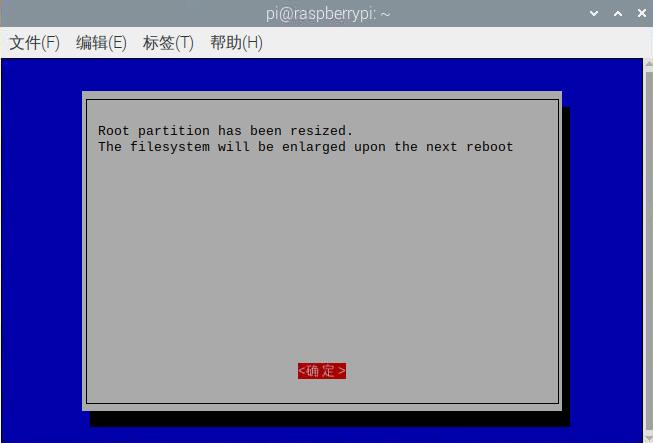

回车确定

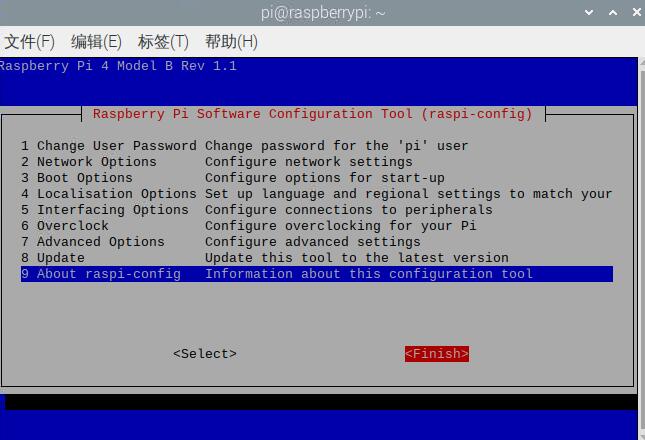

选择finish

然后重新启动Pi

sudo reboot

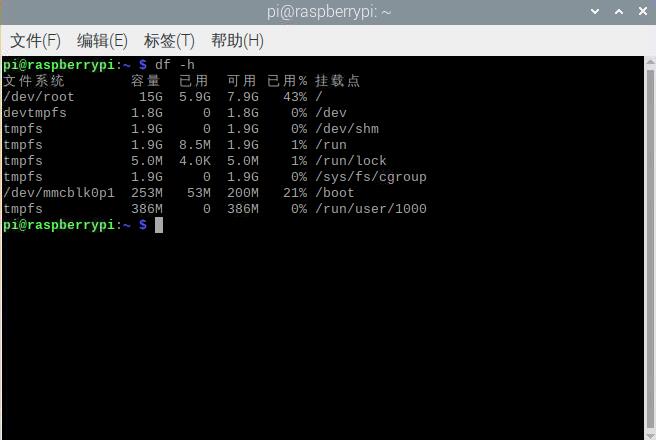

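After rebooting, the file system should have been expanded to include all available space on the micro-SD card. You can verify this by running the command below and examining its output:

df -h

The Raspbian file system now spans the entire 16GB micro-SD card.

However, even with the file system expanded, 43% of the 16GB card is already in use.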
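The same check can be scripted. The following is a minimal sketch using Python's standard `shutil.disk_usage`; the function name `usage_summary` is made up for illustration. The percentage is computed as used/total, so it may differ by a point or two from the Use% column of `df -h`, which accounts for reserved blocks.

```python
import shutil


def usage_summary(path="/"):
    """Return (total_gib, used_percent) for the file system that holds `path`."""
    usage = shutil.disk_usage(path)
    total_gib = usage.total / 1024 ** 3
    used_percent = 100 * usage.used / usage.total
    return total_gib, used_percent


if __name__ == "__main__":
    total_gib, used_percent = usage_summary("/")
    print(f"Root file system: {total_gib:.1f} GiB total, {used_percent:.0f}% used")
```

If the reported total is far below the size of the card, the file system has probably not been expanded yet.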

A simple way to free up some space on the Pi is to remove LibreOffice and the Wolfram Engine:

sudo apt-get purge wolfram-engine

sudo apt-get purge libreoffice*

sudo apt-get clean

sudo apt-get autoremove

Removing the Wolfram Engine and LibreOffice frees nearly 1GB.

② Install dependencies

# Update and upgrade any existing packages

sudo apt-get update && sudo apt-get upgrade

# Install developer tools, including CMake, which help us configure the OpenCV build

sudo apt-get install build-essential cmake pkg-config

# Image I/O packages, which let us load various image file formats from disk, such as JPEG, PNG, and TIFF

sudo apt-get install libjpeg-dev libtiff5-dev libjasper-dev libpng12-dev

# Video I/O packages, which let us read various video file formats from disk and work directly with video streams

sudo apt-get install libavcodec-dev libavformat-dev libswscale-dev libv4l-dev

sudo apt-get install libxvidcore-dev libx264-dev

# OpenCV ships with a submodule named highgui, used to display images on screen and build basic GUIs. To compile the highgui module, we need the GTK development libraries

sudo apt-get install libgtk2.0-dev libgtk-3-dev

# Many operations inside OpenCV (namely matrix operations) can be optimized further by installing a few extra dependencies

sudo apt-get install libatlas-base-dev gfortran

# Install the Python 2.7 and Python 3 header files so that we can compile OpenCV with Python bindings

sudo apt-get install python2.7-dev python3-dev

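On a freshly installed operating system, these Python packages may already be at their newest version (the terminal output will say so).

③ Download the OpenCV source code

With the dependencies installed, grab the 4.1.2 archive of OpenCV from the official OpenCV repository.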

cd ~

wget -O opencv.zip https://github.com/Itseez/opencv/archive/4.1.2.zip

unzip opencv.zip

We want the full install of OpenCV 4.1.2 (with access to features such as SIFT and SURF), so we also need to grab the opencv_contrib repository.

wget -O opencv_contrib.zip https://github.com/Itseez/opencv_contrib/archive/4.1.2.zip

unzip opencv_contrib.zip

Note: make sure the opencv and opencv_contrib versions are the same.

If the version numbers do not match, you may run into compile-time or runtime errors.
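As a quick guard before configuring the build, the version can be read from the names of the two unpacked directories. This is a minimal sketch that assumes the archives unpack to `opencv-4.1.2` and `opencv_contrib-4.1.2`, as the later build steps suggest; the helper names are hypothetical.

```python
import re


def archive_version(dirname):
    """Extract a version such as '4.1.2' from a name like 'opencv-4.1.2'."""
    match = re.search(r"-(\d+\.\d+\.\d+)$", dirname)
    return match.group(1) if match else None


def versions_match(opencv_dir, contrib_dir):
    """True only if both names carry a version and the versions are identical."""
    opencv_version = archive_version(opencv_dir)
    contrib_version = archive_version(contrib_dir)
    return opencv_version is not None and opencv_version == contrib_version


if __name__ == "__main__":
    print(versions_match("opencv-4.1.2", "opencv_contrib-4.1.2"))
```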

④ Python 2.7 or Python 3

Before we start compiling OpenCV on the Raspberry Pi 4B, we first need to install pip, the Python package manager:

wget https://bootstrap.pypa.io/get-pip.py

sudo python get-pip.py

sudo python3 get-pip.py

You may get a message saying that pip is already up to date when issuing these commands, but it is best not to skip this step.

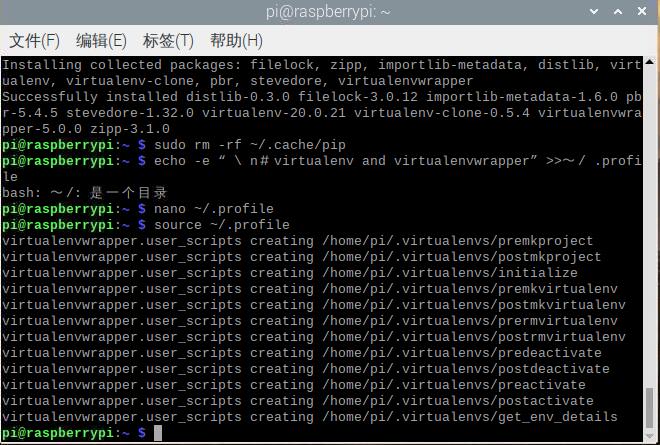

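Next, install virtualenv and virtualenvwrapper.

First, it is important to understand that a virtual environment is a tool that keeps the dependencies of different projects apart by creating an isolated, independent Python environment for each of them.

In short, it solves the "Project X depends on version 1.x, but Project Y needs 4.x" dilemma.

Install the Python virtual environment tools: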

sudo pip install virtualenv virtualenvwrapper

sudo rm -rf ~/.cache/pip

Edit ~/.profile and append the following:

# virtualenv and virtualenvwrapper

export WORKON_HOME=$HOME/.virtualenvs

export VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3

source /usr/local/bin/virtualenvwrapper.sh

Apply the changes:

source ~/.profile

Create the virtual environment with Python 3:

mkvirtualenv cv -p python3

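Once the virtual environment has been created, all subsequent steps are carried out inside it. As the tutorial stresses, always check whether the command prompt is prefixed with (cv); that is how you tell whether you are inside the virtual environment.

To re-enter the virtual environment, run:

source ~/.profile

workon cv

Reminder: all subsequent steps take place inside the virtual environment.

Install NumPy: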

pip install numpy

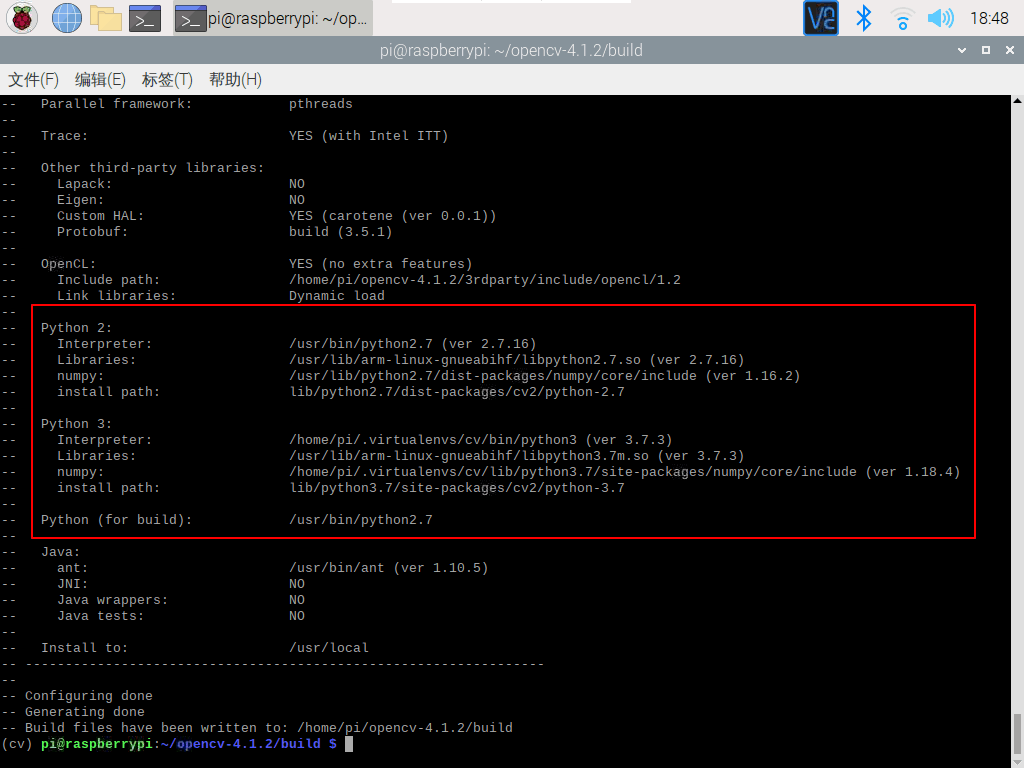

⑤ Compile OpenCV

cd ~/opencv-4.1.2/

mkdir build

cd build

cmake -D CMAKE_BUILD_TYPE=RELEASE \
    -D CMAKE_INSTALL_PREFIX=/usr/local \
    -D INSTALL_PYTHON_EXAMPLES=ON \
    -D OPENCV_EXTRA_MODULES_PATH=~/opencv_contrib-4.1.2/modules \
    -D BUILD_EXAMPLES=ON ..

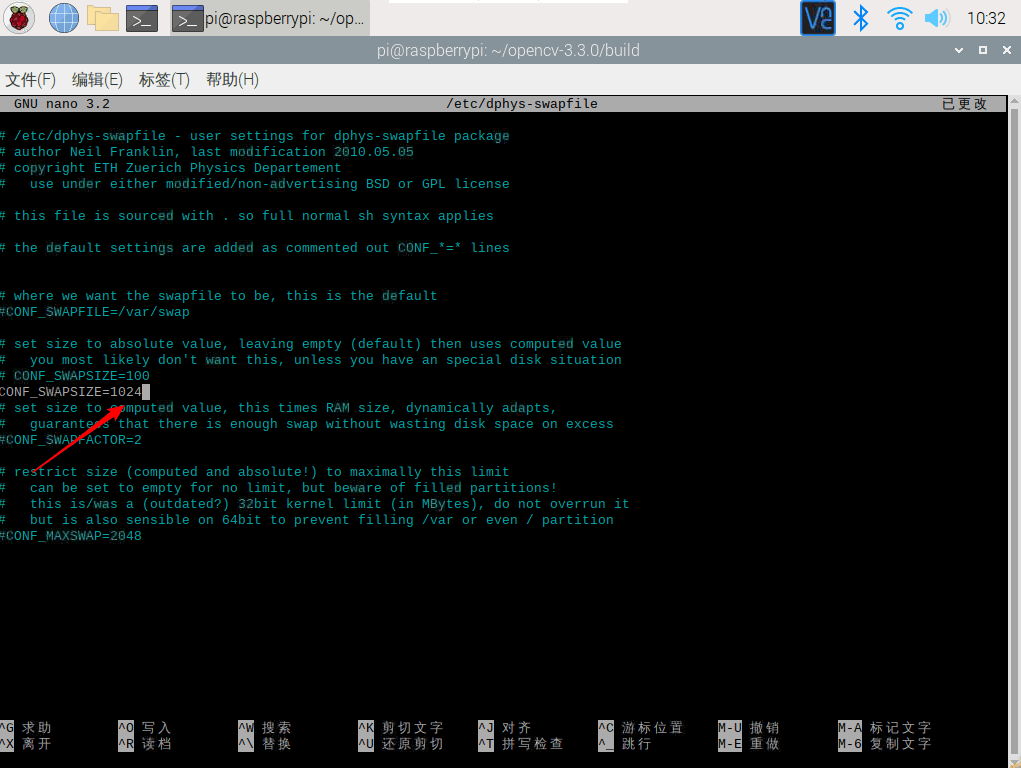

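Configure the swap size before compiling

Before starting the build, increase the swap size. This lets OpenCV compile using all four cores of the Raspberry Pi without the build hanging because memory runs out.

Increase the swap size by setting CONF_SWAPSIZE=1024 in /etc/dphys-swapfile, then restart the swap service:

# sudo is required to edit this file, even inside the virtual environment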

sudo nano /etc/dphys-swapfile

sudo /etc/init.d/dphys-swapfile stop

sudo /etc/init.d/dphys-swapfile start
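The edit made in nano amounts to changing a single line of the configuration file. The sketch below shows that change as a pure text transformation; `set_swapsize` is a hypothetical helper, it only handles an uncommented `CONF_SWAPSIZE=` line, and actually writing the result back to /etc/dphys-swapfile would still require root.

```python
import re


def set_swapsize(config_text, size_mb):
    """Return config_text with CONF_SWAPSIZE set to size_mb (in MB)."""
    new_line = f"CONF_SWAPSIZE={size_mb}"
    pattern = re.compile(r"^[ \t]*CONF_SWAPSIZE=.*$", re.MULTILINE)
    if pattern.search(config_text):
        # Replace the first existing setting in place.
        return pattern.sub(new_line, config_text, count=1)
    # No existing setting: append one at the end of the file.
    return config_text.rstrip("\n") + "\n" + new_line + "\n"


if __name__ == "__main__":
    sample = "# size in MB\nCONF_SWAPSIZE=100\n"
    print(set_swapsize(sample, 1024))
```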

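# Start the build (a little over an hour if all goes well; possibly a whole day if you hit many pitfalls)

make

The build took a long time and did not go smoothly: we ran into a number of errors and re-flashed our backup system image several times, but every problem was solved in the end. The problems and their fixes are described in (5).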

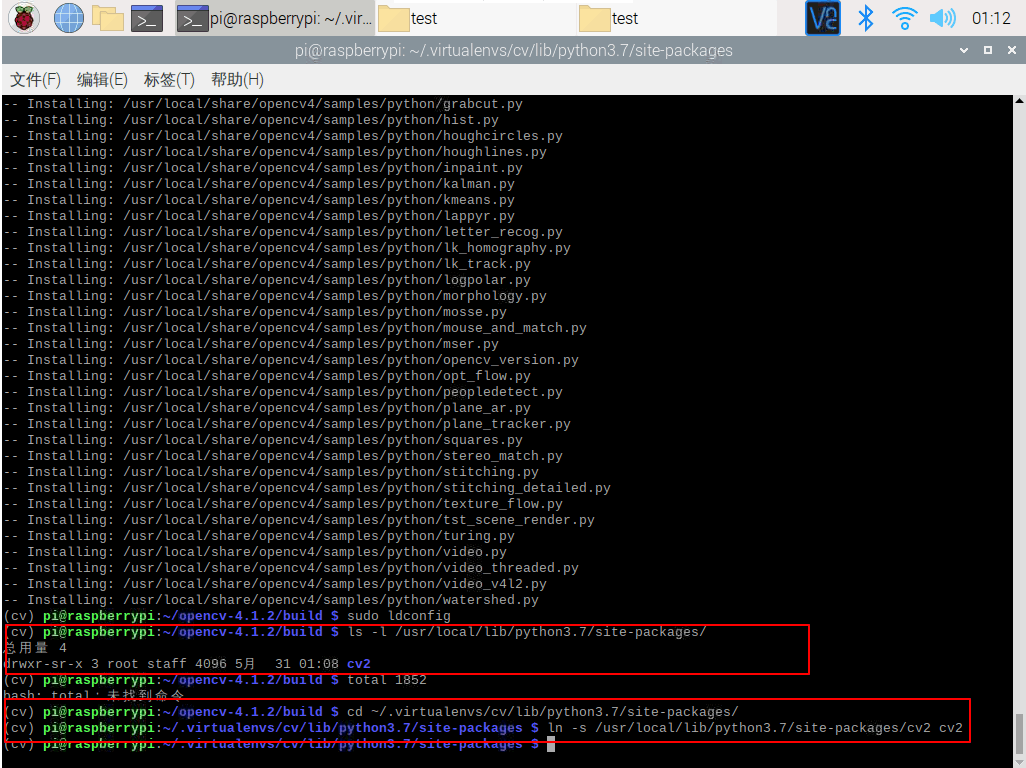

⑥ Install OpenCV

sudo make install

sudo ldconfig

Check where OpenCV was installed, then link it into the virtual environment:

ls -l /usr/local/lib/python3.7/site-packages/

cd ~/.virtualenvs/cv/lib/python3.7/site-packages/

ln -s /usr/local/lib/python3.7/site-packages/cv2 cv2

Verify the installation:

source ~/.profile

workon cv

python

import cv2

cv2.__version__
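The interactive check can also be wrapped in a small script that fails gracefully when the symlink is wrong. This is a sketch; `opencv_report` is a hypothetical name, and testing for the `xfeatures2d` attribute is only a rough indication that the opencv_contrib modules were built.

```python
import importlib


def opencv_report():
    """Return a one-line report about the cv2 module visible to this interpreter."""
    try:
        cv2 = importlib.import_module("cv2")
    except ImportError as exc:
        return f"cv2 is not importable: {exc}"
    has_contrib = hasattr(cv2, "xfeatures2d")
    return f"OpenCV {cv2.__version__} (contrib modules present: {has_contrib})"


if __name__ == "__main__":
    print(opencv_report())
```

Run it inside the cv environment; if it reports that cv2 is not importable, recheck the symlink created in the site-packages directory.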

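For more on compiling and installing OpenCV, see:

- Adrian Rosebrock, Raspbian Stretch: Install OpenCV 3 + Python on your Raspberry Pi

- Accessing the picamera camera module with OpenCV on a Raspberry Pi 4B

- Raspbian Stretch: Install OpenCV 3 + Python on your Raspberry Pi (Chinese-language version)

- A roundup of OpenCV installation errors

(2) Controlling the Raspberry Pi camera with OpenCV and Python

Install picamera: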

source ~/.profile

workon cv

pip install "picamera[array]"

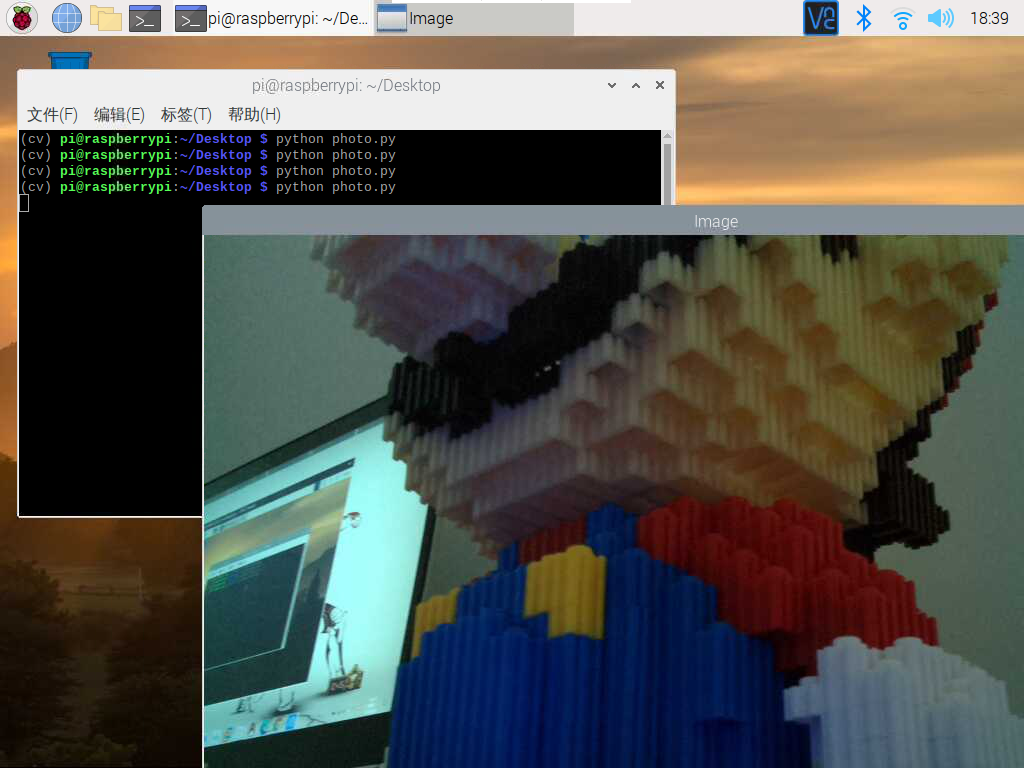

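Capture test

Following the tutorial's sample code, we verified that Python can control the camera to take a photo. Increasing the sleep time in the sample code gives the camera longer to adjust its exposure, which produces a better picture.

Sample code: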

# import the necessary packages

from picamera.array import PiRGBArray

from picamera import PiCamera

import time

import cv2

# initialize the camera and grab a reference to the raw camera capture

camera = PiCamera()

rawCapture = PiRGBArray(camera)

# allow the camera to warmup

# changed from 0.1 to 5 here

time.sleep(5)

# grab an image from the camera

camera.capture(rawCapture, format="bgr")

image = rawCapture.array

# display the image on screen and wait for a keypress

cv2.imshow("Image", image)

cv2.waitKey(0)

- 参考教程:还是可以参考Adrian Rosebrock的Accessing the Raspberry Pi Camera with OpenCV and Python跑通教程的示例代码(有可能要调整里面的参数)

(3)利用树莓派的摄像头实现人脸识别

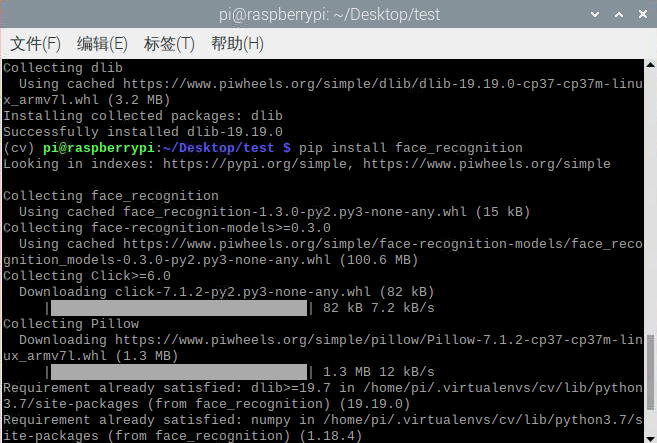

安装依赖库dlib,face_recognition

在命令行输入:

source ~/.profile

workon cv

pip install dlib

pip install face_recognition

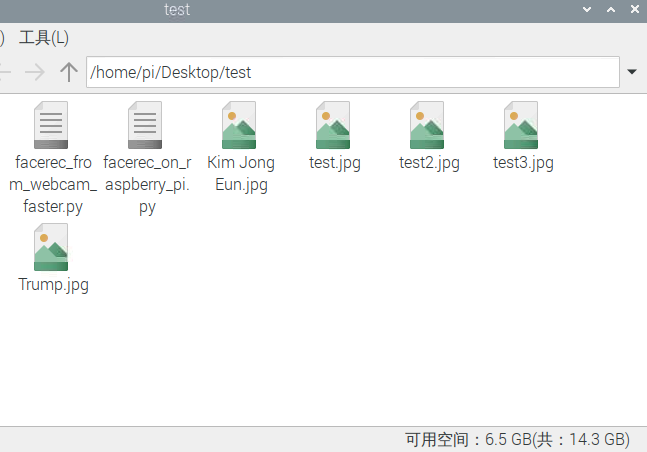

Change to the directory that holds the images to load and the Python code.

① facerec_on_raspberry_pi.py

Sample code:

# This is a demo of running face recognition on a Raspberry Pi.

# This program will print out the names of anyone it recognizes to the console.

# To run this, you need a Raspberry Pi 2 (or greater) with face_recognition and

# the picamera[array] module installed.

# You can follow this installation instructions to get your RPi set up:

# https://gist.github.com/ageitgey/1ac8dbe8572f3f533df6269dab35df65

import face_recognition

import picamera

import numpy as np

# Get a reference to the Raspberry Pi camera.

# If this fails, make sure you have a camera connected to the RPi and that you

# enabled your camera in raspi-config and rebooted first.

camera = picamera.PiCamera()

camera.resolution = (320, 240)

output = np.empty((240, 320, 3), dtype=np.uint8)

# Load a sample picture and learn how to recognize it.
print("Loading known face image(s)")
image = face_recognition.load_image_file("test.jpg")
# Keep the known encoding under its own name so the loop variable below cannot overwrite it
known_face_encoding = face_recognition.face_encodings(image)[0]

# Initialize some variables
face_locations = []
face_encodings = []

while True:
    print("Capturing image.")
    # Grab a single frame of video from the RPi camera as a numpy array
    camera.capture(output, format="rgb")

    # Find all the faces and face encodings in the current frame of video
    face_locations = face_recognition.face_locations(output)
    print("Found {} faces in image.".format(len(face_locations)))
    face_encodings = face_recognition.face_encodings(output, face_locations)

    # Loop over each face found in the frame to see if it's someone we know.
    for face_encoding in face_encodings:
        # See if the face is a match for the known face(s)
        match = face_recognition.compare_faces([known_face_encoding], face_encoding)
        name = "<Unknown Person>"

        if match[0]:
            name = "Trump"

        print("I see someone named {}!".format(name))

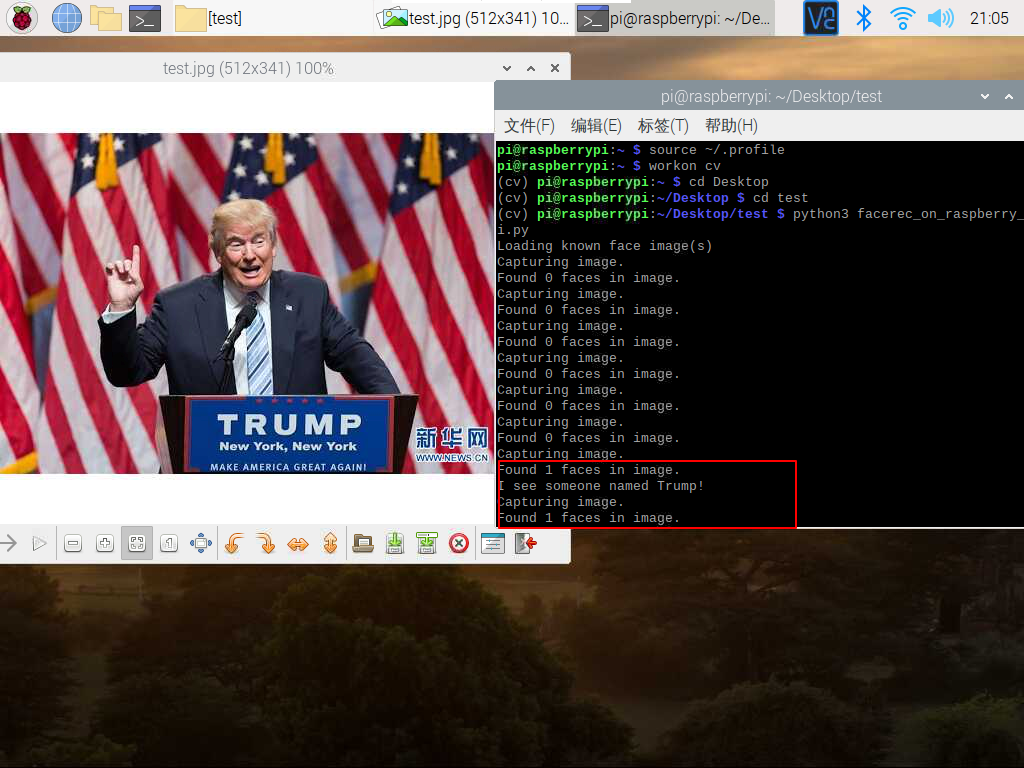

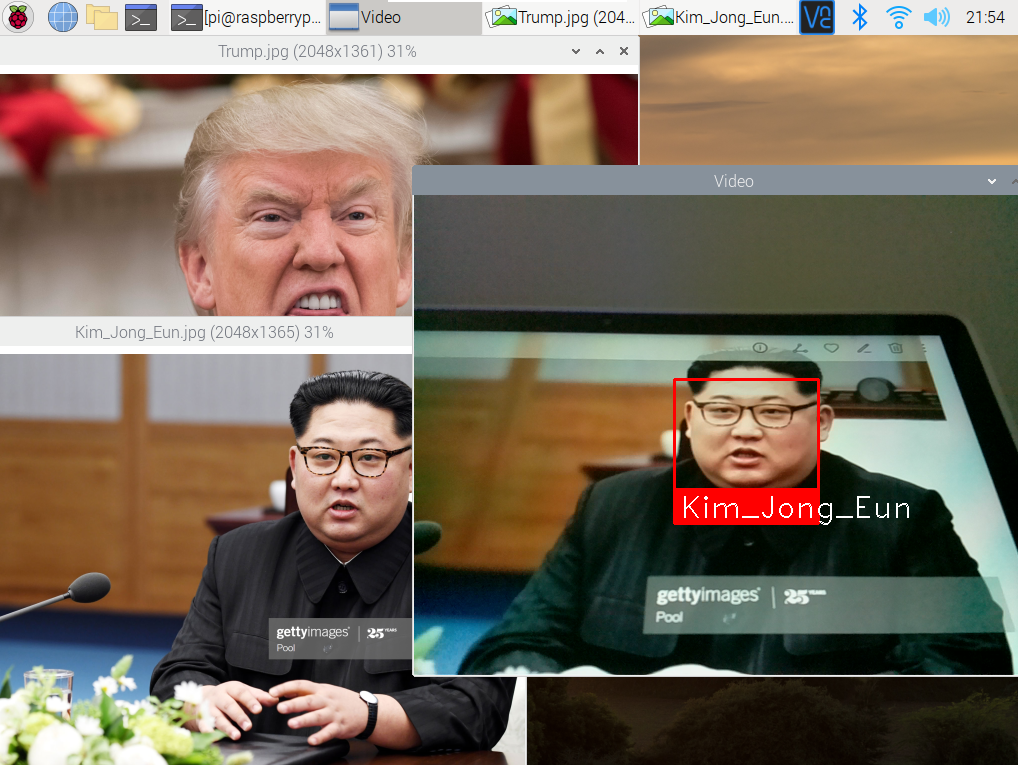

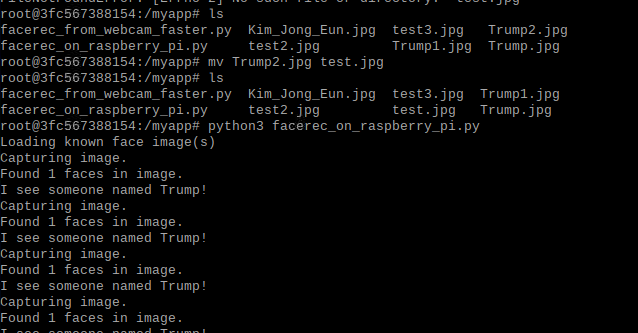

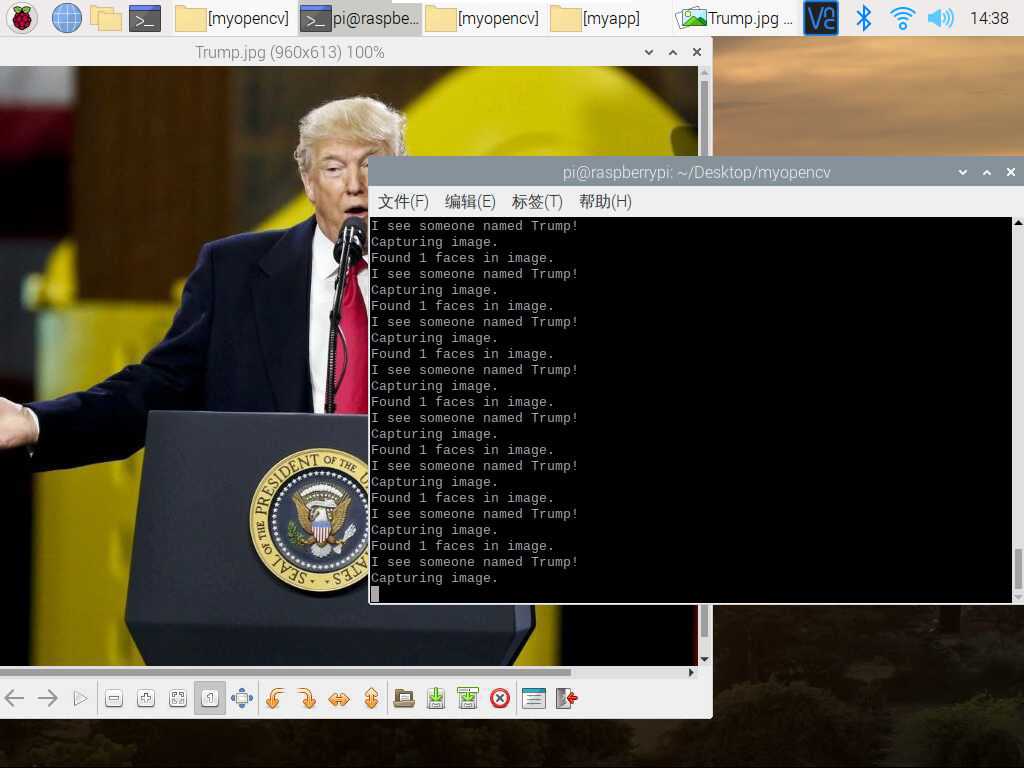

At first the camera was not pointed at the photo; once the photo moved into the camera's field of view, recognition succeeded.
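One detail of the script that is easy to trip over is the relation between `camera.resolution = (320, 240)` and the buffer shape `(240, 320, 3)`: the resolution is given as (width, height), while the NumPy buffer is (height, width, channels). The sketch below computes the buffer shape for a given resolution. It assumes the rounding behaviour described in the picamera documentation for unencoded captures (width rounded up to a multiple of 32, height to a multiple of 16); `raw_buffer_shape` is a hypothetical helper, not part of picamera.

```python
def raw_buffer_shape(width, height, channels=3):
    """Shape of the array needed for an unencoded capture at width x height.

    Assumption: the camera pads the width up to a multiple of 32 and the
    height up to a multiple of 16, as the picamera documentation describes.
    """
    padded_width = (width + 31) // 32 * 32
    padded_height = (height + 15) // 16 * 16
    return (padded_height, padded_width, channels)


if __name__ == "__main__":
    print(raw_buffer_shape(320, 240))
    print(raw_buffer_shape(100, 100))
```

At 320x240 no padding is needed, which is why the script can allocate exactly `(240, 320, 3)`; other resolutions may need a larger buffer that is cropped afterwards.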

-

test.jpg用于上传转换格式提取特征值保存

-

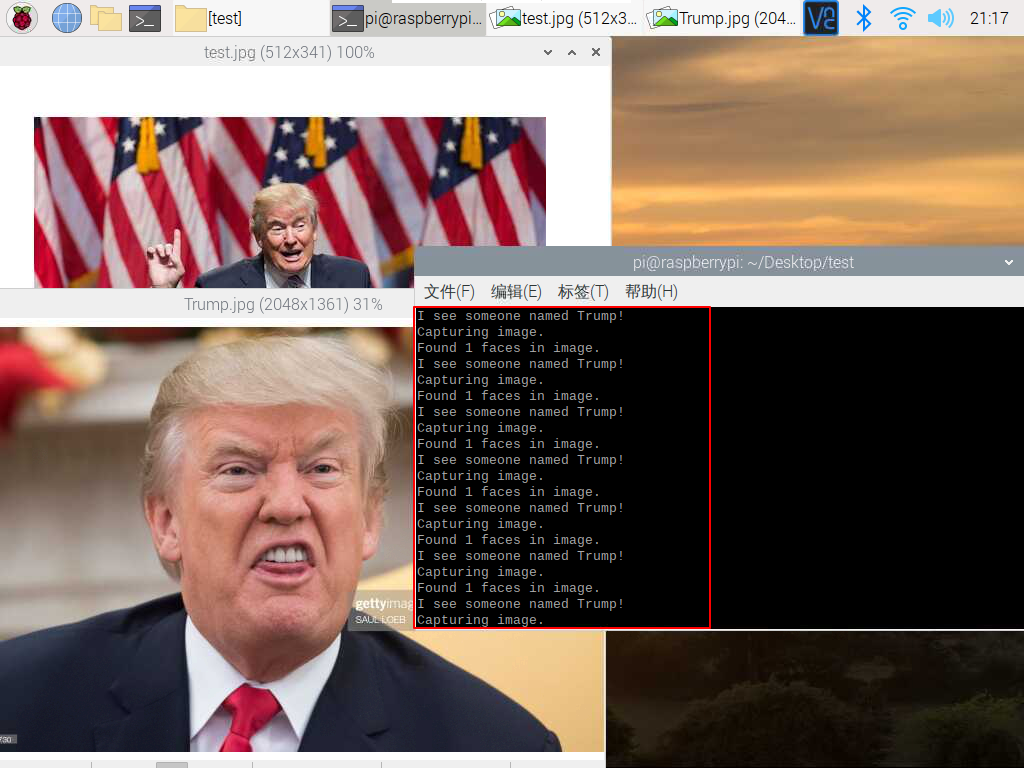

Trump.jpg用于测试是否准确识别出Trump

-

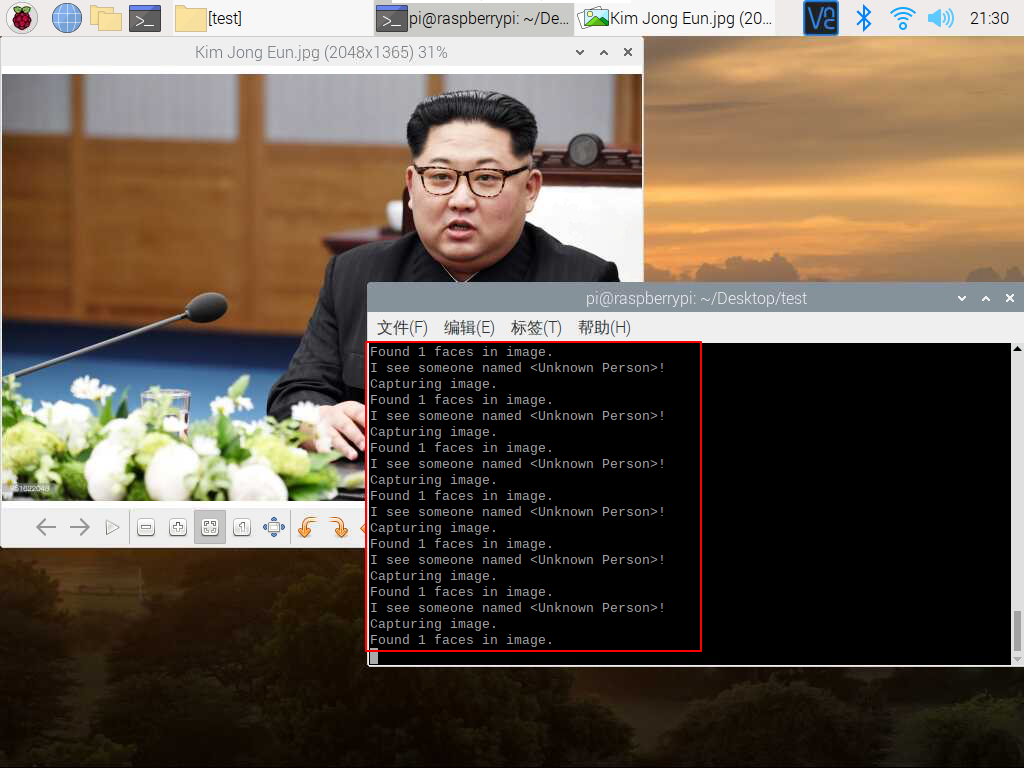

Kim Jong Eun.jpg用于测试是否准确识别出Unknown Person

测试test2.jpg并查看是否准确识别Trump

当照片切换到Kim Jong Eun.jpg,可以看到识别出一张人脸而且是Unknown Person

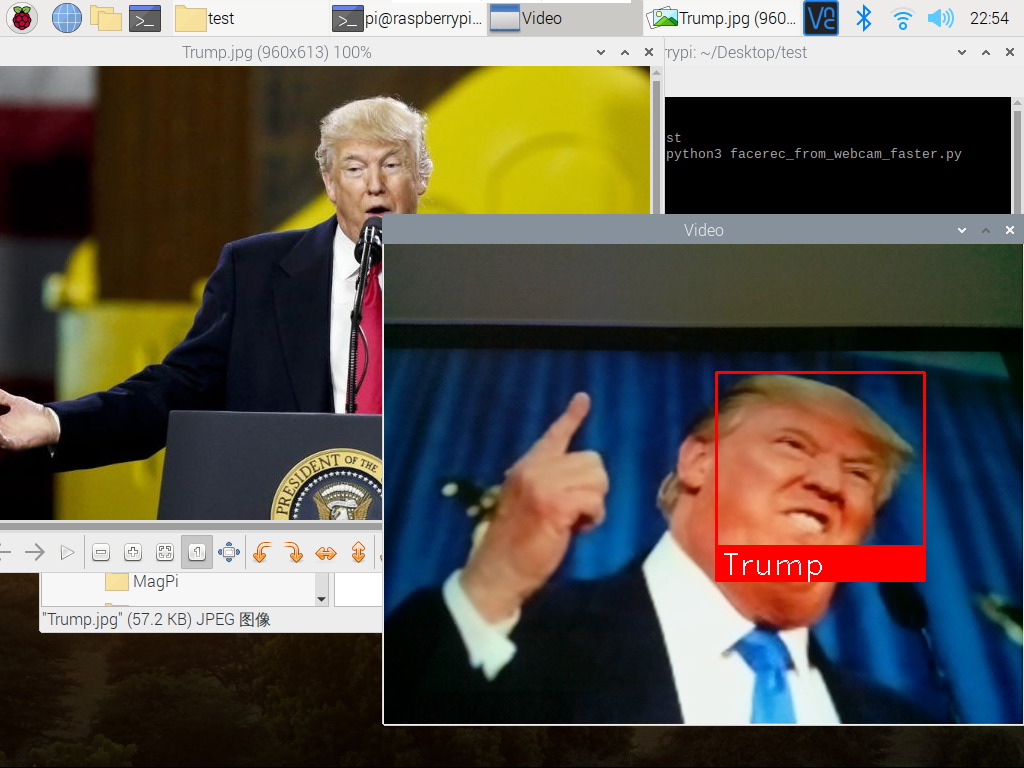

② facerec_from_webcam_faster.py

Sample code:

import face_recognition

import cv2

import numpy as np

# This is a demo of running face recognition on live video from your webcam. It's a little more complicated than the

# other example, but it includes some basic performance tweaks to make things run a lot faster:

# 1. Process each video frame at 1/4 resolution (though still display it at full resolution)

# 2. Only detect faces in every other frame of video.

# PLEASE NOTE: This example requires OpenCV (the `cv2` library) to be installed only to read from your webcam.

# OpenCV is *not* required to use the face_recognition library. It's only required if you want to run this

# specific demo. If you have trouble installing it, try any of the other demos that don't require it instead.

# Get a reference to webcam #0 (the default one)

video_capture = cv2.VideoCapture(0)

# Load a sample picture and learn how to recognize it.

Trump_image = face_recognition.load_image_file("Trump.jpg")

Trump_face_encoding = face_recognition.face_encodings(Trump_image)[0]

# Load a second sample picture and learn how to recognize it.

Kim_Jong_Eunimage = face_recognition.load_image_file("Kim_Jong_Eun.jpg")

Kim_Jong_Eunface_encoding = face_recognition.face_encodings(Kim_Jong_Eunimage)[0]

# Create arrays of known face encodings and their names

known_face_encodings = [

Trump_face_encoding,

Kim_Jong_Eunface_encoding

]

known_face_names = [

"Trump",

"Kim_Jong_Eun"

]

# Initialize some variables

face_locations = []

face_encodings = []

face_names = []

process_this_frame = True

while True:
    # Grab a single frame of video
    ret, frame = video_capture.read()

    # Resize frame of video to 1/4 size for faster face recognition processing
    small_frame = cv2.resize(frame, (0, 0), fx=0.25, fy=0.25)

    # Convert the image from BGR color (which OpenCV uses) to RGB color (which face_recognition uses)
    rgb_small_frame = small_frame[:, :, ::-1]

    # Only process every other frame of video to save time
    if process_this_frame:
        # Find all the faces and face encodings in the current frame of video
        face_locations = face_recognition.face_locations(rgb_small_frame)
        face_encodings = face_recognition.face_encodings(rgb_small_frame, face_locations)

        face_names = []
        for face_encoding in face_encodings:
            # See if the face is a match for the known face(s)
            matches = face_recognition.compare_faces(known_face_encodings, face_encoding)
            name = "Unknown"

            # # If a match was found in known_face_encodings, just use the first one.
            # if True in matches:
            #     first_match_index = matches.index(True)
            #     name = known_face_names[first_match_index]

            # Or instead, use the known face with the smallest distance to the new face
            face_distances = face_recognition.face_distance(known_face_encodings, face_encoding)
            best_match_index = np.argmin(face_distances)
            if matches[best_match_index]:
                name = known_face_names[best_match_index]

            face_names.append(name)

    process_this_frame = not process_this_frame

    # Display the results
    for (top, right, bottom, left), name in zip(face_locations, face_names):
        # Scale back up face locations since the frame we detected in was scaled to 1/4 size
        top *= 4
        right *= 4
        bottom *= 4
        left *= 4

        # Draw a box around the face
        cv2.rectangle(frame, (left, top), (right, bottom), (0, 0, 255), 2)

        # Draw a label with a name below the face
        cv2.rectangle(frame, (left, bottom - 35), (right, bottom), (0, 0, 255), cv2.FILLED)
        font = cv2.FONT_HERSHEY_DUPLEX
        cv2.putText(frame, name, (left + 6, bottom - 6), font, 1.0, (255, 255, 255), 1)

    # Display the resulting image
    cv2.imshow('Video', frame)

    # Hit 'q' on the keyboard to quit!
    if cv2.waitKey(1) & 0xFF == ord('q'):
        break

# Release handle to the webcam
video_capture.release()
cv2.destroyAllWindows()

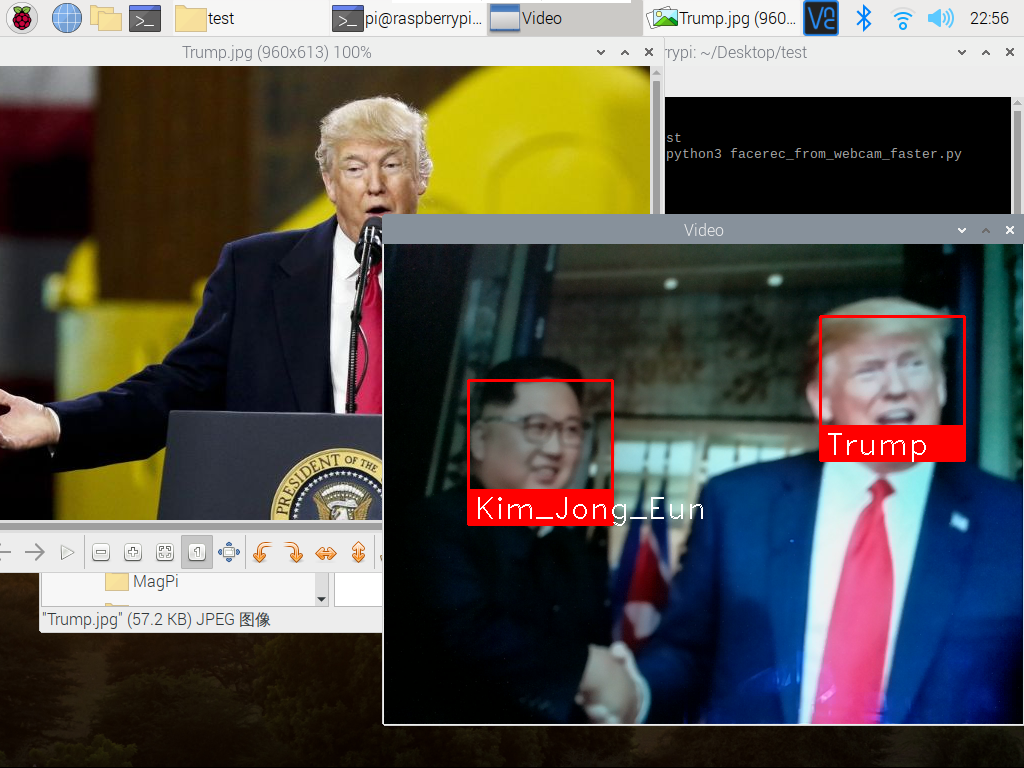

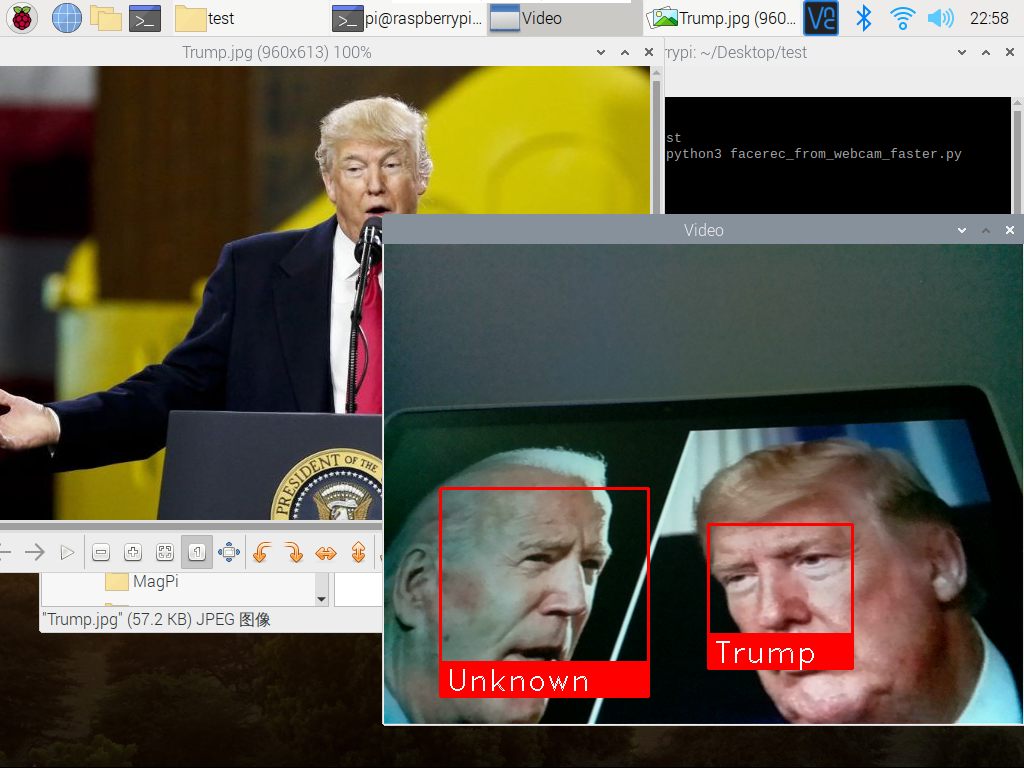

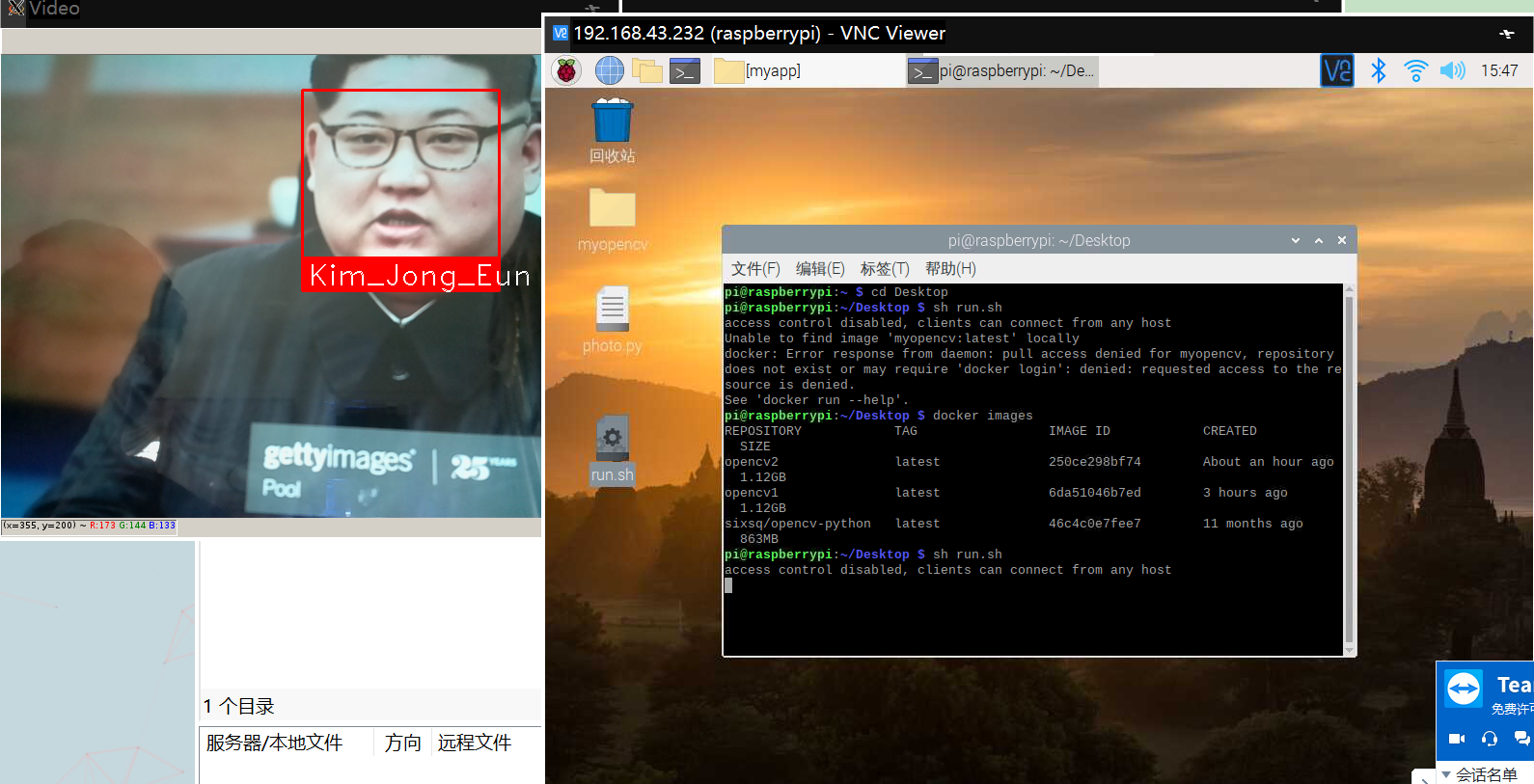

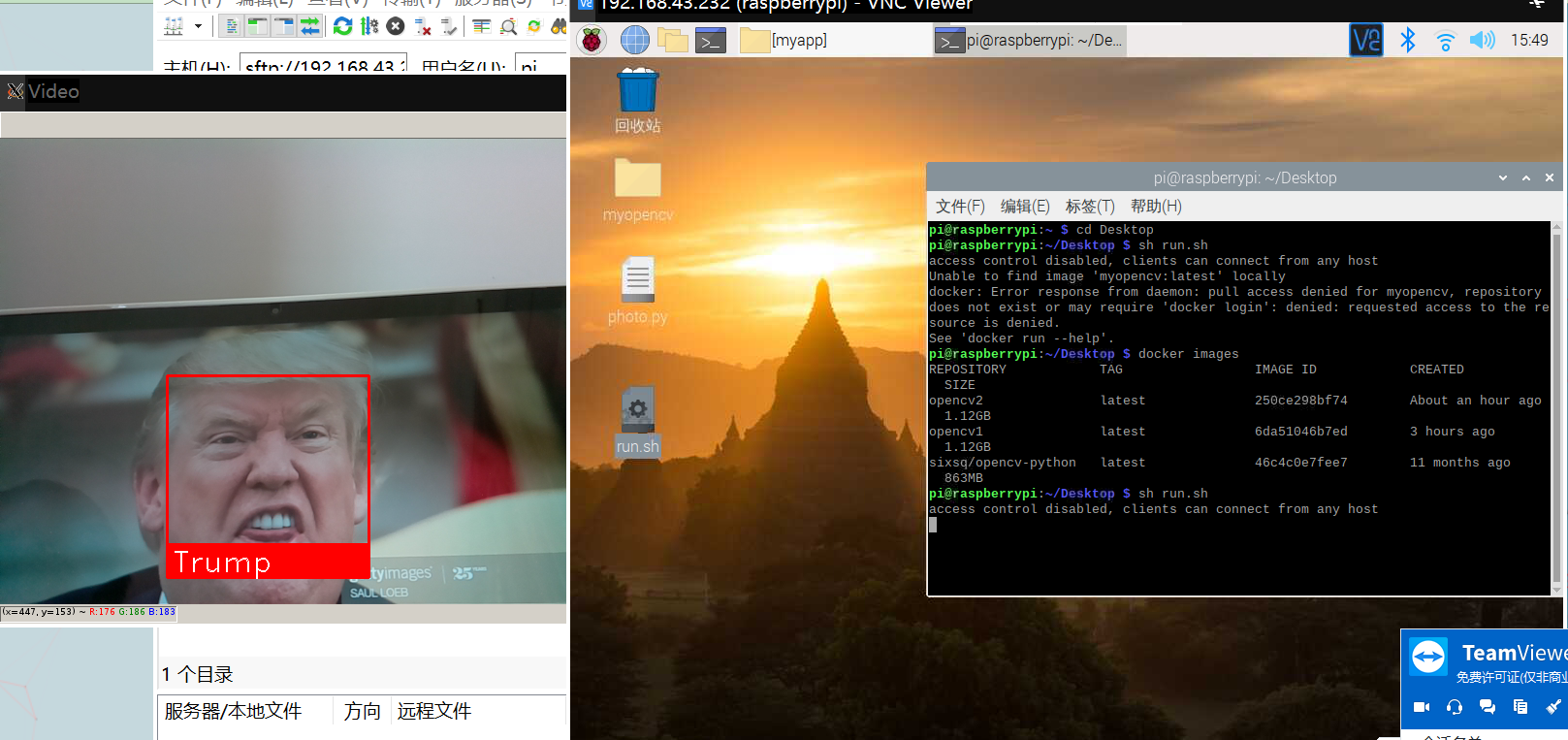

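Recognition verified.

The person on the left was never enrolled (no face encoding was extracted and stored for them), so they are labeled Unknown, which is the expected result.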
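The labeling rule in the script (pick the closest known face, but fall back to "Unknown" when even the closest one is too far away) can be illustrated without a camera. The sketch below uses plain Python lists as stand-ins for the 128-dimensional encodings. It assumes Euclidean distance and the default tolerance of 0.6 used by face_recognition's `compare_faces`; `label_face` is a hypothetical helper, not a library function.

```python
import math


def label_face(known_encodings, known_names, candidate, tolerance=0.6):
    """Return the name of the nearest known face, or "Unknown" if none is close enough."""
    distances = [
        math.sqrt(sum((a - b) ** 2 for a, b in zip(known, candidate)))
        for known in known_encodings
    ]
    if not distances:
        return "Unknown"
    best = min(range(len(distances)), key=distances.__getitem__)
    return known_names[best] if distances[best] <= tolerance else "Unknown"


if __name__ == "__main__":
    # Toy 2-D "encodings" for illustration only.
    known = [[0.0, 0.0], [1.0, 1.0]]
    names = ["Trump", "Kim_Jong_Eun"]
    print(label_face(known, names, [0.1, 0.0]))
    print(label_face(known, names, [5.0, 5.0]))
```

A face that was never enrolled has no nearby known encoding, so it falls through to "Unknown", which matches what the screenshots show.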

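- face_recognition is an open-source Python library for face recognition, and it ships with many example scripts

- Reference tutorial: Simple face recognition on the Raspberry Pi

- Requirement: get the face_recognition examples facerec_on_raspberry_pi.py and facerec_from_webcam_faster.py running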

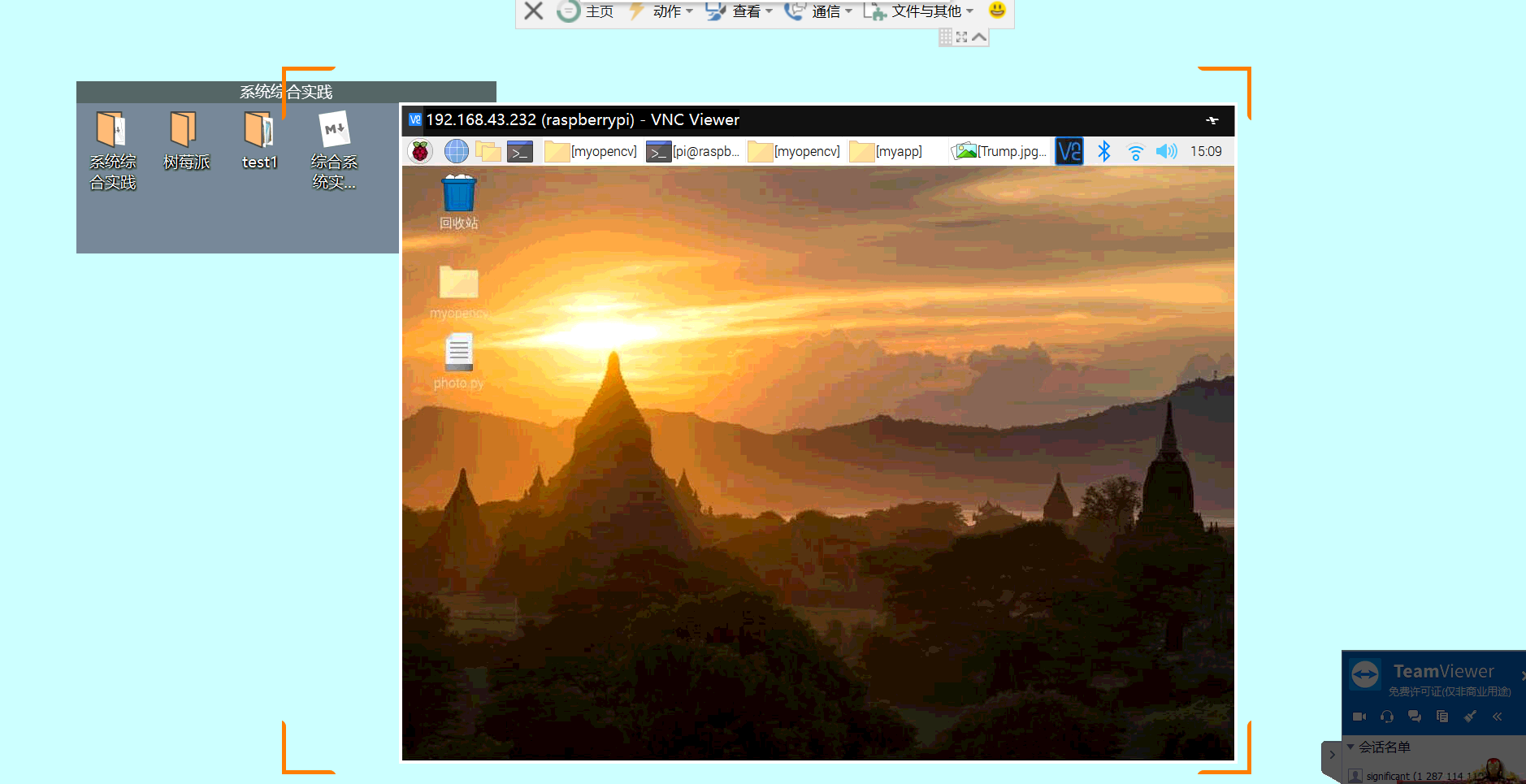

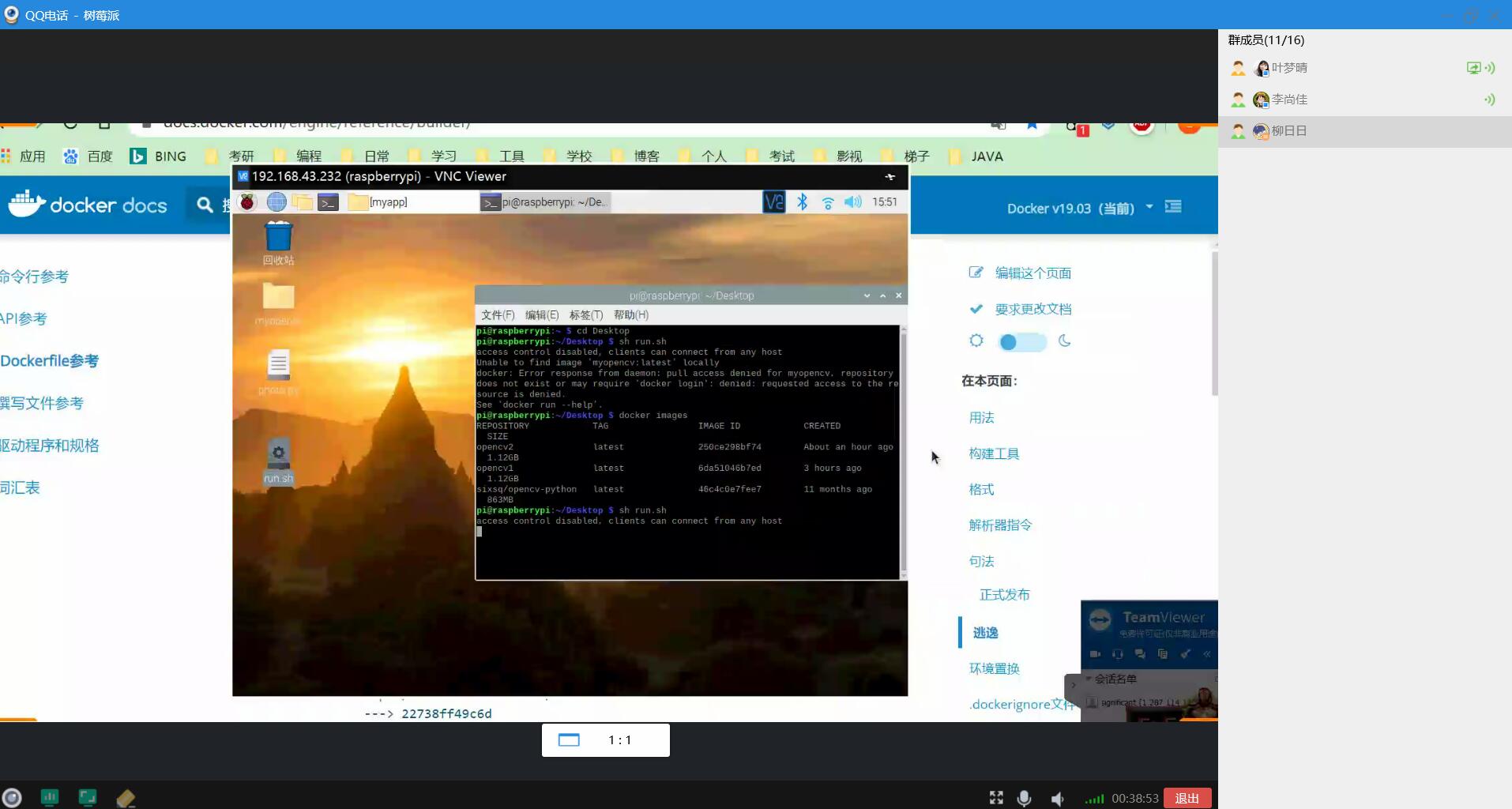

(4) Advanced task: combining with microservices

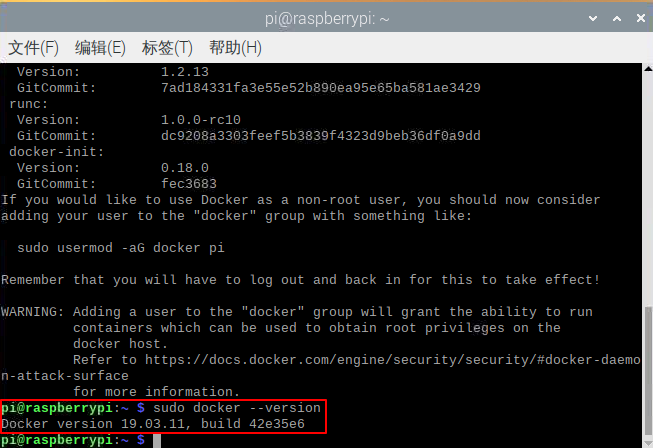

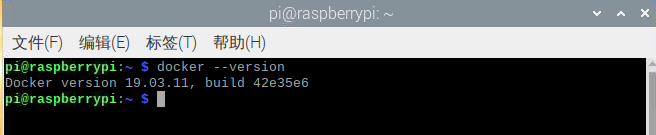

① Install Docker

Download the install script:

curl -fsSL https://get.docker.com -o get-docker.sh

Run the install script (using the Aliyun mirror):

sh get-docker.sh --mirror Aliyun

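Check the Docker version (for example, with docker --version) to verify that the installation succeeded.

Add the user to the docker group:

sudo usermod -aG docker pi

Log out and back in for the group change to take effect: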

exit

ssh pi@raspiberry

After logging back in, docker commands no longer need to be prefixed with sudo.

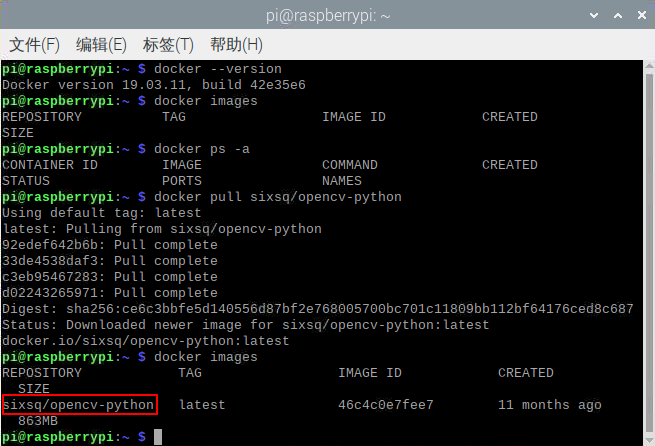

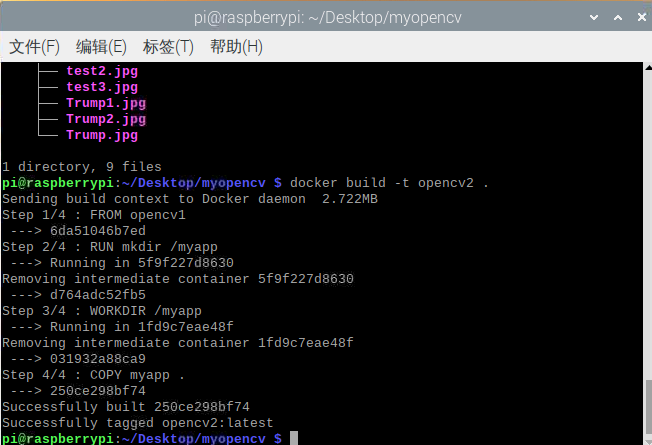

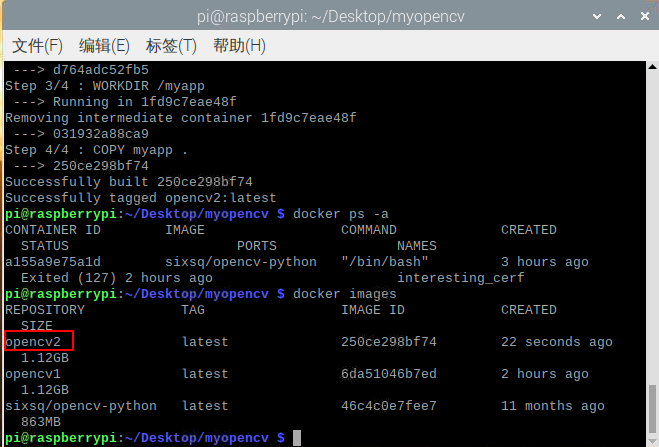

② Build a custom OpenCV image

Pull the image:

docker pull sixsq/opencv-python

Create and run a container:

docker run -it sixsq/opencv-python /bin/bash

Inside the container, use pip3 to install "picamera[array]", dlib, and face_recognition:

pip3 install "picamera[array]"

pip3 install dlib

pip3 install face_recognition

exit

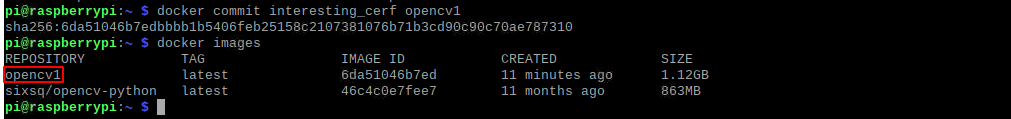

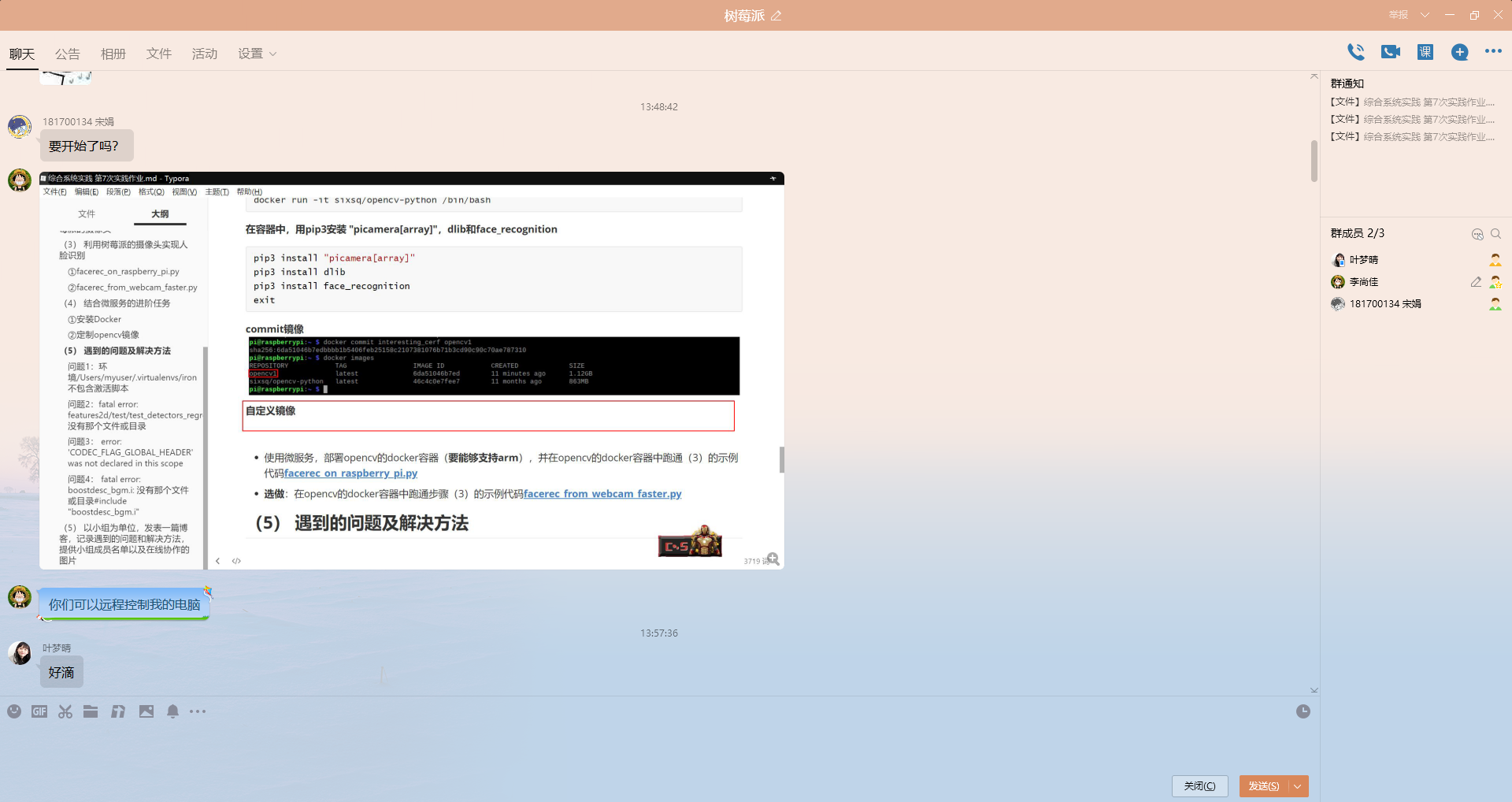

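Commit the container as an image (named opencv1 here, to match the FROM line of the Dockerfile that follows)

Customize the image

- Dockerfile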

FROM opencv1

RUN mkdir /myapp

WORKDIR /myapp

COPY myapp .

Build the image:

docker build -t opencv2 .

List the images:

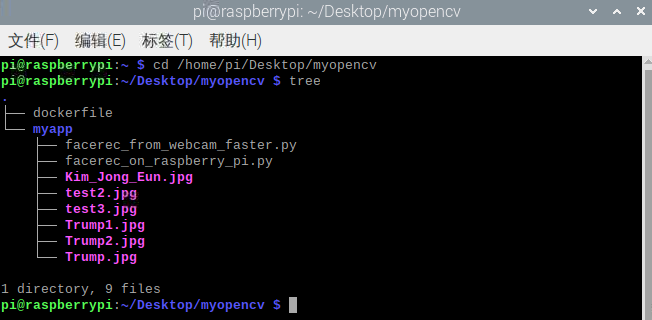

③ Run a container and execute facerec_on_raspberry_pi.py

docker run -it --device=/dev/vchiq --device=/dev/video0 --name myopencv opencv2

python3 facerec_on_raspberry_pi.py

④选做:在opencv的docker容器中跑通步骤(3)的示例代码facerec_from_webcam_faster.py

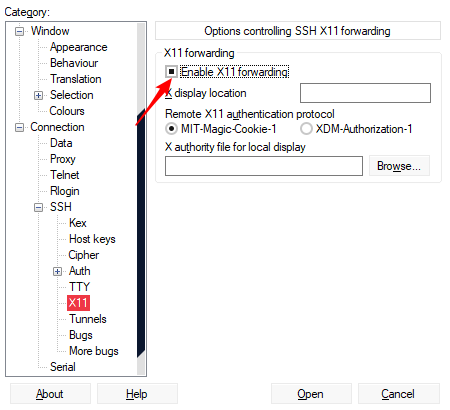

在Windows系统中安装Xming

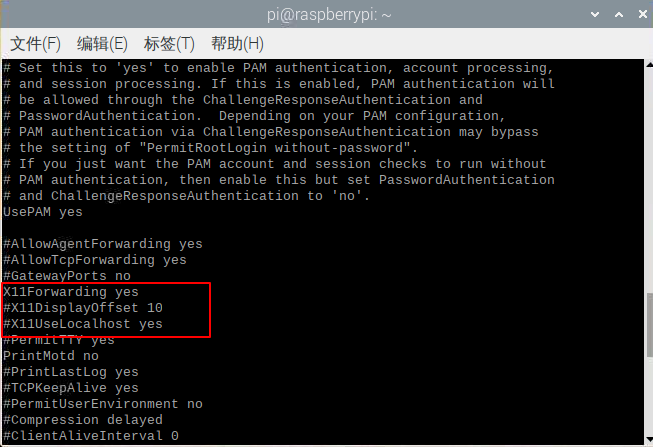

开启树莓派的ssh配置中的X11

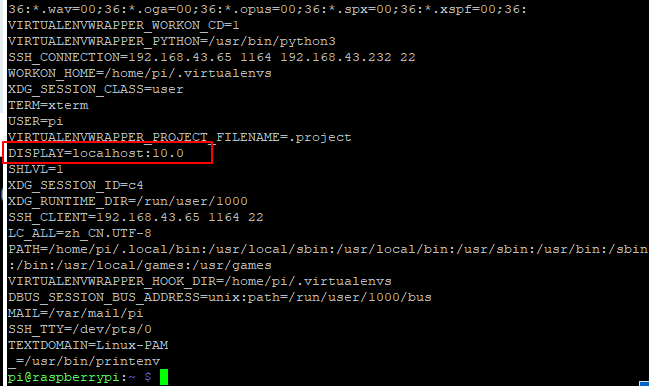

查看DISPLAY环境变量值

printenv

编写run.sh

#sudo apt-get install x11-xserver-utils
xhost +
docker run -it \
    --net=host \
    -v $HOME/.Xauthority:/root/.Xauthority \
    -e DISPLAY=:10.0 \
    -e QT_X11_NO_MITSHM=1 \
    --device=/dev/vchiq \
    --device=/dev/video0 \
    --name facerecgui \
    opencv2 \
    python3 facerec_from_webcam_faster.py

Open a terminal and run run.sh:

sh run.sh

The video window displayed by the X server on Windows shows faces being recognized correctly.
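The value `:10.0` hard-coded in run.sh depends on the SSH session, so it is worth reading it from the environment rather than guessing. The sketch below splits a DISPLAY value of the usual `host:display.screen` form into its parts and builds the matching `-e` option for `docker run`. The helper names are hypothetical, and the pattern deliberately does not handle IPv6 host names.

```python
import os
import re


def parse_display(value):
    """Split an X11 DISPLAY value such as 'localhost:10.0' into (host, display, screen)."""
    match = re.fullmatch(r"([^:]*):(\d+)(?:\.(\d+))?", value)
    if match is None:
        raise ValueError(f"not a DISPLAY value: {value!r}")
    host, display, screen = match.groups()
    return host, int(display), int(screen or 0)


def docker_display_option(value):
    """Build the docker run option that passes this DISPLAY value through unchanged."""
    parse_display(value)  # validate only; the value itself is forwarded as-is
    return ["-e", f"DISPLAY={value}"]


if __name__ == "__main__":
    current = os.environ.get("DISPLAY")
    if current is None:
        print("DISPLAY is not set; is X11 forwarding enabled for this SSH session?")
    else:
        print(parse_display(current), docker_display_option(current))
```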

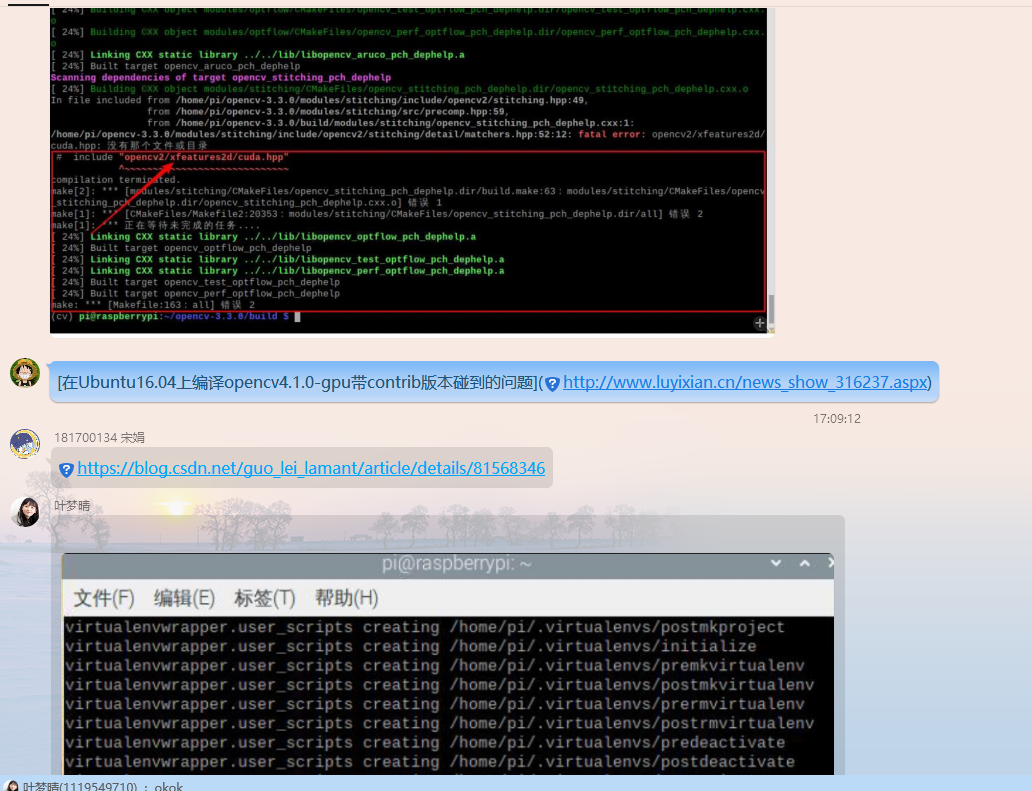

(5) Problems encountered and solutions

Problem 1: Environment /Users/myuser/.virtualenvs/iron does not contain an activation script

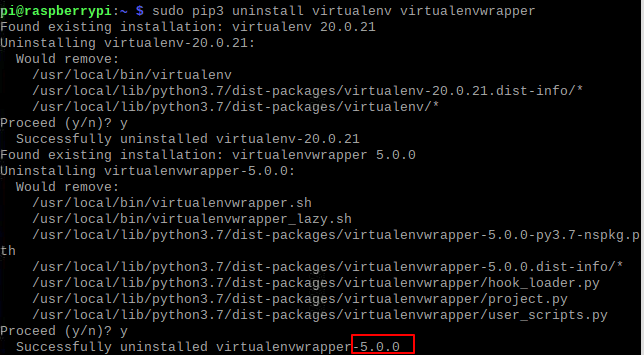

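This error, shown in the screenshot, appeared while creating the virtual environment with Python 3.

Uninstall virtualenv and virtualenvwrapper:

sudo pip3 uninstall virtualenv virtualenvwrapper

The virtualenvwrapper version I had installed turned out to be 5.0.0.

On PyPI, the latest release was still 4.8.4.

So I reinstalled both packages, pinning virtualenvwrapper to that version: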

sudo pip3 install virtualenv virtualenvwrapper=='4.8.4'

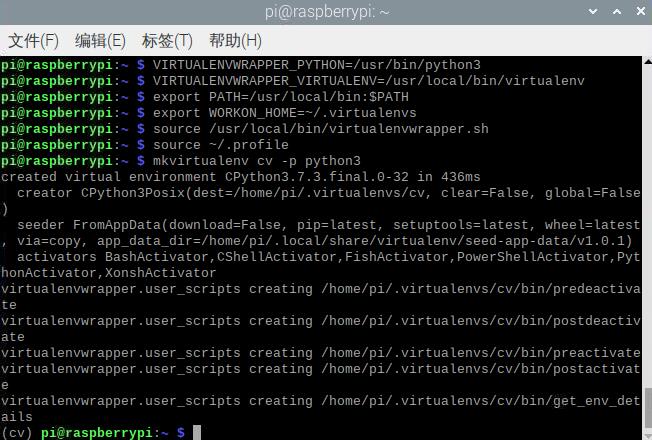

I then appended the following settings to .bashrc and sourced it:

VIRTUALENVWRAPPER_PYTHON=/usr/bin/python3

VIRTUALENVWRAPPER_VIRTUALENV=/usr/local/bin/virtualenv

export PATH=/usr/local/bin:$PATH

export WORKON_HOME=~/.virtualenvs

source /usr/local/bin/virtualenvwrapper.sh

Recreate the virtual environment with Python 3:

mkvirtualenv cv -p python3

Reference tutorials:

Error: Environment /Users/myuser/.virtualenvs/iron does not contain activation script

ERROR: Environment '/home/pi/.virtualenvs/cv' does not contain an activate script

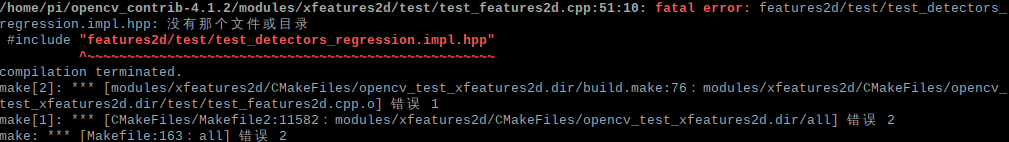

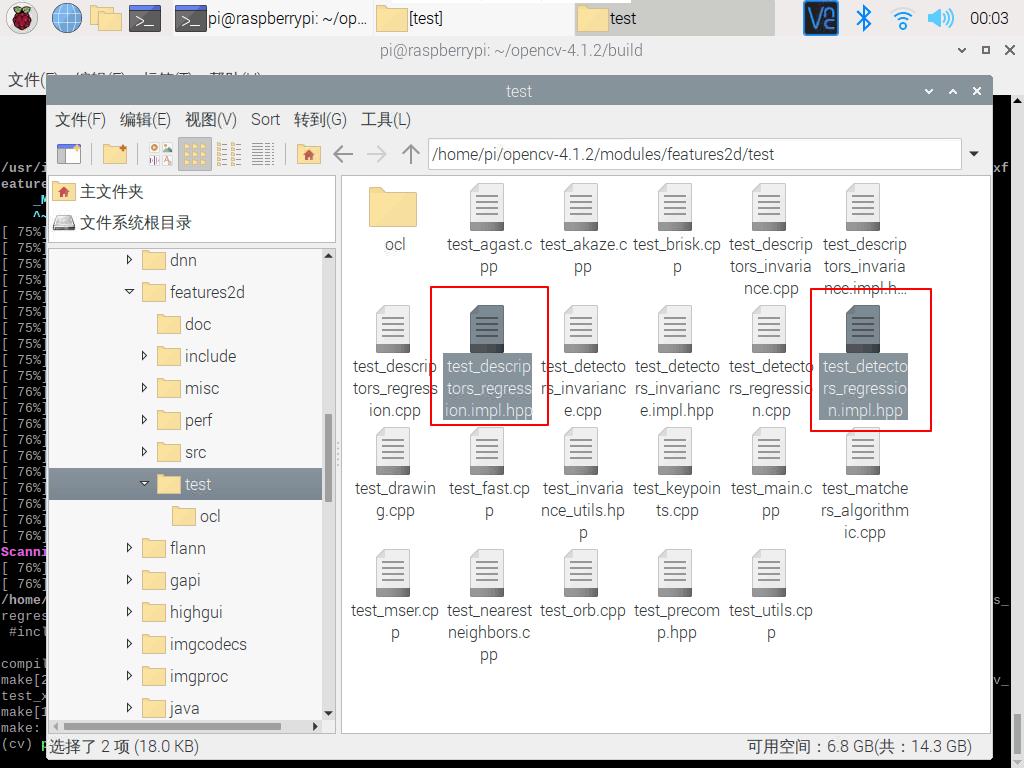

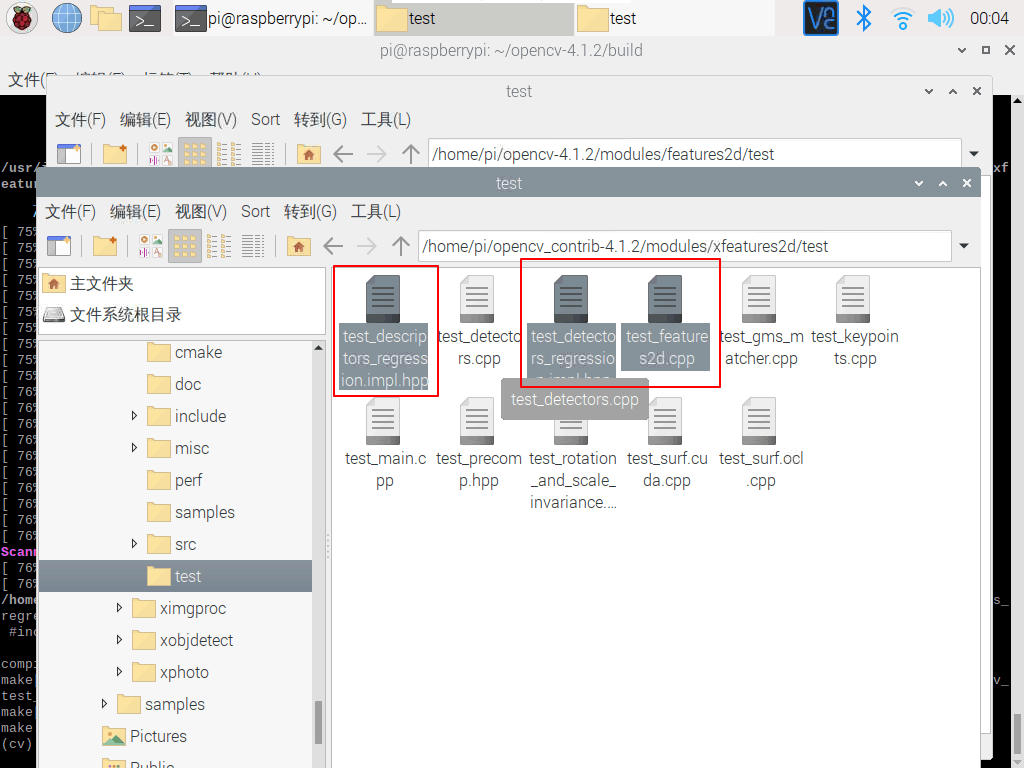

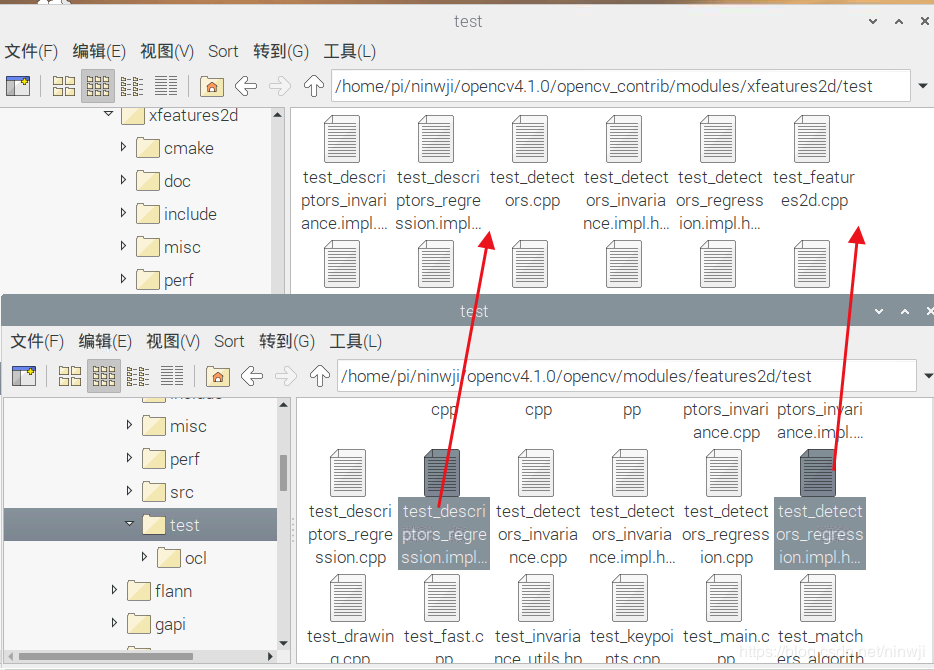

Problem 2: fatal error: features2d/test/test_detectors_regression.impl.hpp: No such file or directory

The header include paths are wrong. To fix this, copy the following files from opencv-4.1.2/modules/features2d/test/:

- test_descriptors_regression.impl.hpp

- test_detectors_regression.impl.hpp

- test_detectors_invariance.impl.hpp

- test_descriptors_invariance.impl.hpp

- test_invariance_utils.hpp

into opencv_contrib-4.1.2/modules/xfeatures2d/test/.

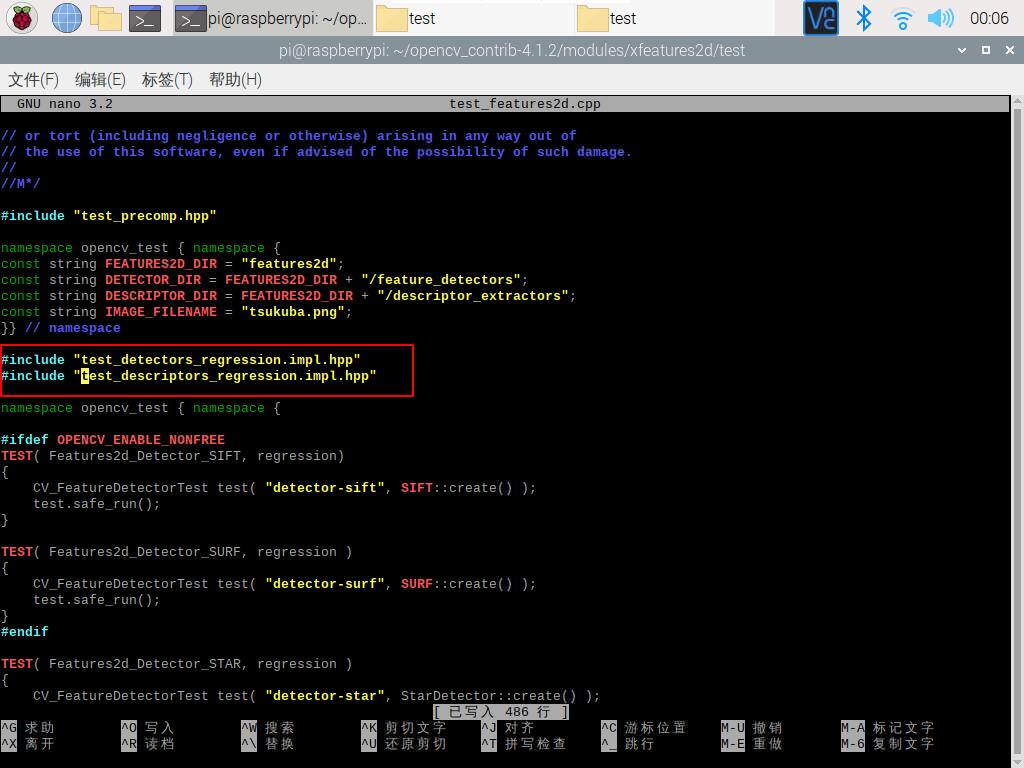

Also, in opencv_contrib-4.1.2/modules/xfeatures2d/test/test_features2d.cpp, change

#include "features2d/test/test_detectors_regression.impl.hpp"

#include "features2d/test/test_descriptors_regression.impl.hpp"

to:

#include "test_detectors_regression.impl.hpp"

#include "test_descriptors_regression.impl.hpp"

Similar errors may come up later in the build; handle them the same way.
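Since the same kind of include may show up in more than one test file, the rewrite can be expressed as a small text transformation. This is a sketch: `flatten_test_includes` is a hypothetical helper that strips only the `features2d/test/` prefix from quoted includes and leaves every other line untouched. It presumes the header files have already been copied next to the test sources.

```python
import re

# Matches lines such as: #include "features2d/test/some_header.hpp"
INCLUDE_RE = re.compile(
    r'^([ \t]*#include[ \t]+")features2d/test/([^"/]+")', re.MULTILINE
)


def flatten_test_includes(source):
    """Rewrite '#include "features2d/test/X"' to '#include "X"' in C++ source text."""
    return INCLUDE_RE.sub(r"\1\2", source)


if __name__ == "__main__":
    sample = '#include "features2d/test/test_detectors_regression.impl.hpp"\n'
    print(flatten_test_includes(sample), end="")
```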

Reference blog post:

Problems encountered when compiling opencv4.1.0-gpu with contrib on Ubuntu 16.04

Problem 3: error: 'CODEC_FLAG_GLOBAL_HEADER' was not declared in this scope

Solution:

Add the following at the very top of /home/pi/opencv-4.1.2/modules/videoio/src/cap_ffmpeg_impl.hpp:

#define AV_CODEC_FLAG_GLOBAL_HEADER (1 << 22)

#define CODEC_FLAG_GLOBAL_HEADER AV_CODEC_FLAG_GLOBAL_HEADER

#define AVFMT_RAWPICTURE 0x0020

Reference blog post:

Building OpenCV 3.2.0 from source on Ubuntu fails with error: 'CODEC_FLAG_GLOBAL_HEADER' was not declared in this scope

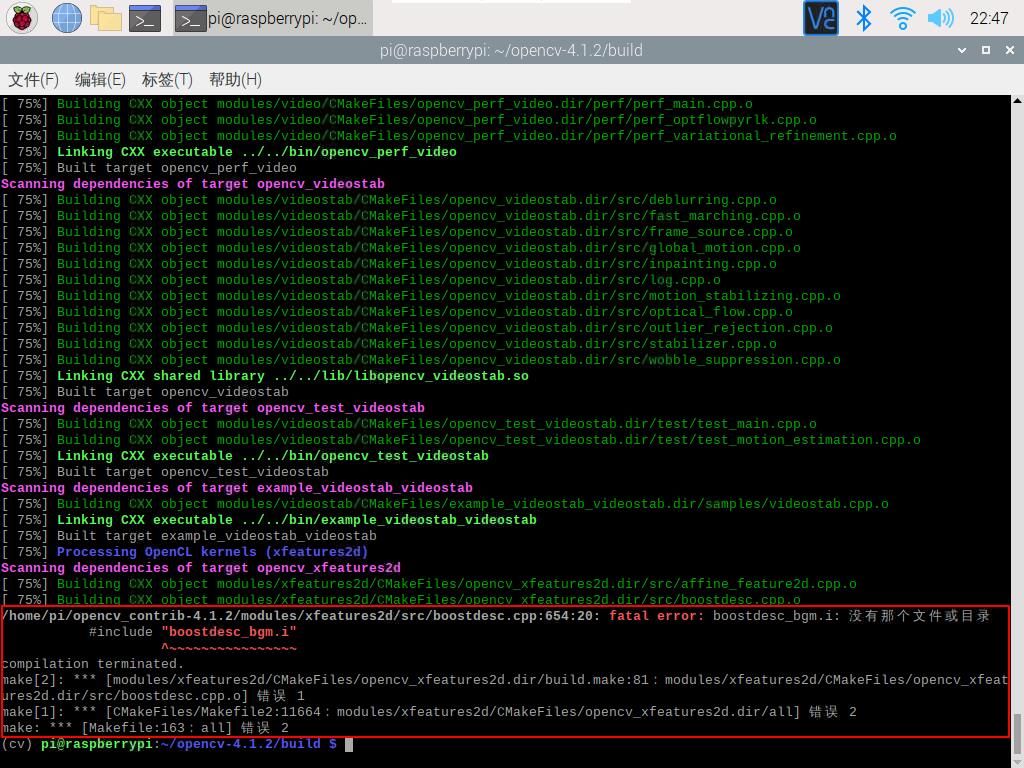

Problem 4: fatal error: boostdesc_bgm.i: No such file or directory (raised at #include "boostdesc_bgm.i")

Solution:

The following files are required:

- boostdesc_bgm.i

- boostdesc_bgm_bi.i

- boostdesc_bgm_hd.i

- boostdesc_lbgm.i

- boostdesc_binboost_064.i

- boostdesc_binboost_128.i

- boostdesc_binboost_256.i

- vgg_generated_120.i

- vgg_generated_64.i

- vgg_generated_80.i

- vgg_generated_48.i

Download an archive containing these files (extraction code: p50x), copy it into opencv_contrib/modules/xfeatures2d/src/, and extract it there.
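Before restarting the build, it can help to confirm that all eleven files are actually in place. The sketch below lists whichever of the required files are still missing from a directory. `missing_files` is a hypothetical helper, and the path used in the example assumes opencv_contrib was unpacked to `~/opencv_contrib-4.1.2`.

```python
import os

REQUIRED_FILES = [
    "boostdesc_bgm.i",
    "boostdesc_bgm_bi.i",
    "boostdesc_bgm_hd.i",
    "boostdesc_lbgm.i",
    "boostdesc_binboost_064.i",
    "boostdesc_binboost_128.i",
    "boostdesc_binboost_256.i",
    "vgg_generated_120.i",
    "vgg_generated_64.i",
    "vgg_generated_80.i",
    "vgg_generated_48.i",
]


def missing_files(src_dir, required=REQUIRED_FILES):
    """Return the names in `required` that do not exist as files in src_dir."""
    return [
        name for name in required
        if not os.path.isfile(os.path.join(src_dir, name))
    ]


if __name__ == "__main__":
    # Assumed location; adjust to wherever opencv_contrib was unpacked.
    src_dir = os.path.expanduser("~/opencv_contrib-4.1.2/modules/xfeatures2d/src")
    for name in missing_files(src_dir):
        print("missing:", name)
```

An empty result means every file the xfeatures2d sources include is present.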

Reference blog post:

Fixing the missing boostdesc_bgm.i file error when installing OpenCV

(6) Division of work and summary

Team members

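| Student ID | Name | Responsibilities |
|---|---|---|
| 071703428 | 叶梦晴 | Research, hands-on work, and blog writing |
| 031702444 | 李尚佳 | Hands-on work, troubleshooting, and blog writing |
| 181700134 | 宋娟 | Research and providing code |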

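Online collaboration

① We used TeamViewer to remotely control the computer of the teammate who has the Raspberry Pi and did the hands-on work that way.

② We used QQ voice calls and file transfer to share blog material and communicate.

③ Lab summary

In this lab we learned how to control the Raspberry Pi camera with OpenCV and Python, how to do face recognition with the Pi camera, and how to run face recognition inside an OpenCV Docker container. Overall there were no problems we could not solve. The hard part was building the OpenCV library: because of various errors (we hit pitfalls large and small and re-flashed the system several times), the build repeatedly failed to reach 100%, which cost a great deal of time. Online collaboration, studying the theory, and the hands-on work took about 20 hours in total, and the other minor problems are all recorded above. This lab showed us how useful and convenient the Raspberry Pi is, and we look forward to building something creative and fun next time!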