28. Sqoop: java.lang.NullPointerException

sqoop import --connect jdbc:oracle:thin:@//xxxx:1521/aps --username xxx --password 'xxxx' --query " select REPORTNO, QUERYTIME, REPORTCREATETIME, NAME, CERTTYPE, CERTNO, USERCODE, QUERYREASON, HTMLREPORT, CREATETIME , to_char(SysDate,'YYYY-MM-DD HH24:mi:ss') as ETL_IN_DT from ZXC.HHICRQUERYREQ where $CONDITIONS " --hcatalog-database BFMOBILE --hcatalog-table HHICRQUERYREQ --hcatalog-storage-stanza 'stored as ORC' --hive-delims-replacement " " -m 1

17/08/23 17:30:30 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

17/08/23 17:30:31 INFO hcat.SqoopHCatUtilities: HCatalog table partitioning key fields = []

17/08/23 17:30:31 ERROR sqoop.Sqoop: Got exception running Sqoop: java.lang.NullPointerException

java.lang.NullPointerException

at org.apache.hive.hcatalog.data.schema.HCatSchema.get(HCatSchema.java:105)

at org.apache.sqoop.mapreduce.hcat.SqoopHCatUtilities.configureHCat(SqoopHCatUtilities.java:390)

at org.apache.sqoop.mapreduce.hcat.SqoopHCatUtilities.configureImportOutputFormat(SqoopHCatUtilities.java:783)

at org.apache.sqoop.mapreduce.ImportJobBase.configureOutputFormat(ImportJobBase.java:98)

at org.apache.sqoop.mapreduce.ImportJobBase.runImport(ImportJobBase.java:259)

at org.apache.sqoop.manager.SqlManager.importQuery(SqlManager.java:729)

at org.apache.sqoop.tool.ImportTool.importTable(ImportTool.java:499)

at org.apache.sqoop.tool.ImportTool.run(ImportTool.java:608)

at org.apache.sqoop.Sqoop.run(Sqoop.java:143)

at org.apache.hadoop.util.ToolRunner.run(ToolRunner.java:70)

at org.apache.sqoop.Sqoop.runSqoop(Sqoop.java:179)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:218)

at org.apache.sqoop.Sqoop.runTool(Sqoop.java:227)

at org.apache.sqoop.Sqoop.main(Sqoop.java:236)

17/08/23 17:30:31 INFO hive.metastore: Closed a connection to metastore, current connections: 0

This NullPointerException was quite puzzling. At first I thought it was caused by "HCatalog table partitioning key fields = []", so I ran sqoop import --verbose to print the debug log:

17/08/23 17:56:52 INFO Configuration.deprecation: mapred.output.dir is deprecated. Instead, use mapreduce.output.fileoutputformat.outputdir

17/08/23 17:56:53 INFO hcat.SqoopHCatUtilities: HCatalog table partitioning key fields = []

17/08/23 17:56:53 DEBUG util.ClassLoaderStack: Restoring classloader: sun.misc.Launcher$AppClassLoader@5474c6c

17/08/23 17:56:53 DEBUG manager.OracleManager$ConnCache: Caching released connection for jdbc:oracle:thin:@//XXX/XX/XXX

17/08/23 17:56:53 ERROR sqoop.Sqoop: Got exception running Sqoop: java.lang.NullPointerException

java.lang.NullPointerException

at org.apache.hive.hcatalog.data.schema.HCatSchema.get(HCatSchema.java:105)

at org.apache.sqoop.mapreduce.hcat.SqoopHCatUtilities.configureHCat(SqoopHCatUtilities.java:390)

at org.apache.sqoop.mapreduce.hcat.SqoopHCatUtilities.configureImportOutputFo

So the failure was not at that step. Next I checked the structure of the Oracle table:

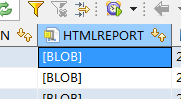

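The HTMLREPORT column turned out to be a BLOB. Changing the Sqoop query to select 'BLOB' AS HTMLREPORT still produced the same error.

Finally I searched online: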

https://community.hortonworks.com/questions/2168/javalangnullpointerexception-at-orgapachehivehcata.html

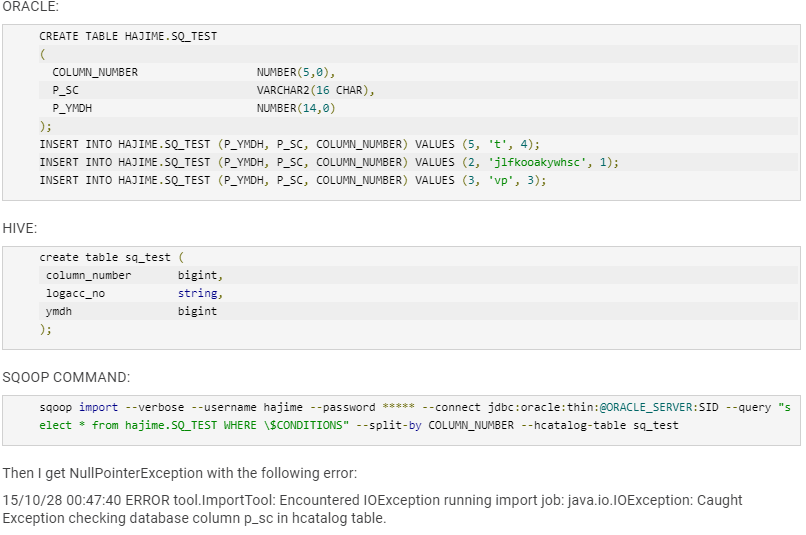

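Nothing useful there, but it suddenly occurred to me to compare the column types on both sides (the Oracle source table first, then the Hive target table):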

REPORTNO VARCHAR2

QUERYTIME VARCHAR2

REPORTCREATETIME VARCHAR2

NAME VARCHAR2

CERTTYPE VARCHAR2

CERTNO VARCHAR2

USERCODE VARCHAR2

QUERYREASON VARCHAR2

**HTMLREPORT BLOB**

CREATETIME VARCHAR2

reportno string

querytime string

reportcreatetime string

name string

certtype string

certno string

usercode string

queryreason string

createtime string

etl_in_dt string

Then it became obvious: the columns simply do not match. The target table has no HTMLREPORT column at all! After fixing that, the import succeeded.

Summary: a column mismatch between the query and the target table causes java.lang.NullPointerException.
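The comparison above can be done mechanically before running the import. The following is a minimal sketch, not part of Sqoop: it assumes the two column lists have been copied by hand into the variables source_cols and target_cols (both names are hypothetical), lowercases them because the Hive side is listed in lowercase, and reports every query column that is absent from the target table.

```shell
# Columns returned by the Sqoop --query (Oracle side), space separated.
source_cols="REPORTNO QUERYTIME REPORTCREATETIME NAME CERTTYPE CERTNO USERCODE QUERYREASON HTMLREPORT CREATETIME ETL_IN_DT"
# Columns of the Hive/HCatalog target table, space separated.
target_cols="reportno querytime reportcreatetime name certtype certno usercode queryreason createtime etl_in_dt"

# Lowercase the target list once so the comparison ignores case.
target_lower=$(printf '%s' "$target_cols" | tr '[:upper:]' '[:lower:]')

# Collect every source column that has no counterpart in the target table.
missing=""
for col in $(printf '%s' "$source_cols" | tr '[:upper:]' '[:lower:]'); do
  case " $target_lower " in
    *" $col "*) ;;                      # column exists in the target table
    *) missing="$missing $col" ;;       # column is missing from the target table
  esac
done
missing="${missing# }"                  # drop the leading space

echo "Columns missing from target table: ${missing:-none}"
```

With the column lists from this case, the only column reported is htmlreport, which is the one that triggered the NullPointerException.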

29. Spark SQL reports java.heap out of limit

If the following SQL:

select a.id,b.name from a join b on a.id = b.id and a.seri ='seq2007u123'

is changed to:

select a.id,b.name from a join b on a.id = b.id and a.seri ='seq2007u123' and b.conta ='tx'

or to:

select x.id,y.name from

(select a.id from a where a.seri ='seq2007u123') x join

(select b.id,b.name from b where b.conta ='tx' ) y

on x.id=y.id

this error may appear. Cause:

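Following the execution in the Spark UI showed that the job died during the table scan stage and never reached the map stage.

An expert colleague later worked out the cause: when planning select a.id from a where a.seri ='seq2007u123', Spark estimated the result set at under 10 MB and decided to broadcast it. The table actually has 2 billion rows, so the buffer ran out.

Fix: lower the broadcast size threshold to 1 MB.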
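In Spark SQL the size limit for automatic broadcast joins is the spark.sql.autoBroadcastJoinThreshold setting, which takes a byte count and defaults to 10 MB. Below is a minimal sketch of lowering it to 1 MB, assuming the query is launched through the spark-sql CLI; the wrapper function run_query and the SQL file passed to it are hypothetical names used only for illustration.

```shell
# 1 MB expressed in bytes; the threshold setting expects a byte count.
threshold_bytes=$((1024 * 1024))
broadcast_conf="spark.sql.autoBroadcastJoinThreshold=${threshold_bytes}"

# Hypothetical wrapper: run a SQL file with the lowered broadcast threshold.
# Assumes spark-sql is on the PATH of the submitting host.
run_query() {
  spark-sql --conf "${broadcast_conf}" -f "$1"
}
```

It would be called as run_query my_query.sql. Setting the threshold to -1 disables automatic broadcast joins entirely, which may be worth trying if the size estimate is still wrong at 1 MB.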

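30. Spark SQL loses partitions when reading or writing Hive partitioned tables

The cause is that Spark SQL parses the table structure with its own parser instead of using hcatalog; switching to hcatalog fixes it. Also note that partitions are case-sensitive in Spark SQL.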
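The note does not say which setting was changed. One Spark SQL option that controls this behaviour is spark.sql.hive.convertMetastoreParquet: when it is false, Spark SQL uses the Hive SerDe for Parquet tables instead of its built-in Parquet support. The sketch below assumes that this is the switch meant here and that queries are run through the spark-sql CLI; run_with_hive_serde is a hypothetical wrapper name.

```shell
# Assumption: disabling the built-in Parquet conversion is the fix referred to in the text.
serde_conf="spark.sql.hive.convertMetastoreParquet=false"

# Hypothetical wrapper: run a SQL file with the Hive SerDe handling Parquet tables.
# Assumes spark-sql is on the PATH of the submitting host.
run_with_hive_serde() {
  spark-sql --conf "${serde_conf}" -f "$1"
}
```

The trade-off is performance: the built-in Parquet reader is the default precisely because it is faster than going through the Hive SerDe.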

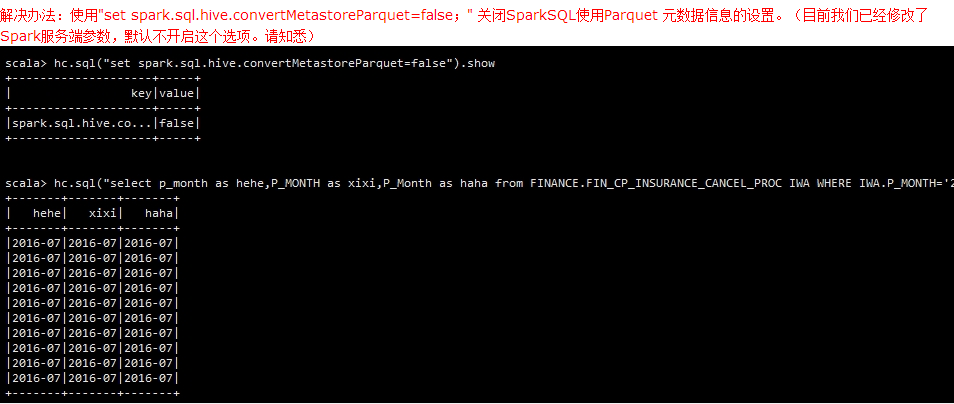

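Hi all,

Recently some colleagues reported that after switching to Parquet, the partition columns of some tables stopped working. The problem only occurs with SparkSQL and Hive On Spark. The cause: when Spark reads from or writes to a Hive metastore Parquet table, Spark SQL uses its own Parquet support instead of the Hive SerDe for better performance. In other words, there are two different modes, one using the schema in the Parquet files directly and one using the Hive schema, and they differ as follows:

1. Hive is case-insensitive, but Parquet is case-sensitive.

2. Hive treats all columns as nullable, whereas in Parquet nullability is a property of each column.

So by default, when the Spark engine reads or writes Hive tables, all partition operations are case-sensitive. A comparison test follows: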