I. Virtual machine environment setup

Prepare three virtual machines:

| Linux system | IP | hostname |
| --- | --- | --- |
| CentOS 7 | 192.168.107.2 | hadoop01 |
| CentOS 7 | 192.168.107.3 | hadoop02 |
| CentOS 7 | 192.168.107.4 | hadoop03 |

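(1) Download and install VMware

Link: https://pan.baidu.com/s/1a_9pW6-nesgl_GUINFfqsA (extraction code: 6b6b)

(2) Install a virtual machine in VMware

(3) Clone the virtual machine (any VMware cloning guide covers this)

(4) Configure the virtual machines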

4.1 Set a static IP address on each cloned VM
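On CentOS 7 a static address is normally set in the interface file under /etc/sysconfig/network-scripts/. The function below is a minimal sketch that prints such a file. The interface name (ens33), the /24 prefix, and the gateway and DNS values you pass in are assumptions about your VMware network, not values given in this guide, so check them against your virtual network settings first.

```shell
# Print an ifcfg file (CentOS 7 network-scripts format) for a static IPv4 address.
# Assumptions: interface ens33, /24 prefix; DNS defaults to the gateway if omitted.
ifcfg_static() {
  local ip="$1" gateway="$2" dns="${3:-$2}"
  if [ -z "$ip" ] || [ -z "$gateway" ]; then
    echo "usage: ifcfg_static IP GATEWAY [DNS]" >&2
    return 1
  fi
  cat <<EOF
TYPE=Ethernet
BOOTPROTO=static
NAME=ens33
DEVICE=ens33
ONBOOT=yes
IPADDR=$ip
PREFIX=24
GATEWAY=$gateway
DNS1=$dns
EOF
}
```

For example, on hadoop02: ifcfg_static 192.168.107.3 "$GATEWAY" | sudo tee /etc/sysconfig/network-scripts/ifcfg-ens33, then sudo systemctl restart network. GATEWAY stands for your NAT gateway address. This overwrites the whole file, so keep a copy of the original first.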

4.2 Change the hostname
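Set the name with hostnamectl on each VM, for example sudo hostnamectl set-hostname hadoop02 on the second one. Later steps (ssh hadoop02, hdfs://hadoop01:9000, the slaves file) also assume every node can resolve the other hostnames. A common way to do that in a small lab is to add the IP/hostname table to /etc/hosts on all three VMs. This sketch only prints those entries; cluster_hosts is a helper name made up for this guide.

```shell
# Print /etc/hosts entries for the three nodes in the VM table.
cluster_hosts() {
  local i
  for i in 2 3 4; do
    # 192.168.107.2 -> hadoop01, .3 -> hadoop02, .4 -> hadoop03
    printf '192.168.107.%d hadoop0%d\n' "$i" "$((i - 1))"
  done
}
```

Run cluster_hosts | sudo tee -a /etc/hosts once on each node; running it a second time would add duplicate lines.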

4.3 Disable the firewall
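CentOS 7 uses firewalld. For a lab cluster like this one, the simplest option is to stop it and keep it from starting at boot on all three VMs. The sketch wraps the two systemctl calls in a function; the DRY_RUN switch is an addition of this example for previewing the commands, not a systemctl feature.

```shell
# Run a command, or only print it when DRY_RUN=1 (a convenience of this sketch).
run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

# Stop firewalld now and disable it so it does not come back after a reboot.
disable_firewall() {
  run sudo systemctl stop firewalld
  run sudo systemctl disable firewalld
}
```

Afterwards, systemctl status firewalld should report the service as inactive. Outside a lab, opening only the ports Hadoop needs is the safer choice.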

4.4 Create the hadoop user
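Create the hadoop account on every VM and give it a password. The function below is a small idempotent sketch (ensure_user is a made-up name): it checks with id whether the user already exists before calling useradd and passwd, so re-running it on a cloned VM is harmless.

```shell
# Create a local user and set its password, unless the user already exists.
ensure_user() {
  local name="$1"
  if [ -z "$name" ]; then
    echo "usage: ensure_user NAME" >&2
    return 1
  fi
  if id -u "$name" >/dev/null 2>&1; then
    echo "user $name already exists"
    return 0
  fi
  # -m creates /home/NAME, which later steps (~/bin, ~/.ssh) rely on.
  sudo useradd -m "$name" && sudo passwd "$name"
}
```

Usage: ensure_user hadoop on each VM.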

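4.5 Give the hadoop user root privileges

Edit /etc/sudoers (preferably with sudo visudo, which checks the syntax before saving), find the line below, and add a line for hadoop under the root line: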

## Allow root to run any commands anywhere

root ALL=(ALL) ALL

hadoop ALL=(ALL) ALL

(5) On each of the three VMs, create the module and software directories under /opt

[hadoop@hadoop01 ~]$ sudo mkdir -p /opt/module

[hadoop@hadoop01 ~]$ sudo mkdir -p /opt/software

(6) Make hadoop the owner of the module and software directories (again on each VM)

[hadoop@hadoop01 opt]$ sudo chown hadoop:hadoop /opt/module/ /opt/software/

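(7) Set up passwordless SSH login

Generate a public/private key pair:

[hadoop@hadoop01 ~]$ ssh-keygen -t rsa

Press Enter three times to accept the defaults. This creates two files in ~/.ssh: id_rsa (the private key) and id_rsa.pub (the public key).

Copy the public key to every machine you want to log in to without a password, including hadoop01 itself:

[hadoop@hadoop01 ~]$ ssh-copy-id hadoop01

[hadoop@hadoop01 ~]$ ssh-copy-id hadoop02

[hadoop@hadoop01 ~]$ ssh-copy-id hadoop03

Repeat the key generation and copying on hadoop02 as well: start-yarn.sh is run there and logs in over SSH to every node listed in slaves.

Verify: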

[hadoop@hadoop01 ~]$ ssh hadoop02

Last login: Tue Oct 27 19:10:02 2020 from gateway

[hadoop@hadoop02 ~]$
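The ssh-copy-id calls can also be written as a loop over all three nodes, which is convenient when repeating them on another machine. A minimal sketch; copy_keys, NODES and the DRY_RUN preview switch are names made up for this example.

```shell
# Copy the current user's public key to every cluster node, the local one included.
NODES="hadoop01 hadoop02 hadoop03"

copy_keys() {
  local host
  for host in $NODES; do   # unquoted on purpose: split the node list on spaces
    if [ "${DRY_RUN:-0}" = "1" ]; then
      echo "ssh-copy-id $host"
    else
      ssh-copy-id "$host" || return 1
    fi
  done
}
```

If no key exists yet, [ -f ~/.ssh/id_rsa ] || ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa creates one without prompts; copy_keys then asks for each node's password once.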

(8) Write the cluster distribution script xsync

(a) Create a bin directory under /home/hadoop, then create the file xsync in it:

[hadoop@hadoop01 ~]$ mkdir bin

[hadoop@hadoop01 ~]$ cd bin/

[hadoop@hadoop01 bin]$ touch xsync

[hadoop@hadoop01 bin]$ vi xsync

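Write the following code in the file:

#!/bin/bash
# 1. Get the number of arguments; exit if none was given
pcount=$#
if ((pcount == 0)); then
  echo "no args"
  exit 1
fi
# 2. Get the file name
p1=$1
fname=$(basename "$p1")
echo "fname=$fname"
# 3. Resolve the parent directory to an absolute path
pdir=$(cd -P "$(dirname "$p1")" && pwd)
echo "pdir=$pdir"
# 4. Get the current user name
user=$(whoami)
# 5. Copy to hadoop02 and hadoop03 (run this script on hadoop01)
for ((host = 2; host <= 3; host++)); do
  echo "------------------- hadoop0$host --------------"
  rsync -rvl "$pdir/$fname" "$user@hadoop0$host:$pdir"
done

(b) Give xsync execute permission (755 is enough; 777 would also let other users modify the script)

[hadoop@hadoop01 bin]$ chmod 755 xsync

(c) Usage: xsync followed by the file or directory to distribute, for example:

[hadoop@hadoop01 bin]$ xsync /home/hadoop/bin

Note: if xsync still cannot be run from every directory after it is placed in /home/hadoop/bin, move it to /usr/local/bin.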
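The script above copies one path to a fixed pair of hosts. If you often distribute several paths at once, a variant along these lines may be handier. It is a sketch: the name xsync_many, the HOSTS variable and the DRY_RUN preview are assumptions of this example; it reuses the rsync -rvl call and the absolute-path logic of the original.

```shell
# Hypothetical multi-path variant of xsync.
HOSTS=${HOSTS:-"hadoop02 hadoop03"}

xsync_many() {
  local user p fname pdir host
  if [ "$#" -eq 0 ]; then
    echo "no args" >&2
    return 1
  fi
  user=$(id -un)
  for p in "$@"; do
    fname=$(basename "$p")
    # Resolve the parent directory to an absolute, symlink-free path.
    pdir=$(cd -P "$(dirname "$p")" && pwd) || return 1
    for host in $HOSTS; do   # unquoted on purpose: split the host list on spaces
      if [ "${DRY_RUN:-0}" = "1" ]; then
        echo "rsync -rvl $pdir/$fname $user@$host:$pdir"
      else
        rsync -rvl "$pdir/$fname" "$user@$host:$pdir" || return 1
      fi
    done
  done
}
```

DRY_RUN=1 xsync_many /opt/module/jdk1.8.0_144 /opt/module/hadoop-2.7.2 previews the rsync commands; drop DRY_RUN=1 to run them.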

II. Install the JDK

(1) Download the JDK

Link: https://pan.baidu.com/s/1wuR2FQe_RYO5mBpAWytGLw (extraction code: td3y)

(2) Upload the downloaded JDK archive to /opt/software/ with an FTP/SFTP tool

(3) Extract the JDK into /opt/module

[hadoop@hadoop01 software]$ tar -zvxf jdk-8u144-linux-x64.tar.gz -C /opt/module/

(4) Configure the JDK

4.1 Get the JDK path

[hadoop@hadoop01 software]$ cd /opt/module/jdk1.8.0_144/

[hadoop@hadoop01 jdk1.8.0_144]$ pwd

/opt/module/jdk1.8.0_144

4.2 Edit /etc/profile

[hadoop@hadoop01 jdk1.8.0_144]$ sudo vi /etc/profile

Add:

#JAVA_HOME

export JAVA_HOME=/opt/module/jdk1.8.0_144

export PATH=$PATH:$JAVA_HOME/bin
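The lines can also be appended without opening vi. This sketch appends the JAVA_HOME block only when the file has no export JAVA_HOME= line yet, so running it twice does not duplicate entries. The function name and the optional target-file argument (handy for trying it on a scratch file) are assumptions of this example; when the target is not writable by the current user it falls back to sudo tee -a.

```shell
# Append the JAVA_HOME exports to a profile file, once.
append_java_env() {
  local java_home="$1" target="${2:-/etc/profile}" block
  if [ -z "$java_home" ]; then
    echo "usage: append_java_env JAVA_HOME [FILE]" >&2
    return 1
  fi
  if grep -q '^export JAVA_HOME=' "$target" 2>/dev/null; then
    echo "JAVA_HOME already set in $target"
    return 0
  fi
  # Single quotes keep $PATH and $JAVA_HOME literal so they expand at login time.
  block=$(printf '%s\n' '#JAVA_HOME' \
    "export JAVA_HOME=$java_home" \
    'export PATH=$PATH:$JAVA_HOME/bin')
  if [ -w "$target" ]; then
    printf '%s\n' "$block" >> "$target"
  else
    printf '%s\n' "$block" | sudo tee -a "$target" >/dev/null
  fi
}
```

Usage: append_java_env /opt/module/jdk1.8.0_144, then source /etc/profile.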

4.3 Make the modified /etc/profile take effect

[hadoop@hadoop01 jdk1.8.0_144]$ source /etc/profile

4.4 Check that the JDK is installed correctly

[hadoop@hadoop01 jdk1.8.0_144]$ java -version

java version "1.8.0_144"

III. Install Hadoop

(1) Download Hadoop

Link: https://pan.baidu.com/s/1Bb3h5OwAymfBtF0jKGwhXg (extraction code: o061)

(2) Upload the downloaded Hadoop archive to /opt/software/ with an FTP/SFTP tool

(3) Extract the Hadoop archive into /opt/module

[hadoop@hadoop01 software]$ tar -zvxf hadoop-2.7.2.tar.gz -C /opt/module/

(4) Add Hadoop to the environment variables

4.1 Get the HADOOP_HOME path

[hadoop@hadoop01 ~]$ cd /opt/module/hadoop-2.7.2/

[hadoop@hadoop01 hadoop-2.7.2]$ pwd

/opt/module/hadoop-2.7.2

4.2 Edit /etc/profile (root-owned, so use sudo)

[hadoop@hadoop01 hadoop-2.7.2]$ sudo vi /etc/profile

Add:

# Hadoop

export HADOOP_HOME=/opt/module/hadoop-2.7.2

export PATH=$PATH:$HADOOP_HOME/bin

export PATH=$PATH:$HADOOP_HOME/sbin

4.3 Make the modified /etc/profile take effect

[hadoop@hadoop01 hadoop-2.7.2]$ source /etc/profile
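To confirm the variables took effect, hadoop version should now print the installed Hadoop version. A slightly broader check, sketched below with made-up function names, verifies that JAVA_HOME and HADOOP_HOME point at existing directories and that java and hadoop are found on PATH.

```shell
# Report a variable that is empty or does not name an existing directory.
check_dir_var() {
  if [ -z "$2" ] || [ ! -d "$2" ]; then
    echo "$1 is unset or not a directory"
    return 1
  fi
}

# Check JAVA_HOME/HADOOP_HOME and that java and hadoop are on PATH; nonzero status on any problem.
check_env() {
  local status=0 cmd
  check_dir_var JAVA_HOME "$JAVA_HOME" || status=1
  check_dir_var HADOOP_HOME "$HADOOP_HOME" || status=1
  for cmd in java hadoop; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "$cmd not found on PATH"
      status=1
    fi
  done
  return "$status"
}
```

Usage: check_env && echo OK after sourcing /etc/profile.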

(5) Hadoop directory layout (contents of /opt/module/hadoop-2.7.2)

drwxr-xr-x. 2 hadoop hadoop 194 May 22 2017 bin

drwxr-xr-x. 3 hadoop hadoop 20 May 22 2017 etc

drwxr-xr-x. 2 hadoop hadoop 106 May 22 2017 include

drwxr-xr-x. 3 hadoop hadoop 20 May 22 2017 lib

drwxr-xr-x. 2 hadoop hadoop 239 May 22 2017 libexec

-rw-r--r--. 1 hadoop hadoop 15429 May 22 2017 LICENSE.txt

-rw-r--r--. 1 hadoop hadoop 101 May 22 2017 NOTICE.txt

-rw-r--r--. 1 hadoop hadoop 1366 May 22 2017 README.txt

drwxr-xr-x. 2 hadoop hadoop 4096 May 22 2017 sbin

drwxr-xr-x. 4 hadoop hadoop 31 May 22 2017 share

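bin: scripts for operating Hadoop services (HDFS, YARN)

etc: Hadoop configuration directory, holding Hadoop's configuration files

lib: Hadoop native libraries (used for compressing and decompressing data)

sbin: scripts for starting and stopping Hadoop services

share: Hadoop dependency jars, documentation, and the official examples

IV. Configure the cluster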

| hadoop01 (192.168.107.2) | hadoop02 (192.168.107.3) | hadoop03 (192.168.107.4) |
| --- | --- | --- |
| NameNode | ResourceManager | SecondaryNameNode |
| DataNode | DataNode | DataNode |
| NodeManager | NodeManager | NodeManager |

[hadoop@hadoop01 ~]$ cd /opt/module/hadoop-2.7.2/etc/hadoop/

(1) Configure HDFS

Configure core-site.xml

[hadoop@hadoop01 hadoop]$ vi core-site.xml

Add the following configuration to the file:

<configuration>

<property> <!-- Address of the HDFS NameNode -->

<name>fs.defaultFS</name>

<value>hdfs://hadoop01:9000</value>

</property>

<property> <!-- Storage directory for files Hadoop generates at runtime -->

<name>hadoop.tmp.dir</name>

<value>/opt/module/hadoop-2.7.2/data/tmp</value>

</property>

</configuration>
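Before distributing the files it can help to double-check a value. Once Hadoop is on PATH, hdfs getconf -confKey fs.defaultFS prints the value Hadoop itself resolves for that key. For a quick look without running Hadoop, a crude text lookup like the one below also works on simple files such as those in this guide. It is a sketch, not an XML parser: it assumes each property has one name and one value element, does not skip comments, and treats dots in the key as regex wildcards; get_prop is a made-up helper name.

```shell
# Print the value of property KEY from a Hadoop *-site.xml file (crude text matching).
get_prop() {
  local file="$1" key="$2"
  # Join all lines so a name and a value on separate lines can be matched together.
  tr -d '\n' < "$file" |
    sed -n "s|.*<name>${key}</name>[[:space:]]*<value>\([^<]*\)</value>.*|\1|p"
}
```

For the core-site.xml above, get_prop core-site.xml fs.defaultFS prints hdfs://hadoop01:9000; an unknown key prints nothing.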

Configure hadoop-env.sh and set JAVA_HOME explicitly (daemons started over SSH do not read /etc/profile):

[hadoop@hadoop01 hadoop]$ vi hadoop-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_144

Configure hdfs-site.xml

[hadoop@hadoop01 hadoop]$ vi hdfs-site.xml

Add the following configuration to the file:

<configuration>

<property> <!-- Number of replicas per block -->

<name>dfs.replication</name>

<value>3</value>

</property>

<property> <!-- HTTP address of the SecondaryNameNode -->

<name>dfs.namenode.secondary.http-address</name>

<value>hadoop03:9001</value>

</property>

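<property> <!-- Turn off HDFS permission checking (convenient on a test cluster, not recommended in production) -->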

<name>dfs.permissions.enabled</name>

<value>false</value>

</property>

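<property> <!-- Max threads a DataNode uses for data transfer (current name of the deprecated dfs.datanode.max.xcievers) -->

<name>dfs.datanode.max.transfer.threads</name>

<value>4096</value>

<description>A DataNode has an upper limit on the number of files it can serve at the same time; set it to at least 4096</description>

</property>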

<property> <!-- Enable WebHDFS so HDFS can be browsed over HTTP at IP:port -->

<name>dfs.webhdfs.enabled</name>

<value>true</value>

</property>

</configuration>

(2) Configure MapReduce

Configure mapred-env.sh

[hadoop@hadoop01 hadoop]$ vi mapred-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_144

Configure mapred-site.xml (create it from the template first)

[hadoop@hadoop01 hadoop]$ cp mapred-site.xml.template mapred-site.xml

[hadoop@hadoop01 hadoop]$ vi mapred-site.xml

Add the following configuration to the file:

<configuration>

<property>

<name>mapreduce.framework.name</name>

<value>yarn</value>

</property>

<property> <!-- JobHistory server address (use the real hostname and port) -->

<name>mapreduce.jobhistory.address</name>

<value>hadoop02:10020</value>

</property>

<property>

<name>mapreduce.jobhistory.webapp.address</name>

<value>hadoop02:19888</value>

</property>

</configuration>

(3) Configure YARN

Configure yarn-env.sh

[hadoop@hadoop01 hadoop]$ vi yarn-env.sh

export JAVA_HOME=/opt/module/jdk1.8.0_144

Configure yarn-site.xml

[hadoop@hadoop01 hadoop]$ vi yarn-site.xml

Add the following configuration to the file:

<configuration>

<property> <!-- Auxiliary service through which reducers fetch map output (shuffle) -->

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

<property>

<name>yarn.log-aggregation-enable</name>

<value>true</value>

</property>

<property> <!-- How long aggregated logs are kept, in seconds (86400 = 1 day) -->

<name>yarn.log-aggregation.retain-seconds</name>

<value>86400</value>

</property>

<property> <!-- Hostname of the YARN ResourceManager -->

<name>yarn.resourcemanager.hostname</name>

<value>hadoop02</value>

</property>

<property> <!-- ResourceManager web UI address, viewable in a browser -->

<name>yarn.resourcemanager.webapp.address</name>

<value>hadoop02:8088</value>

</property>

<property> <!-- Address the ResourceManager exposes to ApplicationMasters -->

<name>yarn.resourcemanager.scheduler.address</name>

<value>hadoop02:8030</value>

</property>

<property> <!-- Address the ResourceManager exposes to NodeManagers -->

<name>yarn.resourcemanager.resource-tracker.address</name>

<value>hadoop02:8031</value>

</property>

<property> <!-- Address the ResourceManager exposes to clients -->

<name>yarn.resourcemanager.address</name>

<value>hadoop02:8032</value>

</property>

<property> <!-- Address the ResourceManager exposes to administrators -->

<name>yarn.resourcemanager.admin.address</name>

<value>hadoop02:8033</value>

</property>

</configuration>

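(4) Configure slaves (the hosts that run a DataNode and a NodeManager)

[hadoop@hadoop01 hadoop]$ vi slaves

Add the following lines to the file, one hostname per line:

hadoop01

hadoop02

hadoop03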

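(5) Copy the configured Hadoop directory, and the JDK installed in section II, to the other nodes

[hadoop@hadoop01 ~]$ xsync /opt/module/hadoop-2.7.2/

[hadoop@hadoop01 ~]$ xsync /opt/module/jdk1.8.0_144/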

(6) Copy /etc/profile to the other nodes

[hadoop@hadoop01 ~]$ su root

Password:

[root@hadoop01 hadoop]# /home/hadoop/bin/xsync /etc/profile

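V. Start and stop the cluster

(1) Before the very first start, format the NameNode. If you ever need to format again, first stop all NameNode and DataNode processes, then delete the data and logs directories on every node.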

[hadoop@hadoop01 hadoop-2.7.2]$ bin/hdfs namenode -format
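Formatting gives the NameNode a new cluster ID, so formatting again while DataNodes still hold the old data leaves them unable to register (they log "Incompatible clusterIDs"). A defensive wrapper, sketched below, refuses to format when the NameNode metadata directory already exists. It assumes dfs.namenode.name.dir is left at its default, ${hadoop.tmp.dir}/dfs/name, which with the core-site.xml above is /opt/module/hadoop-2.7.2/data/tmp/dfs/name; safe_format is a made-up name.

```shell
# Format the NameNode only if no metadata directory exists yet.
# Assumes dfs.namenode.name.dir is left at its default, ${hadoop.tmp.dir}/dfs/name.
safe_format() {
  local hadoop_home="${HADOOP_HOME:-/opt/module/hadoop-2.7.2}"
  local name_dir="$hadoop_home/data/tmp/dfs/name"
  if [ -d "$name_dir" ]; then
    echo "refusing to format: $name_dir already exists" >&2
    return 1
  fi
  "$hadoop_home/bin/hdfs" namenode -format
}
```

Run safe_format on hadoop01 in place of bin/hdfs namenode -format. To reformat on purpose, clean up on every node first as described in step (1).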

(2) Start HDFS (on the NameNode node, hadoop01)

[hadoop@hadoop01 hadoop-2.7.2]$ sbin/start-dfs.sh

[hadoop@hadoop01 hadoop-2.7.2]$ jps

3716 DataNode

3941 Jps

3574 NameNode

(3) Start YARN (on the ResourceManager node, hadoop02)

[hadoop@hadoop02 hadoop-2.7.2]$ sbin/start-yarn.sh

[hadoop@hadoop02 hadoop-2.7.2]$ jps

3876 Jps

3271 DataNode

3422 ResourceManager

3535 NodeManager

Startup order: HDFS first, then YARN

Shutdown order: YARN first, then HDFS

Start HDFS: start-dfs.sh

Start YARN: start-yarn.sh

Stop HDFS: stop-dfs.sh

Stop YARN: stop-yarn.sh
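The start and stop order above can be wrapped in one script run from hadoop01. This is a sketch for the layout in this guide: HDFS is started locally on hadoop01 and YARN over SSH on hadoop02, which relies on the passwordless SSH set up earlier. Absolute paths are used because a command run through ssh does not read /etc/profile. The status action runs jps on every node so you can compare the processes with the role table; cluster, NODES and DRY_RUN are names made up for this example.

```shell
# Start/stop the cluster in the required order; DRY_RUN=1 only prints the commands.
HADOOP_HOME=/opt/module/hadoop-2.7.2
JPS=/opt/module/jdk1.8.0_144/bin/jps
NODES="hadoop01 hadoop02 hadoop03"

run() {
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$*"
  else
    "$@"
  fi
}

cluster() {
  local host
  case "$1" in
    start)
      run "$HADOOP_HOME/sbin/start-dfs.sh"                # NameNode host (hadoop01)
      run ssh hadoop02 "$HADOOP_HOME/sbin/start-yarn.sh"  # ResourceManager host
      ;;
    stop)
      run ssh hadoop02 "$HADOOP_HOME/sbin/stop-yarn.sh"   # YARN goes down first
      run "$HADOOP_HOME/sbin/stop-dfs.sh"
      ;;
    status)
      for host in $NODES; do
        echo "=== $host ==="
        run ssh "$host" "$JPS"
      done
      ;;
    *)
      echo "usage: cluster start|stop|status" >&2
      return 1
      ;;
  esac
}
```

To use it as a command, save it (for example as ~/bin/cluster.sh) with a final line cluster "$@", then run cluster.sh start, cluster.sh status or cluster.sh stop on hadoop01.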

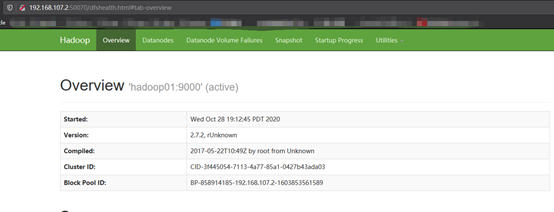

HDFS web UI: http://192.168.107.2:50070

SecondaryNameNode web UI: http://192.168.107.4:9001/status.html

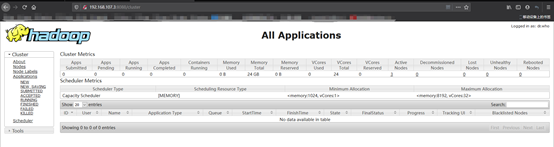

YARN web UI: http://192.168.107.3:8088