Based on: https://www.cnblogs.com/sruzzg/p/13060159.html

I. Create a Scrapy project named demo

scrapy startproject demo

This scaffolds a new project named demo.

II. Generate a Scrapy spider named qiushibaike inside the project

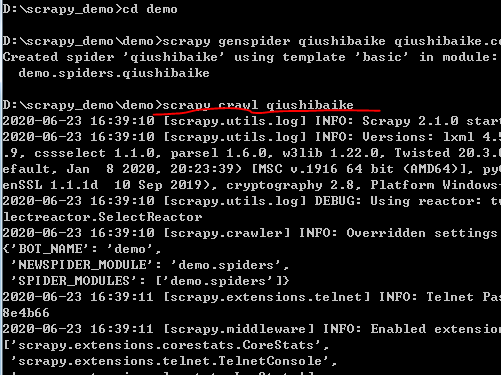

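1: Enter the project directory: cd demo

2: Generate the spider: scrapy genspider <spider name> <domain> (the spider name must differ from the project name)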

scrapy genspider qiushibaike qiushibaike.com

III. Edit the settings file (settings.py)

1: Set ROBOTSTXT_OBEY = False (so the crawler does not obey robots.txt)

2: Add default request headers

```python
DEFAULT_REQUEST_HEADERS = {
    'Accept': 'text/html,application/xhtml+xml,application/xml;q=0.9,*/*;q=0.8',
    'Accept-Language': 'en',
    'User-Agent': 'Mozilla/5.0 (Windows NT 6.1; WOW64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/78.0.3904.108 Safari/537.36',
}
```

IV. Edit qiushibaike.py in the spiders folder

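This file has three important members: the name and start_urls attributes and the parse() method.

In def parse(self, response):, response is the result of crawling a URL. The request travels from the spider to the Engine, then to the Scheduler, back to the Engine, on to the Downloader, back to the Engine again, and finally to the spider. Because parse() receives this downloaded result, most of the processing happens there. Change the file as follows: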

```python
import scrapy


class QiushibaikeSpider(scrapy.Spider):
    name = 'qiushibaike'
    allowed_domains = ['qiushibaike.com']
    start_urls = ['https://www.qiushibaike.com/8hr/page/1/']

    def parse(self, response):
        # Print the type of the response object the spider receives
        print('=' * 40)
        print(type(response))
        print('=' * 40)
```

V. Run the spider

1: Run it from the command line

scrapy crawl qiushibaike

2: Run it in PyCharm (easier to debug)

step1: Create a Python file named start.py in the project root.

step2: Put the command-line command into start.py as follows:

```python
from scrapy import cmdline

# Equivalent form: cmdline.execute(["scrapy", "crawl", "qiushibaike"])
cmdline.execute("scrapy crawl qiushibaike".split())
```

VI. Crawl a single page of the Qiushibaike site

```python
# -*- coding: utf-8 -*-
import scrapy
from scrapy.http.response.html import HtmlResponse
from scrapy.selector.unified import SelectorList


class QiushibaikeSpider(scrapy.Spider):
    name = 'qiushibaike'
    allowed_domains = ['qiushibaike.com']
    start_urls = ['https://www.qiushibaike.com/text/page/2/']

    def parse(self, response):
        print("=" * 40)
        # SelectorList: one Selector per joke (duanzi) block
        duanzidivs = response.xpath("//div[@class='col1 old-style-col1']/div")
        for duanzidiv in duanzidivs:
            # Selector; default="" avoids calling .strip() on None when there is no <h2>
            author = duanzidiv.xpath(".//h2/text()").get(default="").strip()
            print(author)
            # getall() returns a list of all matching text nodes
            content = duanzidiv.xpath(".//div[@class='content']//text()").getall()
            content = "".join(content).strip()
            print(content)
            print("=" * 40)
```

Note:

```python
from scrapy.http.response.html import HtmlResponse
from scrapy.selector.unified import SelectorList
```

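These two imports are there so that you can jump to the source code of HtmlResponse (the class of response) and SelectorList and browse their methods and attributes.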

VII. Save the data with pipelines

1. Modify QiushibaikeSpider

```python
# -*- coding: utf-8 -*-
import scrapy


class QiushibaikeSpider(scrapy.Spider):
    name = 'qiushibaike'
    allowed_domains = ['qiushibaike.com']
    start_urls = ['https://www.qiushibaike.com/text/page/2/']

    def parse(self, response):
        # SelectorList
        duanzidivs = response.xpath("//div[@class='col1 old-style-col1']/div")
        for duanzidiv in duanzidivs:
            # Selector
            author = duanzidiv.xpath(".//h2/text()").get(default="").strip()
            content = duanzidiv.xpath(".//div[@class='content']//text()").getall()  # returns a list
            content = "".join(content).strip()
            duanzi = {"author": author, "content": content}
            # Each yielded dict is handed to the engine, which passes it to the item pipelines
            yield duanzi
```
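Because parse() uses `yield`, it returns a generator: nothing runs until someone iterates over it, and each dict is handed over as soon as it is produced. The sketch below is a plain-Python illustration of that idea only; `fake_engine` is hypothetical and is not how Scrapy's engine is implemented (Scrapy also accepts yielded Requests and sends those to the scheduler).

```python
def parse(rows):
    """Stand-in for Spider.parse(): yields one dict per scraped block."""
    for author, content in rows:
        yield {"author": author, "content": content}


def fake_engine(results, pipeline):
    """Hypothetical consumer: pulls items one by one and hands each to a pipeline."""
    for item in results:
        pipeline(item)


collected = []
fake_engine(parse([("Alice", "joke 1"), ("Bob", "joke 2")]), collected.append)
print(collected)
```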

2、pipelines.py

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html
import json


class QiushibaikePipeline:
    def __init__(self):
        self.fp = open("duanzi.json", 'w', encoding='utf-8')

    def open_spider(self, spider):
        # Opening the file here instead of in __init__ works just as well
        # self.fp = open("duanzi.json", 'w', encoding='utf-8')
        print('Spider started...')

    def process_item(self, item, spider):
        # Serialize each item; ensure_ascii=False keeps Chinese characters readable
        item_json = json.dumps(item, ensure_ascii=False)
        # One JSON object per line
        self.fp.write(item_json + '\n')
        return item

    def close_spider(self, spider):
        self.fp.close()
        print('Spider finished...')
```
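The pipeline hooks above are ordinary methods, so a pipeline can be exercised without running a crawl. A minimal sketch, assuming the file is opened in open_spider() (as the commented-out line suggests) and adding a hypothetical `path` parameter so the test can write to a temporary directory; Scrapy itself would construct the class with no arguments, so the default keeps the original file name.

```python
import json
import os
import tempfile


class QiushibaikePipeline:
    # `path` is a hypothetical addition for this sketch; the default keeps "duanzi.json"
    def __init__(self, path="duanzi.json"):
        self.path = path
        self.fp = None

    def open_spider(self, spider):
        self.fp = open(self.path, "w", encoding="utf-8")

    def process_item(self, item, spider):
        self.fp.write(json.dumps(item, ensure_ascii=False) + "\n")
        return item

    def close_spider(self, spider):
        self.fp.close()


# Call the hooks in the order Scrapy does; spider is unused here, so None is enough
path = os.path.join(tempfile.mkdtemp(), "duanzi.json")
pipeline = QiushibaikePipeline(path)
pipeline.open_spider(None)
pipeline.process_item({"author": "小明", "content": "段子"}, None)
pipeline.process_item({"author": "Bob", "content": "joke"}, None)
pipeline.close_spider(None)

with open(path, encoding="utf-8") as f:
    raw = f.read()
rows = [json.loads(line) for line in raw.splitlines()]
print(rows)
```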

3. Enable the pipeline in settings.py

```python
ITEM_PIPELINES = {
    # 300 is the priority: the lower the value, the earlier the pipeline runs
    'demo.pipelines.QiushibaikePipeline': 300,
}
```
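Scrapy runs the enabled item pipelines in ascending order of these numbers (by convention in the 0 to 1000 range), passing each item from one pipeline to the next. A tiny illustration of that ordering rule with a second, hypothetical `DedupPipeline` entry:

```python
ITEM_PIPELINES = {
    "demo.pipelines.QiushibaikePipeline": 300,
    "demo.pipelines.DedupPipeline": 100,  # hypothetical second pipeline
}

# Lower value first: this is the order in which items flow through the pipelines
order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
print(order)
```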

VIII. Improve storage (use an Item instead of a dict; this is only a relative improvement, see IX for better options)

1. Edit items.py

```python
# -*- coding: utf-8 -*-

# Define here the models for your scraped items
#
# See documentation in:
# https://docs.scrapy.org/en/latest/topics/items.html

import scrapy


class QiushibaikeItem(scrapy.Item):
    # define the fields for your item here like:
    # name = scrapy.Field()
    author = scrapy.Field()
    content = scrapy.Field()
```

2. Use the QiushibaikeItem class in the qiushibaike spider

```python
# -*- coding: utf-8 -*-
import scrapy

from demo.items import QiushibaikeItem


class QiushibaikeSpider(scrapy.Spider):
    name = 'qiushibaike'
    allowed_domains = ['qiushibaike.com']
    start_urls = ['https://www.qiushibaike.com/text/page/2/']

    def parse(self, response):
        # SelectorList
        duanzidivs = response.xpath("//div[@class='col1 old-style-col1']/div")
        for duanzidiv in duanzidivs:
            # Selector
            author = duanzidiv.xpath(".//h2/text()").get(default="").strip()
            content = duanzidiv.xpath(".//div[@class='content']//text()").getall()  # returns a list
            content = "".join(content).strip()
            # An Item replaces the plain dict {"author": ..., "content": ...}
            item = QiushibaikeItem(author=author, content=content)
            yield item
```

3. Update pipelines.py

```python
# -*- coding: utf-8 -*-

# Define your item pipelines here
#
# Don't forget to add your pipeline to the ITEM_PIPELINES setting
# See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html
import json


class QiushibaikePipeline:
    def __init__(self):
        self.fp = open("duanzi.json", 'w', encoding='utf-8')

    def open_spider(self, spider):
        # Opening the file here instead of in __init__ works just as well
        # self.fp = open("duanzi.json", 'w', encoding='utf-8')
        print('Spider started...')

    def process_item(self, item, spider):
        # An Item is not a dict, so convert it before calling json.dumps
        item_json = json.dumps(dict(item), ensure_ascii=False)
        self.fp.write(item_json + '\n')
        return item

    def close_spider(self, spider):
        self.fp.close()
        print('Spider finished...')
```

IX. Ways to store the data

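1. Method 1: all items go into a single JSON list (fine when the amount of data is not very large, because most JSON parsers must load the whole array into memory at once)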

```python
from scrapy.exporters import JsonItemExporter


class QiushibaikePipeline:
    def __init__(self):
        # Exporters write bytes, so the file is opened in binary mode
        self.fp = open("duanzi2.json", 'wb')
        self.exporter = JsonItemExporter(self.fp, ensure_ascii=False, encoding='utf-8')
        # Writes the opening "[" of the JSON array
        self.exporter.start_exporting()

    def open_spider(self, spider):
        # Initialization code could go here instead of __init__
        print('Spider started...')

    def process_item(self, item, spider):
        # Append the item to the JSON array
        self.exporter.export_item(item)
        return item

    def close_spider(self, spider):
        # Writes the closing "]"; without it the file is not valid JSON
        self.exporter.finish_exporting()
        self.fp.close()
        print('Spider finished...')
```

2. Method 2: one JSON object per line, which copes with large amounts of data (recommended)

```python
from scrapy.exporters import JsonLinesItemExporter


class QiushibaikePipeline:
    def __init__(self):
        self.fp = open("duanzi3.json", 'wb')
        # Writes one JSON object per line as each item arrives
        self.exporter = JsonLinesItemExporter(self.fp, ensure_ascii=False, encoding='utf-8')

    def open_spider(self, spider):
        # Initialization code could go here instead of __init__
        print('Spider started...')

    def process_item(self, item, spider):
        self.exporter.export_item(item)
        return item

    def close_spider(self, spider):
        self.fp.close()
        print('Spider finished...')
```

X. Crawl multiple pages

1. In the qiushibaike spider, get the URL of the next page and crawl it again through a callback

```python
# -*- coding: utf-8 -*-
import scrapy

from demo.items import QiushibaikeItem


class QiushibaikeSpider(scrapy.Spider):
    name = 'qiushibaike'
    allowed_domains = ['qiushibaike.com']
    start_urls = ['https://www.qiushibaike.com/text/page/2/']
    base_domain = "https://www.qiushibaike.com"

    def parse(self, response):
        # SelectorList
        duanzidivs = response.xpath("//div[@class='col1 old-style-col1']/div")
        for duanzidiv in duanzidivs:
            # Selector
            author = duanzidiv.xpath(".//h2/text()").get(default="").strip()
            content = duanzidiv.xpath(".//div[@class='content']//text()").getall()  # returns a list
            content = "".join(content).strip()
            item = QiushibaikeItem(author=author, content=content)
            yield item
        # Get the next page's URL (a root-relative path) after all items on this page
        next_url = response.xpath("//ul[@class='pagination']/li[last()]/a/@href").get()
        if not next_url:
            # The last page has no next link, so stop here
            return
        next_url = self.base_domain + next_url
        # Request the next page and parse it with this same method
        yield scrapy.Request(next_url, callback=self.parse)
```
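Prepending `base_domain` only works when the `href` is a root-relative path such as `/text/page/3/`. The standard `urljoin` handles that case as well as absolute URLs and page-relative paths; Scrapy exposes the same idea as `response.urljoin(next_url)`, and `response.follow(next_url, callback=self.parse)` accepts relative URLs directly. A quick comparison using the start URL as the base (the hrefs are made-up examples):

```python
from urllib.parse import urljoin

page_url = "https://www.qiushibaike.com/text/page/2/"

# Root-relative href, like the one the pagination XPath returns
print(urljoin(page_url, "/text/page/3/"))
# Absolute href: kept unchanged, while string concatenation would break it
print(urljoin(page_url, "https://www.qiushibaike.com/text/page/3/"))
# Path relative to the current page
print(urljoin(page_url, "../3/"))
```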

2. Set a download delay (in seconds) in settings.py, because crawling too fast puts unnecessary load on the server

DOWNLOAD_DELAY = 3
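With the default `RANDOMIZE_DOWNLOAD_DELAY = True`, Scrapy does not wait exactly `DOWNLOAD_DELAY` seconds; it waits a random time between 0.5 and 1.5 times that value. The helper `delay_range` below is hypothetical and only does that arithmetic for `DOWNLOAD_DELAY = 3`:

```python
DOWNLOAD_DELAY = 3
RANDOMIZE_DOWNLOAD_DELAY = True  # Scrapy's default


def delay_range(delay, randomize):
    """Hypothetical helper: (min, max) wait in seconds implied by the two settings."""
    return (0.5 * delay, 1.5 * delay) if randomize else (delay, delay)


print(delay_range(DOWNLOAD_DELAY, RANDOMIZE_DOWNLOAD_DELAY))
```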

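Note: I wrote these notes on two computers whose projects had different names, so demo and qiushibaike may be mixed up in the code (I tried to fix them all but may have missed some). The package name in module paths such as `<project>.items` and `<project>.pipelines` must match the name passed to `scrapy startproject`.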