1. Install the JDK

JDK on cluster node 7-11

[root@hdss7-11 src]# pwd

/opt/src

[root@hdss7-11 src]# ll

total 191596

-rw-r--r-- 1 root root 1096843 May 18 20:01 flannel-v0.11.0-linux-amd64.tar.gz

-rw-r--r-- 1 root root 195094741 May 28 11:06 jdk-8u221-linux-x64.tar.gz

[root@hdss7-11 src]#

[root@hdss7-11 src]# mkdir /usr/java

[root@hdss7-11 src]# tar xf jdk-8u221-linux-x64.tar.gz -C /usr/java/

[root@hdss7-11 src]# ln -s /usr/java/jdk1.8.0_221/ /usr/java/jdk

# vi /etc/profile

#JAVA HOME

export JAVA_HOME=/usr/java/jdk

export PATH=$JAVA_HOME/bin:$PATH

export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar
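The same three exports are needed on every host that runs the JDK. As a convenience, the snippet can be generated rather than typed by hand on each machine. The sketch below is not part of the original steps: the function name `render_java_profile` is hypothetical, and writing the result to a file such as `/etc/profile.d/jdk.sh` is an assumption about how you might apply it.

```shell
#!/bin/sh
# Print the profile snippet for a given JDK home (default: /usr/java/jdk).
# render_java_profile is a hypothetical helper name, not part of any tool.
render_java_profile() {
  java_home="${1:-/usr/java/jdk}"
  printf 'export JAVA_HOME=%s\n' "$java_home"
  # Single quotes keep $JAVA_HOME, $PATH and $CLASSPATH unexpanded in the output.
  printf '%s\n' 'export PATH=$JAVA_HOME/bin:$PATH'
  printf '%s\n' 'export CLASSPATH=$CLASSPATH:$JAVA_HOME/lib:$JAVA_HOME/lib/tools.jar'
}

render_java_profile /usr/java/jdk
```

After the snippet is in place, `source /etc/profile` followed by `java -version` should report the 1.8.0_221 runtime.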

JDK on cluster node 7-12

[root@hdss7-12 src]# pwd

/opt/src

[root@hdss7-12 src]# ll

total 211168

-rw-r--r-- 1 root root 11288334 May 12 2019 etcdkeeper-v0.7.5-linux_x86_64.zip

-rw-r--r-- 1 root root 9850227 May 18 14:24 etcd-v3.1.20-linux-amd64.tar.gz

-rw-r--r-- 1 root root 195094741 May 28 11:14 jdk-8u221-linux-x64.tar.gz

[root@hdss7-12 src]#

[root@hdss7-12 src]# mkdir -p /usr/java

[root@hdss7-12 src]# tar xf jdk-8u221-linux-x64.tar.gz -C /usr/java/

[root@hdss7-12 src]# ln -s /usr/java/jdk1.8.0_221/ /usr/java/jdk

JDK on cluster node 7-21

[root@hdss7-21 src]# pwd

/opt/src

[root@hdss7-21 src]# ll

total 211168

-rw-r--r-- 1 root root 11288334 May 12 2019 etcdkeeper-v0.7.5-linux_x86_64.zip

-rw-r--r-- 1 root root 9850227 May 18 14:24 etcd-v3.1.20-linux-amd64.tar.gz

-rw-r--r-- 1 root root 195094741 May 28 11:14 jdk-8u221-linux-x64.tar.gz

[root@hdss7-21 src]#

[root@hdss7-21 src]# mkdir -p /usr/java

[root@hdss7-21 src]# tar xf jdk-8u221-linux-x64.tar.gz -C /usr/java/

[root@hdss7-21 src]# ln -s /usr/java/jdk1.8.0_221/ /usr/java/jdk

2. Deploy the ZooKeeper cluster (hosts 11, 12, and 21)

Node 11

[root@hdss7-11 src]# ll

total 228392

-rw-r--r-- 1 root root 1096843 May 18 20:01 flannel-v0.11.0-linux-amd64.tar.gz

-rw-r--r-- 1 root root 195094741 May 28 11:06 jdk-8u221-linux-x64.tar.gz

-rw-r--r-- 1 root root 37676320 May 28 11:29 zookeeper-3.4.14.tar.gz

tar xf zookeeper-3.4.14.tar.gz -C /opt/

ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper

mkdir -pv /data/zookeeper/data /data/zookeeper/logs

vi /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

server.1=zk1.od.com:2888:3888

server.2=zk2.od.com:2888:3888

server.3=zk3.od.com:2888:3888

[root@hdss7-11 opt]# vim /var/named/od.com.zone

zk1 A 192.168.40.11

zk2 A 192.168.40.12

zk3 A 192.168.40.21

[root@hdss7-11 opt]# echo 1 > /data/zookeeper/data/myid

[root@hdss7-11 opt]# /opt/zookeeper/bin/zkServer.sh start

[root@hdss7-11 opt]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower
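The number written to `myid` must equal the `N` of the `server.N=` line that names the local host in `zoo.cfg`; a mismatch keeps the node from joining the ensemble. The following sketch looks that number up instead of typing it by hand. The helper name `myid_for` is hypothetical, and the temporary file only stands in for `/opt/zookeeper/conf/zoo.cfg`.

```shell
#!/bin/sh
# Print the N of the "server.N=host:..." line in a zoo.cfg that matches a host.
# myid_for is a hypothetical helper; it only reads the file.
myid_for() {
  cfg="$1"; host="$2"
  awk -F'[=:]' -v h="$host" '
    $1 ~ /^server\.[0-9]+$/ && $2 == h {
      sub(/^server\./, "", $1)   # keep only the numeric id
      print $1
    }' "$cfg"
}

# Demo against a temporary copy of the server lines used in this cluster.
cfg="$(mktemp)"
cat > "$cfg" <<'EOF'
server.1=zk1.od.com:2888:3888
server.2=zk2.od.com:2888:3888
server.3=zk3.od.com:2888:3888
EOF
myid_for "$cfg" zk2.od.com   # prints 2
rm -f "$cfg"
```

On a real node the result could be redirected into `/data/zookeeper/data/myid`, for example `myid_for /opt/zookeeper/conf/zoo.cfg zk1.od.com > /data/zookeeper/data/myid` on host 11.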

Node 12

[root@hdss7-12 src]# ll

total 228392

-rw-r--r-- 1 root root 1096843 May 18 20:01 flannel-v0.11.0-linux-amd64.tar.gz

-rw-r--r-- 1 root root 195094741 May 28 11:06 jdk-8u221-linux-x64.tar.gz

-rw-r--r-- 1 root root 37676320 May 28 11:29 zookeeper-3.4.14.tar.gz

tar xf zookeeper-3.4.14.tar.gz -C /opt/

ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper

mkdir -pv /data/zookeeper/data /data/zookeeper/logs

[root@hdss7-12 opt]# vi /opt/zookeeper/conf/zoo.cfg

[root@hdss7-12 opt]# cat /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

server.1=zk1.od.com:2888:3888

server.2=zk2.od.com:2888:3888

server.3=zk3.od.com:2888:3888

[root@hdss7-12 opt]# echo 2 > /data/zookeeper/data/myid

[root@hdss7-12 opt]# /opt/zookeeper/bin/zkServer.sh start

[root@hdss7-12 opt]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: follower

Node 21

[root@hdss7-21 src]# ll

total 228392

-rw-r--r-- 1 root root 1096843 May 18 20:01 flannel-v0.11.0-linux-amd64.tar.gz

-rw-r--r-- 1 root root 195094741 May 28 11:06 jdk-8u221-linux-x64.tar.gz

-rw-r--r-- 1 root root 37676320 May 28 11:29 zookeeper-3.4.14.tar.gz

tar xf zookeeper-3.4.14.tar.gz -C /opt/

ln -s /opt/zookeeper-3.4.14/ /opt/zookeeper

mkdir -pv /data/zookeeper/data /data/zookeeper/logs

[root@hdss7-21 src]# cat /opt/zookeeper/conf/zoo.cfg

tickTime=2000

initLimit=10

syncLimit=5

dataDir=/data/zookeeper/data

dataLogDir=/data/zookeeper/logs

clientPort=2181

server.1=zk1.od.com:2888:3888

server.2=zk2.od.com:2888:3888

server.3=zk3.od.com:2888:3888

[root@hdss7-21 src]# echo 3 > /data/zookeeper/data/myid

[root@hdss7-21 src]# /opt/zookeeper/bin/zkServer.sh start

[root@hdss7-21 src]# /opt/zookeeper/bin/zkServer.sh status

ZooKeeper JMX enabled by default

Using config: /opt/zookeeper/bin/../conf/zoo.cfg

Mode: leader
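With all three nodes started, a healthy ensemble reports exactly one `leader` and the rest as `follower`, which is what the three status outputs above show. A small sketch of that check follows; the helper names `zk_mode` and `has_single_leader` are hypothetical and only parse text, they do not contact ZooKeeper.

```shell
#!/bin/sh
# Extract the role from `zkServer.sh status` output read on stdin.
zk_mode() {
  sed -n 's/^Mode: *//p'
}

# Succeed only if the given roles contain exactly one leader.
has_single_leader() {
  leaders=0
  for mode in "$@"; do
    if [ "$mode" = leader ]; then
      leaders=$((leaders + 1))
    fi
  done
  [ "$leaders" -eq 1 ]
}

# Roles as reported in the transcripts for nodes 11, 12 and 21.
if has_single_leader follower follower leader; then
  echo "one leader elected"
fi
```

On each node the role could be captured with `/opt/zookeeper/bin/zkServer.sh status 2>/dev/null | zk_mode` and the three results passed to `has_single_leader`.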

Deploy on host 200

# docker pull jenkins/jenkins:2.190.3

# docker tag 22b8b9a84dbe harbor.od.com/public/jenkins:v2.190.3

# docker push harbor.od.com/public/jenkins:v2.190.3

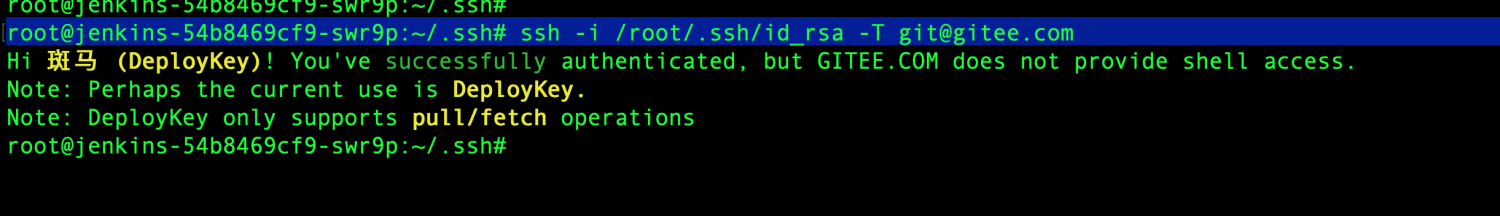

ssh-keygen -t rsa -b 2048 -C "xxx@xx.xxx" -N "" -f /root/.ssh/id_rsa

curl -fsSL get.docker.com -o get-docker.sh

# chmod u+x get-docker.sh

# mkdir -p /data/dockerfile/jenkins/

# cd /data/dockerfile/jenkins

# vi Dockerfile

FROM harbor.od.com/public/jenkins:v2.190.3

USER root

RUN /bin/cp /usr/share/zoneinfo/Asia/Shanghai /etc/localtime && \
    echo 'Asia/Shanghai' > /etc/timezone

ADD id_rsa /root/.ssh/id_rsa

ADD config.json /root/.docker/config.json

ADD get-docker.sh /get-docker.sh

RUN echo " StrictHostKeyChecking no" >> /etc/ssh/ssh_config && \
    /get-docker.sh

[root@hdss7-200 jenkins]# cp /root/.ssh/id_rsa .

[root@hdss7-200 jenkins]# cp /root/.docker/config.json .

[root@hdss7-200 jenkins]# curl -fsSL get.docker.com -o get-docker.sh

[root@hdss7-200 jenkins]# chmod u+x get-docker.sh

[root@hdss7-200 jenkins]# docker build . -t harbor.od.com/infra/jenkins:v2.190.3

[root@hdss7-200 jenkins]# docker push harbor.od.com/infra/jenkins:v2.190.3

Create the namespace on host 21

kubectl create ns infra

[root@hdss7-21 ~]# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n infra
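A `docker-registry` secret stores a Docker config document whose `auth` field for the registry is the base64 encoding of `username:password`. The sketch below reproduces only that one field so the stored value can be recognized when inspecting the secret; `registry_auth` is a hypothetical helper name.

```shell
#!/bin/sh
# Build the "auth" value kept for a registry entry in a Docker config:
# base64 of "username:password". registry_auth is a hypothetical helper.
registry_auth() {
  printf '%s:%s' "$1" "$2" | base64
}

registry_auth admin Harbor12345
```

Base64 is an encoding, not encryption, so anyone who can read the secret in the infra namespace can recover the Harbor password.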

3. Shared storage

Install NFS on all nodes

# Run the same command on every host: yum install nfs-utils -y

[root@hdss7-200 ~]# cat /etc/exports

/data/nfs-volume 192.168.40.0/24(rw,no_root_squash)

[root@hdss7-200 ~]# mkdir -p /data/nfs-volume/jenkins_home

[root@hdss7-200 ~]# systemctl start nfs

[root@hdss7-200 ~]# systemctl enable nfs

[root@hdss7-200 ~]# cd /data/k8s-yaml/

[root@hdss7-200 k8s-yaml]# ls

coredns dashboard heapster traefik

[root@hdss7-200 k8s-yaml]# mkdir jenkins

[root@hdss7-200 k8s-yaml]# cd jenkins/

4. Jenkins resource manifests

1.dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
  labels:
    name: jenkins
spec:
  replicas: 1
  selector:
    matchLabels:
      name: jenkins
  template:
    metadata:
      labels:
        app: jenkins
        name: jenkins
    spec:
      volumes:
      - name: data
        nfs:
          server: hdss7-200
          path: /data/nfs-volume/jenkins_home
      - name: docker
        hostPath:
          path: /run/docker.sock
          type: ''
      containers:
      - name: jenkins
        image: harbor.od.com/infra/jenkins:v2.190.3
        imagePullPolicy: IfNotPresent
        ports:
        - containerPort: 8080
          protocol: TCP
        env:
        - name: JAVA_OPTS
          value: -Xmx512m -Xms512m
        volumeMounts:
        - name: data
          mountPath: /var/jenkins_home
        - name: docker
          mountPath: /run/docker.sock
      imagePullSecrets:
      - name: harbor
      securityContext:
        runAsUser: 0
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

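New in this manifest:

namespace: infra places the Deployment in the infra namespace.

path: /run/docker.sock mounts the host's docker.sock into the container, so the Docker client inside the container talks directly to the host's Docker engine.

volumes: the nfs volume is served by the ops management host, 200.

imagePullPolicy sets the image pull policy. It takes one of three values: Always (always pull from the Harbor registry), Never (never pull remotely and use only the local image), and IfNotPresent (pull from the remote registry only if the image is not present locally).

imagePullSecrets is required when pulling from a private Harbor project; without it the pull fails.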
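The three imagePullPolicy values can be summarized as a small decision table. The function below is a simplified model for illustration only, not kubelet code; `pull_decision` and its `yes`/`no` argument are hypothetical names.

```shell
#!/bin/sh
# Simplified model of what happens for each imagePullPolicy value.
# Arguments: policy, and "yes"/"no" for whether the image is already on the node.
pull_decision() {
  policy="$1"; present="$2"
  case "$policy" in
    Always)
      echo pull ;;
    Never)
      if [ "$present" = yes ]; then echo use-local; else echo fail; fi ;;
    IfNotPresent)
      if [ "$present" = yes ]; then echo use-local; else echo pull; fi ;;
    *)
      echo "unknown policy: $policy" >&2
      return 1 ;;
  esac
}

# The Jenkins Deployment uses IfNotPresent; on a node without the image:
pull_decision IfNotPresent no
```

With IfNotPresent, a node that already holds `harbor.od.com/infra/jenkins:v2.190.3` starts the pod without contacting Harbor, so re-pushing a changed image under the same tag does not reach that node.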

2.svc.yaml

kind: Service
apiVersion: v1
metadata:
  name: jenkins
  namespace: infra
spec:
  ports:
  - protocol: TCP
    port: 80
    targetPort: 8080
  selector:
    app: jenkins

3.ingress.yaml

kind: Ingress
apiVersion: extensions/v1beta1
metadata:
  name: jenkins
  namespace: infra
spec:
  rules:
  - host: jenkins.od.com
    http:
      paths:
      - path: /
        backend:
          serviceName: jenkins
          servicePort: 80

4. Apply the resource manifests (on a worker node)

[root@hdss7-22 ~]# kubectl create -f http://k8s-yaml.od.com/jenkins/dp.yaml

deployment.extensions/jenkins created

[root@hdss7-22 ~]# kubectl create -f http://k8s-yaml.od.com/jenkins/svc.yaml

service/jenkins created

[root@hdss7-22 ~]# kubectl create -f http://k8s-yaml.od.com/jenkins/ingress.yaml

[root@hdss7-22 ~]# kubectl get pod -n infra

NAME READY STATUS RESTARTS AGE

jenkins-54b8469cf9-q59tk 1/1 Running 0 14h
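Jenkins takes a while to start on first boot, so it helps to check the READY and STATUS columns rather than only looking for the pod name. The sketch below parses the table printed by `kubectl get pod`; `all_pods_ready` is a hypothetical helper that reads the table on stdin and never calls kubectl itself.

```shell
#!/bin/sh
# Read `kubectl get pod` table output on stdin and succeed only if every
# listed pod is Running with all of its containers ready (READY is n/n).
all_pods_ready() {
  awk 'NR > 1 {
         seen = 1
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") bad = 1
       }
       END { exit (seen && !bad) ? 0 : 1 }'
}

# Demo with the output shown above.
printf '%s\n' \
  'NAME READY STATUS RESTARTS AGE' \
  'jenkins-54b8469cf9-q59tk 1/1 Running 0 14h' | all_pods_ready && echo "jenkins is ready"
```

Against the live cluster the same check would be `kubectl get pod -n infra | all_pods_ready`.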

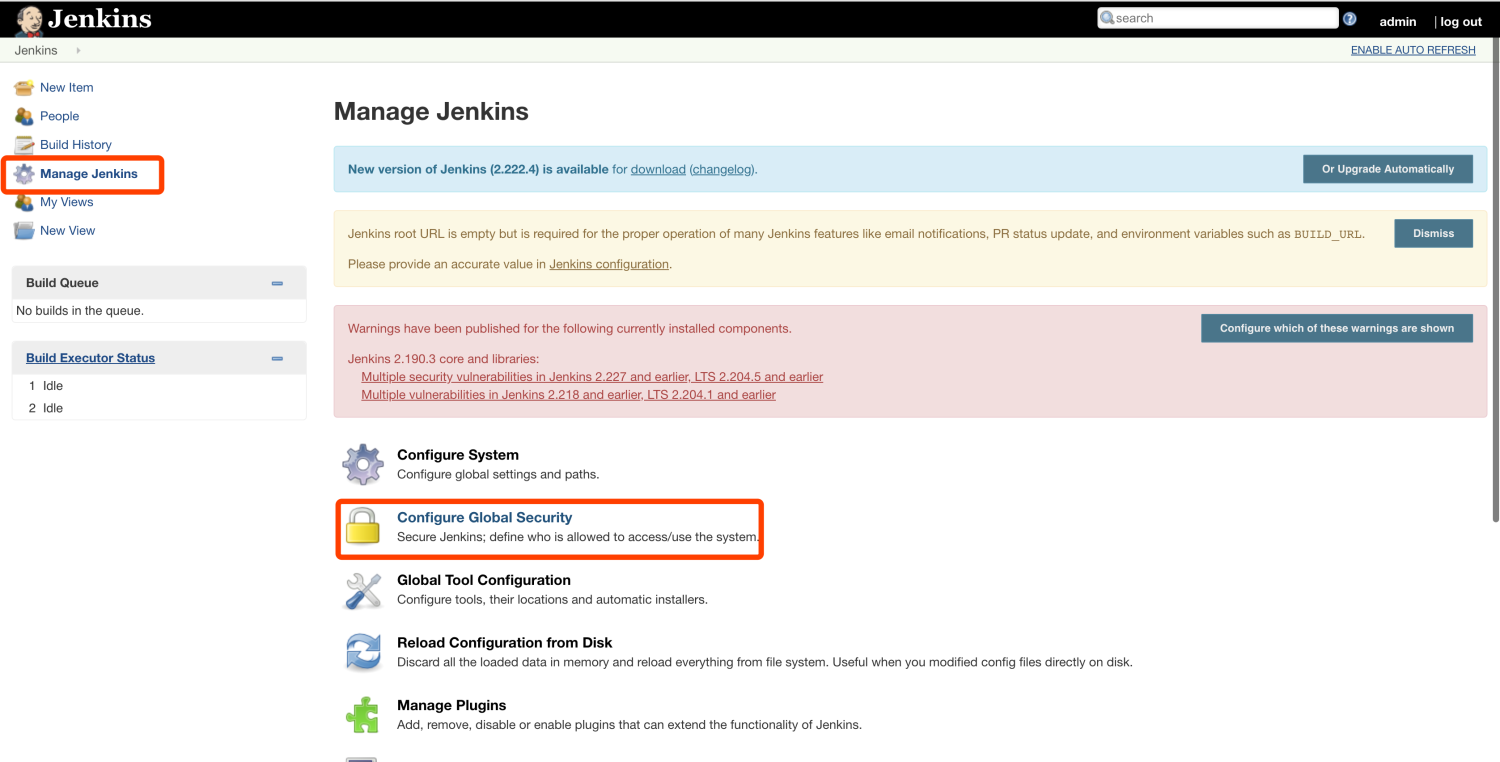

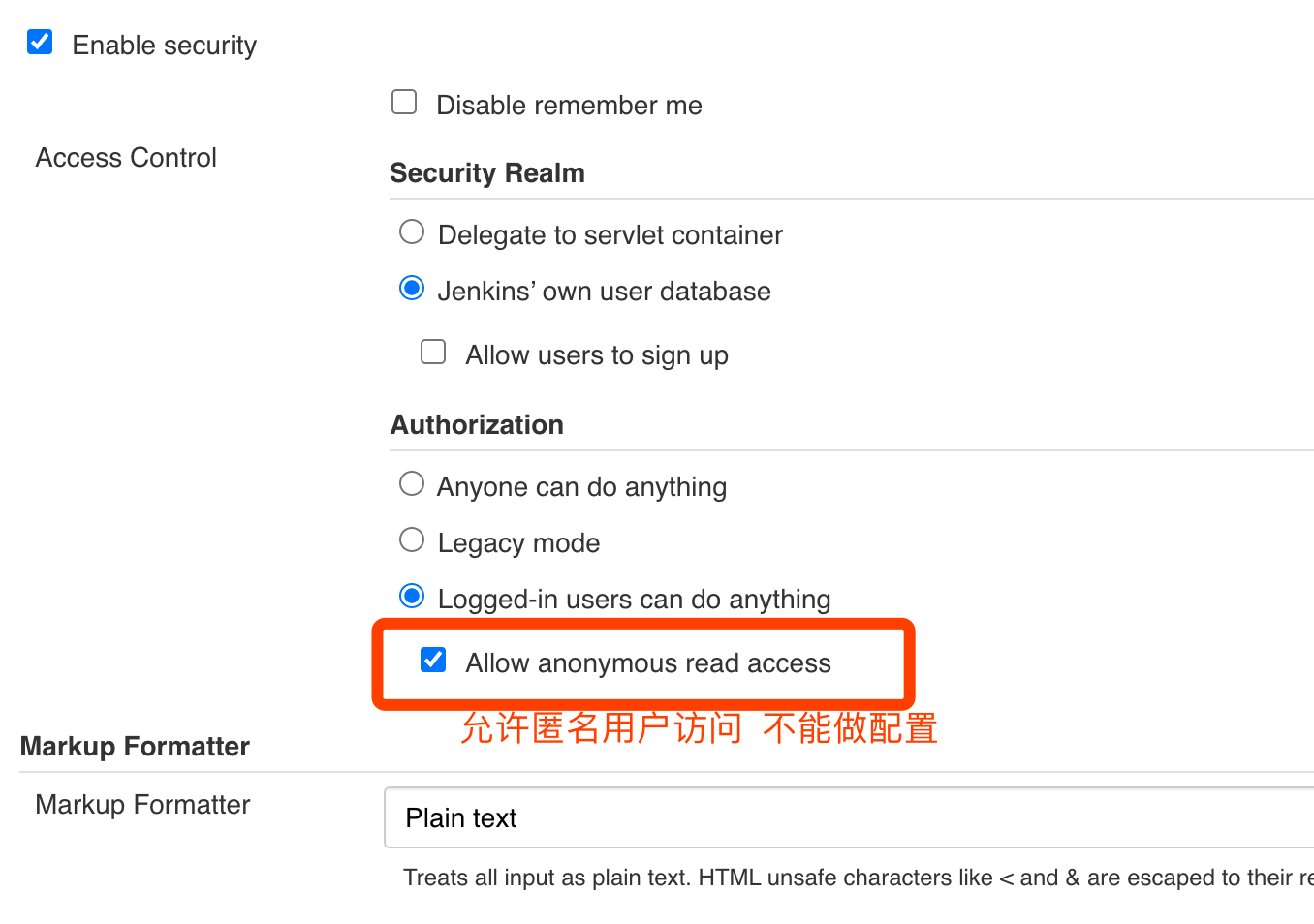

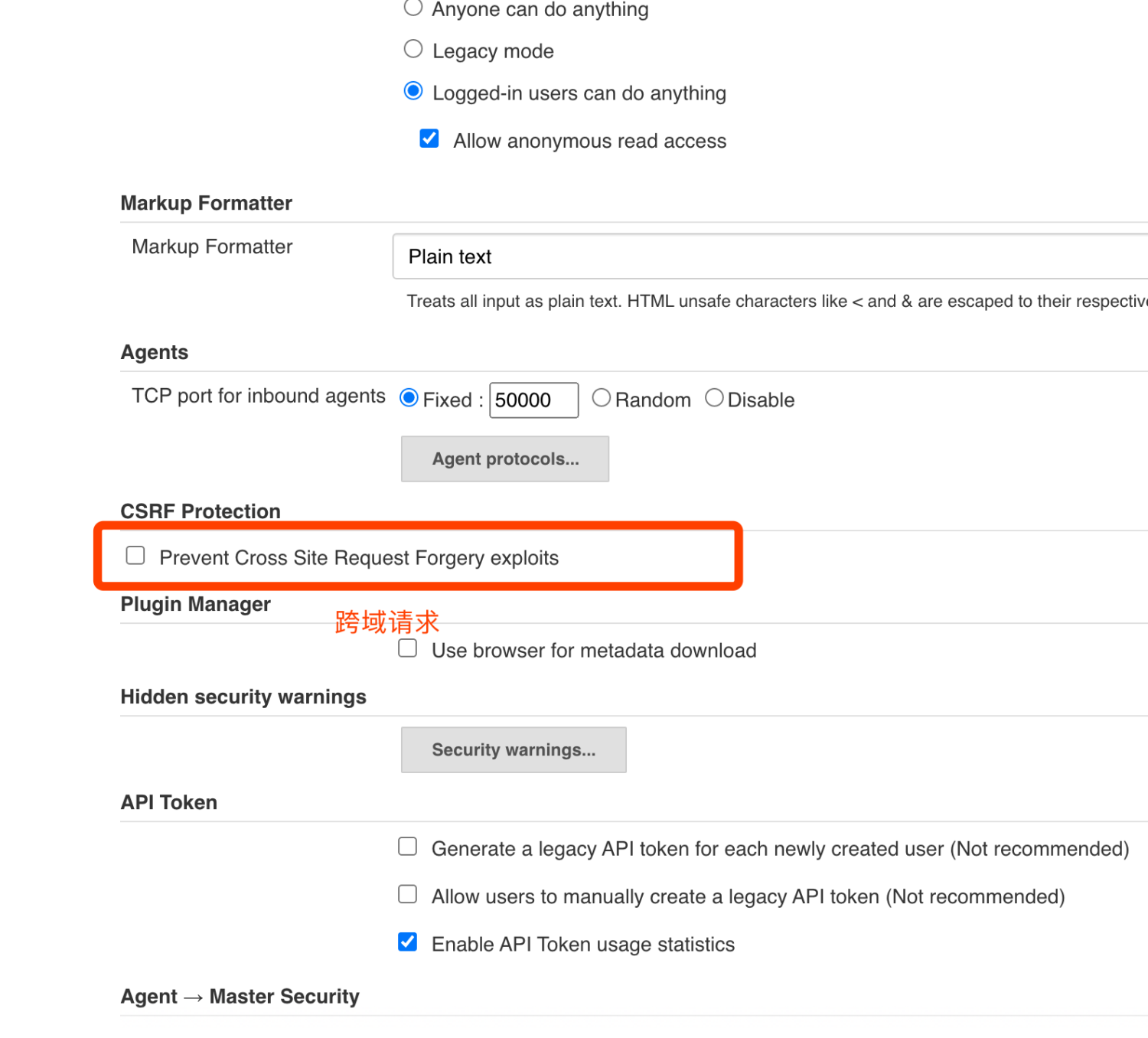

5. Basic Jenkins tuning

Search for the Blue Ocean plugin and install it.

6. Install Maven from the binary release (on host 200)

cd /opt/src

[root@hdss7-200 harbor]# wget https://archive.apache.org/dist/maven/maven-3/3.6.1/binaries/apache-maven-3.6.1-bin.tar.gz

# mkdir /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

# tar -zxf apache-maven-3.6.1-bin.tar.gz -C /data/nfs-volume/jenkins_home/maven-3.6.1-8u232/

# cd /data/nfs-volume/jenkins_home/maven-3.6.1-8u232

# mv apache-maven-3.6.1/* ./

# rm -rf apache-maven-3.6.1/

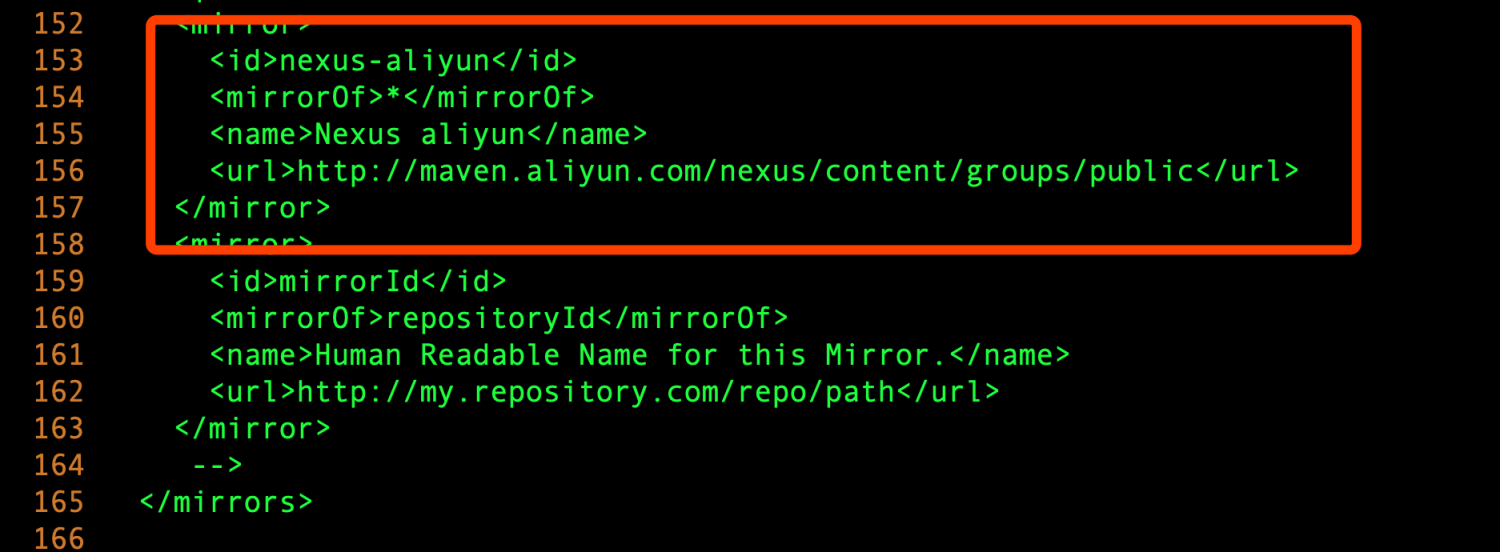

# vi /data/nfs-volume/jenkins_home/maven-3.6.1-8u232/conf/settings.xml

Build the base image for the Dubbo microservices: a Java runtime (JRE) base image

[root@hdss7-200 ~]# docker pull stanleyws/jre8:8u112

[root@hdss7-200 ~]# docker tag fa3a085d6ef1 harbor.od.com/public/jre:8u112

[root@hdss7-200 ~]# docker push harbor.od.com/public/jre:8u112

[root@hdss7-200 ~]# cd /data/dockerfile/

[root@hdss7-200 dockerfile]# ls

jenkins

[root@hdss7-200 dockerfile]# mkdir jre8

[root@hdss7-200 dockerfile]# cd jre8

[root@hdss7-200 jre8]# vi Dockerfile
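These notes do not show the contents of this Dockerfile. The sketch below writes one plausible version, inferred from the rest of the document: the `/opt/prom` paths come from entrypoint.sh, and `/opt/project_dir` is where the Jenkins pipeline adds the jar. Treat the whole layout as an assumption rather than the author's file; `write_jre8_dockerfile` is a hypothetical helper name.

```shell
#!/bin/sh
# Write a candidate Dockerfile for the jre8 base image into a directory.
# The layout is an assumption inferred from entrypoint.sh and the pipeline.
write_jre8_dockerfile() {
  dir="${1:-.}"
  cat > "$dir/Dockerfile" <<'EOF'
FROM harbor.od.com/public/jre:8u112
ADD config.yml /opt/prom/config.yml
ADD jmx_javaagent-0.3.1.jar /opt/prom/
WORKDIR /opt/project_dir
ADD entrypoint.sh /entrypoint.sh
CMD ["/entrypoint.sh"]
EOF
}
```

Calling `write_jre8_dockerfile /data/dockerfile/jre8` would place the file next to config.yml, the agent jar, and entrypoint.sh. Setting WORKDIR to /opt/project_dir lets a relative JAR_BALL such as dubbo-server.jar resolve without a full path.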

Download jmx_javaagent, which exposes JVM metrics for monitoring:

[root@hdss7-200 jre8]# wget https://repo1.maven.org/maven2/io/prometheus/jmx/jmx_prometheus_javaagent/0.3.1/jmx_prometheus_javaagent-0.3.1.jar -O jmx_javaagent-0.3.1.jar

[root@hdss7-200 jre8]# cat config.yml

---
rules:
  - pattern: '.*'

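Create entrypoint.sh. Running the jar with exec makes the java process replace the shell and take over its PID (1), which keeps the Docker container from exiting for lack of a foreground process. Do not append & to the command.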

[root@hdss7-200 jre8]# cat entrypoint.sh

#!/bin/sh

M_OPTS="-Duser.timezone=Asia/Shanghai -javaagent:/opt/prom/jmx_javaagent-0.3.1.jar=$(hostname -i):${M_PORT:-"12346"}:/opt/prom/config.yml"

C_OPTS=${C_OPTS}

JAR_BALL=${JAR_BALL}

exec java -jar ${M_OPTS} ${C_OPTS} ${JAR_BALL}
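The effect of `exec` can be observed without Docker. In this small demonstration a shell prints its own PID, then uses `exec` to become a second shell that prints its PID as well; because `exec` replaces the process instead of forking a child, both numbers are the same. The function name `pids_across_exec` is hypothetical.

```shell
#!/bin/sh
# Print the PID of a shell, then exec a second shell and print its PID.
# exec replaces the process image instead of forking, so both lines match.
pids_across_exec() {
  sh -c 'echo $$; exec sh -c "echo \$\$"'
}

pids_across_exec
```

In the container this means java itself runs as PID 1, so the SIGTERM sent by `docker stop` or by Kubernetes on pod deletion reaches the JVM directly instead of a wrapper shell.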

[root@hdss7-200 jre8]# chmod +x entrypoint.sh

# docker build . -t harbor.od.com/base/jre8:8u112

[root@hdss7-200 jre8]# docker push harbor.od.com/base/jre8:8u112

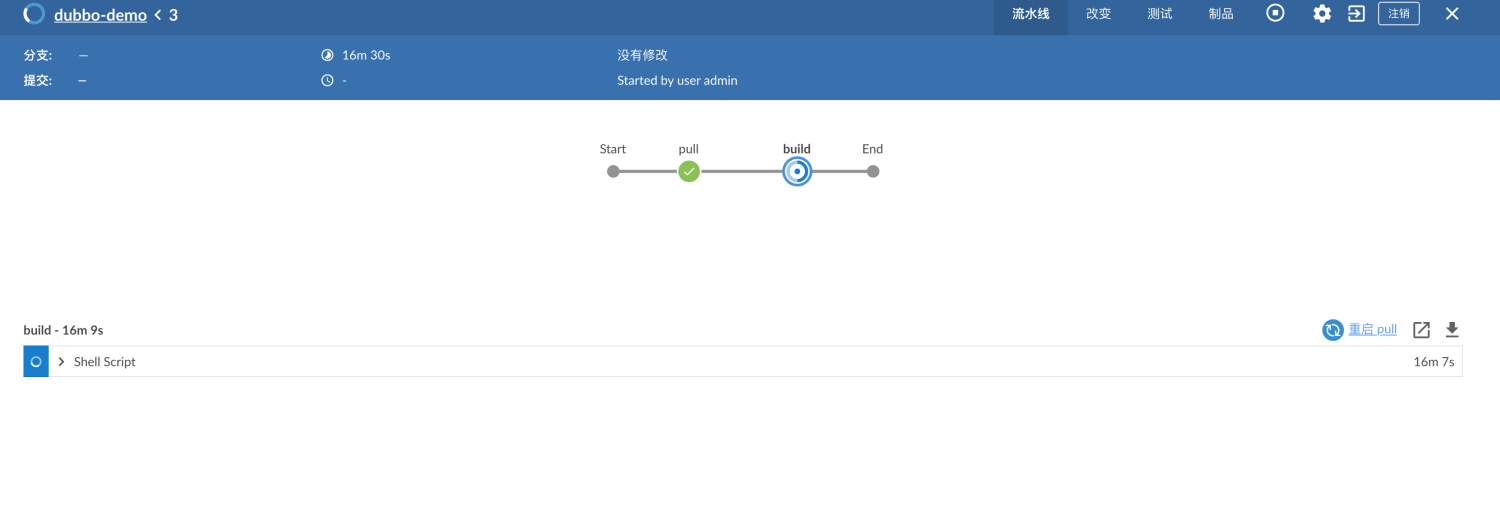

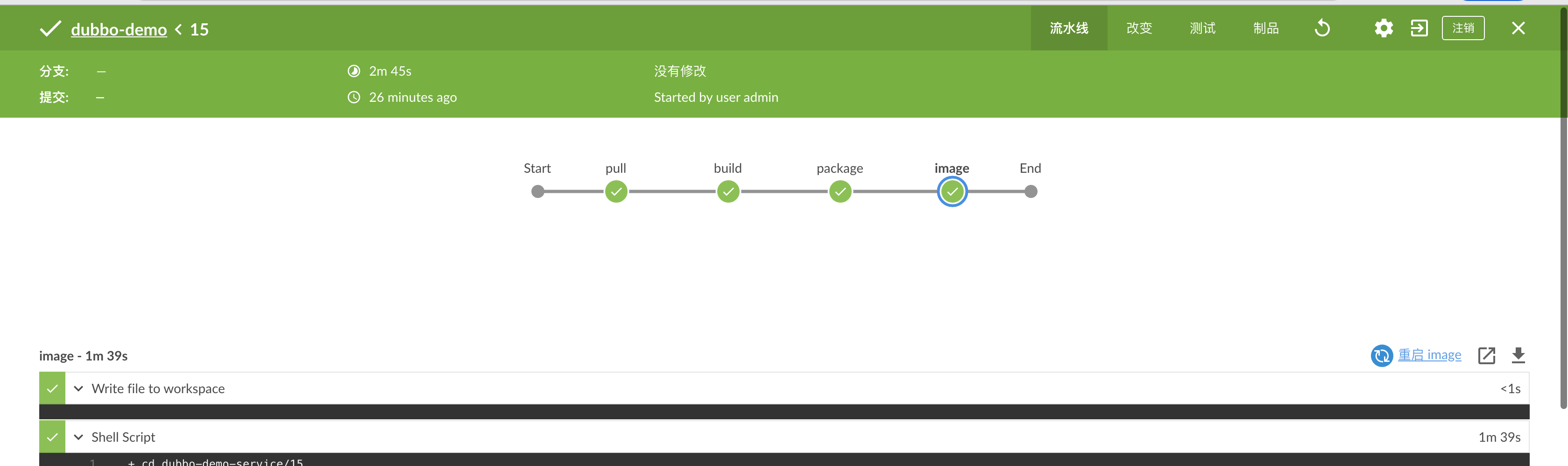

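7. Jenkins parameterized build

All parameters are string parameters except base_image and maven, which are choice parameters.

Once they are added, the result looks as shown in the figure. The pipeline script is: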

pipeline {
  agent any
  stages {
    stage('pull') { //get project code from repo
      steps {
        sh "git clone ${params.git_repo} ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.app_name}/${env.BUILD_NUMBER} && git checkout ${params.git_ver}"
      }
    }
    stage('build') { //exec mvn cmd
      steps {
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && /var/jenkins_home/maven-${params.maven}/bin/${params.mvn_cmd}"
      }
    }
    stage('package') { //move jar file into project_dir
      steps {
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && cd ${params.target_dir} && mkdir project_dir && mv *.jar ./project_dir"
      }
    }
    stage('image') { //build image and push to registry
      steps {
        writeFile file: "${params.app_name}/${env.BUILD_NUMBER}/Dockerfile", text: """FROM harbor.od.com/${params.base_image}
ADD ${params.target_dir}/project_dir /opt/project_dir"""
        sh "cd ${params.app_name}/${env.BUILD_NUMBER} && docker build -t harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag} . && docker push harbor.od.com/${params.image_name}:${params.git_ver}_${params.add_tag}"
      }
    }
  }
}
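The image stage names the result `harbor.od.com/<image_name>:<git_ver>_<add_tag>`, so the build parameters fully determine the tag that later goes into the Deployment manifest. The sketch below composes that reference outside Jenkins; `image_ref` is a hypothetical helper, and the sample values are taken from the image used in the manifest in the next section.

```shell
#!/bin/sh
# Compose the image reference the way the 'image' stage does:
# harbor.od.com/<image_name>:<git_ver>_<add_tag>
image_ref() {
  image_name="$1"; git_ver="$2"; add_tag="$3"
  printf 'harbor.od.com/%s:%s_%s\n' "$image_name" "$git_ver" "$add_tag"
}

image_ref app/dubbo-demo-service master 20200602_1730
# prints harbor.od.com/app/dubbo-demo-service:master_20200602_1730
```

Using a timestamp for add_tag gives every build a distinct tag, which matters because the Deployments here use imagePullPolicy: IfNotPresent.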

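The build takes a while, so this is a good moment for a break.

8. Resource manifests

With the image built, the next step is to deliver the dubbo-demo-service service to Kubernetes:

Prepare the Kubernetes resource manifest on 7-200. The image tag (highlighted in red in the original) must be changed to match the tag of the image you built.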

[root@hdss7-200 nginx]# mkdir /data/k8s-yaml/dubbo-server/

[root@hdss7-200 nginx]# cd /data/k8s-yaml/dubbo-server/

[root@hdss7-200 dubbo-server]# cat dp.yaml

kind: Deployment
apiVersion: extensions/v1beta1
metadata:
  name: dubbo-demo-service
  namespace: app
  labels:
    name: dubbo-demo-service
spec:
  replicas: 1
  selector:
    matchLabels:
      name: dubbo-demo-service
  template:
    metadata:
      labels:
        app: dubbo-demo-service
        name: dubbo-demo-service
    spec:
      containers:
      - name: dubbo-demo-service
        image: harbor.od.com/app/dubbo-demo-service:master_20200602_1730
        ports:
        - containerPort: 20880
          protocol: TCP
        env:
        - name: JAR_BALL
          value: dubbo-server.jar
        imagePullPolicy: IfNotPresent
      imagePullSecrets:
      - name: harbor
      restartPolicy: Always
      terminationGracePeriodSeconds: 30
      securityContext:
        runAsUser: 0
      schedulerName: default-scheduler
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
      maxSurge: 1
  revisionHistoryLimit: 7
  progressDeadlineSeconds: 600

The Harbor project we use for these images, app, is a private project, so a secret must be created:

# kubectl create ns app

# kubectl create secret docker-registry harbor --docker-server=harbor.od.com --docker-username=admin --docker-password=Harbor12345 -n app

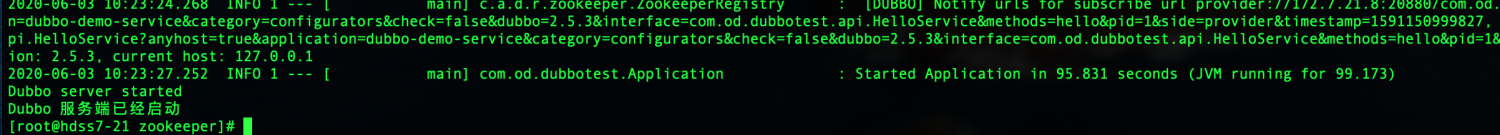

# kubectl apply -f http://k8s-yaml.od.com/dubbo-server/dp.yaml

Check that it started successfully:

# kubectl logs dubbo-demo-service-77b687c6f8-v556v -n app

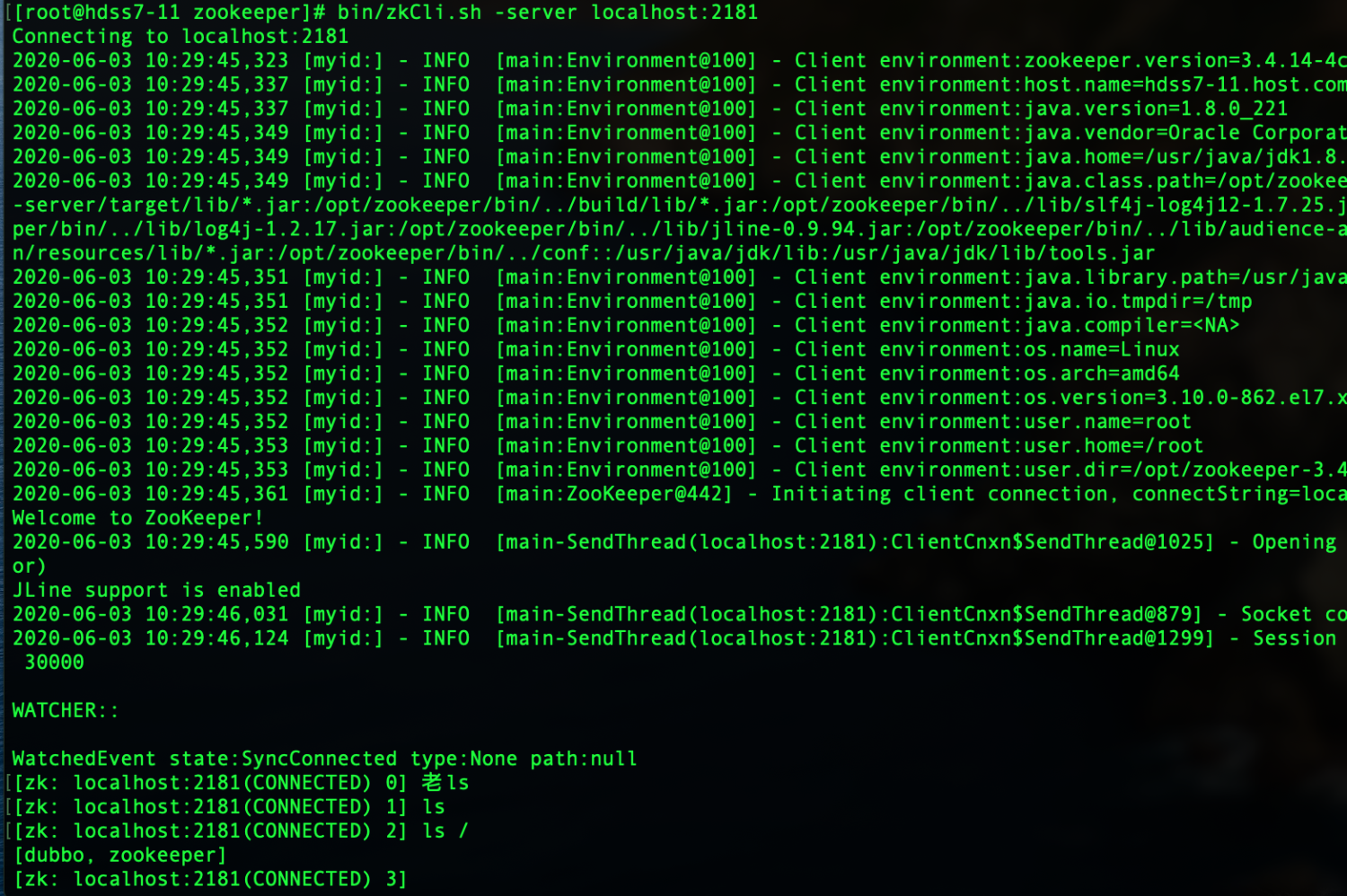

Check whether the service has registered in ZooKeeper
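One way to run this check from the command line is to list the children of `/dubbo` with `zkCli.sh`. This sketch assumes the service uses Dubbo's default registry root, `/dubbo`; `dubbo_registered` is a hypothetical helper, and the interface name to search for is not given in these notes, so it is passed in as an argument.

```shell
#!/bin/sh
# Succeed if a service name appears under /dubbo in ZooKeeper.
# Arguments: path to zkCli.sh, server address, service (interface) name.
# Assumes Dubbo's default registry root node, /dubbo.
dubbo_registered() {
  zkcli="$1"; server="$2"; service="$3"
  "$zkcli" -server "$server" ls /dubbo 2>/dev/null | grep -qF -- "$service"
}
```

On any of the three ZooKeeper hosts it could be called as `dubbo_registered /opt/zookeeper/bin/zkCli.sh localhost:2181 <interface name> && echo registered`, substituting the interface your service exports.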