参考

https://www.cnblogs.com/yangyi402/p/11557878.html

https://github.com/github/gh-ost

https://www.cnblogs.com/zhoujinyi/p/9187421.html

主从同步过程省略。

启动主从同步

show processlist; show master status; 记录Position,执行 change master to master_host='192.168.10.60', master_port=3306, master_user='slave', master_password='123456', master_log_file='mysql-bin.000002', master_log_pos=1407; 启动主从同步 start slave;

开始在线DDL

gh-ost因为不需要使用触发器,gh-ost 把修改表定义的负载和正常的业务负载解耦开了。它不需要考虑被修改的表上的并发操作和竞争等,这些在二进制日志中都被序列化了,gh-ost 只操作临时表,完全与原始表不相干。事实上,gh-ost 也把行拷贝的写操作与二进制日志的写操作序列化了,这样,对主库来说只是有一条连接在顺序的向临时表中不断写入数据。

添加1个字段,奇怪的可以不填数据库root用户的密码,无需 --password="yourpassword"

./gh-ost --user="root" --host=192.168.10.60 --database="test" --table="tab" --alter="ADD COLUMN oneColumn varchar(10)" --allow-on-master --execute

即使在主次同步时也是可以执行的。在执行ghost复制的时候暂停了主从同步。

[root@hadoop001 home]# ./gh-ost --user="root" --host=192.168.10.60 --database="test" --switch-to-rbr --table="tab" --alter="ADD COLUMN oneColumn varchar(10)" --allow-on-master --execute [2020/07/21 23:01:35] [info] binlogsyncer.go:133 create BinlogSyncer with config {99999 mysql 192.168.10.60 3306 root false false <nil> false UTC true 0 0s 0s 0 false} [2020/07/21 23:01:35] [info] binlogsyncer.go:354 begin to sync binlog from position (mysql-bin.000003, 28925) [2020/07/21 23:01:35] [info] binlogsyncer.go:203 register slave for master server 192.168.10.60:3306 [2020/07/21 23:01:35] [info] binlogsyncer.go:723 rotate to (mysql-bin.000003, 28925) # Migrating `test`.`tab`; Ghost table is `test`.`_tab_gho` # Migrating hadoop001:3306; inspecting hadoop001:3306; executing on hadoop001 # Migration started at Tue Jul 21 23:01:35 +0800 2020 # chunk-size: 1000; max-lag-millis: 1500ms; dml-batch-size: 10; max-load: ; critical-load: ; nice-ratio: 0.000000 # throttle-additional-flag-file: /tmp/gh-ost.throttle # Serving on unix socket: /tmp/gh-ost.test.tab.sock Copy: 0/22 0.0%; Applied: 0; Backlog: 0/1000; Time: 0s(total), 0s(copy); streamer: mysql-bin.000003:30509; Lag: 0.01s, State: migrating; ETA: N/A Copy: 0/22 0.0%; Applied: 0; Backlog: 0/1000; Time: 1s(total), 1s(copy); streamer: mysql-bin.000003:34164; Lag: 0.01s, State: migrating; ETA: N/A Copy: 22/22 100.0%; Applied: 0; Backlog: 0/1000; Time: 1s(total), 1s(copy); streamer: mysql-bin.000003:35132; Lag: 0.01s, State: migrating; ETA: due Copy: 22/22 100.0%; Applied: 0; Backlog: 1/1000; Time: 2s(total), 1s(copy); streamer: mysql-bin.000003:39597; Lag: 0.01s, State: migrating; ETA: due # Migrating `test`.`tab`; Ghost table is `test`.`_tab_gho` # Migrating hadoop001:3306; inspecting hadoop001:3306; executing on hadoop001 # Migration started at Tue Jul 21 23:01:35 +0800 2020 # chunk-size: 1000; max-lag-millis: 1500ms; dml-batch-size: 10; max-load: ; critical-load: ; nice-ratio: 0.000000 # throttle-additional-flag-file: /tmp/gh-ost.throttle # Serving on unix socket: /tmp/gh-ost.test.tab.sock Copy: 22/22 100.0%; Applied: 0; Backlog: 0/1000; Time: 2s(total), 1s(copy); streamer: mysql-bin.000003:39996; Lag: 0.01s, State: migrating; ETA: due [2020/07/21 23:01:37] [info] binlogsyncer.go:164 syncer is closing... [2020/07/21 23:01:37] [info] binlogsyncer.go:179 syncer is closed # Done

添加多个字段

./gh-ost --user="root" --host=192.168.10.60 --database="test" --table="tab" --alter="ADD COLUMN col02 varchar(10),add column col03 int not null default 0 comment '备注' " --allow-on-master --execute

删除字段

./gh-ost --user="root" --host=192.168.10.60 --database="test" --table="tab" --initially-drop-old-table --alter="DROP COLUMN col02 " --allow-on-master --execute

如果binlog使用STATEMENT模式,需要修改为ROW模式,看提示需要添加--switch-to-rbr,gh-ost 希望二进制文件使用基于行的日志格式,但这并不表示如果主库上使用的是基于语句的日志格式,就不能用它来在线修改表定义了。事实上,Github 常用的方式是用一个从库把日志的语句模式转成行模式,再从这个从库上去读日志。搭一个这样的从库并不复杂。

目前我的主从都是STATEMENT模式,所以就手动改模式:

SET SESSION binlog_format = 'ROW';

SET GLOBAL binlog_format = 'ROW';

压力下测试,同时测试以下场景时同时修改schema

- 1、在大量写;

- 2、启动主从同步的状态下;

大量写的脚本,灌10万条数据

create procedure addDatas() begin declare pid int; set pid = 100000; while pid>0 do insert into tab(name)values('testuser'); set pid = pid-1; end while; end 先执行 call addDatas() 再执行 ./gh-ost --user="root" --host=192.168.10.60 --database="test" --table="tab" --alter="ADD COLUMN oneColumn1 varchar(10)" --allow-on-master --execute

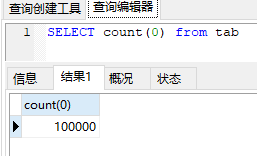

原来的数据为0条

执行后

输出结果

[root@hadoop001 home]# ./gh-ost --user="root" --host=192.168.10.60 --database="test" --table="tab" --alter="ADD COLUMN oneColumn1 varchar(10)" --allow-on-master --execute [2020/07/21 23:13:41] [info] binlogsyncer.go:133 create BinlogSyncer with config {99999 mysql 192.168.10.60 3306 root false false <nil> false UTC true 0 0s 0s 0 false} [2020/07/21 23:13:41] [info] binlogsyncer.go:354 begin to sync binlog from position (mysql-bin.000003, 48626709) [2020/07/21 23:13:41] [info] binlogsyncer.go:203 register slave for master server 192.168.10.60:3306 [2020/07/21 23:13:41] [info] binlogsyncer.go:723 rotate to (mysql-bin.000003, 48626709) # Migrating `test`.`tab`; Ghost table is `test`.`_tab_gho` # Migrating hadoop001:3306; inspecting hadoop001:3306; executing on hadoop001 # Migration started at Tue Jul 21 23:13:41 +0800 2020 # chunk-size: 1000; max-lag-millis: 1500ms; dml-batch-size: 10; max-load: ; critical-load: ; nice-ratio: 0.000000 # throttle-additional-flag-file: /tmp/gh-ost.throttle # Serving on unix socket: /tmp/gh-ost.test.tab.sock Copy: 0/12155 0.0%; Applied: 0; Backlog: 0/1000; Time: 0s(total), 0s(copy); streamer: mysql-bin.000003:48646230; Lag: 0.01s, State: migrating; ETA: N/A Copy: 0/12155 0.0%; Applied: 4741; Backlog: 3/1000; Time: 1s(total), 1s(copy); streamer: mysql-bin.000003:50271412; Lag: 0.01s, State: migrating; ETA: N/A Copy: 0/12155 0.0%; Applied: 9301; Backlog: 1/1000; Time: 2s(total), 2s(copy); streamer: mysql-bin.000003:51834667; Lag: 0.01s, State: migrating; ETA: N/A Copy: 0/12155 0.0%; Applied: 14004; Backlog: 9/1000; Time: 3s(total), 3s(copy); streamer: mysql-bin.000003:53444572; Lag: 0.01s, State: migrating; ETA: N/A Copy: 0/12155 0.0%; Applied: 18576; Backlog: 1/1000; Time: 4s(total), 4s(copy); streamer: mysql-bin.000003:55008409; Lag: 0.00s, State: migrating; ETA: N/A Copy: 0/12155 0.0%; Applied: 23194; Backlog: 2/1000; Time: 5s(total), 5s(copy); streamer: mysql-bin.000003:56589121; Lag: 0.01s, State: migrating; ETA: N/A Copy: 1000/12155 8.2%; Applied: 27909; Backlog: 4/1000; Time: 6s(total), 6s(copy); streamer: mysql-bin.000003:58218868; Lag: 0.01s, State: migrating; ETA: 1m6s Copy: 1000/12155 8.2%; Applied: 32645; Backlog: 0/1000; Time: 7s(total), 7s(copy); streamer: mysql-bin.000003:59839783; Lag: 0.01s, State: migrating; ETA: 1m18s Copy: 1000/12155 8.2%; Applied: 37399; Backlog: 5/1000; Time: 8s(total), 8s(copy); streamer: mysql-bin.000003:61468184; Lag: 0.01s, State: migrating; ETA: 1m29s Copy: 1000/12155 8.2%; Applied: 41908; Backlog: 6/1000; Time: 9s(total), 9s(copy); streamer: mysql-bin.000003:63017054; Lag: 0.01s, State: migrating; ETA: 1m40s Copy: 1000/12155 8.2%; Applied: 46481; Backlog: 3/1000; Time: 10s(total), 10s(copy); streamer: mysql-bin.000003:64587677; Lag: 0.01s, State: migrating; ETA: 1m51s Copy: 1000/12155 8.2%; Applied: 51207; Backlog: 3/1000; Time: 11s(total), 11s(copy); streamer: mysql-bin.000003:66206261; Lag: 0.01s, State: migrating; ETA: 2m2s Copy: 1000/12155 8.2%; Applied: 55899; Backlog: 3/1000; Time: 12s(total), 12s(copy); streamer: mysql-bin.000003:67813910; Lag: 0.01s, State: migrating; ETA: 2m13s Copy: 1000/12155 8.2%; Applied: 60535; Backlog: 4/1000; Time: 13s(total), 13s(copy); streamer: mysql-bin.000003:69415881; Lag: 0.01s, State: migrating; ETA: 2m25s Copy: 2000/12155 16.5%; Applied: 65086; Backlog: 2/1000; Time: 14s(total), 14s(copy); streamer: mysql-bin.000003:70998265; Lag: 0.01s, State: migrating; ETA: 1m11s Copy: 2000/12155 16.5%; Applied: 69623; Backlog: 7/1000; Time: 15s(total), 15s(copy); streamer: mysql-bin.000003:72564672; Lag: 0.01s, State: migrating; ETA: 1m16s Copy: 3000/12155 24.7%; Applied: 74058; Backlog: 2/1000; Time: 16s(total), 16s(copy); streamer: mysql-bin.000003:74113223; Lag: 0.01s, State: migrating; ETA: 48s Copy: 3000/12155 24.7%; Applied: 78445; Backlog: 5/1000; Time: 17s(total), 17s(copy); streamer: mysql-bin.000003:75628183; Lag: 0.01s, State: migrating; ETA: 51s Copy: 3000/12155 24.7%; Applied: 82853; Backlog: 4/1000; Time: 18s(total), 18s(copy); streamer: mysql-bin.000003:77151858; Lag: 0.00s, State: migrating; ETA: 54s Copy: 3000/12155 24.7%; Applied: 87229; Backlog: 5/1000; Time: 19s(total), 19s(copy); streamer: mysql-bin.000003:78663838; Lag: 0.01s, State: migrating; ETA: 57s Copy: 12254/12254 100.0%; Applied: 87746; Backlog: 0/1000; Time: 19s(total), 19s(copy); streamer: mysql-bin.000003:79018628; Lag: 0.01s, State: migrating; ETA: due Copy: 12254/12254 100.0%; Applied: 87746; Backlog: 1/1000; Time: 20s(total), 19s(copy); streamer: mysql-bin.000003:79022790; Lag: 0.00s, State: migrating; ETA: due # Migrating `test`.`tab`; Ghost table is `test`.`_tab_gho` # Migrating hadoop001:3306; inspecting hadoop001:3306; executing on hadoop001 # Migration started at Tue Jul 21 23:13:41 +0800 2020 # chunk-size: 1000; max-lag-millis: 1500ms; dml-batch-size: 10; max-load: ; critical-load: ; nice-ratio: 0.000000 # throttle-additional-flag-file: /tmp/gh-ost.throttle # Serving on unix socket: /tmp/gh-ost.test.tab.sock Copy: 12254/12254 100.0%; Applied: 87746; Backlog: 0/1000; Time: 20s(total), 19s(copy); streamer: mysql-bin.000003:79023531; Lag: 0.00s, State: migrating; ETA: due [2020/07/21 23:14:01] [info] binlogsyncer.go:164 syncer is closing... [2020/07/21 23:14:01] [info] binlogsyncer.go:179 syncer is closed # Done

如果在执行一次修改后,再执行其他修改,会被禁止,消息:

2020-07-21 23:23:34 FATAL Table `_tab_del` already exists. Panicking. Use --initially-drop-old-table to force dropping it, though I really prefer that you drop it or rename it away

需要手动删除`_tab_del`表

或者设置参数:

--ok-to-drop-table

go-ost执行完以后是否删除老表,加上此参数会自动删除老表。

一些参数

--max-load

迁移过程中,gh-ost会时刻关注负载情况,负载阀值是使用者自己定义,比如数据库的最大连接数,如果超过阀值,gh-ost不会退出,会等待到负载在阀值以下继续执行。

--critical-load

这个指的是gh-ost退出阀值,当负载超过这个阀值,gh-ost会停止并退出

--max-lag-millis

会监控从库的主从延迟情况,如果延迟秒数超过这个阀值,迁移不会退出,等待延迟秒数低于这个阀值继续迁移。