```java
import java.util.Arrays;
import java.util.List;
import java.util.Map;

import org.apache.spark.SparkConf;
import org.apache.spark.api.java.JavaPairRDD;
import org.apache.spark.api.java.JavaSparkContext;

import scala.Tuple2;

public class CountByKeyDemo {
    private static SparkConf conf = new SparkConf().setMaster("local").setAppName("countbykeydemo");
    private static JavaSparkContext jsc = new JavaSparkContext(conf);

    public static void main(String[] args) {
        List<Tuple2<String, Integer>> list = Arrays.asList(
                new Tuple2<String, Integer>("tele", 100),
                new Tuple2<String, Integer>("tele", 200),
                new Tuple2<String, Integer>("tele", 300),
                new Tuple2<String, Integer>("yeye", 50),
                new Tuple2<String, Integer>("yeye", 10),
                new Tuple2<String, Integer>("yeye", 70),
                new Tuple2<String, Integer>("wyc", 10000)
        );

        JavaPairRDD<String, Integer> rdd = jsc.parallelizePairs(list);

        // countByKey counts the elements per key (the Integer values are ignored)
        // and returns the result to the driver as a java.util.Map.
        Map<String, Long> map = rdd.countByKey();
        map.forEach((k, v) -> System.out.println(k + ":" + v));

        jsc.close();
    }
}
```
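`countByKey` counts how many elements share each key; the values (100, 200, ...) are not summed. The sketch below reproduces that counting on the same data without Spark, so the expected result can be checked locally. It assumes Java 9+ (`List.of`, `Map.entry`), uses `Map.Entry` in place of `scala.Tuple2`, and the class name `CountByKeyLocal` is hypothetical.

```java
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.stream.Collectors;

// Hypothetical helper: a local, Spark-free illustration of countByKey semantics.
public class CountByKeyLocal {
    // Counts how many pairs carry each key; the Integer values are ignored.
    static Map<String, Long> countByKey(List<Map.Entry<String, Integer>> pairs) {
        return pairs.stream()
                .collect(Collectors.groupingBy(Map.Entry::getKey, TreeMap::new, Collectors.counting()));
    }

    public static void main(String[] args) {
        List<Map.Entry<String, Integer>> list = List.of(
                Map.entry("tele", 100),
                Map.entry("tele", 200),
                Map.entry("tele", 300),
                Map.entry("yeye", 50),
                Map.entry("yeye", 10),
                Map.entry("yeye", 70),
                Map.entry("wyc", 10000));

        // TreeMap keeps keys sorted, so this prints tele:3, wyc:1, yeye:3.
        countByKey(list).forEach((k, v) -> System.out.println(k + ":" + v));
    }
}
```

Spark's own `countByKey` makes no ordering promise for the returned map; the `TreeMap` here is only to make the local output deterministic.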

scala

1 object CountByKeyDemo {

2 def main(args: Array[String]): Unit = {

3 val conf = new SparkConf().setMaster("local").setAppName("countdemo");

4 val sc = new SparkContext(conf);

5

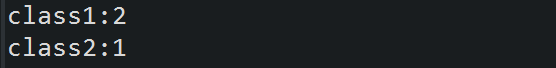

6 val arr = Array(("class1","tele"),("class1","yeye"),("class2","wyc"));

7 val rdd = sc.parallelize(arr,1);

8

9 val result = rdd.countByKey();

10 for((k,v) <- result) {

11 println(k + ":" + v);

12 }

13 }

14 }
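Because `countByKey` loads every count into the driver's memory, the Spark documentation suggests `rdd.mapValues(_ => 1L).reduceByKey(_ + _)` when the number of distinct keys is large, since that keeps the result as an RDD. The arithmetic is the same: replace each value with 1 and sum per key. Below is a Spark-free Java sketch of that formulation applied to the pairs from the Scala demo; it assumes Java 9+, the class `PairCounter` and method `countViaReduce` are hypothetical, and it illustrates the computation rather than Spark's implementation.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical class: models mapValues(_ => 1L) followed by reduceByKey(_ + _) on a local list.
public class PairCounter {
    static <K, V> Map<K, Long> countViaReduce(List<Map.Entry<K, V>> pairs) {
        Map<K, Long> counts = new LinkedHashMap<>();
        for (Map.Entry<K, V> pair : pairs) {
            // Map the value to 1L, then add it to the running total for this key.
            counts.merge(pair.getKey(), 1L, Long::sum);
        }
        return counts;
    }

    public static void main(String[] args) {
        List<Map.Entry<String, String>> arr = List.of(
                Map.entry("class1", "tele"),
                Map.entry("class1", "yeye"),
                Map.entry("class2", "wyc"));
        // LinkedHashMap preserves first-seen key order: prints class1:2 then class2:1.
        countViaReduce(arr).forEach((k, v) -> System.out.println(k + ":" + v));
    }
}
```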