Spark can be built in two ways: with SBT or with Maven. Anyone who has worked with Java will probably be familiar with Maven, so this post uses Maven to build the Spark source.

Before starting the build, set up a Maven environment on your system.

For installing Maven on Linux, see:

http://www.runoob.com/maven/maven-setup.html

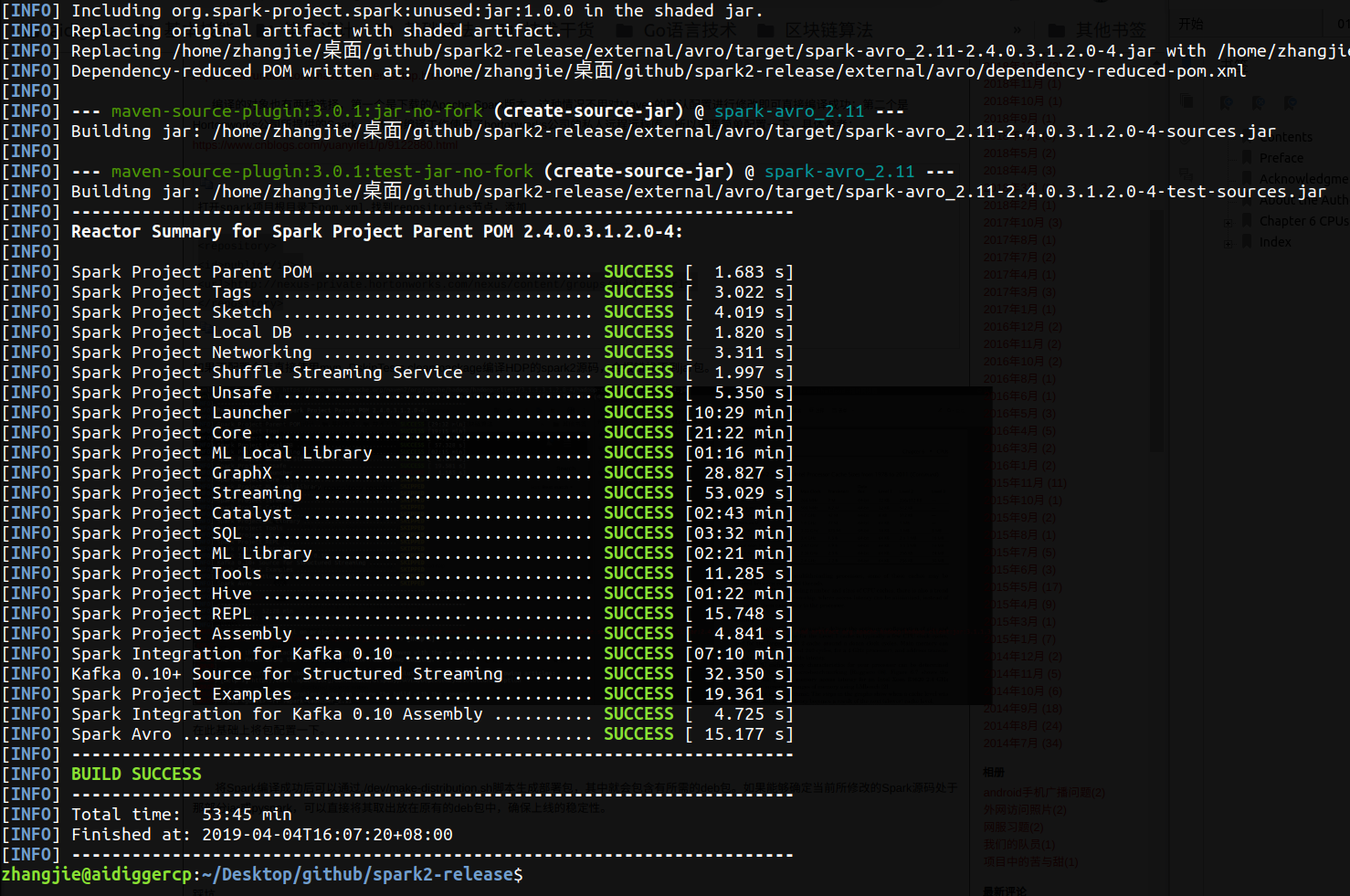
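Before going further, it is worth confirming that Maven is actually usable from the shell. The following is a minimal sketch: `have_cmd` is a hypothetical helper name, and the `MAVEN_OPTS` value follows the memory settings recommended in Spark's build documentation for the 2.x line, so adjust it if your machine differs.

```shell
#!/bin/sh
# Sketch: confirm Maven is on PATH before starting the Spark build.

# Returns 0 when the given command is available on PATH (hypothetical helper).
have_cmd() {
  command -v "$1" >/dev/null 2>&1
}

if have_cmd mvn; then
  mvn -v    # prints the Maven, Java, and OS versions
else
  echo "mvn not found: install Maven and add its bin directory to PATH" >&2
fi

# Give Maven more heap and code cache; the Spark build is memory hungry.
export MAVEN_OPTS="-Xmx2g -XX:ReservedCodeCacheSize=512m"
```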

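There are also two choices of source to build. The first is the Apache Spark release, which builds successfully without any change to Maven's default configuration. The second is the Spark distributed by Hortonworks; its build depends on Hortonworks' private remote repository, so a small amount of configuration is needed. For details, see: https://www.cnblogs.com/yuanyifei1/p/9122880.html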

Open pom.xml in the root directory of the Spark project, find the repositories node, and add <repository> <id>public</id> <url>http://nexus-private.hortonworks.com/nexus/content/groups/public</url> </repository>

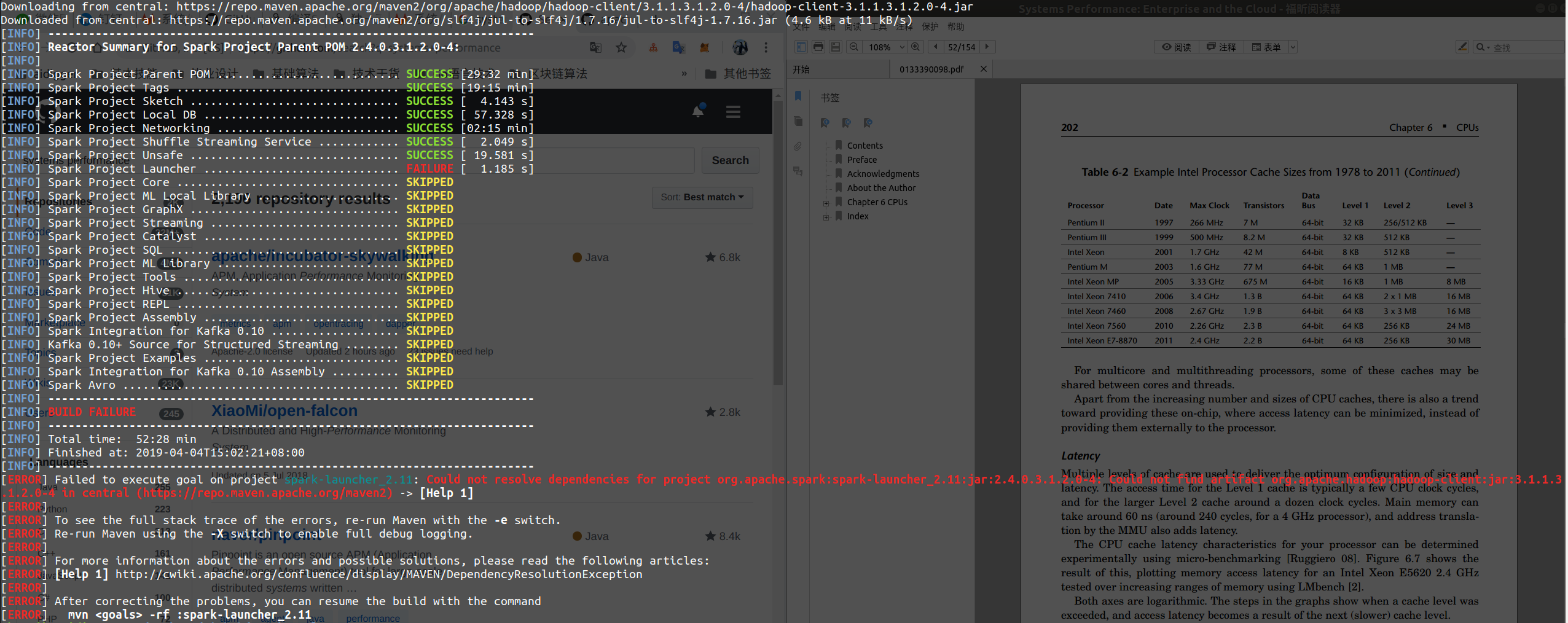

If you build the HDP spark2 source with mvn -DskipTests clean package without configuring this repository, the build fails because the required jars cannot be found.
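To avoid discovering the missing repository only after several minutes of building, the check can be scripted. This is a rough sketch, not part of the Spark tooling: `has_hdp_repo` and `build_spark` are hypothetical helper names, and the check is a plain text search for the repository URL rather than a real XML parse.

```shell
#!/bin/sh
# Returns 0 if the given pom.xml mentions the Hortonworks repository URL.
has_hdp_repo() {
  grep -qF 'nexus-private.hortonworks.com/nexus/content/groups/public' "$1"
}

# Runs the Maven build only when the repository entry is present.
# Usage (from the Spark project root): build_spark pom.xml
build_spark() {
  pom="${1:-pom.xml}"
  if has_hdp_repo "$pom"; then
    mvn -DskipTests clean package
  else
    echo "Hortonworks repository missing from $pom; add it under <repositories> first" >&2
    return 1
  fi
}
```

Calling `build_spark pom.xml` from the project root then either starts the build or stops immediately with a hint about the missing entry.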

With that in place, the next step is to set up the packaging.

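Once Spark has been built successfully, the ./dev/make-distribution.sh script can generate a deployment package, which will contain the required deb packages. If you can determine which jar, or which part of pyspark, your source changes ended up in, you can take just that piece and place it into the original deb package, which keeps the production rollout stable.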
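The two steps described here could look roughly like the sketch below. Everything specific in it is an assumption: the profile list passed to make-distribution.sh, the helper names `make_dist` and `repack_deb`, and the use of `dpkg-deb` to unpack and rebuild the package. The path of the jar inside the deb depends on your HDP version, so it is left as an argument.

```shell
#!/bin/sh
# Build a distributable tarball. The profiles are an assumption; match them to your cluster.
make_dist() {
  ./dev/make-distribution.sh --name custom-spark --tgz \
    -Phadoop-2.7 -Phive -Phive-thriftserver -Pyarn
}

# Swap one file inside an existing deb and rebuild it (hypothetical helper).
# Usage: repack_deb <old.deb> <path-inside-package> <new-file> <out.deb>
repack_deb() {
  if [ "$#" -ne 4 ]; then
    echo "usage: repack_deb <old.deb> <path-inside-package> <new-file> <out.deb>" >&2
    return 2
  fi
  workdir=$(mktemp -d) || return 1
  status=0
  {
    # -R unpacks the file tree together with the DEBIAN control directory.
    dpkg-deb -R "$1" "$workdir" &&
      cp "$3" "$workdir/$2" &&      # overwrite only the file that changed
      dpkg-deb -b "$workdir" "$4"   # rebuild the package from the unpacked tree
  } || status=$?
  rm -rf "$workdir"
  return "$status"
}
```

Replacing a single jar this way leaves the rest of the vendor package untouched, which is the point of the approach: the smaller the difference from the original deb, the smaller the risk when it goes live.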

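After the Hortonworks source has been built and packaged, it still has to be installed. Hortonworks does not encourage deploying modified source, and its installation packages are configured with GPG verification, so the encrypted files have to be cracked before the package can be installed.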

Pitfalls

main:
[exec] fatal: not a git repository (or any parent up to mount point /)
[exec] Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).
[exec] fatal: not a git repository (or any parent up to mount point /)
[exec] Stopping at filesystem boundary (GIT_DISCOVERY_ACROSS_FILESYSTEM not set).
[INFO] Executed tasks
[INFO]
[INFO] --- maven-resources-plugin:2.7:resources (default-resources) @ spark-core_2.11 ---
[INFO] Using 'UTF-8' encoding to copy filtered resources.
[INFO] Copying 37 resources
[INFO] Copying 1 resource
[INFO] Copying 3 resources
[INFO]
[INFO] --- maven-compiler-plugin:3.7.0:compile (default-compile) @ spark-core_2.11 ---
[INFO] Not compiling main sources
[INFO]
[INFO] --- scala-maven-plugin:3.2.2:compile (scala-compile-first) @ spark-core_2.11 ---
[INFO] Using zinc server for incremental compilation
[warn] Pruning sources from previous analysis, due to incompatible CompileSetup.
[info] Compiling 490 Scala sources and 77 Java sources to /home/zhangjie/spark-2.3.1/core/target/scala-2.11/classes...
[error] /home/zhangjie/spark-2.3.1/core/src/main/scala/org/apache/spark/api/python/PythonRunner.scala:184: value getLocalProperties is not a member of org.apache.spark.TaskContextImpl
[error] val localProps = context.asInstanceOf[TaskContextImpl].getLocalProperties.asScala
[error] ^
[error] one error found
[error] Compile failed at Dec 24, 2018 3:28:39 PM [15.814s]
[INFO] ------------------------------------------------------------------------
[INFO] Reactor Summary for Spark Project Parent POM 2.3.1:
[INFO]
[INFO] Spark Project Parent POM ........................... SUCCESS [ 1.751 s]
[INFO] Spark Project Tags ................................. SUCCESS [ 9.577 s]
[INFO] Spark Project Sketch ............................... SUCCESS [ 2.242 s]
[INFO] Spark Project Local DB ............................. SUCCESS [01:08 min]
[INFO] Spark Project Networking ........................... SUCCESS [ 16.155 s]
[INFO] Spark Project Shuffle Streaming Service ............ SUCCESS [ 2.053 s]
[INFO] Spark Project Unsafe ............................... SUCCESS [ 24.178 s]
[INFO] Spark Project Launcher ............................. SUCCESS [01:03 min]
[INFO] Spark Project Core ................................. FAILURE [01:18 min]
[INFO] Spark Project ML Local Library ..................... SKIPPED
[INFO] Spark Project GraphX ............................... SKIPPED
[INFO] Spark Project Streaming ............................ SKIPPED
[INFO] Spark Project Catalyst ............................. SKIPPED
[INFO] Spark Project SQL .................................. SKIPPED
[INFO] Spark Project ML Library ........................... SKIPPED
[INFO] Spark Project Tools ................................ SKIPPED
[INFO] Spark Project Hive ................................. SKIPPED
[INFO] Spark Project REPL ................................. SKIPPED
[INFO] Spark Project Assembly ............................. SKIPPED
[INFO] Spark Integration for Kafka 0.10 ................... SKIPPED
[INFO] Kafka 0.10 Source for Structured Streaming ......... SKIPPED
[INFO] Spark Project Examples ............................. SKIPPED
[INFO] Spark Integration for Kafka 0.10 Assembly .......... SKIPPED
[INFO] ------------------------------------------------------------------------
[INFO] BUILD FAILURE
[INFO] ------------------------------------------------------------------------
[INFO] Total time: 04:26 min
[INFO] Finished at: 2018-12-24T15:28:39+08:00
[INFO] ------------------------------------------------------------------------
[ERROR] Failed to execute goal net.alchim31.maven:scala-maven-plugin:3.2.2:compile (scala-compile-first) on project spark-core_2.11: Execution scala-compile-first of goal net.alchim31.maven:scala-maven-plugin:3.2.2:compile failed.: CompileFailed -> [Help 1]
[ERROR]
[ERROR] To see the full stack trace of the errors, re-run Maven with the -e switch.
[ERROR] Re-run Maven using the -X switch to enable full debug logging.
[ERROR]
[ERROR] For more information about the errors and possible solutions, please read the following articles:
[ERROR] [Help 1] http://cwiki.apache.org/confluence/display/MAVEN/PluginExecutionException
[ERROR]
[ERROR] After correcting the problems, you can resume the build with the command
[ERROR] mvn <goals> -rf :spark-core_2.11

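The cause was that my changes to the Scala source were incomplete: PythonRunner.scala calls getLocalProperties, which TaskContextImpl does not define. When you hit this kind of error, carefully review the code you modified.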
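For this kind of half-finished change, searching the source tree for the symbol named in the compiler error is usually the quickest way to see what was left behind. A small sketch, with `find_symbol` and `resume_build` as hypothetical helper names; the `-rf` resume flag is the one Maven itself suggests at the end of the log.

```shell
#!/bin/sh
# Lists every Scala/Java line under a directory that mentions a symbol.
# Usage: find_symbol getLocalProperties core/src/main
find_symbol() {
  grep -rn --include='*.scala' --include='*.java' -- "$1" "$2"
}

# Resumes a failed multi-module build from the given module,
# so the modules that already succeeded are not rebuilt.
# Usage: resume_build spark-core_2.11
resume_build() {
  mvn -DskipTests package -rf ":$1"
}
```

If the search shows callers of a method but no definition, the definition was probably part of the change that got lost, which matches the error above.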

Also, when building the source, make sure the path contains no Chinese characters; otherwise the build can hang.
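A quick pre-flight check for the path can save a hung build. The sketch below is an approximation: it flags any non-ASCII byte rather than Chinese characters specifically, and `is_ascii_path` is a hypothetical helper name.

```shell
#!/bin/sh
# Returns 0 when the given path consists of ASCII bytes only.
is_ascii_path() {
  # Delete every ASCII byte; whatever is left must be non-ASCII.
  rest=$(printf '%s' "$1" | LC_ALL=C tr -d '\000-\177')
  [ -z "$rest" ]
}

if is_ascii_path "$PWD"; then
  echo "path OK: $PWD"
else
  echo "non-ASCII characters in path, move the source tree: $PWD" >&2
fi
```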

References: