Hive hook是hive的钩子函数,可以下面这几种情况下被执行

参考

https://www.slideshare.net/julingks/apache-hive-hooksminwookim130813

这些hook函数的hive sql运行过程中的执行顺序

Driver.run() => HiveDriverRunHook.preDriverRun()(hive.exec.driver.run.hooks) => Driver.compile() => HiveSemanticAnalyzerHook.preAnalyze()(hive.semantic.analyzer.hook) => SemanticAnalyze(QueryBlock, LogicalPlan, PhyPlan, TaskTree) => HiveSemanticAnalyzerHook.postAnalyze()(hive.semantic.analyzer.hook) => QueryString redactor(hive.exec.query.redactor.hooks) => QueryPlan Generation => Authorization => Driver.execute() => ExecuteWithHookContext.run() || PreExecute.run() (hive.exec.pre.hooks) => TaskRunner => if failed, ExecuteWithHookContext.run()(hive.exec.failure.hooks) => ExecuteWithHookContext.run() || PostExecute.run() (hive.exec.post.hooks) => HiveDriverRunHook.postDriverRun()(hive.exec.driver.run.hooks)

参考

https://my.oschina.net/kavn/blog/1514648

1.ExecuteWithHookContext接口

下面将实现ExecuteWithHookContext接口来实现一个钩子函数,其他的hook还有实现HiveSemanticAnalyzerHook接口,继承AbstractSemanticAnalyzerHook抽象类等

ExecuteWithHookContext可以实现3种类型的hook,分别是pre-execution,post-execution和execution-failure,这个在hive sql的执行过程中已经处于最后几个步骤了

依赖

需要注意依赖的版本需要和集群保持一致,我的cdh集群的版本为cdh5.16.2

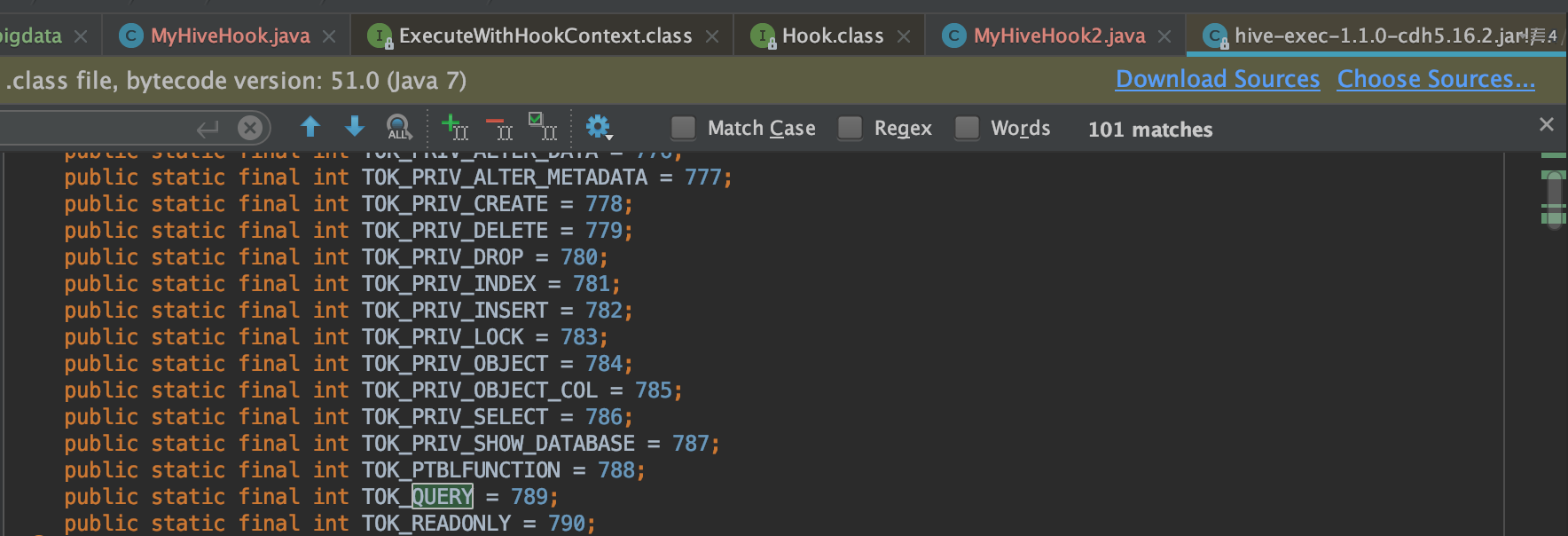

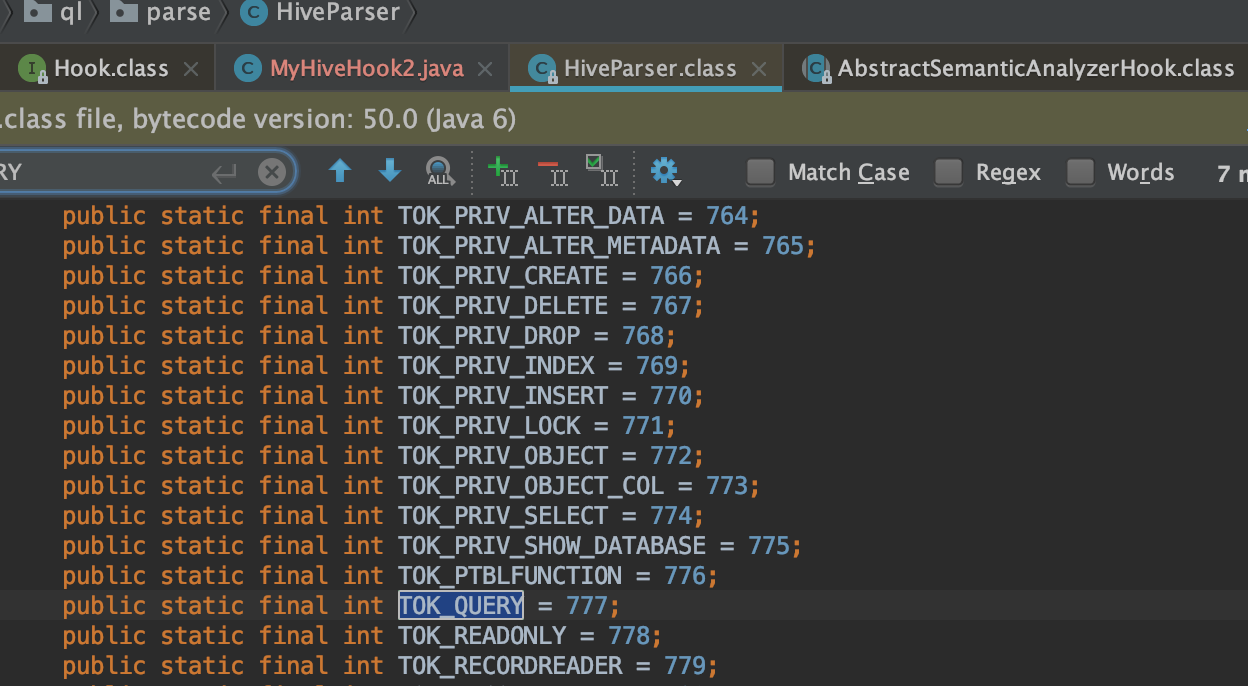

如果使用的是apache版本的1.1.0版本的hive-exec的话,代码的内容会和cloudera的1.1.0-cdh5.16.2的hive-exec会有些不同,比如

1.1.0-cdh5.16.2版本的TOK_QUERY=789

但是1.1.0版本的TOK_QUERY=777

<?xml version="1.0" encoding="UTF-8"?>

<project xmlns="http://maven.apache.org/POM/4.0.0"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"

xsi:schemaLocation="http://maven.apache.org/POM/4.0.0 http://maven.apache.org/xsd/maven-4.0.0.xsd">

<parent>

<artifactId>interview-parent</artifactId>

<groupId>com.interview</groupId>

<version>1.0-SNAPSHOT</version>

</parent>

<modelVersion>4.0.0</modelVersion>

<artifactId>interview-bigdata</artifactId>

<dependencies>

<!-- logback -->

<dependency>

<groupId>org.slf4j</groupId>

<artifactId>log4j-over-slf4j</artifactId>

<version>1.7.25</version>

</dependency>

<!--hive-->

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-exec</artifactId>

<version>1.1.0-cdh5.16.2</version>

</dependency>

<dependency>

<groupId>org.apache.hive</groupId>

<artifactId>hive-metastore</artifactId>

<version>1.1.0-cdh5.16.2</version>

</dependency>

<!--hadoop-->

<dependency>

<groupId>org.apache.hadoop</groupId>

<artifactId>hadoop-common</artifactId>

<version>2.6.0-cdh5.16.2</version>

</dependency>

</dependencies>

<!--<build>-->

<!--<plugins>-->

<!--<plugin>-->

<!--<groupId>org.apache.maven.plugins</groupId>-->

<!--<artifactId>maven-shade-plugin</artifactId>-->

<!--<executions>-->

<!--<!– Run shade goal on package phase –>-->

<!--<execution>-->

<!--<phase>package</phase>-->

<!--<goals>-->

<!--<goal>shade</goal>-->

<!--</goals>-->

<!--<configuration>-->

<!--<filters>-->

<!--<filter>-->

<!--<!– Do not copy the signatures in the META-INF folder.-->

<!--Otherwise, this might cause SecurityExceptions when using the JAR. –>-->

<!--<artifact>*:*</artifact>-->

<!--<excludes>-->

<!--<exclude>META-INF/*.SF</exclude>-->

<!--<exclude>META-INF/*.DSA</exclude>-->

<!--<exclude>META-INF/*.RSA</exclude>-->

<!--</excludes>-->

<!--</filter>-->

<!--</filters>-->

<!--<createDependencyReducedPom>false</createDependencyReducedPom>-->

<!--</configuration>-->

<!--</execution>-->

<!--</executions>-->

<!--</plugin>-->

<!--<plugin>-->

<!--<groupId>org.apache.maven.plugins</groupId>-->

<!--<artifactId>maven-compiler-plugin</artifactId>-->

<!--<configuration>-->

<!--<source>1.8</source>-->

<!--<target>1.8</target>-->

<!--</configuration>-->

<!--</plugin>-->

<!--</plugins>-->

<!--</build>-->

</project>

代码,只是简单的打印了一行日志

package com.bigdata.hive;

import org.apache.hadoop.hive.ql.hooks.ExecuteWithHookContext;

import org.apache.hadoop.hive.ql.hooks.HookContext;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class MyHiveHook implements ExecuteWithHookContext {

private static Logger logger = LoggerFactory.getLogger(MyHiveHook.class);

public void run(HookContext hookContext) throws Exception {

logger.info("this is my hive hook");

}

}

打包

mvn clean package

将打好的jar包上传到所有hiveserver2所在机器的/var/lib/hive目录下,或者找一个hdfs目录

root@master:/var/lib/hive# ls examples.desktop interview-bigdata-1.0-SNAPSHOT.jar

修改owner成hive

sudo chown hive:hive ./interview-bigdata-1.0-SNAPSHOT.jar

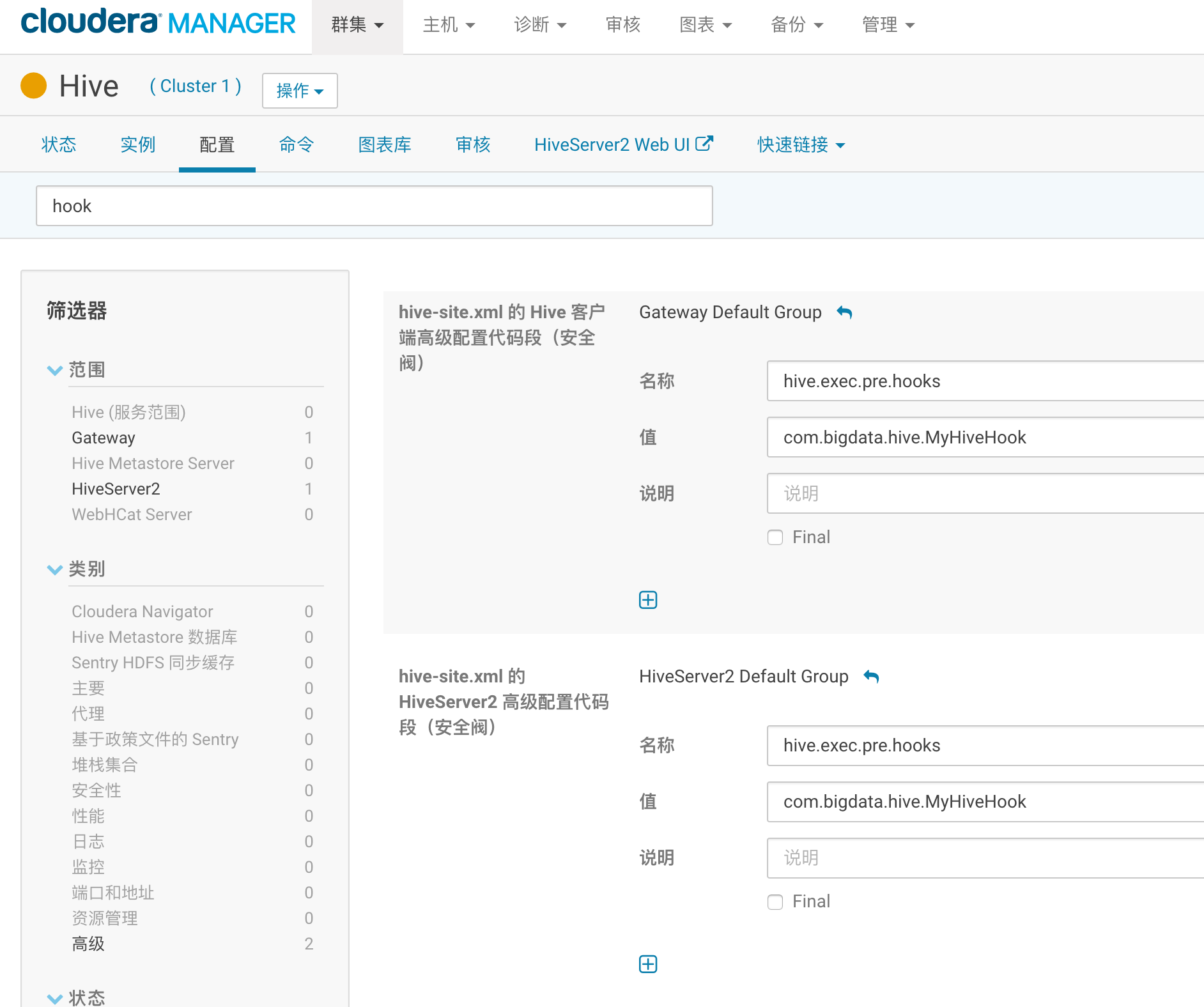

去cloudera manager中配置hive的辅助jar目录和hook函数

jar目录

hook函数,此处配置成hive.exec.pre.hooks,此时添加的hook函数将在sql执行之前运行

第一次配置之后需要重启hive集群,之后替换jar包的时候就只需要在hue中执行reload命令即可

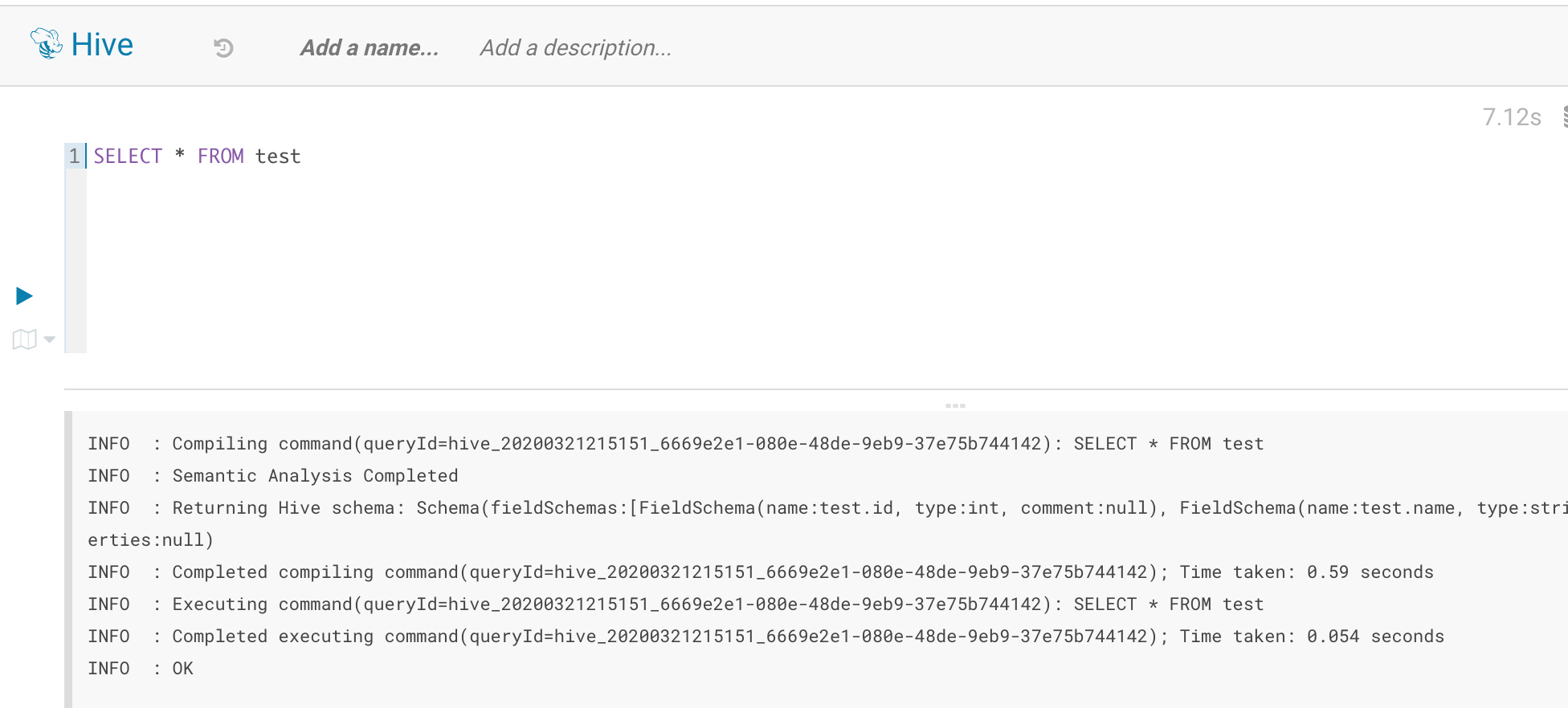

执行sql

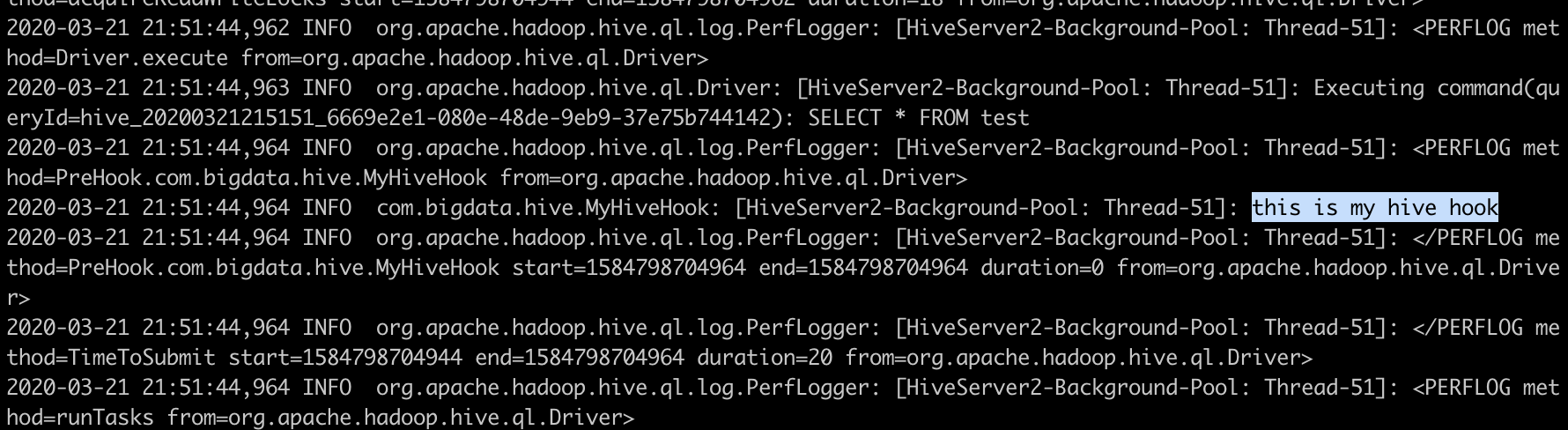

查看hiveserver2日志

tail -n 100 /var/log/hive/hadoop-cmf-hive-HIVESERVER2-master.log.out

可以看到打印的日志

下面尝试获取一下截取query,并进行打印

参考了

https://towardsdatascience.com/apache-hive-hooks-and-metastore-listeners-a-tale-of-your-metadata-903b751ee99f

代码,替换jar包的时候需要重启hive才能生效

package com.bigdata.hive;

import org.apache.hadoop.hive.metastore.api.Database;

import org.apache.hadoop.hive.ql.QueryPlan;

import org.apache.hadoop.hive.ql.hooks.*;

import org.apache.hadoop.hive.ql.plan.HiveOperation;

import org.codehaus.jackson.map.ObjectMapper;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

import java.util.HashSet;

import java.util.Set;

public class MyHiveHook implements ExecuteWithHookContext {

private static Logger logger = LoggerFactory.getLogger(MyHiveHook.class);

private static final HashSet<String> OPERATION_NAMES = new HashSet<>();

static {

OPERATION_NAMES.add(HiveOperation.CREATETABLE.getOperationName());

OPERATION_NAMES.add(HiveOperation.ALTERDATABASE.getOperationName());

OPERATION_NAMES.add(HiveOperation.ALTERDATABASE_OWNER.getOperationName());

OPERATION_NAMES.add(HiveOperation.ALTERTABLE_ADDCOLS.getOperationName());

OPERATION_NAMES.add(HiveOperation.ALTERTABLE_LOCATION.getOperationName());

OPERATION_NAMES.add(HiveOperation.ALTERTABLE_PROPERTIES.getOperationName());

OPERATION_NAMES.add(HiveOperation.ALTERTABLE_RENAME.getOperationName());

OPERATION_NAMES.add(HiveOperation.ALTERTABLE_RENAMECOL.getOperationName());

OPERATION_NAMES.add(HiveOperation.ALTERTABLE_REPLACECOLS.getOperationName());

OPERATION_NAMES.add(HiveOperation.CREATEDATABASE.getOperationName());

OPERATION_NAMES.add(HiveOperation.DROPDATABASE.getOperationName());

OPERATION_NAMES.add(HiveOperation.DROPTABLE.getOperationName());

}

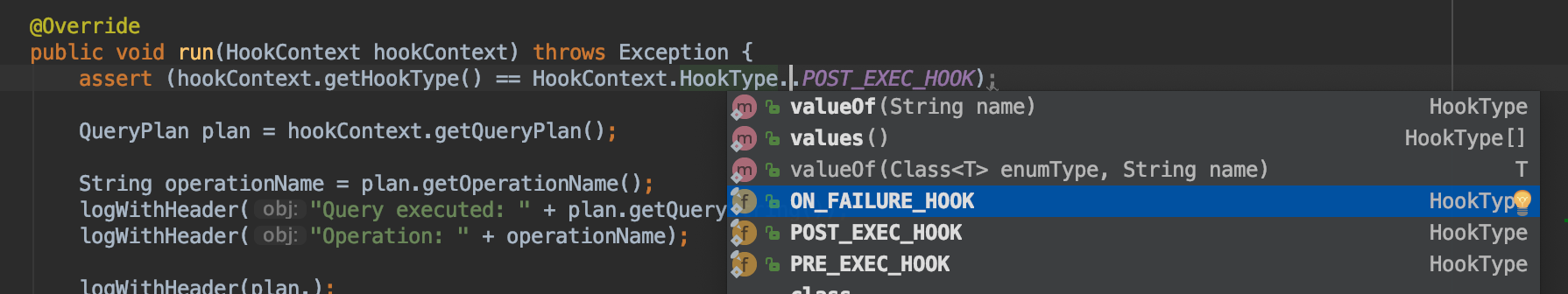

@Override

public void run(HookContext hookContext) throws Exception {

assert (hookContext.getHookType() == HookContext.HookType.POST_EXEC_HOOK);

QueryPlan plan = hookContext.getQueryPlan();

String operationName = plan.getOperationName();

logWithHeader("Query executed: " + plan.getQueryString());

logWithHeader("Operation: " + operationName);

if (OPERATION_NAMES.contains(operationName)

&& !plan.isExplain()) {

logWithHeader("Monitored Operation");

Set<ReadEntity> inputs = hookContext.getInputs();

Set<WriteEntity> outputs = hookContext.getOutputs();

for (Entity entity : inputs) {

logWithHeader("Hook metadata input value: " + toJson(entity));

}

for (Entity entity : outputs) {

logWithHeader("Hook metadata output value: " + toJson(entity));

}

} else {

logWithHeader("Non-monitored Operation, ignoring hook");

}

}

private static String toJson(Entity entity) throws Exception {

ObjectMapper mapper = new ObjectMapper();

switch (entity.getType()) {

case DATABASE:

Database db = entity.getDatabase();

return mapper.writeValueAsString(db);

case TABLE:

return mapper.writeValueAsString(entity.getTable().getTTable());

}

return null;

}

private void logWithHeader(Object obj){

logger.info("[CustomHook][Thread: "+Thread.currentThread().getName()+"] | " + obj);

}

}

输出,可以看到select * from test,执行的将会成为两个operation,

一个是SWITCHDATABASE

2020-03-22 23:50:33,374 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Handler-Pool: Thread-39]: <PERFLOG method=PreHook.com.bigdata.hive.MyHiveHook from=org.apache.hadoop.hive.ql.Driver> 2020-03-22 23:50:33,374 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Handler-Pool: Thread-39]: [CustomHook][Thread: HiveServer2-Handler-Pool: Thread-39] | Query executed: USE `default` 2020-03-22 23:50:33,374 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Handler-Pool: Thread-39]: [CustomHook][Thread: HiveServer2-Handler-Pool: Thread-39] | Operation: SWITCHDATABASE 2020-03-22 23:50:33,374 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Handler-Pool: Thread-39]: [CustomHook][Thread: HiveServer2-Handler-Pool: Thread-39] | Non-monitored Operation, ignoring hook 2020-03-22 23:50:33,375 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Handler-Pool: Thread-39]: </PERFLOG method=PreHook.com.bigdata.hive.MyHiveHook start=1584892233374 end=1584892233375 duration=1 from=org.apache.hadoop.hive.ql.Driver>

一个是QUERY

2020-03-22 23:50:35,282 INFO org.apache.hadoop.hive.ql.Driver: [HiveServer2-Background-Pool: Thread-50]: Executing command(queryId=hive_20200322235050_83cdb414-52bd-4990-9d20-87f5dc0d76dc): SELECT * from test 2020-03-22 23:50:35,283 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-50]: <PERFLOG method=PreHook.com.bigdata.hive.MyHiveHook from=org.apache.hadoop.hive.ql.Driver> 2020-03-22 23:50:35,283 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-50]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-50] | Query executed: SELECT * from test 2020-03-22 23:50:35,283 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-50]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-50] | Operation: QUERY 2020-03-22 23:50:35,283 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-50]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-50] | Non-monitored Operation, ignoring hook 2020-03-22 23:50:35,283 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-50]: </PERFLOG method=PreHook.com.bigdata.hive.MyHiveHook start=1584892235283 end=1584892235283 duration=0 from=org.apache.hadoop.hive.ql.Driver>

如果执行的是建表语句,比如

CREATE TABLE `test1`( `id` int, `name` string) ROW FORMAT SERDE 'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe' STORED AS INPUTFORMAT 'org.apache.hadoop.mapred.TextInputFormat' OUTPUTFORMAT 'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat' LOCATION 'hdfs://master:8020/user/hive/warehouse/test' TBLPROPERTIES ( 'COLUMN_STATS_ACCURATE'='true', 'numFiles'='3', 'numRows'='6', 'rawDataSize'='36', 'totalSize'='42', 'transient_lastDdlTime'='1584893066')

那么hook函数的输出将会是

2020-03-23 00:08:16,486 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-94]: <PERFLOG method=PreHook.com.bigdata.hive.MyHiveHook from=org.apache.hadoop.hive.ql.Driver>

2020-03-23 00:08:16,486 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-94]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-94] | Query executed: CREATE TABLE `test1`(

`id` int,

`name` string)

ROW FORMAT SERDE

'org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe'

STORED AS INPUTFORMAT

'org.apache.hadoop.mapred.TextInputFormat'

OUTPUTFORMAT

'org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat'

LOCATION

'hdfs://master:8020/user/hive/warehouse/test'

TBLPROPERTIES (

'COLUMN_STATS_ACCURATE'='true',

'numFiles'='3',

'numRows'='6',

'rawDataSize'='36',

'totalSize'='42',

'transient_lastDdlTime'='1584893066')

2020-03-23 00:08:16,486 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-94]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-94] | Operation: CREATETABLE

2020-03-23 00:08:16,486 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-94]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-94] | Monitored Operation

2020-03-23 00:08:16,487 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-94]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-94] | Hook metadata input value: null

2020-03-23 00:08:16,497 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-94]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-94] | Hook metadata output value: {"description":"Default Hive database","name":"default","parameters":{},"ownerType":"ROLE","setName":true,"setDescription":true,"locationUri":"hdfs://master:8020/user/hive/warehouse","setLocationUri":true,"setOwnerName":true,"ownerName":"public","setPrivileges":false,"setOwnerType":true,"parametersSize":0,"setParameters":true,"privileges":null}

2020-03-23 00:08:16,519 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-94]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-94] | Hook metadata output value: {"lastAccessTime":0,"parameters":{},"owner":"hive","ownerType":"USER","dbName":"default","tableType":"MANAGED_TABLE","tableName":"test1","setTableName":true,"setOwner":true,"retention":0,"setRetention":false,"partitionKeysSize":0,"partitionKeysIterator":[],"setPartitionKeys":true,"viewOriginalText":null,"setViewOriginalText":false,"viewExpandedText":null,"setViewExpandedText":false,"setTableType":true,"setPrivileges":false,"setTemporary":false,"setOwnerType":true,"setDbName":true,"createTime":1584893296,"setCreateTime":true,"setLastAccessTime":false,"setSd":true,"parametersSize":0,"setParameters":true,"privileges":null,"sd":{"location":null,"parameters":{},"numBuckets":-1,"inputFormat":"org.apache.hadoop.mapred.SequenceFileInputFormat","outputFormat":"org.apache.hadoop.hive.ql.io.HiveSequenceFileOutputFormat","compressed":false,"sortCols":[],"parametersSize":0,"setParameters":true,"cols":[],"colsSize":0,"serdeInfo":{"setSerializationLib":true,"name":null,"parameters":{"serialization.format":"1"},"setName":false,"parametersSize":1,"setParameters":true,"serializationLib":"org.apache.hadoop.hive.serde2.MetadataTypedColumnsetSerDe"},"skewedInfo":{"skewedColNamesSize":0,"skewedColNamesIterator":[],"setSkewedColNames":true,"skewedColValuesSize":0,"skewedColValuesIterator":[],"setSkewedColValues":true,"skewedColValueLocationMapsSize":0,"setSkewedColValueLocationMaps":true,"skewedColValueLocationMaps":{},"skewedColNames":[],"skewedColValues":[]},"bucketCols":[],"setNumBuckets":true,"setSerdeInfo":true,"bucketColsSize":0,"bucketColsIterator":[],"setBucketCols":true,"sortColsSize":0,"sortColsIterator":[],"setSortCols":true,"setSkewedInfo":true,"storedAsSubDirectories":false,"setStoredAsSubDirectories":false,"colsIterator":[],"setCols":true,"setLocation":false,"setInputFormat":true,"setOutputFormat":true,"setCompressed":false},"temporary":false,"partitionKeys":[]}

2020-03-23 00:08:16,519 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-94]: </PERFLOG method=PreHook.com.bigdata.hive.MyHiveHook start=1584893296486 end=1584893296519 duration=33 from=org.apache.hadoop.hive.ql.Driver>

2020-03-23 00:08:16,519 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-94]: </PERFLOG method=TimeToSubmit start=1584893296477 end=1584893296519 duration=42 from=org.apache.hadoop.hive.ql.Driver>

如果执行的是修改字段的语句,比如

ALTER TABLE test1 CHANGE id id String COMMENT "test"

那么hook函数的输出会是

2020-03-28 14:19:12,912 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Handler-Pool: Thread-39]: </PERFLOG method=compile start=1585376352892 end=1585376352912 duration=20 from=org.apache.hadoop.hive.ql.Driver>

2020-03-28 14:19:12,912 INFO org.apache.hadoop.hive.ql.Driver: [HiveServer2-Handler-Pool: Thread-39]: Completed compiling command(queryId=hive_20200328141919_d2739f08-478e-4f95-949b-e0bd176e4eab); Time taken: 0.02 seconds

2020-03-28 14:19:12,913 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=Driver.run from=org.apache.hadoop.hive.ql.Driver>

2020-03-28 14:19:12,913 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=TimeToSubmit from=org.apache.hadoop.hive.ql.Driver>

2020-03-28 14:19:12,913 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=acquireReadWriteLocks from=org.apache.hadoop.hive.ql.Driver>

2020-03-28 14:19:12,922 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: </PERFLOG method=acquireReadWriteLocks start=1585376352913 end=1585376352922 duration=9 from=org.apache.hadoop.hive.ql.Driver>

2020-03-28 14:19:12,922 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=Driver.execute from=org.apache.hadoop.hive.ql.Driver>

2020-03-28 14:19:12,922 INFO org.apache.hadoop.hive.ql.Driver: [HiveServer2-Background-Pool: Thread-79]: Executing command(queryId=hive_20200328141919_d2739f08-478e-4f95-949b-e0bd176e4eab): ALTER TABLE test1 CHANGE id id String COMMENT "test"

2020-03-28 14:19:12,923 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Background-Pool: Thread-79]: <PERFLOG method=PreHook.com.bigdata.hive.MyHiveHook from=org.apache.hadoop.hive.ql.Driver>

2020-03-28 14:19:12,923 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Query executed: ALTER TABLE test1 CHANGE id id String COMMENT "test"

2020-03-28 14:19:12,923 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Operation: ALTERTABLE_RENAMECOL

2020-03-28 14:19:12,923 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Monitored Operation

2020-03-28 14:19:12,928 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Hook metadata input value: {"lastAccessTime":0,"ownerType":"USER","parameters":{"last_modified_time":"1585376257","totalSize":"0","numRows":"-1","rawDataSize":"-1","COLUMN_STATS_ACCURATE":"false","numFiles":"0","transient_lastDdlTime":"1585376257","last_modified_by":"hive"},"owner":"hive","tableName":"test1","dbName":"default","tableType":"MANAGED_TABLE","privileges":null,"sd":{"location":"hdfs://master:8020/user/hive/warehouse/test","parameters":{},"inputFormat":"org.apache.hadoop.mapred.TextInputFormat","outputFormat":"org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat","compressed":false,"numBuckets":-1,"sortCols":[],"cols":[{"comment":"test","name":"id","type":"string","setName":true,"setType":true,"setComment":true},{"comment":null,"name":"name","type":"string","setName":true,"setType":true,"setComment":false}],"colsSize":2,"serdeInfo":{"setSerializationLib":true,"name":null,"parameters":{"serialization.format":"1"},"serializationLib":"org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe","parametersSize":1,"setParameters":true,"setName":false},"skewedInfo":{"skewedColNamesSize":0,"skewedColNamesIterator":[],"setSkewedColNames":true,"skewedColValuesSize":0,"skewedColValuesIterator":[],"setSkewedColValues":true,"skewedColValueLocationMapsSize":0,"setSkewedColValueLocationMaps":true,"skewedColValueLocationMaps":{},"skewedColNames":[],"skewedColValues":[]},"bucketCols":[],"parametersSize":0,"setParameters":true,"colsIterator":[{"comment":"test","name":"id","type":"string","setName":true,"setType":true,"setComment":true},{"comment":null,"name":"name","type":"string","setName":true,"setType":true,"setComment":false}],"setCols":true,"setLocation":true,"setInputFormat":true,"setOutputFormat":true,"setCompressed":true,"setNumBuckets":true,"setSerdeInfo":true,"bucketColsSize":0,"bucketColsIterator":[],"setBucketCols":true,"sortColsSize":0,"sortColsIterator":[],"setSortCols":true,"setSkewedInfo":true,"storedAsSubDirectories":false,"setStoredAsSubDirectories":true},"temporary":false,"partitionKeys":[],"setTableName":true,"setOwner":true,"retention":0,"setRetention":true,"partitionKeysSize":0,"partitionKeysIterator":[],"setPartitionKeys":true,"viewOriginalText":null,"setViewOriginalText":false,"viewExpandedText":null,"setViewExpandedText":false,"setTableType":true,"setPrivileges":false,"setTemporary":false,"setOwnerType":true,"setDbName":true,"createTime":1584893296,"setCreateTime":true,"setLastAccessTime":true,"setSd":true,"parametersSize":8,"setParameters":true}

2020-03-28 14:19:12,935 INFO com.bigdata.hive.MyHiveHook: [HiveServer2-Background-Pool: Thread-79]: [CustomHook][Thread: HiveServer2-Background-Pool: Thread-79] | Hook metadata output value: {"lastAccessTime":0,"ownerType":"USER","parameters":{"last_modified_time":"1585376257","totalSize":"0","numRows":"-1","rawDataSize":"-1","COLUMN_STATS_ACCURATE":"false","numFiles":"0","transient_lastDdlTime":"1585376257","last_modified_by":"hive"},"owner":"hive","tableName":"test1","dbName":"default","tableType":"MANAGED_TABLE","privileges":null,"sd":{"location":"hdfs://master:8020/user/hive/warehouse/test","parameters":{},"inputFormat":"org.apache.hadoop.mapred.TextInputFormat","outputFormat":"org.apache.hadoop.hive.ql.io.HiveIgnoreKeyTextOutputFormat","compressed":false,"numBuckets":-1,"sortCols":[],"cols":[{"comment":"test","name":"id","type":"string","setName":true,"setType":true,"setComment":true},{"comment":null,"name":"name","type":"string","setName":true,"setType":true,"setComment":false}],"colsSize":2,"serdeInfo":{"setSerializationLib":true,"name":null,"parameters":{"serialization.format":"1"},"serializationLib":"org.apache.hadoop.hive.serde2.lazy.LazySimpleSerDe","parametersSize":1,"setParameters":true,"setName":false},"skewedInfo":{"skewedColNamesSize":0,"skewedColNamesIterator":[],"setSkewedColNames":true,"skewedColValuesSize":0,"skewedColValuesIterator":[],"setSkewedColValues":true,"skewedColValueLocationMapsSize":0,"setSkewedColValueLocationMaps":true,"skewedColValueLocationMaps":{},"skewedColNames":[],"skewedColValues":[]},"bucketCols":[],"parametersSize":0,"setParameters":true,"colsIterator":[{"comment":"test","name":"id","type":"string","setName":true,"setType":true,"setComment":true},{"comment":null,"name":"name","type":"string","setName":true,"setType":true,"setComment":false}],"setCols":true,"setLocation":true,"setInputFormat":true,"setOutputFormat":true,"setCompressed":true,"setNumBuckets":true,"setSerdeInfo":true,"bucketColsSize":0,"bucketColsIterator":[],"setBucketCols":true,"sortColsSize":0,"sortColsIterator":[],"setSortCols":true,"setSkewedInfo":true,"storedAsSubDirectories":false,"setStoredAsSubDirectories":true},"temporary":false,"partitionKeys":[],"setTableName":true,"setOwner":true,"retention":0,"setRetention":true,"partitionKeysSize":0,"partitionKeysIterator":[],"setPartitionKeys":true,"viewOriginalText":null,"setViewOriginalText":false,"viewExpandedText":null,"setViewExpandedText":false,"setTableType":true,"setPrivileges":false,"setTemporary":false,"setOwnerType":true,"setDbName":true,"createTime":1584893296,"setCreateTime":true,"setLastAccessTime":true,"setSd":true,"parametersSize":8,"setParameters":true}

2.HiveSemanticAnalyzerHook接口

参考:https://www.iteye.com/blog/crazymatrix-2092830

实现HiveSemanticAnalyzerHook接口可以在hive执行语法分析前后插入hook函数,即preAnalyze和postAnalyze

有时,用户写的sql会带有上下文,比如select * from test,这时test这张表会属于用户当前session中的某个库,比如use default,可以使用

private final SessionState ss = SessionState.get();

来获得当前用户session中的库

package com.bigdata.hive;

import java.io.Serializable;

import java.util.ArrayList;

import java.util.List;

import org.apache.commons.lang.StringUtils;

import org.apache.hadoop.hive.metastore.api.FieldSchema;

import org.apache.hadoop.hive.ql.exec.Task;

import org.apache.hadoop.hive.ql.metadata.Hive;

import org.apache.hadoop.hive.ql.metadata.HiveException;

import org.apache.hadoop.hive.ql.metadata.Table;

import org.apache.hadoop.hive.ql.parse.*;

import org.apache.hadoop.hive.ql.session.SessionState;

import org.apache.hadoop.hive.ql.session.SessionState.LogHelper;

import org.slf4j.Logger;

import org.slf4j.LoggerFactory;

public class MyHiveHook2 implements HiveSemanticAnalyzerHook {

private final static String NO_PARTITION_WARNING = "WARNING: HQL is not efficient, Please specify partition condition! HQL:%s ;USERNAME:%s";

private final SessionState ss = SessionState.get();

private final LogHelper console = SessionState.getConsole();

private Hive hive = null;

private String username;

private String currentDatabase = "default";

private String hql;

private String whereHql;

private String tableAlias;

private String tableName;

private String tableDatabaseName;

private Boolean needCheckPartition = false;

private static Logger logger = LoggerFactory.getLogger(MyHiveHook2.class);

@Override

public ASTNode preAnalyze(HiveSemanticAnalyzerHookContext context, ASTNode ast) throws SemanticException {

try {

logger.info(context.getCommand()); // 当前query

logger.info(ss.getUserName()); // 当前user

hql = StringUtils.replaceChars(context.getCommand(), '

', ' ');

logger.info("hql: " + hql);

if (hql.contains("where")) {

whereHql = hql.substring(hql.indexOf("where"));

}

username = context.getUserName();

logger.info(ast.getToken().getText()); // TOK_QUERY

logger.info(String.valueOf(ast.getToken().getType())); // token code

logger.info("" + (ast.getToken().getType() == HiveParser.TOK_QUERY));

if (ast.getToken().getType() == HiveParser.TOK_QUERY) {

try {

hive = context.getHive();

currentDatabase = hive.getDatabaseCurrent().getName();

logger.info("current database: " + currentDatabase); // session db

} catch (HiveException e) {

throw new SemanticException(e);

}

extractFromClause((ASTNode) ast.getChild(0));

String dbname = StringUtils.isEmpty(tableDatabaseName) ? currentDatabase : tableDatabaseName;

String tbname = tableName;

String[] parts = tableName.split(".");

if (parts.length == 2) {

dbname = parts[0];

tbname = parts[1];

}

logger.info("this is hive database name: " + dbname); // current db

logger.info("this is hive table name: " + tbname); // current table

Table t = hive.getTable(dbname, tbname);

if (t.isPartitioned()) {

if (StringUtils.isBlank(whereHql)) {

console.printError(String.format(NO_PARTITION_WARNING, hql, username));

} else {

List<FieldSchema> partitionKeys = t.getPartitionKeys();

List<String> partitionNames = new ArrayList<String>();

for (int i = 0; i < partitionKeys.size(); i++) {

partitionNames.add(partitionKeys.get(i).getName().toLowerCase());

}

if (!containsPartCond(partitionNames, whereHql, tableAlias)) {

console.printError(String.format(NO_PARTITION_WARNING, hql, username));

}

}

}

}

} catch (Exception ex) {

logger.info("error: ", ex);

}

return ast;

}

private boolean containsPartCond(List<String> partitionKeys, String sql, String alias) {

for (String pk : partitionKeys) {

if (sql.contains(pk)) {

return true;

}

if (!StringUtils.isEmpty(alias) && sql.contains(alias + "." + pk)) {

return true;

}

}

return false;

}

private void extractFromClause(ASTNode ast) {

if (HiveParser.TOK_FROM == ast.getToken().getType()) {

ASTNode refNode = (ASTNode) ast.getChild(0);

if (refNode.getToken().getType() == HiveParser.TOK_TABREF && ast.getChildCount() == 1) {

ASTNode tabNameNode = (ASTNode) (refNode.getChild(0));

int refNodeChildCount = refNode.getChildCount();

if (tabNameNode.getToken().getType() == HiveParser.TOK_TABNAME) {

if (tabNameNode.getChildCount() == 2) {

tableDatabaseName = tabNameNode.getChild(0).getText().toLowerCase();

tableName = BaseSemanticAnalyzer.getUnescapedName((ASTNode) tabNameNode.getChild(1))

.toLowerCase();

} else if (tabNameNode.getChildCount() == 1) {

tableName = BaseSemanticAnalyzer.getUnescapedName((ASTNode) tabNameNode.getChild(0))

.toLowerCase();

} else {

return;

}

if (refNodeChildCount == 2) {

tableAlias = BaseSemanticAnalyzer.unescapeIdentifier(refNode.getChild(1).getText())

.toLowerCase();

}

needCheckPartition = true;

}

}

}

}

@Override

public void postAnalyze(HiveSemanticAnalyzerHookContext context,

List<Task<? extends Serializable>> rootTasks) throws SemanticException {

logger.info(context.getCommand());

}

}

输出是

2020-04-01 00:41:41,485 INFO org.apache.hadoop.hive.ql.Driver: [HiveServer2-Handler-Pool: Thread-39]: Compiling command(queryId=hive_20200401004141_bf4c578a-6872-4947-8396-7c11b0530539): select * from test 2020-04-01 00:41:41,486 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Handler-Pool: Thread-39]: <PERFLOG method=parse from=org.apache.hadoop.hive.ql.Driver> 2020-04-01 00:41:41,501 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Handler-Pool: Thread-39]: </PERFLOG method=parse start=1585672901486 end=1585672901501 duration=15 from=org.apache.hadoop.hive.ql.Driver> 2020-04-01 00:41:41,502 INFO org.apache.hadoop.hive.ql.log.PerfLogger: [HiveServer2-Handler-Pool: Thread-39]: <PERFLOG method=semanticAnalyze from=org.apache.hadoop.hive.ql.Driver> 2020-04-01 00:41:41,550 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: select * from test 2020-04-01 00:41:41,551 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: hive 2020-04-01 00:41:41,551 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: hql: select * from test 2020-04-01 00:41:41,551 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: TOK_QUERY 2020-04-01 00:41:41,551 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: 789 2020-04-01 00:41:41,551 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: true 2020-04-01 00:41:41,561 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: current database: default 2020-04-01 00:41:41,561 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: this is hive database name: default 2020-04-01 00:41:41,561 INFO com.bigdata.hive.MyHiveHook2: [HiveServer2-Handler-Pool: Thread-39]: this is hive table name: test 2020-04-01 00:41:41,621 INFO org.apache.hadoop.hive.ql.parse.SemanticAnalyzer: [HiveServer2-Handler-Pool: Thread-39]: Starting Semantic Analysis 2020-04-01 00:41:41,623 INFO org.apache.hadoop.hive.ql.parse.SemanticAnalyzer: [HiveServer2-Handler-Pool: Thread-39]: Completed phase 1 of Semantic Analysis 2020-04-01 00:41:41,623 INFO org.apache.hadoop.hive.ql.parse.SemanticAnalyzer: [HiveServer2-Handler-Pool: Thread-39]: Get metadata for source tables 2020-04-01 00:41:41,648 INFO org.apache.hadoop.hive.ql.parse.SemanticAnalyzer: [HiveServer2-Handler-Pool: Thread-39]: Get metadata for subqueries 2020-04-01 00:41:41,656 INFO org.apache.hadoop.hive.ql.parse.SemanticAnalyzer: [HiveServer2-Handler-Pool: Thread-39]: Get metadata for destination tables 2020-04-01 00:41:41,708 INFO hive.ql.Context: [HiveServer2-Handler-Pool: Thread-39]: New scratch dir is hdfs://master:8020/tmp/hive/hive/3fc884ec-0f81-49e7-ae87-45ce85ca4139/hive_2020-04-01_00-41-41_485_2813780198360550808-1 2020-04-01 00:41:41,710 INFO org.apache.hadoop.hive.ql.parse.SemanticAnalyzer: [HiveServer2-Handler-Pool: Thread-39]: Completed getting MetaData in Semantic Analysis