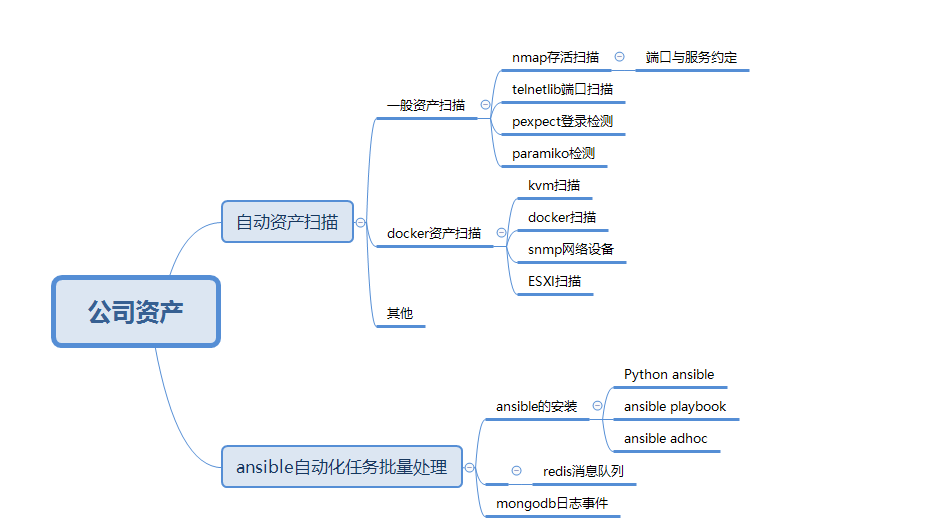

Overall project design: asset discovery and automated task processing.

Module breakdown:

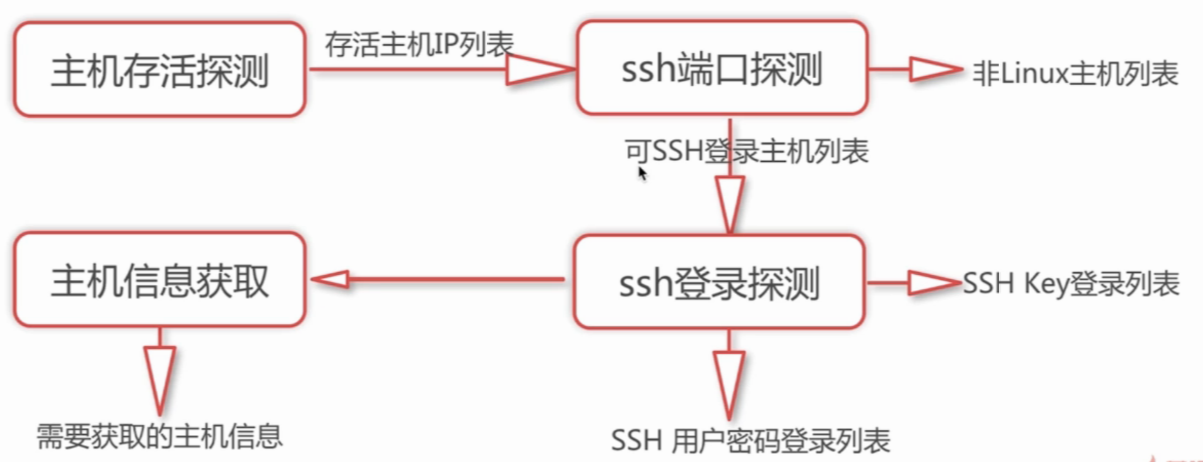

Host liveness detection is implemented with nmap, using the ICMP and TCP protocols.

    pip install python-nmap

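General nmap usage:

Host discovery with ICMP echo requests (`-PE`), followed by nmap's default TCP port scan of every host that answers:

    sudo nmap -n -PE 192.168.8.0/24

Ping scan only (`-sP`, named `-sn` in current nmap releases), with no port scan. When run as root against the local Ethernet segment, nmap sends only ARP requests and no ICMP packets, so this behaves quite differently from ping:

    sudo nmap -n -sP 192.168.8.0/24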
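To make the TCP side of liveness detection concrete, here is a minimal sketch that uses only the standard library instead of nmap. The function names `expand_targets`, `tcp_alive`, and `sweep` are hypothetical and are not part of the project. The sketch treats a host as alive only when the connection succeeds; a refused connection also proves the host is up, which this simplified version ignores.

```python
import ipaddress
import socket


def expand_targets(cidr):
    """Return the usable host addresses of a network such as 192.168.8.0/24."""
    return [str(ip) for ip in ipaddress.ip_network(cidr).hosts()]


def tcp_alive(host, port, timeout=1.0):
    """Return True if a TCP connection to (host, port) can be established."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Refused, unreachable, or timed out: counted as "not alive" here.
        return False


def sweep(hosts, port, timeout=1.0):
    """Return the subset of hosts that accept a TCP connection on port."""
    return [host for host in hosts if tcp_alive(host, port, timeout)]
```

A sequential sweep like this is slow on large networks, which is one reason to let nmap do the discovery in practice.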

    # Requires: import re, telnetlib, nmap, and a module-level `logger`.
    # telnetlib was removed from the standard library in Python 3.13.
    def nmap_sship(self, ports, nmap_net):
        '''
        Check every live host in a network for an SSH service on the given ports.
        :param ports: comma-separated candidate ports, e.g. "22,2222"
        :param nmap_net: scan target accepted by nmap, e.g. "192.168.8.0/24"
        :return: (ssh_info, host_list, unkown_list)
        '''
        port_list = ports.split(',')
        nm = nmap.PortScanner()  # create the port scanner object
        ssh_info = {}
        unkown_list = []
        # hosts is the scan target; arguments are the nmap command-line options
        nm.scan(hosts=nmap_net, arguments='-n -sP -PE')
        tcp_all_ip = nm.all_hosts()
        host_list = []
        for ip in tcp_all_ip:  # iterate over the scanned hosts
            if nm[ip]['status']['state'] == "up":
                host_list.append(ip)
                for port in port_list:
                    try:
                        print("Scan ip %s ..... Port %s" % (ip, port))
                        logger.info("Scan ip %s ..... Port %s" % (ip, port))
                        tm = telnetlib.Telnet(host=ip, port=port, timeout=4)
                        # read the first line the service sends (its banner)
                        tm_res = tm.read_until("\n".encode(), timeout=4)
                        if tm_res:
                            if re.search("ssh".encode(), tm_res.lower()):
                                print(ip, port)
                                if ip not in self.black_list:
                                    ssh_info[ip] = port
                                    logger.info("IP:%s Port:%s Server:%s" % (ip, port, tm_res.lower()))
                                    print("[?]IP:%s Port:%s Server:%s" % (ip, port, tm_res))
                            else:
                                if ip not in unkown_list and ip not in ssh_info.keys():
                                    unkown_list.append(ip)
                                logger.info("Telnet not ssh server:%s,%s,%s" % (ip, port, tm_res))
                                print("Open Res.....", ip, port, tm_res)
                        else:
                            if ip not in unkown_list and ip not in ssh_info.keys():
                                unkown_list.append(ip)
                            logger.info("Telnet no data:%s,%s" % (ip, port))
                            print("Open....", ip, port)
                    except EOFError as e:
                        if ip not in unkown_list and ip not in ssh_info.keys():
                            unkown_list.append(ip)
                        logger.exception("Telnet port EOFError:%s,%s,%s" % (ip, port, e))
                        print("Open....", ip, port)
                    except Exception as e:
                        import traceback
                        traceback.print_exc()
                        if ip not in unkown_list and ip not in ssh_info.keys():
                            unkown_list.append(ip)
                        logger.exception("Telnet port Exception:%s,%s,%s" % (ip, port, e))
                        print("error...", ip, port, e)
        return ssh_info, host_list, list(set(unkown_list))
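The banner check at the heart of the function above can be sketched with a plain socket, which avoids the dependency on `telnetlib`. This is an illustrative alternative, not the project's code; `read_banner` and `is_ssh_banner` are hypothetical names, and the case-insensitive search for "ssh" simply mirrors the test the original applies to the Telnet response.

```python
import socket


def read_banner(host, port, timeout=4.0):
    """Return the first line a service sends after connect, or b'' on timeout."""
    with socket.create_connection((host, int(port)), timeout=timeout) as sock:
        try:
            with sock.makefile("rb") as stream:
                # SSH servers announce themselves with a line such as
                # b"SSH-2.0-OpenSSH_8.9\r\n" right after the connection opens.
                return stream.readline(256)
        except socket.timeout:
            return b""


def is_ssh_banner(banner):
    """Loose check matching the original: any banner mentioning 'ssh'."""
    return b"ssh" in banner.lower()
```

A stricter variant could require the banner to start with `SSH-`, at the cost of missing servers that print other lines first.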

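Hosts are classified by default conventions: for example, a host that runs an SSH service is assumed to be Linux, and the SSH port is typically 22. (For security reasons, many companies change the default SSH port, so several candidate ports have to be checked.)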
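Because the SSH port is often moved away from 22, the scanner takes a comma-separated list of candidate ports. A small sketch of validating such a list before scanning is shown here; `parse_ports` is a hypothetical helper and the fallback to port 22 is an assumption, not something the project is known to do.

```python
def parse_ports(spec, default=(22,)):
    """Turn a string such as "22, 2222,22022" into a list of unique ports."""
    ports = []
    for item in spec.split(","):
        item = item.strip()
        if not item:
            continue
        port = int(item)  # raises ValueError for non-numeric input
        if not 1 <= port <= 65535:
            raise ValueError("port out of range: %d" % port)
        if port not in ports:
            ports.append(port)
    # Fall back to the conventional SSH port when nothing was given.
    return ports or list(default)
```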

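pexpect is a Python module that spawns a child program and responds to its output by matching it against regular expressions, which makes it possible to automate interaction with that program.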
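The expect-and-respond idea can be illustrated without pexpect, using only `subprocess` and `re`. This is a deliberately simplified sketch: the child program, the password `secret`, and the names `expect` and `login` are all made up for the example. Real pexpect runs the child on a pseudo-terminal, which is what lets it drive programs like ssh that read passwords from the terminal; plain pipes, as used here, cannot do that.

```python
import re
import subprocess
import sys

# Hypothetical child: asks for a password, then prints a shell-like prompt.
CHILD = (
    "import sys\n"
    "sys.stdout.write('password: ')\n"
    "sys.stdout.flush()\n"
    "answer = sys.stdin.readline().strip()\n"
    "print('welcome #' if answer == 'secret' else 'denied')\n"
)


def expect(stream, pattern, limit=4096):
    """Read byte by byte until the regex matches the output seen so far."""
    regex = re.compile(pattern)
    seen = b""
    while len(seen) < limit:
        byte = stream.read(1)
        if not byte:
            raise EOFError("child exited before %r appeared" % pattern)
        seen += byte
        if regex.search(seen):
            return seen
    raise RuntimeError("pattern %r not found in %d bytes" % (pattern, limit))


def login(password):
    """Answer the child's password prompt and report whether it let us in."""
    with subprocess.Popen([sys.executable, "-c", CHILD],
                          stdin=subprocess.PIPE,
                          stdout=subprocess.PIPE) as child:
        expect(child.stdout, rb"password: ")
        child.stdin.write(password.encode() + b"\n")
        child.stdin.flush()
        reply = expect(child.stdout, rb"#|denied")
        return reply.endswith(b"#")
```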

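paramiko is a Python implementation of the SSH protocol. As an SSH client module it supports remote command execution, file transfer, and related functions over a secure connection.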

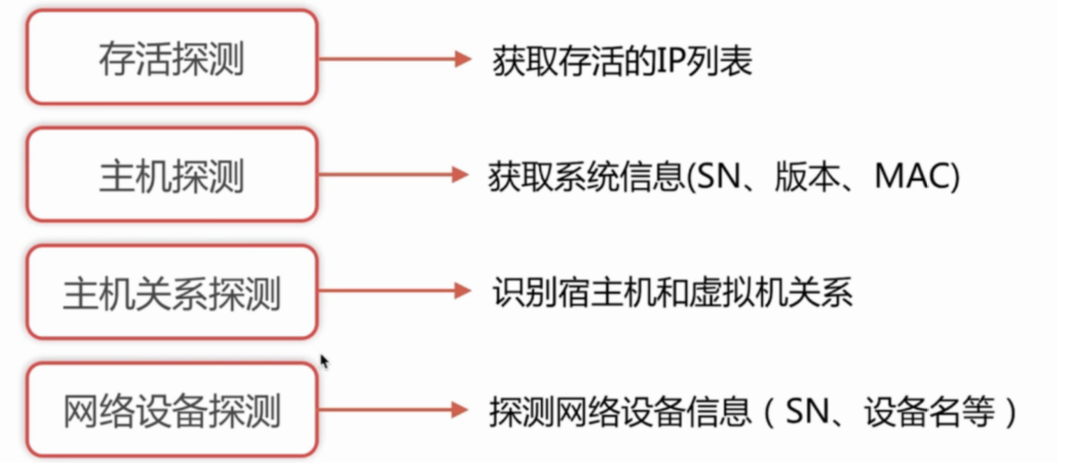

Host detection flow

    import pexpect


    def login_ssh_passwd(port="", user="", host="", passwd=""):
        '''Log in over SSH with a username and password, driven by pexpect.'''
        if port and user and host and passwd:
            ssh = pexpect.spawn('ssh -p %s %s@%s' % (port, user, host))
            i = ssh.expect(['password:', 'continue connecting (yes/no)?'], timeout=5)
            if i == 0:
                ssh.sendline(passwd)
            elif i == 1:
                # first connection: accept the host key, then send the password
                # (sendline() appends the line ending itself)
                ssh.sendline('yes')
                ssh.expect('password: ')
                ssh.sendline(passwd)
            index = ssh.expect(["#", pexpect.EOF, pexpect.TIMEOUT])
            if index == 0:
                print("logging in as root!")
                ssh.interact()
            elif index == 1:
                print("logging process exit!")
            elif index == 2:
                print("logging timeout exit")
        else:
            print("Parameter error!")


    def login_ssh_key(keyfile="", user="", host="", port=""):
        '''Log in over SSH with a private key file, driven by pexpect.'''
        if port and user and host and keyfile:
            ssh = pexpect.spawn('ssh -i %s -p %s %s@%s' % (keyfile, port, user, host))
            i = ssh.expect([pexpect.TIMEOUT, 'continue connecting (yes/no)?'], timeout=2)
            if i == 1:
                ssh.sendline('yes')
            index = ssh.expect(["#", pexpect.EOF, pexpect.TIMEOUT])
            if index == 0:
                print("logging in as root!")
                ssh.interact()
            elif index == 1:
                print("logging process exit!")
            elif index == 2:
                print("logging timeout exit")
        else:
            print("Parameter error!")


    def main():
        '''Entry point: try either of the two login methods.'''
        # login_ssh_passwd(port='22',user='root',host='192.168.1.101',passwd='imooccs')
        login_ssh_key(keyfile="/tmp/id_rsa", port='22', user='root', host='192.168.1.101')


    if __name__ == "__main__":
        main()

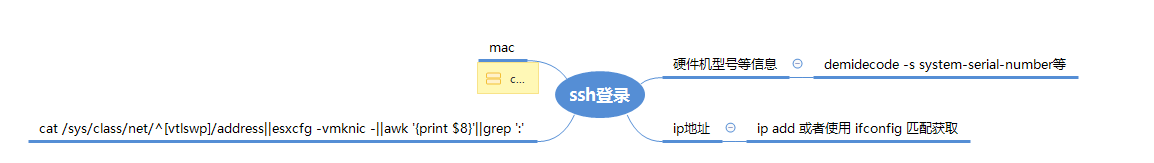

When SSH credentials are matched against other hosts in order to log in to them, the following operations are carried out:
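One plausible reading of the credential-matching step is a loop that tries each known credential against each target until one works. The sketch below is an assumption about that flow rather than the project's implementation; `match_logins` is a hypothetical name, and `try_login` is an injected callable so that any SSH client can be plugged in.

```python
def match_logins(targets, credentials, try_login):
    """Try each (user, password) pair on each target.

    Returns (can_login, not_login): a dict mapping each reachable target to
    the first credential that worked, and a list of targets where none did.
    """
    can_login = {}
    not_login = []
    for target in targets:
        for user, password in credentials:
            if try_login(target, user, password):
                can_login[target] = (user, password)
                break
        else:
            # the inner loop finished without a successful login
            not_login.append(target)
    return can_login, not_login
```

Stopping at the first success keeps the number of failed attempts low, which matters on hosts that lock accounts after repeated failures.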

Handling asset records for KVM and Docker

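Recording a Docker asset mainly requires two things: the unique identifier of the host machine and the port mapping relationship, as follows: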

The key information can be extracted from the output of docker ps with awk in the shell, as follows:

    class NmapDocker(NmapDev):
        def __init__(self, d_cmds, pass_lst, ip_key_dic):
            NmapDev.__init__(self)
            # Prints "<host port> <container id> <image>" for each running container
            self.docker_cmd_list = ["docker ps |awk -F '->' '{print $1}'|grep -v 'CONTAINER'|awk 'BEGIN{FS~/\\s+/;}{print $NF\" \"$1\" \"$2;}'|sed s/0.0.0.0://"]
            self.docker_cmd = d_cmds
            self.password_list = pass_lst
            self.p_docker_relate = {}
            self.ip_key_dic = ip_key_dic

        def do_nmap(self, host_list):
            ip_items = HostLoginifo.objects.filter(ip__in=host_list)
            ns = prpcrypt()
            for ip_item in ip_items:
                docker_dct = {}
                doobj = J_ssh_do([ip_item.ip, ip_item.ssh_port, ip_item.ssh_user])
                # run the docker command on the host with the stored login method
                if ip_item.ssh_type == 0:
                    ssh_passwd = ns.decrypt(ip_item.ssh_passwd)
                    login_info = (ip_item.ip, int(ip_item.ssh_port), ip_item.ssh_user, ssh_passwd)
                    res = doobj.pass_do(login_info, self.docker_cmd_list)
                if ip_item.ssh_type == 1:
                    login_info = (ip_item.ip, int(ip_item.ssh_port), ip_item.ssh_user, ip_item.ssh_rsa)
                    res = doobj.rsa_do(login_info, self.docker_cmd_list)
                if ip_item.ssh_type == 2:
                    login_info = (ip_item.ip, int(ip_item.ssh_port), ip_item.ssh_user, ip_item.ssh_rsa)
                    res = doobj.dsa_do(login_info, self.docker_cmd_list)
                if ip_item.ssh_type == 3:
                    login_info = (ip_item.ip, int(ip_item.ssh_port), ip_item.ssh_user, ip_item.ssh_rsa, ip_item.rsa_pass)
                    res = doobj.imoocc_rsa_do(login_info, self.docker_cmd_list)

                # one output line per container: "<port> <id> <image>"
                port_list = res[self.docker_cmd_list[0]].split("\n")
                for d_item in port_list:
                    if d_item:
                        print("...............d_item", d_item)
                        d_port, d_id, d_dn = re.split(r'\s+', d_item)[:3]
                        d_cid = d_id + d_dn
                        docker_dct[d_port] = sn_trans(d_cid)

                sship_list = [(ip_item.ip, port) for port in docker_dct.keys() if port]
                nr = prpcrypt()
                canlogin_list, notlogin_list = self.try_docker_login(sship_list, self.password_list, self.docker_cmd)
                for ip, port in sship_list:
                    if port in canlogin_list:
                        id = ConnectionInfo.objects.all().count() + 1
                        item_val = canlogin_list[port]
                        ssh_passwd = nr.encrypt(item_val[1])
                        ConnectionInfo.objects.create(id=id, ssh_username=item_val[2], ssh_userpasswd=ssh_passwd,
                                                      ssh_hostip=ip, ssh_host_port=port,
                                                      ssh_status=1, ssh_type=4, sn_key=docker_dct[port])
                        py_id = PhysicalServerInfo.objects.get(conn_phy__sn_key=self.ip_key_dic[ip]).id
                        VirtualServerInfo.objects.create(server_ip=ip, server_type="Docker Contianer",
                                                         system_ver=item_val[3], sys_hostname=item_val[4],
                                                         mac=item_val[5], sn=docker_dct[port],
                                                         vir_phy_id=py_id, conn_vir_id=id)
                    if port in notlogin_list:
                        # allocate a fresh id here too, so it is always defined
                        id = ConnectionInfo.objects.all().count() + 1
                        item_val = notlogin_list[ip]  # indexed by ip, as in the original
                        ConnectionInfo.objects.create(id=id, ssh_username=item_val[2], ssh_hostip=ip,
                                                      ssh_host_port=item_val[0],
                                                      ssh_status=0, ssh_type=5, sn_key=docker_dct[port])
                        py_id = PhysicalServerInfo.objects.get(conn_phy__sn_key=self.ip_key_dic[ip]).id
                        VirtualServerInfo.objects.create(server_ip=ip, server_type="Docker Contianer",
                                                         vir_phy_id=py_id, conn_vir_id=id)
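As an alternative to the awk and sed pipeline, the port mappings can be parsed in Python from the output of `docker ps --format '{{.ID}}|{{.Image}}|{{.Ports}}'`. The sketch below assumes that output format and uses made-up sample data in its usage; `parse_docker_ps` is a hypothetical helper, and port ranges such as `8000-8010->8000-8010/tcp` are intentionally ignored.

```python
import re

# Matches published ports such as "0.0.0.0:8080->80/tcp" or ":::8080->80/tcp".
PORT_RE = re.compile(r":(\d+)->(\d+)/(tcp|udp)")


def parse_docker_ps(text):
    """Return (host_port, container_id, image, container_port, proto) tuples."""
    mappings = []
    for line in text.splitlines():
        if not line.strip():
            continue
        container_id, image, ports = line.split("|", 2)
        for host_port, container_port, proto in PORT_RE.findall(ports):
            entry = (int(host_port), container_id, image, int(container_port), proto)
            # the same mapping is usually listed once for IPv4 and once for IPv6
            if entry not in mappings:
                mappings.append(entry)
    return mappings
```

Containers that expose a port without publishing it on the host (for example `6379/tcp`) produce no entry, which matches the goal of recording only ports reachable from outside.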

How ESXi virtual machines are associated with their host:

    class NmapVMX():
        def __init__(self, vmware_p_list, ip_key_dic):
            self.vmware_p_list = vmware_p_list
            self.ip_key_dic = ip_key_dic

        def dosnmp(self):
            nr = prpcrypt()
            for vm_item in self.vmware_p_list:
                vm_sn = self.ip_key_dic[vm_item]
                p_item = ConnectionInfo.objects.filter(sn_key=vm_sn)
                try:
                    vmip = p_item[0].ssh_hostip.split(',')[0]
                    ssh_passwd = nr.decrypt(p_item[0].ssh_userpasswd)
                    # query the ESXi host for the virtual machines it runs
                    ne = SnmpESXI(host=vmip, user=p_item[0].ssh_username, passwd=ssh_passwd)
                    res = ne.dosnmp()
                    py_id = PhysicalServerInfo.objects.get(conn_phy__sn_key=vm_sn).id
                    for v_item in res:
                        v_uuid = v_item['uuid']
                        v_vmname = v_item['name']
                        # a virtual server belongs to this host if its serial contains the UUID
                        v_obj = VirtualServerInfo.objects.filter(sn__contains=v_uuid)
                        if v_obj:
                            v_obj.update(vir_phy=py_id, server_type=u"VMX:%s" % (v_vmname))
                        else:
                            logger.error("Error:no vmx matched! %s %s" % (v_uuid, v_vmname))
                            print("Error:no vmx matched! %s %s" % (v_uuid, v_vmname))
                except Exception as e:
                    print("Vmware host sdk connect failed!%s" % (p_item[0].ssh_hostip))
                    logger.error("Error:Vmware host sdk connect failed!%s" % (p_item[0].ssh_hostip))
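Stripped of the Django models, the association step is a substring match between each UUID reported by the ESXi host and the serial numbers of the known virtual servers. The following sketch shows that logic on plain dictionaries; `link_vms` and the sample field values are hypothetical, while the keys `uuid`, `name`, `sn`, `vir_phy`, and `server_type` follow the names used in the listing above.

```python
def link_vms(esxi_vms, virtual_servers, physical_id):
    """Attach matching virtual servers to a physical host.

    esxi_vms: list of {"uuid": ..., "name": ...} reported by the ESXi host.
    virtual_servers: list of dicts with at least an "sn" key (updated in place).
    Returns the ESXi entries for which no virtual server matched.
    """
    unmatched = []
    for vm in esxi_vms:
        hits = [srv for srv in virtual_servers if vm["uuid"] in srv["sn"]]
        if not hits:
            unmatched.append(vm)
            continue
        for srv in hits:
            srv["vir_phy"] = physical_id
            srv["server_type"] = "VMX:%s" % vm["name"]
    return unmatched
```

The match only works if both sides format the UUID the same way, so in practice some normalization of the serial number is needed before comparing.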

For KVM, the association can be determined from the uniqueness of MAC addresses. (The specific steps were covered earlier.)

    class NmapKVM():
        def __init__(self, ip_key_dic):
            # MAC addresses of the host-side vnet interfaces, one per guest NIC
            self.p_kvm_cmds = ['cat /sys/class/net/vnet*/address']
            self.ip_key_dic = ip_key_dic

        def do_nmap(self, p_kvm_list):
            for item in p_kvm_list:
                ip_items = HostLoginifo.objects.filter(ip=item)
                nr = prpcrypt()
                for ip_item in ip_items:
                    doobj = J_ssh_do([ip_item.ip, ip_item.ssh_port, ip_item.ssh_user])
                    # run the command on the host with the stored login method
                    if ip_item.ssh_type == 0:
                        ssh_passwd = nr.decrypt(ip_item.ssh_passwd)
                        login_info = (ip_item.ip, int(ip_item.ssh_port), ip_item.ssh_user, ssh_passwd)
                        res = doobj.pass_do(login_info, self.p_kvm_cmds)
                    if ip_item.ssh_type == 1:
                        login_info = (ip_item.ip, int(ip_item.ssh_port), ip_item.ssh_user, ip_item.ssh_rsa)
                        res = doobj.rsa_do(login_info, self.p_kvm_cmds)
                    if ip_item.ssh_type == 2:
                        login_info = (ip_item.ip, int(ip_item.ssh_port), ip_item.ssh_user, ip_item.ssh_rsa)
                        res = doobj.dsa_do(login_info, self.p_kvm_cmds)
                    if ip_item.ssh_type == 3:
                        login_info = (ip_item.ip, int(ip_item.ssh_port), ip_item.ssh_user, ip_item.ssh_rsa, ip_item.rsa_pass)
                        res = doobj.imoocc_rsa_do(login_info, self.p_kvm_cmds)

                    kvm_mac_list = res[self.p_kvm_cmds[0]].split('\n')
                    for kvm_item in kvm_mac_list:
                        if kvm_item:
                            # compare on the last 10 characters of the normalized MAC
                            kvm_mac_str = mac_trans(kvm_item)[-10:]
                            py_id = PhysicalServerInfo.objects.get(conn_phy__sn_key=self.ip_key_dic[item]).id
                            v_obj = VirtualServerInfo.objects.filter(mac__contains=kvm_mac_str)
                            if v_obj:
                                v_obj.update(vir_phy=py_id, server_type="KVM")
                            else:
                                # guest was recorded as a physical server: relabel it
                                p_ob = PhysicalServerInfo.objects.filter(mac__contains=kvm_mac_str)
                                if p_ob and len(p_ob) < 2:
                                    p_ob.update(vir_phy=py_id, server_type="KVM")
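The MAC comparison above keeps only the last ten characters of the normalized address. A likely reason, stated here as an assumption, is that the host-side vnet interface commonly carries the guest's MAC with a different first octet (for example `fe:54:00:...` on the host versus `52:54:00:...` in the guest). The sketch below shows that comparison in isolation; `normalize_mac` and `same_nic` are hypothetical helpers, and the real `mac_trans` may normalize differently.

```python
import re


def normalize_mac(mac):
    """Reduce a MAC address to 12 lowercase hex digits without separators."""
    digits = re.sub(r"[^0-9a-fA-F]", "", mac).lower()
    if len(digits) != 12:
        raise ValueError("not a MAC address: %r" % mac)
    return digits


def same_nic(vnet_mac, guest_mac, tail=10):
    """Compare two MACs while ignoring the leading (12 - tail) hex digits."""
    return normalize_mac(vnet_mac)[-tail:] == normalize_mac(guest_mac)[-tail:]
```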

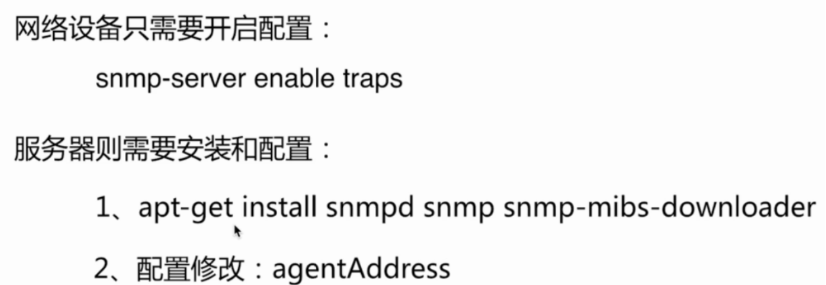

Detection of server-side network devices:

Batch processing with Ansible:

    # To run standalone inside the Django project, uncomment:
    # import os,sys
    # PROJECT_ROOT = '/Users/renren/Work/imoocc/code/iops'
    # sys.path.insert(0,PROJECT_ROOT)
    # os.environ["DJANGO_SETTINGS_MODULE"] = 'admin.settings.settings'
    # import django
    # django.setup()
    import json, sys, os
    import traceback
    from ansible import constants
    from collections import namedtuple
    from ansible.parsing.dataloader import DataLoader
    from ansible.playbook.play import Play
    from ansible.executor.task_queue_manager import TaskQueueManager
    from ansible.executor.playbook_executor import PlaybookExecutor
    from ansible.plugins.callback import CallbackBase
    from ansible.inventory.manager import InventoryManager
    from ansible.vars.manager import VariableManager
    from ansible.inventory.host import Host, Group
    from admin.settings.settings import BASE_DIR


    class MyInventory():
        """
        this is IOPS ansible inventory object.
        """
        def __init__(self, resource, loader, variable_manager):
            self.resource = resource
            self.loader = DataLoader()
            self.inventory = InventoryManager(loader=self.loader, sources=['%s/conf/hostslist' % BASE_DIR])
            self.variable_manager = VariableManager(loader=self.loader, inventory=self.inventory)
            self.dynamic_inventory()

        def add_dynamic_group(self, hosts, groupname, groupvars=None):
            """
            add hosts to a group
            """
            self.inventory.add_group(groupname)
            my_group = Group(name=groupname)

            # if group variables exist, add them to the group
            if groupvars:
                for key, value in groupvars.items():
                    my_group.set_variable(key, value)

            # add hosts to group
            for host in hosts:
                # set connection variables
                hostname = host.get("hostname")
                hostip = host.get('ip', hostname)
                hostport = host.get("port")
                username = host.get("username")
                password = host.get("password")
                ssh_key = host.get("ssh_key")
                my_host = Host(name=hostname, port=hostport)
                self.variable_manager.set_host_variable(host=my_host, varname='ansible_ssh_host', value=hostip)
                self.variable_manager.set_host_variable(host=my_host, varname='ansible_ssh_pass', value=password)
                self.variable_manager.set_host_variable(host=my_host, varname='ansible_ssh_port', value=hostport)
                self.variable_manager.set_host_variable(host=my_host, varname='ansible_ssh_user', value=username)
                self.variable_manager.set_host_variable(host=my_host, varname='ansible_ssh_private_key_file', value=ssh_key)

                # set other variables
                for key, value in host.items():
                    if key not in ["hostname", "port", "username", "password"]:
                        self.variable_manager.set_host_variable(host=my_host, varname=key, value=value)

                # add to group
                self.inventory.add_host(host=hostname, group=groupname, port=hostport)
                ghost = Host(name="192.168.8.119")  # leftover debug object, unused

        def dynamic_inventory(self):
            """
            add hosts to inventory.
            """
            if isinstance(self.resource, list):
                self.add_dynamic_group(self.resource, 'default_group')
            elif isinstance(self.resource, dict):
                for groupname, hosts_and_vars in self.resource.items():
                    self.add_dynamic_group(hosts_and_vars.get("hosts"), groupname, hosts_and_vars.get("vars"))


    class ModelResultsCollector(CallbackBase):
        """Collects per-host results of an ad-hoc module run."""

        def __init__(self, *args, **kwargs):
            super(ModelResultsCollector, self).__init__(*args, **kwargs)
            self.host_ok = {}
            self.host_unreachable = {}
            self.host_failed = {}

        def v2_runner_on_unreachable(self, result):
            self.host_unreachable[result._host.get_name()] = result

        def v2_runner_on_ok(self, result, *args, **kwargs):
            self.host_ok[result._host.get_name()] = result

        def v2_runner_on_failed(self, result, *args, **kwargs):
            self.host_failed[result._host.get_name()] = result


    class PlayBookResultsCollector(CallbackBase):
        """Collects per-host results and summary statistics of a playbook run."""
        CALLBACK_VERSION = 2.0

        def __init__(self, *args, **kwargs):
            super(PlayBookResultsCollector, self).__init__(*args, **kwargs)
            self.task_ok = {}
            self.task_skipped = {}
            self.task_failed = {}
            self.task_status = {}
            self.task_unreachable = {}

        def v2_runner_on_ok(self, result, *args, **kwargs):
            self.task_ok[result._host.get_name()] = result

        def v2_runner_on_failed(self, result, *args, **kwargs):
            self.task_failed[result._host.get_name()] = result

        def v2_runner_on_unreachable(self, result):
            self.task_unreachable[result._host.get_name()] = result

        def v2_runner_on_skipped(self, result):
            self.task_skipped[result._host.get_name()] = result

        def v2_playbook_on_stats(self, stats):
            hosts = sorted(stats.processed.keys())
            for h in hosts:
                t = stats.summarize(h)
                self.task_status[h] = {
                    "ok": t['ok'],
                    "changed": t['changed'],
                    "unreachable": t['unreachable'],
                    "skipped": t['skipped'],
                    "failed": t['failures']
                }


    class ANSRunner(object):
        """
        This is a General object for parallel execute modules.
        """
        def __init__(self, resource, redisKey=None, logId=None, *args, **kwargs):
            self.resource = resource
            self.inventory = None
            self.variable_manager = None
            self.loader = None
            self.options = None
            self.passwords = None
            self.callback = None
            self.__initializeData()
            self.results_raw = {}
            self.redisKey = redisKey
            self.logId = logId

        def __initializeData(self):
            """ Initialize ansible. """
            Options = namedtuple('Options', ['connection', 'module_path', 'forks', 'timeout', 'remote_user',
                                             'ask_pass', 'private_key_file', 'ssh_common_args', 'ssh_extra_args',
                                             'sftp_extra_args', 'scp_extra_args', 'become', 'become_method',
                                             'become_user', 'ask_value_pass', 'verbosity',
                                             'check', 'listhosts', 'listtasks', 'listtags', 'syntax', 'diff'])

            self.loader = DataLoader()
            self.options = Options(connection='smart', module_path=None, forks=100, timeout=10,
                                   remote_user='root', ask_pass=False, private_key_file=None, ssh_common_args=None,
                                   ssh_extra_args=None, sftp_extra_args=None, scp_extra_args=None, become=None,
                                   become_method=None, become_user='root', ask_value_pass=False, verbosity=None,
                                   check=False, listhosts=False, listtasks=False, listtags=False, syntax=False,
                                   diff=True)

            self.passwords = dict(sshpass=None, becomepass=None)
            myinvent = MyInventory(self.resource, self.loader, self.variable_manager)
            self.inventory = myinvent.inventory
            self.variable_manager = myinvent.variable_manager

        def run_model(self, host_list, module_name, module_args):
            """
            run module from ansible ad-hoc.
            module_name: ansible module_name
            module_args: ansible module args
            """
            play_source = dict(
                name="Ansible Play",
                hosts=host_list,
                gather_facts='no',
                tasks=[dict(action=dict(module=module_name, args=module_args))]
            )

            play = Play().load(play_source, variable_manager=self.variable_manager, loader=self.loader)
            tqm = None
            self.callback = ModelResultsCollector()
            try:
                tqm = TaskQueueManager(
                    inventory=self.inventory,
                    variable_manager=self.variable_manager,
                    loader=self.loader,
                    options=self.options,
                    passwords=self.passwords,
                    stdout_callback="minimal",
                )
                tqm._stdout_callback = self.callback
                # skip the host key confirmation prompt on first connection
                constants.HOST_KEY_CHECKING = False
                tqm.run(play)
            except Exception as err:
                traceback.print_exc()
            finally:
                if tqm is not None:
                    tqm.cleanup()

        def run_playbook(self, playbook_path, extra_vars=None):
            """
            run ansible playbook
            """
            try:
                self.callback = PlayBookResultsCollector()
                if extra_vars:
                    self.variable_manager.extra_vars = extra_vars
                executor = PlaybookExecutor(
                    playbooks=[playbook_path], inventory=self.inventory, variable_manager=self.variable_manager,
                    loader=self.loader, options=self.options, passwords=self.passwords,
                )
                executor._tqm._stdout_callback = self.callback
                # skip the host key confirmation prompt on first connection
                constants.HOST_KEY_CHECKING = False
                executor.run()
            except Exception as err:
                return False

        def get_model_result(self):
            self.results_raw = {'success': {}, 'failed': {}, 'unreachable': {}}
            for host, result in self.callback.host_ok.items():
                hostvisiable = host.replace('.', '_')
                self.results_raw['success'][hostvisiable] = result._result

            for host, result in self.callback.host_failed.items():
                hostvisiable = host.replace('.', '_')
                self.results_raw['failed'][hostvisiable] = result._result

            for host, result in self.callback.host_unreachable.items():
                hostvisiable = host.replace('.', '_')
                self.results_raw['unreachable'][hostvisiable] = result._result

            return self.results_raw

        def get_playbook_result(self):
            self.results_raw = {'skipped': {}, 'failed': {}, 'ok': {}, "status": {}, 'unreachable': {}, "changed": {}}

            for host, result in self.callback.task_ok.items():
                self.results_raw['ok'][host] = result

            for host, result in self.callback.task_failed.items():
                self.results_raw['failed'][host] = result

            for host, result in self.callback.task_status.items():
                self.results_raw['status'][host] = result

            for host, result in self.callback.task_skipped.items():
                self.results_raw['skipped'][host] = result

            for host, result in self.callback.task_unreachable.items():
                self.results_raw['unreachable'][host] = result
            return self.results_raw


    if __name__ == '__main__':
        # A plain list of hosts is also accepted:
        # resource = [
        #     {"hostname": "192.168.8.119"},
        # ]
        resource = {
            "dynamic_host": {
                "hosts": [
                    {'username': u'root', 'password': '123456', 'ip': '192.168.1.108', 'hostname': 'nginx01', 'port': '22'},
                    {"hostname": "778da6afsdwf", "ip": "192.168.1.109", "port": "22", "username": "root", "password": "123456"},
                ],
                "vars": {
                    "var1": "ansible",
                    "var2": "saltstack"
                }
            }
        }

        rbt = ANSRunner(resource, redisKey='1')
        # Ansible ad-hoc
        rbt.run_model(host_list=['778da6a023b7', '248dbc0fblj40e', 'testoae'], module_name='shell', module_args="ls /tmp")
        data = rbt.get_model_result()
        # Ansible playbook
        # rbt.run_playbook(playbook_path='/etc/ansible/playbook/OPUSE.yml')
        # print(rbt.get_playbook_result())
        # rbt.run_model(host_list=[],module_name='yum',module_args="name=htop state=present")
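The `resource` argument accepted by the runner comes in two shapes: a plain list of hosts, which goes into `default_group`, or a dictionary of named groups with `hosts` and `vars`. The sketch below shows that normalization and the mapping from host fields to connection variables without importing Ansible. `normalize_resource` and `connection_vars` are hypothetical helper names; the variable names are the ones set in the listing above.

```python
def normalize_resource(resource, default_group="default_group"):
    """Return {group: {"hosts": [...], "vars": {...}}} for either input shape."""
    if isinstance(resource, list):
        return {default_group: {"hosts": list(resource), "vars": {}}}
    if isinstance(resource, dict):
        return {
            name: {
                "hosts": list(body.get("hosts") or []),
                "vars": dict(body.get("vars") or {}),
            }
            for name, body in resource.items()
        }
    raise TypeError("resource must be a list or a dict")


def connection_vars(host):
    """Map one host entry to the Ansible connection variables it implies."""
    hostname = host.get("hostname")
    return {
        # the address falls back to the hostname when no ip is given
        "ansible_ssh_host": host.get("ip", hostname),
        "ansible_ssh_port": host.get("port"),
        "ansible_ssh_user": host.get("username"),
        "ansible_ssh_pass": host.get("password"),
        "ansible_ssh_private_key_file": host.get("ssh_key"),
    }
```

Normalizing first means the code that builds the inventory only has to handle one shape.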