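Hands-on Practice

1. Analyze the target

Target: the titles and summaries of the Baidu Baike entry pages related to the Python entry

Entry page: https://baike.baidu.com/item/Python/407313

URL format:

- Entry page URL: `/item/Perl` (a relative path)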
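Because entry links are relative paths like `/item/Perl`, the crawler has to resolve each one against the page it was found on. A minimal sketch with the standard library's `urllib.parse.urljoin`, using the entry page above as the base:

```python
from urllib.parse import urljoin

# The entry page from the analysis above
page_url = "https://baike.baidu.com/item/Python/407313"

# A relative entry link as it appears in an <a href="..."> attribute
relative = "/item/Perl"

# A path that starts with "/" replaces the whole path of the base URL
full_url = urljoin(page_url, relative)
print(full_url)  # https://baike.baidu.com/item/Perl
```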

Data format:

- Title:

`<dd class="lemmaWgt-lemmaTitle-title"><h1>...</h1></dd>`

- Summary:

`<div class="lemma-summary">...</div>`

Page encoding: UTF-8
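To sanity-check the two selectors before writing the real parser, here is a minimal sketch that pulls the title and summary out of a hand-written sample shaped like the format above. It swaps in the standard library's `html.parser.HTMLParser` instead of BeautifulSoup (which the tutorial's parser uses) so it runs without extra packages; the class name `LemmaParser` and the sample HTML are illustrative assumptions, not Baidu's actual markup.

```python
from html.parser import HTMLParser


class LemmaParser(HTMLParser):
    """Collects the text of <dd class="lemmaWgt-lemmaTitle-title"><h1> and of
    <div class="lemma-summary">, following the data format described above."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.summary = ""
        self._in_title_dd = False
        self._in_h1 = False
        self._summary_depth = 0  # > 0 while inside the summary div (counts nested divs)

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if tag == "dd" and "lemmaWgt-lemmaTitle-title" in classes:
            self._in_title_dd = True
        elif tag == "h1" and self._in_title_dd:
            self._in_h1 = True
        elif tag == "div":
            if self._summary_depth:
                self._summary_depth += 1  # a div nested inside the summary
            elif "lemma-summary" in classes:
                self._summary_depth = 1

    def handle_endtag(self, tag):
        if tag == "h1":
            self._in_h1 = False
        elif tag == "dd":
            self._in_title_dd = False
        elif tag == "div" and self._summary_depth:
            self._summary_depth -= 1

    def handle_data(self, data):
        if self._in_h1:
            self.title += data
        elif self._summary_depth:
            self.summary += data


# Hypothetical sample page, downloaded as bytes and decoded as UTF-8
sample = ('<dd class="lemmaWgt-lemmaTitle-title"><h1>Python</h1></dd>'
          '<div class="lemma-summary"><div class="para">'
          'Python is a general-purpose programming language.</div></div>').encode("utf-8")

parser = LemmaParser()
parser.feed(sample.decode("utf-8"))
print(parser.title)    # Python
print(parser.summary)  # Python is a general-purpose programming language.
```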

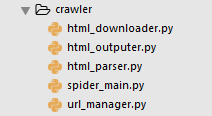

2. Scheduler

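Create five modules: `spider_main.py`, `url_manager.py`, `html_downloader.py`, `html_parser.py` and `html_outputer.py`.

Open `spider_main.py`, the crawler's main scheduler: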

    #!/usr/bin/env python3
    import url_manager
    import html_downloader
    import html_parser
    import html_outputer


    class SpiderMain(object):
        """Entry point that wires the five modules together."""

        def __init__(self):
            self.urls = url_manager.UrlManager()
            self.downloader = html_downloader.HtmlDownloader()
            self.parser = html_parser.HtmlParser()
            self.outputer = html_outputer.HtmlOutputer()

        def craw(self, root_url):
            count = 1
            # Seed the URL manager with the entry URL
            self.urls.add_new_url(root_url)
            # Keep going while there are URLs waiting to be crawled
            while self.urls.has_new_url():
                try:
                    # Take a URL that has not been crawled yet
                    new_url = self.urls.get_new_url()
                    print('craw %d : %s' % (count, new_url))
                    # Download the page
                    html_cont = self.downloader.download(new_url)
                    # Parse out the new links and the page's data
                    new_urls, new_data = self.parser.parse(new_url, html_cont)
                    # Queue the newly found links
                    self.urls.add_new_urls(new_urls)
                    # Collect the extracted data
                    self.outputer.collect_data(new_data)
                    # Stop after 100 pages
                    if count == 100:
                        break
                    count = count + 1
                except Exception as e:
                    # One bad page should not stop the whole crawl
                    print('craw %d failed: %s' % (count, e))
            # Write everything collected to output.html
            self.outputer.output_html()


    if __name__ == "__main__":
        root_url = "https://baike.baidu.com/item/Python/407313"
        obj_spider = SpiderMain()
        obj_spider.craw(root_url)
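The scheduler's control flow (seed, take a URL, download, parse, queue new links, collect, stop at a page limit, skip failures) can be tried without network access. A self-contained sketch, assuming a hypothetical in-memory dictionary `FAKE_SITE` in place of the downloader and parser, and a plain FIFO list in place of the URL manager's sets so the order is deterministic:

```python
# Hypothetical in-memory "site": each URL maps to (title, links on that page)
FAKE_SITE = {
    "/item/Python": ("Python", ["/item/Perl", "/item/Java"]),
    "/item/Perl": ("Perl", ["/item/Python", "/item/Go"]),  # /item/Go is missing
    "/item/Java": ("Java", ["/item/C"]),
    "/item/C": ("C", []),
}


def craw(root_url, max_pages):
    """Same loop shape as SpiderMain.craw, with download + parse replaced
    by a dictionary lookup."""
    new_urls, old_urls = [root_url], set()
    collected = []
    while new_urls and len(collected) < max_pages:
        url = new_urls.pop(0)  # take the oldest waiting URL
        old_urls.add(url)      # mark it as crawled
        try:
            title, links = FAKE_SITE[url]  # stands in for download + parse
        except KeyError:
            print("craw failed: %s" % url)  # skip the page, keep crawling
            continue
        collected.append({"url": url, "title": title})
        for link in links:
            if link not in old_urls and link not in new_urls:
                new_urls.append(link)
    return collected


pages = craw("/item/Python", max_pages=3)
print([p["title"] for p in pages])  # ['Python', 'Perl', 'Java']
```

As in the original, only successfully processed pages count toward the limit, so a failed page does not use up the budget.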

3. URL manager

    #!/usr/bin/env python3


    class UrlManager(object):
        """URL manager: tracks URLs waiting to be crawled and URLs already crawled."""

        def __init__(self):
            # Both sets are kept in memory
            self.new_urls = set()  # waiting to be crawled
            self.old_urls = set()  # already crawled

        def add_new_url(self, url):
            """Add a single URL to the waiting set."""
            if url is None:
                return
            # A URL that is in neither set has never been seen, so it is new
            if url not in self.new_urls and url not in self.old_urls:
                self.new_urls.add(url)

        def add_new_urls(self, urls):
            """Add a batch of URLs found by the parser."""
            if urls is None or len(urls) == 0:
                return
            for url in urls:
                self.add_new_url(url)

        def has_new_url(self):
            return len(self.new_urls) != 0

        def get_new_url(self):
            # Take one URL out of new_urls and record it in old_urls
            new_url = self.new_urls.pop()
            self.old_urls.add(new_url)
            return new_url
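`set.pop()` removes an arbitrary element, so the crawl order above is unpredictable. If breadth-first order is wanted (pages closest to the entry page first), one option is a `collections.deque` for the waiting URLs plus a single `seen` set. A sketch with the same method names; the class `FifoUrlManager` is hypothetical, not part of the tutorial:

```python
from collections import deque


class FifoUrlManager(object):
    """Variant of UrlManager that hands out URLs in discovery order
    (breadth-first) instead of the arbitrary order of set.pop()."""

    def __init__(self):
        self.queue = deque()  # URLs waiting to be crawled, oldest first
        self.seen = set()     # every URL ever queued, waiting or crawled

    def add_new_url(self, url):
        if url is None or url in self.seen:
            return
        self.seen.add(url)
        self.queue.append(url)

    def add_new_urls(self, urls):
        for url in urls or ():
            self.add_new_url(url)

    def has_new_url(self):
        return len(self.queue) != 0

    def get_new_url(self):
        return self.queue.popleft()


manager = FifoUrlManager()
manager.add_new_url("https://baike.baidu.com/item/Python/407313")
manager.add_new_urls(["https://baike.baidu.com/item/Perl",
                      "https://baike.baidu.com/item/Java"])
manager.add_new_url("https://baike.baidu.com/item/Perl")  # duplicate, ignored
order = []
while manager.has_new_url():
    order.append(manager.get_new_url())
print(order)
```

One `seen` set replaces the two membership checks of the original, because a URL that was ever queued should never be queued again, whether or not it has been crawled yet.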

4. HTML downloader

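    #!/usr/bin/env python3
    from urllib import request


    class HtmlDownloader(object):
        """Fetches a page and returns its raw bytes."""

        def download(self, url):
            if url is None:
                return None
            # Assumption: some sites reject urllib's default User-Agent,
            # so send a browser-like one; adjust as needed
            req = request.Request(url, headers={'User-Agent': 'Mozilla/5.0'})
            with request.urlopen(req, timeout=10) as response:
                if response.getcode() != 200:
                    return None
                return response.read()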
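`urlopen` expects an ASCII URL. If a link ever carries raw Chinese characters (for example a hand-typed `/item/计算机`), the request typically fails with a `UnicodeEncodeError` before anything is sent. A small helper, hypothetical and not part of the original downloader, could percent-encode such URLs as UTF-8 with `urllib.parse.quote` before they are passed to `download`:

```python
from urllib.parse import quote, unquote


def to_ascii_url(url):
    """Percent-encode non-ASCII characters (as UTF-8) in a URL.

    ':/?&=#' are kept so the URL structure survives, and '%' is kept so
    URLs that are already encoded are not encoded a second time."""
    return quote(url, safe=":/?&=%#")


raw = "https://baike.baidu.com/item/计算机"
encoded = to_ascii_url(raw)
print(encoded)  # https://baike.baidu.com/item/%E8%AE%A1%E7%AE%97%E6%9C%BA
```

Usage would be `HtmlDownloader().download(to_ascii_url(url))`; for URLs that are already ASCII the helper returns them unchanged.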

5. HTML parser

    #!/usr/bin/env python3
    import re
    import urllib.parse

    from bs4 import BeautifulSoup


    class HtmlParser(object):

        def parse(self, page_url, html_cont):
            if page_url is None or html_cont is None:
                # Nothing to parse: no new links and no data
                return set(), None
            # Build the parse tree from the raw UTF-8 bytes
            soup = BeautifulSoup(html_cont, 'html.parser', from_encoding='utf-8')
            new_urls = self._get_new_urls(page_url, soup)
            new_data = self._get_new_data(page_url, soup)
            return new_urls, new_data

        def _get_new_urls(self, page_url, soup):
            new_urls = set()
            # Entry links look like /item/<anything>
            links = soup.find_all('a', href=re.compile(r"/item/"))
            for link in links:
                new_url = link['href']
                # Resolve the relative link against the current page
                new_full_url = urllib.parse.urljoin(page_url, new_url)
                new_urls.add(new_full_url)
            return new_urls

        def _get_new_data(self, page_url, soup):
            # Three fields: url, title, summary
            res_data = {'url': page_url}
            # <dd class="lemmaWgt-lemmaTitle-title"><h1>Python</h1></dd>
            title_dd = soup.find('dd', class_="lemmaWgt-lemmaTitle-title")
            title_node = title_dd.find('h1') if title_dd is not None else None
            # <div class="lemma-summary">...</div>
            summary_node = soup.find('div', class_="lemma-summary")
            # Not an entry page (or a different layout): skip its data
            if title_node is None or summary_node is None:
                return None
            res_data['title'] = title_node.get_text()
            res_data['summary'] = summary_node.get_text()
            return res_data
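Links such as `/item/Perl` and `/item/Perl#1` point to the same page but are different strings, so the URL manager would treat them as two URLs. A sketch of the link-extraction step that also strips the `#fragment` with `urllib.parse.urldefrag`; it uses the standard library's `HTMLParser` in place of BeautifulSoup so it runs without extra packages, and the class name `ItemLinkParser` and the sample links are assumptions for illustration:

```python
import re
from html.parser import HTMLParser
from urllib.parse import urldefrag, urljoin

ITEM_LINK = re.compile(r"/item/")  # same pattern as the BeautifulSoup version


class ItemLinkParser(HTMLParser):
    """Collects absolute URLs of <a href> links whose href contains /item/."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.urls = set()

    def handle_starttag(self, tag, attrs):
        if tag != "a":
            return
        href = dict(attrs).get("href")
        if href and ITEM_LINK.search(href):
            # Resolve against the current page, then drop any #fragment
            full_url, _fragment = urldefrag(urljoin(self.page_url, href))
            self.urls.add(full_url)


links = ItemLinkParser("https://baike.baidu.com/item/Python/407313")
links.feed('<a href="/item/Perl">Perl</a>'
           '<a href="/item/Perl#1">Perl history</a>'
           '<a href="https://baike.baidu.com/item/Java">Java</a>'
           '<a href="/help">Help</a>')
print(sorted(links.urls))
```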

6. HTML outputer

    #!/usr/bin/env python3
    import html


    class HtmlOutputer(object):
        """Collects crawled records and writes them out as an HTML table."""

        def __init__(self):
            self.datas = []

        def collect_data(self, data):
            if data is None:
                return
            self.datas.append(data)

        def output_html(self):
            # Write the file as UTF-8 so Chinese text is saved correctly
            with open('output.html', 'w', encoding='utf-8') as fout:
                fout.write("<html>")
                fout.write("<head>")
                # Declare the charset so the browser decodes the page as UTF-8
                fout.write("<meta charset='utf-8'>")
                fout.write("</head>")
                fout.write("<body>")
                fout.write("<table>")
                for data in self.datas:
                    fout.write("<tr>")
                    # Escape the text so characters like < or & don't break the markup
                    fout.write("<td>%s</td>" % html.escape(data['url']))
                    fout.write("<td>%s</td>" % html.escape(data['title']))
                    fout.write("<td>%s</td>" % html.escape(data['summary']))
                    fout.write("</tr>")
                fout.write("</table>")
                fout.write("</body>")
                fout.write("</html>")
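Crawled text can contain characters such as `<` and `&` that have special meaning in HTML, so each cell needs escaping before it goes into a `<td>`. A sketch that builds the same table as a single string with the standard library's `html.escape`, which also makes the output easy to check without touching the file system; the function name `render_table` is hypothetical:

```python
import html


def render_table(datas):
    """Build the output.html markup as one string, with every cell escaped."""
    rows = []
    for data in datas:
        cells = "".join("<td>%s</td>" % html.escape(data[key])
                        for key in ("url", "title", "summary"))
        rows.append("<tr>%s</tr>" % cells)
    return ("<html><head><meta charset='utf-8'></head><body><table>"
            + "".join(rows)
            + "</table></body></html>")


page = render_table([{"url": "https://baike.baidu.com/item/C",
                      "title": "C",
                      "summary": "Uses <stdio.h> & friends"}])
print(page)
```

The result can then be written in one call, e.g. `open('output.html', 'w', encoding='utf-8').write(page)` inside a `with` block.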

7. Final crawl result