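This example explains how a decision tree predicts the type of contact lenses a patient needs. Even with a small dataset, a decision tree can teach us a lot, including how an eye doctor decides which type of lenses a patient should wear. Once we understand how decision trees work, we can even help people work out which lenses they need.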

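The contact lens dataset is a well-known dataset. It contains observations of many patients' eye conditions together with the type of contact lenses their doctor recommended: hard lenses, soft lenses, or no contact lenses (the patient should not wear them). The data come from the UCI Machine Learning Repository. Each record has four features, which the code below calls age, prescript, astigmatic and tearRate.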
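For reference, here is a minimal sketch of how rows in the lenses.txt layout parse into four feature values plus a class label, assuming the file is tab-delimited. The sample rows are written by hand in that layout and are an assumption, not the full file; `SAMPLE`, `FEATURE_NAMES` and `parse_rows` are hypothetical names. The last lines show why the delimiter matters: the label `no lenses` contains a space, so splitting a tab-delimited line on a single space cuts the row in the wrong place.

```python
from collections import Counter

# Hypothetical rows in the assumed lenses.txt layout: tab-separated,
# four feature values followed by the class label.
SAMPLE = (
    "young\tmyope\tno\treduced\tno lenses\n"
    "young\tmyope\tno\tnormal\tsoft\n"
    "young\tmyope\tyes\tnormal\thard\n"
    "presbyopic\thyper\tyes\tnormal\tno lenses\n"
)
FEATURE_NAMES = ["age", "prescript", "astigmatic", "tearRate"]


def parse_rows(text):
    """Split each non-empty line on tabs; the last field is the class label."""
    return [line.strip().split("\t") for line in text.splitlines() if line.strip()]


rows = parse_rows(SAMPLE)
for row in rows:
    # Pair each feature value with its name; the label stays separate.
    print(dict(zip(FEATURE_NAMES, row[:-1])), "->", row[-1])

# Class distribution of the sample rows
print(Counter(row[-1] for row in rows))

# Splitting on a single space instead breaks the row inside "no lenses".
first_line = SAMPLE.splitlines()[0]
print(len(first_line.split("\t")), len(first_line.split(" ")))
```

With tabs every row yields five fields; splitting the first line on a space yields only two.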

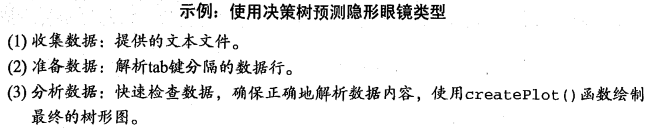

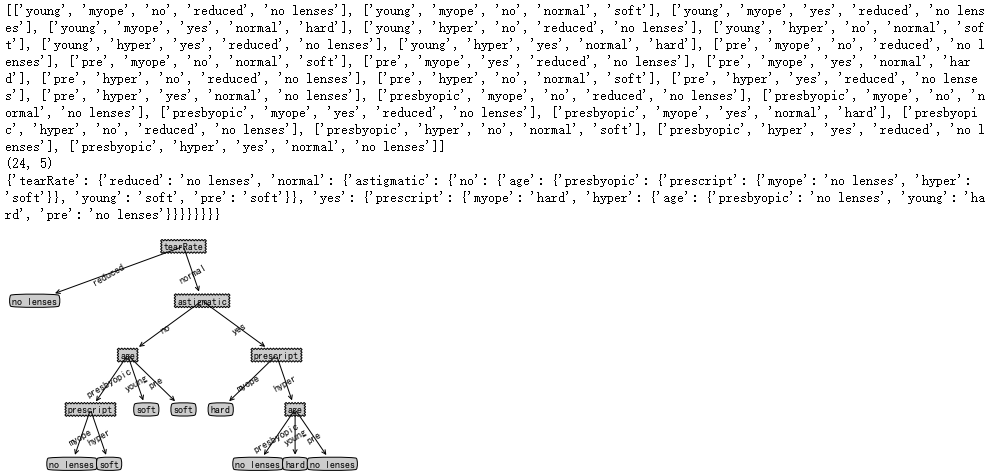

```python
import numpy as np
import operator as op
from math import log


def calcShannonEnt(dataSet):
    """Shannon entropy of the class labels (last column of each row)."""
    labelCounts = {}
    for featVec in dataSet:
        currentLabel = featVec[-1]
        if currentLabel not in labelCounts:
            labelCounts[currentLabel] = 0
        labelCounts[currentLabel] += 1
    shannonEnt = 0.0
    rowNum = len(dataSet)
    for key in labelCounts:
        prob = float(labelCounts[key]) / rowNum
        shannonEnt -= prob * log(prob, 2)
    return shannonEnt


def splitDataSet(dataSet, axis, value):
    """Rows whose feature `axis` equals `value`, with that column removed."""
    retDataSet = []
    for featVec in dataSet:
        if featVec[axis] == value:
            reducedFeatVec = featVec[:axis]
            reducedFeatVec.extend(featVec[axis + 1:])
            retDataSet.append(reducedFeatVec)
    return retDataSet


def chooseBestFeatureToSplit(dataSet):
    """Index of the feature with the largest information gain (-1 if none helps)."""
    numFeatures = np.shape(dataSet)[1] - 1
    baseEntropy = calcShannonEnt(dataSet)
    bestInfoGain = 0.0
    bestFeature = -1
    for i in range(numFeatures):
        featList = [example[i] for example in dataSet]
        uniqueVals = set(featList)
        newEntropy = 0.0
        for value in uniqueVals:
            subDataSet = splitDataSet(dataSet, i, value)
            prob = len(subDataSet) / float(len(dataSet))
            newEntropy += prob * calcShannonEnt(subDataSet)
        infoGain = baseEntropy - newEntropy
        if infoGain > bestInfoGain:
            bestInfoGain = infoGain
            bestFeature = i
    return bestFeature


def majorityCnt(classList):
    """Most frequent class label, used when the rows can no longer be split."""
    classCount = {}
    for vote in classList:
        if vote not in classCount:
            classCount[vote] = 0
        classCount[vote] += 1
    sortedClassCount = sorted(classCount.items(), key=op.itemgetter(1), reverse=True)
    return sortedClassCount[0][0]


def createTree(dataSet, labels):
    """Build the tree as nested dicts {feature: {value: subtree or label}}.
    Note: deletes the chosen feature from `labels` in place."""
    classList = [example[-1] for example in dataSet]
    if classList.count(classList[0]) == len(classList):
        return classList[0]            # all rows share one class: leaf
    if len(dataSet[0]) == 1:
        return majorityCnt(classList)  # no features left: majority vote
    bestFeat = chooseBestFeatureToSplit(dataSet)
    if bestFeat == -1:
        return majorityCnt(classList)  # no feature reduces entropy: majority vote
    bestFeatLabel = labels[bestFeat]
    myTree = {bestFeatLabel: {}}
    del labels[bestFeat]
    featValues = [example[bestFeat] for example in dataSet]
    uniqueVals = set(featValues)
    for value in uniqueVals:
        subLabels = labels[:]
        myTree[bestFeatLabel][value] = createTree(splitDataSet(dataSet, bestFeat, value), subLabels)
    return myTree


def classify(inputTree, featLabels, testVec):
    """Walk the tree with a feature vector ordered like featLabels; return its class."""
    firstStr = next(iter(inputTree))
    secondDict = inputTree[firstStr]
    featIndex = featLabels.index(firstStr)
    key = testVec[featIndex]
    valueOfFeat = secondDict[key]
    if isinstance(valueOfFeat, dict):
        classLabel = classify(valueOfFeat, featLabels, testVec)
    else:
        classLabel = valueOfFeat
    return classLabel


# lenses.txt is tab-delimited and the label "no lenses" contains a space,
# so split on "\t", not " ". A raw string keeps the Windows backslashes literal.
with open(r"F:\machinelearninginaction\Ch03\lenses.txt") as data:
    dataSet = [inst.strip().split("\t") for inst in data.readlines() if inst.strip()]
print(dataSet)
print(np.shape(dataSet))
labels = ["age", "prescript", "astigmatic", "tearRate"]
# Pass a copy: createTree deletes entries from the list it receives,
# and classify() needs the full label list afterwards.
tree = createTree(dataSet, labels[:])
print(tree)

import matplotlib.pyplot as plt

# Box and arrow styles for decision nodes and leaves
decisionNode = dict(boxstyle="sawtooth", fc="0.8")
leafNode = dict(boxstyle="round4", fc="0.8")
arrow_args = dict(arrowstyle="<-")


def getNumLeafs(myTree):
    """Count the leaves; sets the plot width."""
    numLeafs = 0
    firstStr = next(iter(myTree))
    secondDict = myTree[firstStr]
    for key in secondDict.keys():
        if isinstance(secondDict[key], dict):
            numLeafs += getNumLeafs(secondDict[key])
        else:
            numLeafs += 1
    return numLeafs


def getTreeDepth(myTree):
    """Number of decision levels; sets the plot height."""
    maxDepth = 0
    firstStr = next(iter(myTree))
    secondDict = myTree[firstStr]
    for key in secondDict.keys():
        if isinstance(secondDict[key], dict):
            thisDepth = 1 + getTreeDepth(secondDict[key])
        else:
            thisDepth = 1
        if thisDepth > maxDepth:
            maxDepth = thisDepth
    return maxDepth


def plotNode(nodeTxt, centerPt, parentPt, nodeType):
    """Draw a node box at centerPt with an arrow coming from parentPt."""
    createPlot.ax1.annotate(nodeTxt, xy=parentPt, xycoords='axes fraction',
                            xytext=centerPt, textcoords='axes fraction',
                            va="center", ha="center", bbox=nodeType, arrowprops=arrow_args)


def plotMidText(cntrPt, parentPt, txtString):
    """Write the branch value halfway along the edge."""
    xMid = (parentPt[0] - cntrPt[0]) / 2.0 + cntrPt[0]
    yMid = (parentPt[1] - cntrPt[1]) / 2.0 + cntrPt[1]
    createPlot.ax1.text(xMid, yMid, txtString, va="center", ha="center", rotation=30)


def plotTree(myTree, parentPt, nodeTxt):
    """Lay out and draw a subtree; layout state lives in plotTree.xOff / yOff."""
    numLeafs = getNumLeafs(myTree)
    firstStr = next(iter(myTree))
    cntrPt = (plotTree.xOff + (1.0 + float(numLeafs)) / 2.0 / plotTree.totalW, plotTree.yOff)
    plotMidText(cntrPt, parentPt, nodeTxt)
    plotNode(firstStr, cntrPt, parentPt, decisionNode)
    secondDict = myTree[firstStr]
    plotTree.yOff = plotTree.yOff - 1.0 / plotTree.totalD
    for key in secondDict.keys():
        if isinstance(secondDict[key], dict):
            plotTree(secondDict[key], cntrPt, str(key))
        else:
            plotTree.xOff = plotTree.xOff + 1.0 / plotTree.totalW
            plotNode(secondDict[key], (plotTree.xOff, plotTree.yOff), cntrPt, leafNode)
            plotMidText((plotTree.xOff, plotTree.yOff), cntrPt, str(key))
    plotTree.yOff = plotTree.yOff + 1.0 / plotTree.totalD


def createPlot(inTree):
    fig = plt.figure(1, facecolor='white')
    fig.clf()
    axprops = dict(xticks=[], yticks=[])
    createPlot.ax1 = plt.subplot(111, frameon=False, **axprops)
    # createPlot.ax1 = plt.subplot(111, frameon=False)  # ticks for demo purposes
    plotTree.totalW = float(getNumLeafs(inTree))
    plotTree.totalD = float(getTreeDepth(inTree))
    plotTree.xOff = -0.5 / plotTree.totalW
    plotTree.yOff = 1.0
    plotTree(inTree, (0.5, 1.0), '')
    plt.show()


createPlot(tree)
```
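calcShannonEnt and chooseBestFeatureToSplit choose each split by information gain: the entropy H(D) = -Σ p_k log2 p_k of the class labels, minus the size-weighted entropy of the subsets a feature produces. The compact sketch below recomputes this on a hypothetical four-row toy table (two features, not taken from lenses.txt) so the numbers can be checked by hand; `entropy` and `info_gain` are illustrative names, not part of the code above.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy in bits: -sum(p * log2(p)) over the class frequencies."""
    n = len(labels)
    return -sum((c / n) * log2(c / n) for c in Counter(labels).values())


def info_gain(rows, axis):
    """Label entropy minus the size-weighted entropy after splitting on column `axis`."""
    n = len(rows)
    remainder = 0.0
    for value in set(r[axis] for r in rows):
        subset_labels = [r[-1] for r in rows if r[axis] == value]
        remainder += len(subset_labels) / n * entropy(subset_labels)
    return entropy([r[-1] for r in rows]) - remainder


# Hypothetical toy rows: [astigmatic, tearRate, class label]
toy = [
    ["no", "reduced", "no lenses"],
    ["yes", "reduced", "no lenses"],
    ["no", "normal", "soft"],
    ["yes", "normal", "hard"],
]
names = ["astigmatic", "tearRate"]

for axis, name in enumerate(names):
    print(name, info_gain(toy, axis))

# Keep the feature with the largest gain (first one wins ties)
best = max(range(len(names)), key=lambda i: info_gain(toy, i))
print("best feature:", names[best])
```

Here the labels carry 1.5 bits of entropy. Splitting on tearRate leaves a pure `reduced` branch and gains 1.0 bit, while astigmatic gains only 0.5, so tearRate would be chosen first.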

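The plotted tree shows that a doctor needs to ask at most four questions to determine which type of contact lenses a patient should wear: the longest path from the root to a leaf passes through four decision nodes.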
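The "at most four questions" reading is simply the depth of the tree: each decision node on a root-to-leaf path is one question. The sketch below makes that concrete on a hand-written tree in the same {feature: {value: subtree or label}} format that createTree returns. Its branches are an assumption for illustration, not captured output of the code above, and `depth` and `classify_counting` are hypothetical helpers.

```python
# Hypothetical hand-written tree in the {feature: {value: subtree_or_label}} format.
TREE = {
    "tearRate": {
        "reduced": "no lenses",
        "normal": {
            "astigmatic": {
                "no": {"age": {
                    "young": "soft",
                    "pre": "soft",
                    "presbyopic": {"prescript": {"myope": "no lenses", "hyper": "soft"}},
                }},
                "yes": {"prescript": {
                    "myope": "hard",
                    "hyper": {"age": {"young": "hard", "pre": "no lenses", "presbyopic": "no lenses"}},
                }},
            }
        },
    }
}


def depth(tree):
    """Questions on the longest root-to-leaf path (a leaf label asks none)."""
    if not isinstance(tree, dict):
        return 0
    _, branches = next(iter(tree.items()))
    return 1 + max(depth(sub) for sub in branches.values())


def classify_counting(tree, sample):
    """Follow `sample` (feature -> value) down the tree; return (label, questions asked)."""
    asked = 0
    while isinstance(tree, dict):
        feature, branches = next(iter(tree.items()))
        tree = branches[sample[feature]]  # KeyError if this value never appeared
        asked += 1
    return tree, asked


print(depth(TREE))
print(classify_counting(TREE, {"tearRate": "reduced"}))
print(classify_counting(TREE, {"tearRate": "normal", "astigmatic": "no",
                               "age": "presbyopic", "prescript": "hyper"}))
```

In this toy tree a patient with a reduced tear rate is settled after one question, while a presbyopic, hyperopic, non-astigmatic patient with a normal tear rate needs all four.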

Summary: