import pandas as pd df = pd.read_json('/Users/chenyi/Documents/News_Category_Dataset.json', lines=True) df.head()

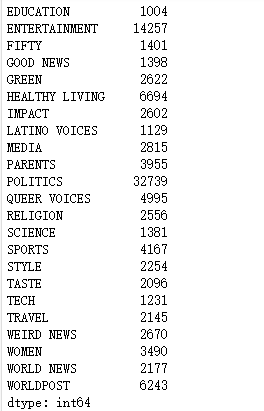

df.category = df.category.map(lambda x:"WORLDPOST" if x == "THE WORLDPOST" else x) categories = df.groupby('category') print("total categories: ", categories.ngroups) print(categories.size())

from keras.preprocessing import sequence from keras.preprocessing.text import Tokenizer, text_to_word_sequence, one_hot df['text'] = df.headline + " " + df.short_description # 将单词进行标号 tokenizer = Tokenizer() tokenizer.fit_on_texts(df.text) X = tokenizer.texts_to_sequences(df.text) df['words'] = X #记录每条数据的单词数 df['word_length'] = df.words.apply(lambda i: len(i)) #清除单词数不足5个的数据条目 df = df[df.word_length >= 5] df.word_length.describe()

maxlen = 50 X = list(sequence.pad_sequences(df.words, maxlen=maxlen)) # 将分类进行编号 categories = df.groupby('category').size().index.tolist() category_int = {} int_category = {} for i, k in enumerate(categories): category_int.update({k:i}) int_category.update({i:k}) df['c2id'] = df['category'].apply(lambda x: category_int[x])

import numpy as np import keras.utils as utils from sklearn.model_selection import train_test_split import numpy as np X = np.array(X) Y = utils.to_categorical(list(df.c2id)) # 将数据分成两部分,80%用于训练,20%用于测试 seed = 29 x_train, x_val, y_train, y_val = train_test_split(X, Y, test_size=0.2, random_state=seed)

word_index = tokenizer.word_index EMBEDDING_DIM = 100 embeddings_index = {} f = open('/Users/chenyi/Documents/glove.6B/glove.6B.100d.txt') for line in f: values = line.split() word = values[0] coefs = np.asarray(values[1:], dtype='float32') embeddings_index[word] = coefs f.close() print('Total %s word vectors.' %len(embeddings_index))

from keras.initializers import Constant embedding_matrix = np.zeros((len(word_index) + 1, EMBEDDING_DIM)) for word, i in word_index.items(): embedding_vector = embeddings_index.get(word) #根据单词挑选出对应向量 if embedding_vector is not None: embedding_matrix[i] = embedding_vector embedding_layer = Embedding(len(word_index)+1, EMBEDDING_DIM, embeddings_initializer=Constant(embedding_matrix), input_length = maxlen, trainable=False )

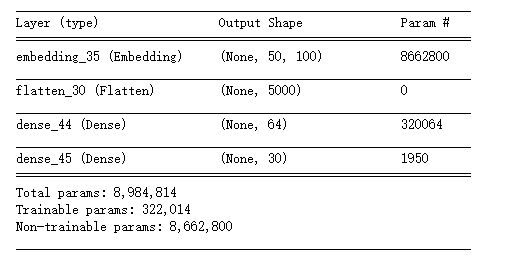

model = Sequential() model.add(embedding_layer) model.add(Flatten()) model.add(layers.Dense(64, activation='relu')) #当结果是输出多个分类的概率时,用softmax激活函数,它将为30个分类提供不同的可能性概率值 model.add(layers.Dense(len(int_category), activation='softmax')) #对于输出多个分类结果,最好的损失函数是categorical_crossentropy model.compile(optimizer='rmsprop', loss='categorical_crossentropy', metrics=['accuracy']) model.summary()

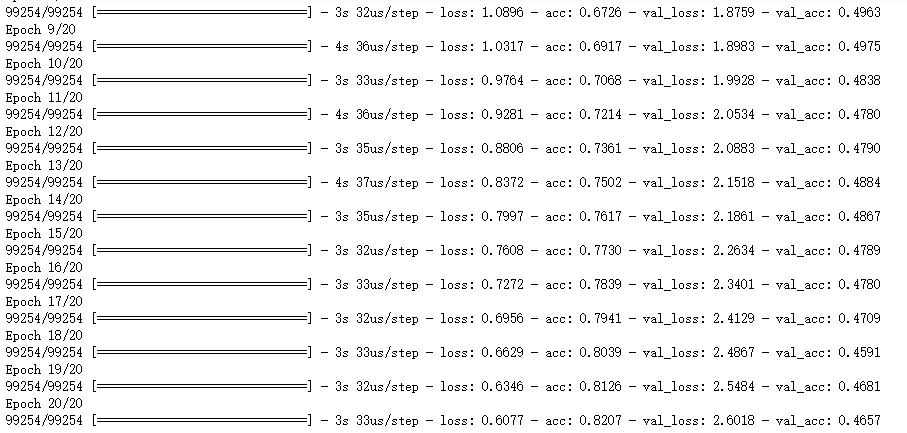

history = model.fit(x_train, y_train, epochs=20, validation_data=(x_val, y_val), batch_size=512)

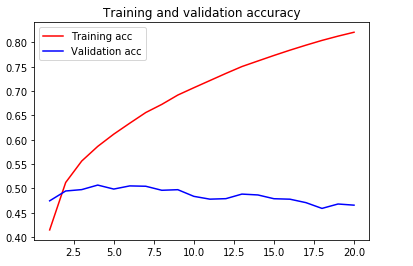

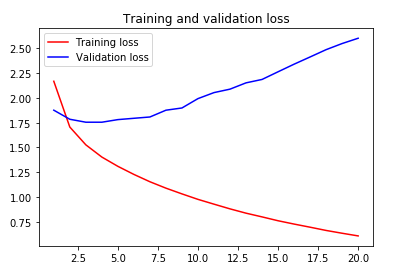

acc = history.history['acc'] val_acc = history.history['val_acc'] loss = history.history['loss'] val_loss = history.history['val_loss'] epochs = range(1, len(acc) + 1) plt.title('Training and validation accuracy') plt.plot(epochs, acc, 'red', label='Training acc') plt.plot(epochs, val_acc, 'blue', label='Validation acc') plt.legend() plt.figure() plt.title('Training and validation loss') plt.plot(epochs, loss, 'red', label='Training loss') plt.plot(epochs, val_loss, 'blue', label='Validation loss') plt.legend() plt.show()