Let's look at these two functions using AAC decoding as an example.

```c
static void decode(AVCodecContext *dec_ctx, AVPacket *pkt, AVFrame *frame,
                   FILE *outfile)
{
    int i, ch;
    int ret, data_size;

    // pkt comes from av_parser_parse2 and holds one ADTS frame
    ret = avcodec_send_packet(dec_ctx, pkt);
    if (ret < 0) {
        fprintf(stderr, "Error submitting the packet to the decoder\n");
        exit(1);
    }

    /* read all the output frames (in general there may be any number of them) */
    while (ret >= 0) {
        ret = avcodec_receive_frame(dec_ctx, frame);
        if (ret == AVERROR(EAGAIN) || ret == AVERROR_EOF)
            return;
        else if (ret < 0) {
            fprintf(stderr, "Error during decoding\n");
            exit(1);
        }

        // bytes per sample: 4 for AV_SAMPLE_FMT_FLTP
        data_size = av_get_bytes_per_sample(dec_ctx->sample_fmt);
        if (data_size < 0) {
            /* This should not occur, checking just for paranoia */
            fprintf(stderr, "Failed to calculate data size\n");
            exit(1);
        }

        // FFmpeg stores the decoded samples as AV_SAMPLE_FMT_FLTP (planar),
        // while PCM files on disk use the packed (interleaved) layout
        // loop over samples
        for (i = 0; i < frame->nb_samples; i++)
            // loop over channels
            for (ch = 0; ch < dec_ctx->channels; ch++)
                fwrite(frame->data[ch] + data_size*i, 1, data_size, outfile);
    }
}
```

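This breaks down into several steps:

- Get the raw data, usually produced by av_parser_parse2; here it is typically one frame in ADTS format.

- Send the data to the decoder with avcodec_send_packet, which returns 0 on success or a negative error code (not the number of bytes consumed).

- Fetch the decoded PCM data from the decoder with avcodec_receive_frame.

- Write the samples to the file in packed (interleaved) order.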
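The last step, turning planar data into packed data, can be isolated from FFmpeg entirely. The following is a minimal sketch in plain C, assuming each plane holds `nb_samples` samples of `sample_size` bytes; the function name `planar_to_packed` is made up for illustration and is not an FFmpeg API.

```c
#include <stddef.h>
#include <stdint.h>
#include <string.h>

/* Interleave planar audio into packed order (hypothetical helper).
 * planes[ch] points to nb_samples samples of sample_size bytes each.
 * out must have room for nb_samples * channels * sample_size bytes. */
static void planar_to_packed(const uint8_t *const *planes, int channels,
                             int nb_samples, int sample_size, uint8_t *out)
{
    for (int i = 0; i < nb_samples; i++) {
        for (int ch = 0; ch < channels; ch++) {
            /* copy sample i of channel ch, then advance the output cursor */
            memcpy(out, planes[ch] + (size_t)sample_size * i, sample_size);
            out += sample_size;
        }
    }
}
```

With two planes `L0 L1 L2` and `R0 R1 R2`, the output buffer becomes `L0 R0 L1 R1 L2 R2`, which is the same order the nested `fwrite` loop in `decode` produces.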

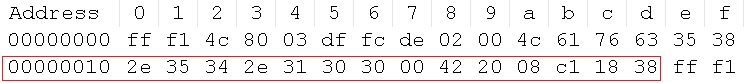

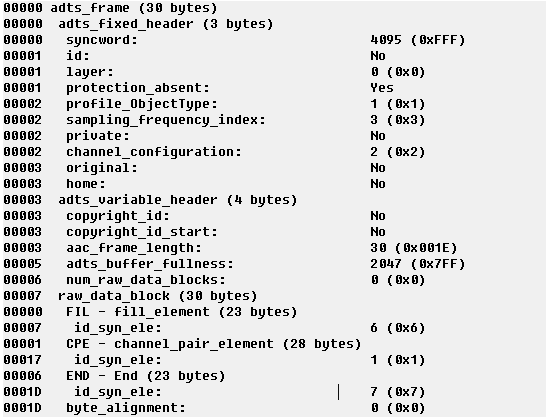

Here is the input data:

As you can see, it is simply the first frame of the raw AAC file.
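To read such a dump by hand, it helps to know how the fixed 7-byte ADTS header is laid out. The sketch below decodes the main fields in plain C; the struct `AdtsInfo` and the function `adts_parse_header` are illustrative names, not part of FFmpeg, and the optional CRC and the remaining header bits are ignored.

```c
#include <stddef.h>
#include <stdint.h>

/* Selected fields of the ADTS header (illustrative struct). */
typedef struct AdtsInfo {
    int object_type;    /* MPEG-4 audio object type, e.g. 2 = AAC LC */
    int sample_rate;    /* in Hz, 0 if the index is reserved */
    int channel_config; /* channel configuration, e.g. 2 = stereo */
    int frame_length;   /* header plus payload, in bytes */
    int has_crc;        /* 1 if a 2-byte CRC follows the header */
} AdtsInfo;

/* Returns 0 on success, -1 if buf is too short or is not an ADTS header. */
static int adts_parse_header(const uint8_t *buf, size_t len, AdtsInfo *info)
{
    static const int rates[16] = {
        96000, 88200, 64000, 48000, 44100, 32000, 24000, 22050,
        16000, 12000, 11025, 8000, 7350, 0, 0, 0
    };

    /* 12-bit syncword 0xFFF, and the 2-bit layer field must be 0 */
    if (len < 7 || buf[0] != 0xFF || (buf[1] & 0xF6) != 0xF0)
        return -1;

    info->has_crc        = !(buf[1] & 0x01);            /* protection_absent == 0 */
    info->object_type    = ((buf[2] >> 6) & 0x03) + 1;  /* profile + 1 */
    info->sample_rate    = rates[(buf[2] >> 2) & 0x0F];
    info->channel_config = ((buf[2] & 0x01) << 2) | (buf[3] >> 6);
    info->frame_length   = ((buf[3] & 0x03) << 11) | (buf[4] << 3) | (buf[5] >> 5);
    return 0;
}
```

For example, the header bytes `FF F1 50 80 2E 7F FC` describe an AAC LC frame at 44100 Hz, stereo, 371 bytes long, without CRC.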

Now let's walk through the code.

The avcodec_send_packet function

```c
int attribute_align_arg avcodec_send_packet(AVCodecContext *avctx, const AVPacket *avpkt)
{
    AVCodecInternal *avci = avctx->internal;
    int ret;

    // make sure the AVCodecContext is open and is a decoder
    if (!avcodec_is_open(avctx) || !av_codec_is_decoder(avctx->codec))
        return AVERROR(EINVAL);

    if (avctx->internal->draining)
        return AVERROR_EOF;

    if (avpkt && !avpkt->size && avpkt->data)
        return AVERROR(EINVAL);

    // reference the input data in buffer_pkt
    av_packet_unref(avci->buffer_pkt);
    if (avpkt && (avpkt->data || avpkt->side_data_elems)) {
        ret = av_packet_ref(avci->buffer_pkt, avpkt);
        if (ret < 0)
            return ret;
    }

    // move avci->buffer_pkt into avci->filter.bsfs[0]
    ret = av_bsf_send_packet(avci->filter.bsfs[0], avci->buffer_pkt);
    if (ret < 0) {
        av_packet_unref(avci->buffer_pkt);
        return ret;
    }

    // decode
    if (!avci->buffer_frame->buf[0]) {
        ret = decode_receive_frame_internal(avctx, avci->buffer_frame);
        if (ret < 0 && ret != AVERROR(EAGAIN) && ret != AVERROR_EOF)
            return ret;
    }

    return 0;
}
```

The avcodec_receive_frame function

```c
int attribute_align_arg avcodec_receive_frame(AVCodecContext *avctx, AVFrame *frame)
{
    AVCodecInternal *avci = avctx->internal;
    int ret, changed;

    av_frame_unref(frame);

    if (!avcodec_is_open(avctx) || !av_codec_is_decoder(avctx->codec))
        return AVERROR(EINVAL);

    if (avci->buffer_frame->buf[0]) {
        // a frame is already buffered: move it straight into frame;
        // this is the usual path when data is available
        av_frame_move_ref(frame, avci->buffer_frame);
    } else {
        // otherwise decode and receive a frame
        ret = decode_receive_frame_internal(avctx, frame);
        if (ret < 0)
            return ret;
    }

    if (avctx->codec_type == AVMEDIA_TYPE_VIDEO) {
        ret = apply_cropping(avctx, frame);
        if (ret < 0) {
            av_frame_unref(frame);
            return ret;
        }
    }

    avctx->frame_number++;

    if (avctx->flags & AV_CODEC_FLAG_DROPCHANGED) {

        if (avctx->frame_number == 1) {
            avci->initial_format = frame->format;
            switch(avctx->codec_type) {
            case AVMEDIA_TYPE_VIDEO:
                avci->initial_width  = frame->width;
                avci->initial_height = frame->height;
                break;
            case AVMEDIA_TYPE_AUDIO:
                avci->initial_sample_rate = frame->sample_rate ? frame->sample_rate :
                                                                 avctx->sample_rate;
                avci->initial_channels       = frame->channels;
                avci->initial_channel_layout = frame->channel_layout;
                break;
            }
        }

        if (avctx->frame_number > 1) {
            changed = avci->initial_format != frame->format;

            switch(avctx->codec_type) {
            case AVMEDIA_TYPE_VIDEO:
                changed |= avci->initial_width  != frame->width ||
                           avci->initial_height != frame->height;
                break;
            case AVMEDIA_TYPE_AUDIO:
                changed |= avci->initial_sample_rate    != frame->sample_rate ||
                           avci->initial_sample_rate    != avctx->sample_rate ||
                           avci->initial_channels       != frame->channels ||
                           avci->initial_channel_layout != frame->channel_layout;
                break;
            }

            if (changed) {
                avci->changed_frames_dropped++;
                av_log(avctx, AV_LOG_INFO, "dropped changed frame #%d pts %"PRId64
                                            " drop count: %d \n",
                                            avctx->frame_number, frame->pts,
                                            avci->changed_frames_dropped);
                av_frame_unref(frame);
                return AVERROR_INPUT_CHANGED;
            }
        }
    }
    return 0;
}
```
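The interplay between the two functions is easier to see in a toy model. The sketch below is not FFmpeg code: `ToyDecoder` and the `toy_*` functions are invented for illustration, "decoding" just doubles an integer, and `-EAGAIN` stands in for `AVERROR(EAGAIN)`. It assumes one pending-packet slot and one buffered-frame slot, which mirrors `buffer_pkt` and `buffer_frame` only loosely.

```c
#include <errno.h>

/* Toy stand-in for the decoder state (not an FFmpeg type). */
typedef struct ToyDecoder {
    int pending_pkt;    /* 1 if a packet is waiting to be decoded */
    int pkt_value;
    int buffered_frame; /* 1 if a decoded frame sits in the one-slot buffer */
    int frame_value;
} ToyDecoder;

/* Decode the pending packet, or report that more input is needed. */
static int toy_decode(ToyDecoder *d, int *frame)
{
    if (!d->pending_pkt)
        return -EAGAIN;
    *frame = d->pkt_value * 2;  /* pretend that decoding doubles the value */
    d->pending_pkt = 0;
    return 0;
}

static int toy_send_packet(ToyDecoder *d, int pkt)
{
    if (d->pending_pkt)
        return -EAGAIN;         /* the caller has to receive frames first */
    d->pkt_value = pkt;
    d->pending_pkt = 1;
    /* like avcodec_send_packet: decode eagerly if the frame slot is free */
    if (!d->buffered_frame && toy_decode(d, &d->frame_value) == 0)
        d->buffered_frame = 1;
    return 0;
}

static int toy_receive_frame(ToyDecoder *d, int *frame)
{
    if (d->buffered_frame) {    /* usual path: hand over the cached frame */
        *frame = d->frame_value;
        d->buffered_frame = 0;
        return 0;
    }
    return toy_decode(d, frame); /* otherwise decode on demand */
}
```

Sending 1 and 2 succeeds, a third send reports `-EAGAIN` because both slots are occupied, and two receives then return 2 and 4 before the third reports `-EAGAIN`. This is why the caller in `decode` keeps calling the receive function until it sees `EAGAIN`.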

Both functions call decode_receive_frame_internal, so let's look at its implementation.

```c
static int decode_receive_frame_internal(AVCodecContext *avctx, AVFrame *frame)
{
    AVCodecInternal *avci = avctx->internal;
    int ret;

    av_assert0(!frame->buf[0]);

    if (avctx->codec->receive_frame)
        ret = avctx->codec->receive_frame(avctx, frame);
    else
        ret = decode_simple_receive_frame(avctx, frame);

    if (ret == AVERROR_EOF)
        avci->draining_done = 1;

    if (!ret) {
        /* the only case where decode data is not set should be decoders
         * that do not call ff_get_buffer() */
        av_assert0((frame->private_ref && frame->private_ref->size == sizeof(FrameDecodeData)) ||
                   !(avctx->codec->capabilities & AV_CODEC_CAP_DR1));

        if (frame->private_ref) {
            FrameDecodeData *fdd = (FrameDecodeData*)frame->private_ref->data;

            if (fdd->post_process) {
                ret = fdd->post_process(avctx, frame);
                if (ret < 0) {
                    av_frame_unref(frame);
                    return ret;
                }
            }
        }
    }

    /* free the per-frame decode data */
    av_buffer_unref(&frame->private_ref);

    return ret;
}
```

If the decoder implements receive_frame, that callback is called; otherwise decode_simple_receive_frame is used. The AAC decoder takes the decode_simple_receive_frame path.

```c
static int decode_simple_receive_frame(AVCodecContext *avctx, AVFrame *frame)
{
    int ret;

    while (!frame->buf[0]) {
        ret = decode_simple_internal(avctx, frame);
        if (ret < 0)
            return ret;
    }

    return 0;
}
```

```c
/*
 * The core of the receive_frame_wrapper for the decoders implementing
 * the simple API. Certain decoders might consume partial packets without
 * returning any output, so this function needs to be called in a loop until it
 * returns EAGAIN.
 **/
static int decode_simple_internal(AVCodecContext *avctx, AVFrame *frame)
{
    AVCodecInternal   *avci = avctx->internal;
    DecodeSimpleContext *ds = &avci->ds;
    AVPacket           *pkt = ds->in_pkt;
    // copy to ensure we do not change pkt
    int got_frame, actual_got_frame;
    int ret;

    // fetch the source data into pkt
    if (!pkt->data && !avci->draining) {
        av_packet_unref(pkt);
        ret = ff_decode_get_packet(avctx, pkt);
        if (ret < 0 && ret != AVERROR_EOF)
            return ret;
    }

    // Some codecs (at least wma lossless) will crash when feeding drain packets
    // after EOF was signaled.
    if (avci->draining_done)
        return AVERROR_EOF;

    if (!pkt->data &&
        !(avctx->codec->capabilities & AV_CODEC_CAP_DELAY ||
          avctx->active_thread_type & FF_THREAD_FRAME))
        return AVERROR_EOF;

    got_frame = 0;

    // call the decoder's decode callback
    if (HAVE_THREADS && avctx->active_thread_type & FF_THREAD_FRAME) {
        ret = ff_thread_decode_frame(avctx, frame, &got_frame, pkt);
    } else {
        ret = avctx->codec->decode(avctx, frame, &got_frame, pkt);

        if (!(avctx->codec->caps_internal & FF_CODEC_CAP_SETS_PKT_DTS))
            frame->pkt_dts = pkt->dts;
        if (avctx->codec->type == AVMEDIA_TYPE_VIDEO) {
            if(!avctx->has_b_frames)
                frame->pkt_pos = pkt->pos;
            //FIXME these should be under if(!avctx->has_b_frames)
            /* get_buffer is supposed to set frame parameters */
            if (!(avctx->codec->capabilities & AV_CODEC_CAP_DR1)) {
                if (!frame->sample_aspect_ratio.num)
                    frame->sample_aspect_ratio = avctx->sample_aspect_ratio;
                if (!frame->width)
                    frame->width = avctx->width;
                if (!frame->height)
                    frame->height = avctx->height;
                if (frame->format == AV_PIX_FMT_NONE)
                    frame->format = avctx->pix_fmt;
            }
        }
    }
    emms_c();
    actual_got_frame = got_frame;

    if (avctx->codec->type == AVMEDIA_TYPE_VIDEO) {
        if (frame->flags & AV_FRAME_FLAG_DISCARD)
            got_frame = 0;
        if (got_frame)
            frame->best_effort_timestamp = guess_correct_pts(avctx,
                                                             frame->pts,
                                                             frame->pkt_dts);
    } else if (avctx->codec->type == AVMEDIA_TYPE_AUDIO) {
        uint8_t *side;
        int side_size;
        uint32_t discard_padding = 0;
        uint8_t skip_reason = 0;
        uint8_t discard_reason = 0;

        if (ret >= 0 && got_frame) {
            frame->best_effort_timestamp = guess_correct_pts(avctx,
                                                             frame->pts,
                                                             frame->pkt_dts);
            if (frame->format == AV_SAMPLE_FMT_NONE)
                frame->format = avctx->sample_fmt;
            if (!frame->channel_layout)
                frame->channel_layout = avctx->channel_layout;
            if (!frame->channels)
                frame->channels = avctx->channels;
            if (!frame->sample_rate)
                frame->sample_rate = avctx->sample_rate;
        }

        side= av_packet_get_side_data(avci->last_pkt_props, AV_PKT_DATA_SKIP_SAMPLES, &side_size);
        if(side && side_size>=10) {
            avctx->internal->skip_samples = AV_RL32(side) * avctx->internal->skip_samples_multiplier;
            discard_padding = AV_RL32(side + 4);
            av_log(avctx, AV_LOG_DEBUG, "skip %d / discard %d samples due to side data\n",
                   avctx->internal->skip_samples, (int)discard_padding);
            skip_reason = AV_RL8(side + 8);
            discard_reason = AV_RL8(side + 9);
        }

        if ((frame->flags & AV_FRAME_FLAG_DISCARD) && got_frame &&
            !(avctx->flags2 & AV_CODEC_FLAG2_SKIP_MANUAL)) {
            avctx->internal->skip_samples = FFMAX(0, avctx->internal->skip_samples - frame->nb_samples);
            got_frame = 0;
        }

        if (avctx->internal->skip_samples > 0 && got_frame &&
            !(avctx->flags2 & AV_CODEC_FLAG2_SKIP_MANUAL)) {
            if(frame->nb_samples <= avctx->internal->skip_samples){
                got_frame = 0;
                avctx->internal->skip_samples -= frame->nb_samples;
                av_log(avctx, AV_LOG_DEBUG, "skip whole frame, skip left: %d\n",
                       avctx->internal->skip_samples);
            } else {
                av_samples_copy(frame->extended_data, frame->extended_data, 0, avctx->internal->skip_samples,
                                frame->nb_samples - avctx->internal->skip_samples, avctx->channels, frame->format);
                if(avctx->pkt_timebase.num && avctx->sample_rate) {
                    int64_t diff_ts = av_rescale_q(avctx->internal->skip_samples,
                                                   (AVRational){1, avctx->sample_rate},
                                                   avctx->pkt_timebase);
                    if(frame->pts!=AV_NOPTS_VALUE)
                        frame->pts += diff_ts;
#if FF_API_PKT_PTS
FF_DISABLE_DEPRECATION_WARNINGS
                    if(frame->pkt_pts!=AV_NOPTS_VALUE)
                        frame->pkt_pts += diff_ts;
FF_ENABLE_DEPRECATION_WARNINGS
#endif
                    if(frame->pkt_dts!=AV_NOPTS_VALUE)
                        frame->pkt_dts += diff_ts;
                    if (frame->pkt_duration >= diff_ts)
                        frame->pkt_duration -= diff_ts;
                } else {
                    av_log(avctx, AV_LOG_WARNING, "Could not update timestamps for skipped samples.\n");
                }
                av_log(avctx, AV_LOG_DEBUG, "skip %d/%d samples\n",
                       avctx->internal->skip_samples, frame->nb_samples);
                frame->nb_samples -= avctx->internal->skip_samples;
                avctx->internal->skip_samples = 0;
            }
        }

        if (discard_padding > 0 && discard_padding <= frame->nb_samples && got_frame &&
            !(avctx->flags2 & AV_CODEC_FLAG2_SKIP_MANUAL)) {
            if (discard_padding == frame->nb_samples) {
                got_frame = 0;
            } else {
                if(avctx->pkt_timebase.num && avctx->sample_rate) {
                    int64_t diff_ts = av_rescale_q(frame->nb_samples - discard_padding,
                                                   (AVRational){1, avctx->sample_rate},
                                                   avctx->pkt_timebase);
                    frame->pkt_duration = diff_ts;
                } else {
                    av_log(avctx, AV_LOG_WARNING, "Could not update timestamps for discarded samples.\n");
                }
                av_log(avctx, AV_LOG_DEBUG, "discard %d/%d samples\n",
                       (int)discard_padding, frame->nb_samples);
                frame->nb_samples -= discard_padding;
            }
        }

        if ((avctx->flags2 & AV_CODEC_FLAG2_SKIP_MANUAL) && got_frame) {
            AVFrameSideData *fside = av_frame_new_side_data(frame, AV_FRAME_DATA_SKIP_SAMPLES, 10);
            if (fside) {
                AV_WL32(fside->data, avctx->internal->skip_samples);
                AV_WL32(fside->data + 4, discard_padding);
                AV_WL8(fside->data + 8, skip_reason);
                AV_WL8(fside->data + 9, discard_reason);
                avctx->internal->skip_samples = 0;
            }
        }
    }

    if (avctx->codec->type == AVMEDIA_TYPE_AUDIO &&
        !avci->showed_multi_packet_warning &&
        ret >= 0 && ret != pkt->size && !(avctx->codec->capabilities & AV_CODEC_CAP_SUBFRAMES)) {
        av_log(avctx, AV_LOG_WARNING, "Multiple frames in a packet.\n");
        avci->showed_multi_packet_warning = 1;
    }

    if (!got_frame)
        av_frame_unref(frame);

    if (ret >= 0 && avctx->codec->type == AVMEDIA_TYPE_VIDEO && !(avctx->flags & AV_CODEC_FLAG_TRUNCATED))
        ret = pkt->size;

#if FF_API_AVCTX_TIMEBASE
    if (avctx->framerate.num > 0 && avctx->framerate.den > 0)
        avctx->time_base = av_inv_q(av_mul_q(avctx->framerate, (AVRational){avctx->ticks_per_frame, 1}));
#endif

    /* do not stop draining when actual_got_frame != 0 or ret < 0 */
    /* got_frame == 0 but actual_got_frame != 0 when frame is discarded */
    if (avctx->internal->draining && !actual_got_frame) {
        if (ret < 0) {
            /* prevent infinite loop if a decoder wrongly always return error on draining */
            /* reasonable nb_errors_max = maximum b frames + thread count */
            int nb_errors_max = 20 + (HAVE_THREADS && avctx->active_thread_type & FF_THREAD_FRAME ?
                                avctx->thread_count : 1);

            if (avci->nb_draining_errors++ >= nb_errors_max) {
                av_log(avctx, AV_LOG_ERROR, "Too many errors when draining, this is a bug. "
                       "Stop draining and force EOF.\n");
                avci->draining_done = 1;
                ret = AVERROR_BUG;
            }
        } else {
            avci->draining_done = 1;
        }
    }

    avci->compat_decode_consumed += ret;

    if (ret >= pkt->size || ret < 0) {
        av_packet_unref(pkt);
    } else {
        int consumed = ret;

        pkt->data += consumed;
        pkt->size -= consumed;
        avci->last_pkt_props->size -= consumed; // See extract_packet_props() comment.
        pkt->pts   = AV_NOPTS_VALUE;
        pkt->dts   = AV_NOPTS_VALUE;
        avci->last_pkt_props->pts = AV_NOPTS_VALUE;
        avci->last_pkt_props->dts = AV_NOPTS_VALUE;
    }

    if (got_frame)
        av_assert0(frame->buf[0]);

    return ret < 0 ? ret : 0;
}
```

At this point we can see that the call finally lands in the AAC decoder's aac_decode_frame, reached through avctx->codec->decode.
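The loop in decode_simple_receive_frame together with the packet bookkeeping at the end of decode_simple_internal can be condensed into a toy sketch. Everything here is invented for illustration (`ToyPacket`, the `toy_*` functions, and a fake codec that consumes one byte per call and only emits a frame for non-zero bytes); `-EAGAIN` again stands in for `AVERROR(EAGAIN)`.

```c
#include <errno.h>
#include <stddef.h>
#include <stdint.h>

/* Toy packet: a byte range that shrinks as the codec consumes it. */
typedef struct ToyPacket {
    const uint8_t *data;
    int size;
} ToyPacket;

/* Hypothetical codec callback: consumes one byte per call and produces
 * a frame only for non-zero bytes. Returns the number of bytes consumed. */
static int toy_codec_decode(const ToyPacket *pkt, int *frame, int *got_frame)
{
    *got_frame = pkt->data[0] != 0;
    if (*got_frame)
        *frame = pkt->data[0];
    return 1;
}

/* Mirrors the tail of decode_simple_internal: advance or release the packet. */
static int toy_simple_internal(ToyPacket *pkt, int *frame, int *got_frame)
{
    int ret;

    if (!pkt->data)
        return -EAGAIN;        /* no input left: the caller must send more */

    ret = toy_codec_decode(pkt, frame, got_frame);
    if (ret >= pkt->size || ret < 0) {
        pkt->data = NULL;      /* fully consumed (or failed): drop the packet */
        pkt->size = 0;
    } else {
        pkt->data += ret;      /* partial: keep the remainder for the next call */
        pkt->size -= ret;
    }
    return ret < 0 ? ret : 0;
}

/* Mirrors decode_simple_receive_frame: loop until a frame appears. */
static int toy_simple_receive_frame(ToyPacket *pkt, int *frame)
{
    int got_frame = 0;

    while (!got_frame) {
        int ret = toy_simple_internal(pkt, frame, &got_frame);
        if (ret < 0)
            return ret;
    }
    return 0;
}
```

For a packet holding the bytes `0 0 7 9`, the first receive call silently consumes the two zero bytes and returns frame 7, the second returns frame 9 and releases the packet, and the third reports `-EAGAIN`.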